Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Logging a Stack Trace event with Nifi

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Logging a Stack Trace event with Nifi

- Labels:

-

Apache NiFi

Created on 07-10-2017 03:42 PM - edited 08-18-2019 02:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, we are attempting to log a stack trace event using Nifi of the following type:

Note that continues until it hits a line such as "... 23 more"

We currently have a setup where we read each log file, line by line and if we find a timestamp, we count that line as an event. We regex to extract the timestamp. You can see where this would cause an issue as it creates a new line after "unwinding now" and then that next line and the subsequent lines after do not have timestamps. Currently we are just filtering the non-timestamp lines into a folder but we'd like to be able to put them with the other non-timestamp lines. Is there a way in Nifi to say "Take everything between two timestamps as an event despite if it has newlines in it" but still use the SplitText processor to manage the grouping of the lines (or an alternative?) Has anyone else had to deal with Stack Trace events not processing correctly?

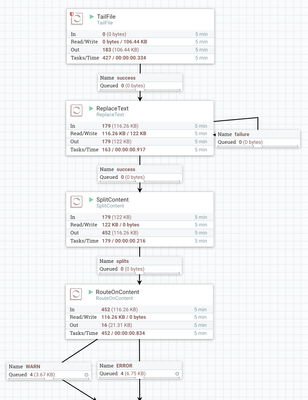

Below is a screenshot of the relevant portion of the flow:

Created on 07-11-2017 02:31 PM - edited 08-18-2019 02:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

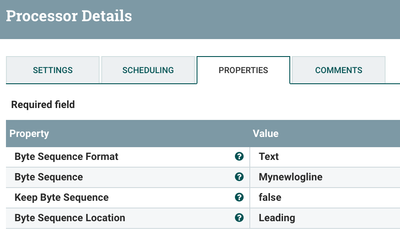

Another option (not as nice as the GrokReader) is to use SplitContent instead of SplitText processor.

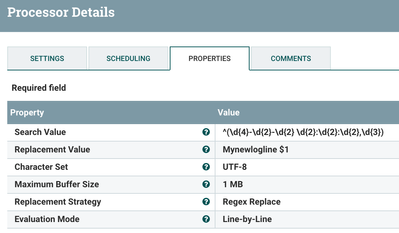

So here I use the ReplaceText processor to date string format every log line starts with and prepend to that a unique string that i can use later to split the content.

I then use the SplitText processor to split based on that unique string. This means that any stack trace that follows a log line will be captured with the preceding log entry.

After that you can do what you want with the resulting splits. I chose to filter out the splits for ERROR or WARN log lines and auto-terminate everything else.

Here is an example output of one of my log lines with a stack trace:

2017-07-11 10:21:38,087 ERROR [Timer-Driven Process Thread-2] o.a.n.p.attributes.UpdateAttribute java.lang.StringIndexOutOfBoundsException: String index out of range: 40 at java.lang.String.substring(String.java:1963) ~[na:1.8.0_77] at org.apache.nifi.attribute.expression.language.evaluation.functions.SubstringEvaluator.evaluate(SubstringEvaluator.java:55) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.Query.evaluate(Query.java:570) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.Query.evaluateExpression(Query.java:388) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.StandardPreparedQuery.evaluateExpressions(StandardPreparedQuery.java:48) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.StandardPropertyValue.evaluateAttributeExpressions(StandardPropertyValue.java:152) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.StandardPropertyValue.evaluateAttributeExpressions(StandardPropertyValue.java:133) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.processors.attributes.UpdateAttribute.executeActions(UpdateAttribute.java:496) ~[nifi-update-attribute-processor-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.processors.attributes.UpdateAttribute.onTrigger(UpdateAttribute.java:377) ~[nifi-update-attribute-processor-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1099) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_77] at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_77] at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_77] at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_77] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_77] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_77] at java.lang.Thread.run(Thread.java:745) [na:1.8.0_77]

Thanks,

Matt

Created 07-11-2017 05:29 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @Eric Lloyd

It seems you're using NiFi 1.3.0, if so, GrokReader might be helpful to extract whole stacktrace. Actually GrokReader doc has an example which reads java stacktrace, please check.

Created 07-11-2017 02:39 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you this looks very promising. I am testing it out now.

Created 07-11-2017 02:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am hesitant about this because ExtractGrok its options are to either put the log file portions into attributes or to rewrite it to the log file as JSON, neither of which I want to do. I just wanted to split based on the timestamp rather than on line.

Created 07-11-2017 03:18 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Koji is suggesting the use of GrokReader in a record-aware processor (such as QueryRecord or PartitionRecord), rather than the ExtractGrok processor. With a GrokReader, you can do your split using SQL (with QueryRecord), perhaps something like:

SELECT * FROM FLOWFILE WHERE tstamp < ${now():toNumber():minus(1000)}and

SELECT * FROM FLOWFILE WHERE tstamp >= ${now():toNumber():minus(1000)}to route the lines whether the timestamp (in a "tstamp" field) was before a second ago. Alternatively you can use PartitionRecord to group records into individual flow files, with each flow file containing the records that have the same values for the specified fields.

Created on 07-11-2017 02:31 PM - edited 08-18-2019 02:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Another option (not as nice as the GrokReader) is to use SplitContent instead of SplitText processor.

So here I use the ReplaceText processor to date string format every log line starts with and prepend to that a unique string that i can use later to split the content.

I then use the SplitText processor to split based on that unique string. This means that any stack trace that follows a log line will be captured with the preceding log entry.

After that you can do what you want with the resulting splits. I chose to filter out the splits for ERROR or WARN log lines and auto-terminate everything else.

Here is an example output of one of my log lines with a stack trace:

2017-07-11 10:21:38,087 ERROR [Timer-Driven Process Thread-2] o.a.n.p.attributes.UpdateAttribute java.lang.StringIndexOutOfBoundsException: String index out of range: 40 at java.lang.String.substring(String.java:1963) ~[na:1.8.0_77] at org.apache.nifi.attribute.expression.language.evaluation.functions.SubstringEvaluator.evaluate(SubstringEvaluator.java:55) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.Query.evaluate(Query.java:570) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.Query.evaluateExpression(Query.java:388) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.StandardPreparedQuery.evaluateExpressions(StandardPreparedQuery.java:48) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.StandardPropertyValue.evaluateAttributeExpressions(StandardPropertyValue.java:152) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.attribute.expression.language.StandardPropertyValue.evaluateAttributeExpressions(StandardPropertyValue.java:133) ~[nifi-expression-language-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.processors.attributes.UpdateAttribute.executeActions(UpdateAttribute.java:496) ~[nifi-update-attribute-processor-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.processors.attributes.UpdateAttribute.onTrigger(UpdateAttribute.java:377) ~[nifi-update-attribute-processor-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1099) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.1.0.2.1.4.0-5.jar:1.1.0.2.1.4.0-5] at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_77] at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_77] at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_77] at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_77] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_77] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_77] at java.lang.Thread.run(Thread.java:745) [na:1.8.0_77]

Thanks,

Matt

Created 07-11-2017 02:49 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Interesting. This might be what we need because GrokReader is putting everything into attributes which isn't what we are looking for.

Created 07-11-2017 03:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hey Matt

Your solution works very well. Its not as pretty as GrokReader true, but it is just Splitting the content rather than putting it into attributes or switching the format of the log file to JSON. I have one unusual result. It is putting a newline in between each event. I don't think this is going to be a big issue for us but is there a reason you can think of that it would do that? Is it doing that on yours?

Created on 07-11-2017 03:17 PM - edited 08-18-2019 02:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

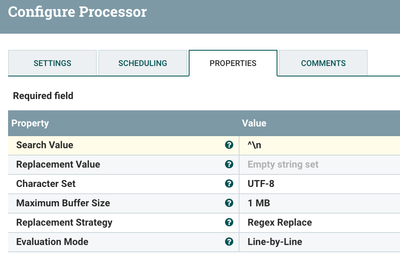

Must be a by-product of the splitContent operation. It is reading the last line return before it sees the next bytes sequence. If the blank line becomes an issue, you can remove blank lines using a ReplaceText processor also.

This will replace any line that starts with a line return with nothing.

Thanks,

Matt

Created 07-11-2017 03:28 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks thats a solution if the newlines become a problem. You know what else worked for me? I want to put this out there Matt and see if you see any errors in this logic.

Instead of the ReplaceText and all that, I simple added a SplitContent and since I know each event starts with the year, I did a Split Text on:

"(newline)

20"

I realize this solution wouldn't work forever but it would work for the remainder of the century I think. I guess an issue might arise if somehow, in someway, a log produces a newline and then a 20... I am just concerned ReplaceText might be an extrenous time-consuming add-on