Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Merge multiple directories into one table in H...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Merge multiple directories into one table in Hive

- Labels:

-

Apache Hadoop

-

Apache Hive

Created 07-01-2016 03:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi experts,

I've multiple files distributed by different directories (according to date) into my HDFS. All this files follows the same schema and only the first column (which represents the date) allows us to differentiate each text file. I wanna to merge all this directories into one table in Hive. I don't know which column I can put as partitioned column. My text files have this schema: - Date - ID - Investment - Country - City The column date of each file column is what allows us to differentiate each file. Each directory aggregate multiple files from one date and have the day as name. Which is the PARTITIONED column in this case?

Created 07-01-2016 06:56 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

From what I understand is that you have set of files inside a hdfs directory which is split by dates, would the directory structure look like this:

/user/test/data/2016-03-12/<FILES>

/user/test/data/2016-03-13/<FILES>

/user/test/data/2016-03-14/<FILES>

Like these, if yes then you will not be able to create a partition based table, what you can do is to create a regular table pointing the location to /user/test/data and set the below properties, this will look at all the files inside the subdirectory and get the result set.

set hive.input.dir.recursive=true;

set hive.mapred.supports.subdirectories=true;

set hive.supports.subdirectories=true;

set mapreduce.input.fileinputformat.input.dir.recursive=true;

Created 07-01-2016 03:08 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It is difficult to say what should be your PARTITION which this information. Best way to get to finding partition is from future query patterns. If you know there will be a where clause in most of the queries and the value is not high cardinality, then that could be your partition. If you think your queries mostly hit a date range, you could partition by date.

Created 07-01-2016 03:13 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think my column partition is the date. But I need to include this information in my table to. However When I create the external table with Date column I can't use it in partition clause...

Created 07-01-2016 03:29 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Why do you need to include date information as a column? If you are creating a merge using Pig (or hive query), you can move the date field that is a column into a partition.

Created 07-01-2016 03:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

But If I want one table that aggregate all the files, I think I will need the date as column to query the table. Right?

Created 07-01-2016 03:47 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you want to use the files as is, then yes. But do you have the file already split by dates? In that case, you will need to have the date column as both a column and a partition (with different names). But you may be better off reorganizing these files into ORC for better lookup speeds. If you want to do that you will create a second table as ORC and can do an insert overwrite.

Created 07-01-2016 04:26 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I already split the files using Pig. What I want is join all this direcotires into one table in Hve for data analysis.

Created 07-01-2016 06:56 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

From what I understand is that you have set of files inside a hdfs directory which is split by dates, would the directory structure look like this:

/user/test/data/2016-03-12/<FILES>

/user/test/data/2016-03-13/<FILES>

/user/test/data/2016-03-14/<FILES>

Like these, if yes then you will not be able to create a partition based table, what you can do is to create a regular table pointing the location to /user/test/data and set the below properties, this will look at all the files inside the subdirectory and get the result set.

set hive.input.dir.recursive=true;

set hive.mapred.supports.subdirectories=true;

set hive.supports.subdirectories=true;

set mapreduce.input.fileinputformat.input.dir.recursive=true;

Created on 07-05-2016 05:32 PM - edited 08-19-2019 04:37 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Johnny Fuger. When you have a set of files in an existing directory structure, and you are not able to move the files around, there is a way to create a Hive table that is partitioned. You can manually define the partitions (explicitly). It is important to note that you are controlling each partition.

You create the table, then add each partition manually via an ALTER TABLE command. Here is an example where there are 3 days worth of files in three different directories: directory #1 has 1 file (10 records total), the second directory has 2 files(20 records total), and the 3rd has 3 files(30 records total):

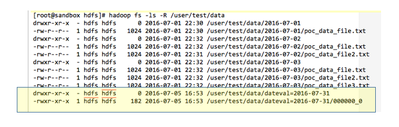

hadoop fs -mkdir -p /user/test/data/2016-07-01 hadoop fs -mkdir -p /user/test/data/2016-07-02 hadoop fs -mkdir -p /user/test/data/2016-07-03 hadoop fs -put /tmp/poc_data_file.txt /user/test/data/2016-07-01 hadoop fs -put /tmp/poc_data_file.txt /user/test/data/2016-07-02/poc_data_file2.txt hadoop fs -put /tmp/poc_data_file.txt /user/test/data/2016-07-02 hadoop fs -put /tmp/poc_data_file.txt /user/test/data/2016-07-03 hadoop fs -put /tmp/poc_data_file.txt /user/test/data/2016-07-03/poc_data_file2.txt hadoop fs -put /tmp/poc_data_file.txt /user/test/data/2016-07-03/poc_data_file3.txt [root@sandbox hdfs]# hadoop fs -ls -R /user/test/data drwxr-xr-x - hdfs hdfs 0 2016-07-01 22:30 /user/test/data/2016-07-01 -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:30 /user/test/data/2016-07-01/poc_data_file.txt drwxr-xr-x - hdfs hdfs 0 2016-07-01 22:32 /user/test/data/2016-07-02 -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-02/poc_data_file.txt -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:31 /user/test/data/2016-07-02/poc_data_file2.txt drwxr-xr-x - hdfs hdfs 0 2016-07-01 22:32 /user/test/data/2016-07-03 -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-03/poc_data_file.txt -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-03/poc_data_file2.txt -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-03/poc_data_file3.txt

Now create an external table with a partition clause. Note the rowcount is zero initially since we have not defined any partitions yet.

create external table file_data_partitioned (id int, textval string, amount double) partitioned by (dateval string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' LOCATION '/user/test/data'; select count(*) from file_data_partitioned; hive> select count(*) from file_data_partitioned; OK 0

Now manually define the 3 partitions on the data using ALTER TABLE commands. You need to specify the correct location for each partition. These partitions could be anywhere in HDFS.

----------------------------------------------- -- Add partitions manually ----------------------------------------------- alter table file_data_partitioned add partition (dateval = '2016-07-01') location '/user/test/data/2016-07-01'; alter table file_data_partitioned add partition (dateval = '2016-07-02') location '/user/test/data/2016-07-02'; alter table file_data_partitioned add partition (dateval = '2016-07-03') location '/user/test/data/2016-07-03'; --------------------------------------- -- Run statistics --------------------------------------- analyze table file_data_partitioned compute statistics ;

Now we can see & query the data in each partition.

hive> select dateval, count(*)

> from file_data_partitioned

> group by dateval;

OK

2016-07-01 10

2016-07-02 20

2016-07-03 30Important note though - if you choose this method of manual partitioning, you should always do it the same way each time you add data to the table. Otherwise you will get different directory structures in HDFS for the same table - data will be spread out among the cluster, which can get messy. Here's an example of this when you do an INSERT INTO command to create data for Partition 2017-07-31:

insert into file_data_partitioned partition (dateval = '2016-07-31') select id, textval, amount from file_data_partitioned where dateval = '2016-07-01'; [root@sandbox hdfs]# hadoop fs -ls -R /user/test/data drwxr-xr-x - hdfs hdfs 0 2016-07-01 22:30 /user/test/data/2016-07-01 -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:30 /user/test/data/2016-07-01/poc_data_file.txt drwxr-xr-x - hdfs hdfs 0 2016-07-01 22:32 /user/test/data/2016-07-02 -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-02/poc_data_file.txt -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:31 /user/test/data/2016-07-02/poc_data_file2.txt drwxr-xr-x - hdfs hdfs 0 2016-07-01 22:32 /user/test/data/2016-07-03 -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-03/poc_data_file.txt -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-03/poc_data_file2.txt -rw-r--r-- 1 hdfs hdfs 1024 2016-07-01 22:32 /user/test/data/2016-07-03/poc_data_file3.txt drwxr-xr-x - hdfs hdfs 0 2016-07-05 16:53 /user/test/data/dateval=2016-07-31 -rwxr-xr-x 1 hdfs hdfs 182 2016-07-05 16:53 /user/test/data/dateval=2016-07-31/000000_0

Note the new directory created for 2016-07-31 and see that it has a different structure - the default structure that Hive uses when Hive controls partitioning ( ... /dateval=2016-07-31/ ...)

I hope this helps.