Support Questions

MergeContent inconsistent in aggregating output from SplitJSON

Labels: Apache NiFi

Created on 01-10-2017 12:59 PM - edited 08-19-2019 03:22 AM

I am currently using SplitJSON to split incoming JSON into multiple records based on the JSON path `$.*`.

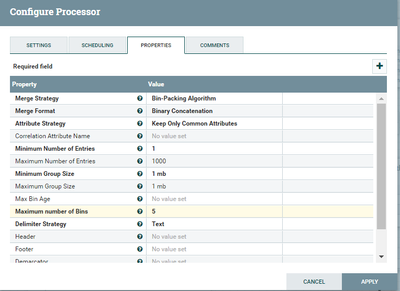

Further, I was able to aggregate all the splits into one single file with MergeContent. However, when I try to aggregate the split files into multiple chunks of 1 MB each, it does not work as expected. I have followed some other articles on the same use case, but most of them seem to merge based on record count ('Minimum Number of Entries') rather than file size. Below is the MergeContent processor and its properties. Any quick help or insight is highly appreciated. Thank you.

Created 01-10-2017 01:31 PM

The behavior you should be seeing here is that the MergeContent processor takes the first incoming FlowFile it sees and adds it to bin 1. It then keeps trying to add FlowFiles to bin 1 until either 1000 FlowFiles have been added or the bin has reached the 1 MB minimum size.

Now let's say bin 1 has grown to 1000 KB (just shy of 1 MB) and the next FlowFile would push that bin over the max group size of 1 MB. That FlowFile is not allowed into bin 1 and instead becomes the first file in bin 2. Bin 1 now hangs around, because its 1 MB minimum has not been met and neither the max entries nor the max group size has been reached. So it is possible to fill all 5 of your bins without meeting your very tightly configured thresholds.

So what happens when the next FlowFile will not fit in any of the 5 existing bins? The MergeContent processor merges the oldest bin to free room for a new bin. That is why I assume you are seeing few or no files that are exactly 1 MB.

Thanks,

Matt
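To make the binning behavior described above concrete, here is a minimal Python sketch. It is a toy model of this explanation, not NiFi's actual MergeContent code: sizes are whole KB, 1 MB is taken as 1024 KB, and `simulate` is a hypothetical helper. As an assumption, a FlowFile goes into the first open bin where it fits under the max; if none fits and all bins are in use, the oldest bin is merged regardless of its size.

```python
def simulate(sizes, min_kb, max_kb, max_bins, max_entries=1000):
    """Toy model of the binning described above (not NiFi's code).

    Returns (merged bin totals in emit order, totals of bins still open).
    """
    bins = []    # each bin is a list of FlowFile sizes in KB, oldest bin first
    merged = []  # totals of bins that have been merged
    for size in sizes:
        # Find the first open bin that can take this FlowFile without exceeding the max.
        idx = next((i for i, b in enumerate(bins)
                    if sum(b) + size <= max_kb and len(b) < max_entries), None)
        if idx is None:
            if len(bins) == max_bins:
                # No free bin slot: merge the oldest bin whatever its size.
                merged.append(sum(bins.pop(0)))
            bins.append([])  # the FlowFile starts a new bin
            idx = len(bins) - 1
        bins[idx].append(size)
        # Merge the bin once it meets the min size or reaches max entries.
        if sum(bins[idx]) >= min_kb or len(bins[idx]) >= max_entries:
            merged.append(sum(bins.pop(idx)))
    return merged, [sum(b) for b in bins]


# Tight thresholds from this thread: min = max = 1 MB (1024 KB), 5 bins.
print(simulate([200] * 26, 1024, 1024, max_bins=5))
# ([1000], [1000, 1000, 1000, 1000, 200])
print(simulate([256] * 4, 1024, 1024, max_bins=5))
# ([1024], [])
```

With min and max both at 1024 KB and five bins, twenty-six 200 KB FlowFiles yield one forced merge at 1000 KB and no output of exactly 1 MB; four 256 KB FlowFiles, which sum to exactly 1024 KB, are the rare case that does.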

Created 01-10-2017 01:48 PM

Thank you @Matt, that helps me understand it better. However, I am not seeing any files of exactly 1 MB; they are either smaller or larger. Also, 'Max No. of Entries' defaults to 1000, and I can't leave it blank, otherwise the processor issues a warning that it can't be blank because it expects a number.

So what I am asking is whether this is even possible, or should I increase the 'Min Group Size' or decrease the 'Max No. of Bins' to 1? Please advise. Thank you.

Created 01-10-2017 02:07 PM

In the cases where you are seeing merged FlowFiles larger than 1 MB, I suspect each merge is a single FlowFile that was already larger than the 1 MB max. When a FlowFile arrives that exceeds the configured max, it is passed unchanged to both the original and merged relationships.

Decreasing the number of bins only impacts heap usage; it does not change this behavior.
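The pass-through case can be sketched the same way. This hypothetical `split_oversized` helper (sizes in KB, an assumption for illustration) only separates FlowFiles that exceed the configured max, which per the answer above skip merging and go out unchanged, from those that are eligible for binning.

```python
def split_oversized(sizes, max_kb):
    """Separate FlowFiles larger than max_kb (which, per the answer above, skip
    merging and are passed on unchanged) from those eligible for binning."""
    passthrough, to_bin = [], []
    for size in sizes:
        if size > max_kb:
            passthrough.append(size)  # emitted as-is, never combined with others
        else:
            to_bin.append(size)
    return passthrough, to_bin


print(split_oversized([15, 1500, 20, 1100], 1024))
# ([1500, 1100], [15, 20])
```

In this model every output larger than the max is a single untouched FlowFile, which matches the explanation for the oversized merged files seen in this thread.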

Created 01-10-2017 02:22 PM

@Avish Saha Unless you know that your incoming FlowFiles' content can be combined to exactly 1 MB without going over by even a byte, there is little chance you will see files of exactly 1 MB. The MergeContent processor will not truncate the content of a FlowFile to produce a 1 MB output FlowFile. The more common approach is to set an acceptable merged size range, for example min 800 KB to max 1 MB. FlowFiles larger than 1 MB will still pass through unchanged.
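A quick way to see why an exact 1 MB output is unlikely: a bin can only grow by whole FlowFiles, so its total is always a sum of FlowFile sizes. The sketch below, using hypothetical sizes in KB and a hypothetical `fill_one_bin` helper, fills one bin in arrival order until the next FlowFile would exceed the max, then checks the result against an 800 KB to 1 MB window.

```python
def fill_one_bin(sizes, max_kb):
    """Add FlowFiles in arrival order until the next one would exceed max_kb.

    Content is never truncated, so the total is always a sum of whole sizes.
    """
    total = 0
    for size in sizes:
        if total + size > max_kb:
            break  # per the explanation above, this FlowFile would start another bin
        total += size
    return total


sizes = [120, 90, 150] * 5  # hypothetical FlowFile sizes in KB
total = fill_one_bin(sizes, max_kb=1024)
print(total)                 # 930
print(total == 1024)         # False
print(800 <= total <= 1024)  # True: inside an 800 KB to 1 MB window
```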

Created 01-10-2017 02:42 PM

Great, thanks @Matt for the range suggestion. In my case, SplitJSON splits the incoming JSON into single records of approximately 15 KB each. So if I want the file size to fall between, say, 1 MB (1000 KB) and 1 MiB (1024 KiB), I can set the Min and Max Group Size accordingly, and Min No. of Entries to 1. What I am not sure about is what Max No. of Bins should be in this case, or whether it matters.
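For the numbers in this reply, the window can be checked with simple arithmetic, assuming every record is exactly 15 KB (the post says approximately). `counts_in_window` is a hypothetical helper that lists the record counts whose total lands between the min and max.

```python
def counts_in_window(record_kb, min_kb, max_kb):
    """Record counts n with min_kb <= n * record_kb <= max_kb (uniform record size)."""
    lowest = -(-min_kb // record_kb)  # ceiling division without floats
    highest = max_kb // record_kb
    return list(range(lowest, highest + 1))


counts = counts_in_window(15, 1000, 1024)
print(counts)                    # [67, 68]
print([15 * n for n in counts])  # [1005, 1020]
print(1000 * 15)                 # 15000 KB at the default 1000 max entries
```

Only 67 or 68 records (1005 KB or 1020 KB) land in the window, so real records that vary around 15 KB will sometimes miss it. The default of 1000 max entries would allow about 15000 KB, so with this record size the group-size limits, not the entry limit, decide when a bin is merged.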

Created 01-10-2017 06:57 PM

The more bins, the more of your NiFi JVM heap space could be used. Just keep in mind that if all your bins hold, say, 990 KB of data and the next file would push any of them over 1024 KB, the oldest bin will still be merged at only 990 KB to make room for a new bin to hold the file that would not fit in any of the existing bins.

More bins means more opportunities for a FlowFile to find a bin where it fits.
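The effect of the bin count can be illustrated with a toy model (again not NiFi's implementation; sizes in KB, hypothetical `simulate` helper, and the assumption that a FlowFile goes to the first open bin where it fits). With a 1000 to 1024 KB window, two 990 KB FlowFiles followed by two 30 KB FlowFiles force a premature merge when only one bin is available, but not with two bins.

```python
def simulate(sizes, min_kb, max_kb, max_bins, max_entries=1000):
    """Toy binning model (not NiFi's code): returns (merged totals, open totals)."""
    bins = []    # each bin is a list of FlowFile sizes in KB, oldest bin first
    merged = []
    for size in sizes:
        # First open bin that can take this FlowFile without exceeding the max.
        idx = next((i for i, b in enumerate(bins)
                    if sum(b) + size <= max_kb and len(b) < max_entries), None)
        if idx is None:
            if len(bins) == max_bins:
                merged.append(sum(bins.pop(0)))  # forced merge of the oldest bin
            bins.append([])
            idx = len(bins) - 1
        bins[idx].append(size)
        if sum(bins[idx]) >= min_kb or len(bins[idx]) >= max_entries:
            merged.append(sum(bins.pop(idx)))
    return merged, [sum(b) for b in bins]


for n_bins in (1, 2):
    merged, open_bins = simulate([990, 990, 30, 30], 1000, 1024, max_bins=n_bins)
    premature = [t for t in merged if t < 1000]  # merged below the minimum
    print(n_bins, merged, premature)
# 1 [990, 1020] [990]
# 2 [1020, 1020] []
```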

Also keep in mind that, as you have it configured, a bin can hang around for an indefinite amount of time. A bin at 999 KB that never receives another qualifying FlowFile to bring its size to between 1000 KB and 1024 KB will sit forever unless you set the Max Bin Age. This property tells the MergeContent processor to merge a bin, whatever its current state, once it reaches this max age. I recommend always setting this value to the maximum data latency you are willing to accept on this dataflow.

If you found all this information helpful, please accept this answer.

Matt
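The Max Bin Age recommendation above amounts to an age check on every open bin. A minimal sketch, assuming bins are tracked as hypothetical `(created_at_s, total_kb)` tuples and ages are in seconds (`flush_expired` is also a hypothetical name):

```python
def flush_expired(bins, now_s, max_age_s):
    """Merge every bin whose age has reached max_age_s, whatever its size.

    `bins` is a list of (created_at_s, total_kb) tuples.
    Returns (totals merged now, bins still open).
    """
    merged = [total for created, total in bins if now_s - created >= max_age_s]
    still_open = [(created, total) for created, total in bins
                  if now_s - created < max_age_s]
    return merged, still_open


# A 999 KB bin that never reaches the 1000 KB minimum is merged once it is 300 s old.
print(flush_expired([(0, 999), (250, 400)], now_s=300, max_age_s=300))
# ([999], [(250, 400)])
```

The age limit bounds latency at the cost of emitting some undersized files, which is the trade-off the answer describes.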

Created 01-11-2017 05:36 AM

Thank you @Matt. I will try the suggestion and get back to you if I have any more follow-up questions. I appreciate your help.