Support Questions (Cloudera Community)

Multiple NFS Gateways for HDFS

- Labels: Apache Hadoop

Created 02-12-2016 12:10 AM

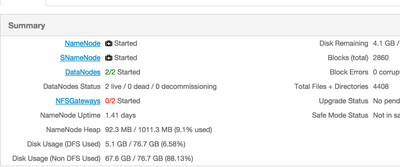

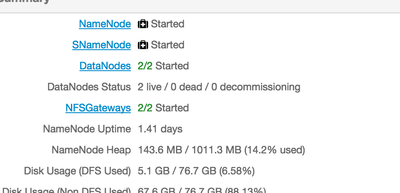

Is it possible to have multiple NFS Gateways on different nodes on a single cluster?

Created on 02-12-2016 01:02 AM - edited 08-19-2019 01:34 AM

Created 02-12-2016 01:08 AM

Source: http://hortonworks.com/blog/simplifying-data-management-nfs-access-to-hdfs/
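Each NFS Gateway is its own pair of daemons (portmap and nfs3) started per host, so running several means starting that pair on each chosen node. A rough sketch, assuming Hadoop 3.x `hdfs --daemon` syntax (the Hadoop 2.x guide uses `hadoop-daemon.sh --script $HADOOP_PREFIX/bin/hdfs start portmap` / `start nfs3` instead), root SSH access to every host, `hdfs` on root's PATH, and `hdfs` as the gateway's proxy user. None of these come from this thread, and on an Ambari-managed cluster you would add the gateway component through Ambari instead.

```shell
#!/usr/bin/env bash
# Sketch: start an independent HDFS NFS Gateway on each host given as argument.
# Assumptions (hypothetical): Hadoop 3.x `hdfs --daemon` syntax, root SSH access,
# `hdfs` on root's PATH, and "hdfs" as the gateway's proxy user.

# Remote runner; set RUN=echo to print the commands instead of running them.
RUN=${RUN:-ssh}

start_gateways() {
  local host
  for host in "$@"; do
    # portmap needs root; the platform's own rpcbind/portmap may have to be
    # stopped first so the ports do not conflict.
    $RUN "root@$host" "hdfs --daemon start portmap"
    # nfs3 (mountd + nfsd) runs as the proxy user rather than root.
    $RUN "root@$host" "sudo -u hdfs hdfs --daemon start nfs3"
  done
}
```

For example, `RUN=echo start_gateways servernfs01 servernfs02 servernfs03` prints the six commands without touching any host.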

Created 05-30-2016 12:46 PM

Hi,

If I understand correctly, we can start multiple NFS Gateway servers on multiple hosts (DataNodes, NameNodes, or HDFS client nodes).

Suppose we have three gateways (servernfs01, servernfs02, servernfs03) and two clients (client01, client02), mounted like this:

- client01: `mount -t nfs servernfs01:/ /test01`
- client02: `mount -t nfs servernfs02:/ /test02`

My question is: how do we avoid a service interruption? What happens if servernfs01 fails?

In that case, how can client01 keep its access to HDFS?
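One client-side approach (not taken from this thread): because each gateway is independent, a client can check its mount and remount against another gateway when the current one stops responding. A minimal sketch, assuming the host names and mount point from the question, root privileges on the client, and that `rpcinfo` and GNU `timeout` are installed. The mount options are the ones the Apache Hadoop NFS Gateway guide recommends; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Client-side failover sketch for an HDFS NFS Gateway mount.
# Assumptions (hypothetical): gateways servernfs01..03, mount point /test01,
# run as root, `rpcinfo` and GNU `timeout` available on the client.

GATEWAYS=(servernfs01 servernfs02 servernfs03)
MOUNT_POINT=/test01
# Options recommended by the Apache Hadoop NFS Gateway user guide.
MOUNT_OPTS=vers=3,proto=tcp,nolock,noacl,sync

# Succeeds if the host answers an NFSv3 NULL call over TCP within 5 seconds.
probe_nfs() {
  timeout 5 rpcinfo -t "$1" nfs 3 >/dev/null 2>&1
}

# Prints the first host that passes the probe; PROBE may name another check.
pick_gateway() {
  local host
  for host in "$@"; do
    if "${PROBE:-probe_nfs}" "$host"; then
      printf '%s\n' "$host"
      return 0
    fi
  done
  return 1
}

# Remounts MOUNT_POINT against a live gateway if the current mount hangs.
failover_mount() {
  # stat on a dead NFS mount can block, so bound it (SIGKILL after 5 more s).
  if timeout -k 5 10 stat -t "$MOUNT_POINT" >/dev/null 2>&1; then
    return 0   # current mount still responds
  fi
  local target
  target=$(pick_gateway "${GATEWAYS[@]}") || {
    echo "no NFS gateway reachable" >&2
    return 1
  }
  # Forced, lazy unmount detaches the stale mount even if its server is gone.
  umount -f -l "$MOUNT_POINT" 2>/dev/null
  mount -t nfs -o "$MOUNT_OPTS" "$target:/" "$MOUNT_POINT"
}
```

It could be sourced from a root cron job and invoked as `failover_mount`. This only shortens the outage. Files already open on the stale mount will still fail, and writes that were in flight on the failed gateway may be lost. Putting a virtual IP or load balancer in front of the gateways is another option.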