MySQL installation fails: resource_management.core.exceptions.ExecutionFailed

- Labels: Apache Ambari, Apache Hive

Created 04-15-2018 09:14 PM

When I add Hive service, MySQL installation fails with the following error:

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/mysql_server.py", line 64, in <module>

MysqlServer().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 375, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/mysql_server.py", line 33, in install

self.install_packages(env)

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 821, in install_packages

retry_count=agent_stack_retry_count)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 166, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 53, in action_install

self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/yumrpm.py", line 264, in install_package

self.checked_call_with_retries(cmd, sudo=True, logoutput=self.get_logoutput())

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 266, in checked_call_with_retries

return self._call_with_retries(cmd, is_checked=True, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 283, in _call_with_retries

code, out = func(cmd, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 72, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 102, in checked_call

tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 150, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 303, in _call

raise ExecutionFailed(err_msg, code, out, err)

resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/bin/yum -d 0 -e 0 -y install mysql-community-release' returned 1. Error: Nothing to do

stdout: /var/lib/ambari-agent/data/output-129.txt

2018-04-15 21:03:50,120 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=None -> 2.6

2018-04-15 21:03:50,126 - Using hadoop conf dir: /usr/hdp/2.6.4.0-91/hadoop/conf

2018-04-15 21:03:50,127 - Group['livy'] {}

2018-04-15 21:03:50,128 - Group['spark'] {}

2018-04-15 21:03:50,129 - Group['hdfs'] {}

2018-04-15 21:03:50,129 - Group['hadoop'] {}

2018-04-15 21:03:50,129 - Group['users'] {}

2018-04-15 21:03:50,130 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,130 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,131 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,132 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,133 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,134 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}

2018-04-15 21:03:50,134 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'users'], 'uid': None}

2018-04-15 21:03:50,135 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs'], 'uid': None}

2018-04-15 21:03:50,136 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,137 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,138 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': [u'hadoop'], 'uid': None}

2018-04-15 21:03:50,138 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2018-04-15 21:03:50,140 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2018-04-15 21:03:50,145 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if

2018-04-15 21:03:50,145 - Group['hdfs'] {}

2018-04-15 21:03:50,145 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', u'hdfs']}

2018-04-15 21:03:50,146 - FS Type:

2018-04-15 21:03:50,146 - Directory['/etc/hadoop'] {'mode': 0755}

2018-04-15 21:03:50,161 - File['/usr/hdp/2.6.4.0-91/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2018-04-15 21:03:50,162 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}

2018-04-15 21:03:50,176 - Repository['HDP-2.6-repo-1'] {'append_to_file': False, 'base_url': 'http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/2.6.4.0', 'action': ['create'], 'components': [u'HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}

2018-04-15 21:03:50,183 - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-2.6-repo-1]\nname=HDP-2.6-repo-1\nbaseurl=http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/2.6.4.0\n\npath=/\nenabled=1\ngpgcheck=0'}

2018-04-15 21:03:50,184 - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match

2018-04-15 21:03:50,184 - Repository['HDP-2.6-GPL-repo-1'] {'append_to_file': True, 'base_url': 'http://public-repo-1.hortonworks.com/HDP-GPL/centos7/2.x/updates/2.6.4.0', 'action': ['create'], 'components': [u'HDP-GPL', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}

2018-04-15 21:03:50,188 - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-2.6-repo-1]\nname=HDP-2.6-repo-1\nbaseurl=http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/2.6.4.0\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-2.6-GPL-repo-1]\nname=HDP-2.6-GPL-repo-1\nbaseurl=http://public-repo-1.hortonworks.com/HDP-GPL/centos7/2.x/updates/2.6.4.0\n\npath=/\nenabled=1\ngpgcheck=0'}

2018-04-15 21:03:50,188 - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match

2018-04-15 21:03:50,188 - Repository['HDP-UTILS-1.1.0.22-repo-1'] {'append_to_file': True, 'base_url': 'http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.22/repos/centos7', 'action': ['create'], 'components': [u'HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-1', 'mirror_list': None}

2018-04-15 21:03:50,192 - File['/etc/yum.repos.d/ambari-hdp-1.repo'] {'content': '[HDP-2.6-repo-1]\nname=HDP-2.6-repo-1\nbaseurl=http://public-repo-1.hortonworks.com/HDP/centos7/2.x/updates/2.6.4.0\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-2.6-GPL-repo-1]\nname=HDP-2.6-GPL-repo-1\nbaseurl=http://public-repo-1.hortonworks.com/HDP-GPL/centos7/2.x/updates/2.6.4.0\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.22-repo-1]\nname=HDP-UTILS-1.1.0.22-repo-1\nbaseurl=http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.22/repos/centos7\n\npath=/\nenabled=1\ngpgcheck=0'}

2018-04-15 21:03:50,192 - Writing File['/etc/yum.repos.d/ambari-hdp-1.repo'] because contents don't match

2018-04-15 21:03:50,192 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-04-15 21:03:50,271 - Skipping installation of existing package unzip

2018-04-15 21:03:50,271 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-04-15 21:03:50,280 - Skipping installation of existing package curl

2018-04-15 21:03:50,280 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-04-15 21:03:50,288 - Skipping installation of existing package hdp-select

2018-04-15 21:03:50,293 - The repository with version 2.6.4.0-91 for this command has been marked as resolved. It will be used to report the version of the component which was installed

2018-04-15 21:03:50,299 - Skipping stack-select on MYSQL_SERVER because it does not exist in the stack-select package structure.

2018-04-15 21:03:50,489 - MariaDB RedHat Support: false

2018-04-15 21:03:50,493 - Using hadoop conf dir: /usr/hdp/2.6.4.0-91/hadoop/conf

2018-04-15 21:03:50,507 - call['ambari-python-wrap /usr/bin/hdp-select status hive-server2'] {'timeout': 20}

2018-04-15 21:03:50,529 - call returned (0, 'hive-server2 - 2.6.4.0-91')

2018-04-15 21:03:50,530 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=None -> 2.6

2018-04-15 21:03:50,565 - File['/var/lib/ambari-agent/cred/lib/CredentialUtil.jar'] {'content': DownloadSource('http://eureambarimaster1.local.eurecat.org:8080/resources/CredentialUtil.jar'), 'mode': 0755}

2018-04-15 21:03:50,566 - Not downloading the file from http://eureambarimaster1.local.eurecat.org:8080/resources/CredentialUtil.jar, because /var/lib/ambari-agent/tmp/CredentialUtil.jar already exists

2018-04-15 21:03:50,566 - checked_call[('/usr/jdk64/jdk1.8.0_112/bin/java', '-cp', u'/var/lib/ambari-agent/cred/lib/*', 'org.apache.ambari.server.credentialapi.CredentialUtil', 'get', 'javax.jdo.option.ConnectionPassword', '-provider', u'jceks://file/var/lib/ambari-agent/cred/conf/mysql_server/hive-site.jceks')] {}

2018-04-15 21:03:51,189 - checked_call returned (0, 'SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".\nSLF4J: Defaulting to no-operation (NOP) logger implementation\nSLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.\nApr 15, 2018 9:03:50 PM org.apache.hadoop.util.NativeCodeLoader <clinit>\nWARNING: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable\neurecat123')

2018-04-15 21:03:51,197 - Package['mysql-community-release'] {'retry_on_repo_unavailability': False, 'retry_count': 5}

2018-04-15 21:03:51,278 - Installing package mysql-community-release ('/usr/bin/yum -d 0 -e 0 -y install mysql-community-release')

2018-04-15 21:03:52,017 - Execution of '/usr/bin/yum -d 0 -e 0 -y install mysql-community-release' returned 1. Error: Nothing to do

2018-04-15 21:03:52,017 - Failed to install package mysql-community-release. Executing '/usr/bin/yum clean metadata'

2018-04-15 21:03:52,211 - Retrying to install package mysql-community-release after 30 seconds

2018-04-15 21:04:27,630 - The repository with version 2.6.4.0-91 for this command has been marked as resolved. It will be used to report the version of the component which was installed

2018-04-15 21:04:27,636 - Skipping stack-select on MYSQL_SERVER because it does not exist in the stack-select package structure.

Command failed after 1 tries
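For anyone debugging the same failure, it can help to pull the exact command Ambari ran out of the agent output and re-run it by hand on the affected host, where yum prints its full message. The following is only a sketch: `failed_command` is a hypothetical helper name, not part of Ambari, and the file path in the usage comment is the one reported in the stdout line of the error above.

```shell
#!/bin/sh
# Hypothetical helper (not part of Ambari): reads agent log text on stdin and
# prints the command quoted in each "Execution of '...' returned N." message.
failed_command() {
    sed -n "s/.*Execution of '\(.*\)' returned [0-9][0-9]*\..*/\1/p"
}

# Example usage on the agent host (path taken from the error above):
#   failed_command < /var/lib/ambari-agent/data/output-129.txt | sort -u
```

Running the printed yum command manually, without `-d 0 -e 0`, should show more detail about why yum has nothing to install.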

Created 04-15-2018 10:56 PM

Starting with HDP 2.6.4, MySQL is no longer installed automatically because of licensing issues, and all GPL-licensed components are being removed from now on. If you want to use MySQL for Hive, you have to install it yourself.

# wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

# sudo rpm -ivh mysql-community-release-el7-5.noarch.rpm

# yum update

You also need to make sure that the JDBC driver is set up via the ambari-server setup command, as follows:

# yum install -y mysql-connector-java

# ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar

Some more details here: https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.1.5/bk_ambari-administration/content/using_hive...
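Before running the setup command, it may be worth confirming that the connector jar really exists at the path you pass to Ambari. The sketch below only builds the command string and refuses to do so when the jar is missing. `jdbc_setup_command` is a hypothetical helper name; the flags and the default path are the ones from the commands in this post.

```shell
#!/bin/sh
# Hypothetical helper: prints the ambari-server setup command for a given
# JDBC jar, or fails with a message on stderr if the jar does not exist.
jdbc_setup_command() {
    jar="$1"
    if [ ! -f "$jar" ]; then
        echo "JDBC driver not found: $jar" >&2
        return 1
    fi
    echo "ambari-server setup --jdbc-db=mysql --jdbc-driver=$jar"
}

# Example usage (prints the command instead of running it):
#   jdbc_setup_command /usr/share/java/mysql-connector-java.jar
```

Printing the command rather than executing it keeps the check side-effect free; run the printed line yourself once the path looks right.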

Created on 04-15-2018 11:21 PM - edited 08-17-2019 08:25 PM

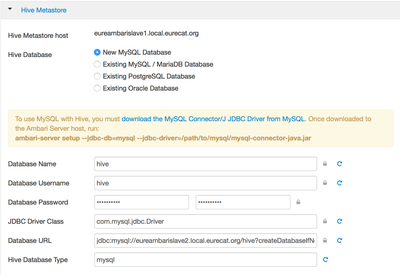

I want to add Hive service to my cluster. In the Add Service Wizard I see the attached screen. It looks like MySQL is the default option. So, what is a recommended way to add Hive? I do not have any special requirement for selecting MySQL. My final goal is to install Spark2 which requires Hive.

Created 04-15-2018 11:44 PM

Because of licensing issues, the MySQL server is no longer installed by default (unlike in earlier versions), so you will have to install the MySQL server manually on the host "xxxambarislave2.local.xxx.org". After starting the MySQL server on that host, you can run the Ambari "Add Service" wizard for Hive.

The detailed steps for using MySQL with Hive in HDP 2.6.4 are described as a prerequisite in the following section:

"Installing and Configuring MySQL" : https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.6.4/bk_command-line-installation/content/meet-m...

Created 04-15-2018 11:53 PM

Basically, the steps will be something like the following:

1. You can install MySQL 5.5 or later. On the Ambari host, install the JDBC driver for MySQL, and then add it to Ambari:

# yum install mysql-connector-java*

If mysql-connector-java is not available in your YUM repo, you can manually download the driver ("mysql-connector-java-5.1.35.zip" or later) from https://dev.mysql.com/downloads/connector/j/5.1.html onto the Ambari server host and extract it there. Put the connector jar in a location such as /usr/share/java/mysql-connector-java.jar

2. Run the ambari-server setup command as follows, then restart the Ambari server:

# sudo ambari-server setup --jdbc-db=mysql --jdbc-driver=/usr/share/java/mysql-connector-java.jar

3. Now install the MySQL server on the desired host. In your case it may be "xxxambarislave2.local.xxx.org". Log in via SSH to the node on which you want to install MySQL. Then install the MySQL community release package and the MySQL community server, and start the MySQL service:

# yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

# yum install mysql-community-server

# systemctl start mysqld.service

4. Obtain a randomly generated MySQL root password:

# grep 'A temporary password is generated for root@localhost' /var/log/mysqld.log |tail -1

5. Reset the MySQL root password. Run the following command and, when prompted, enter the password you obtained in the previous step. MySQL will then ask you to change the password:

# /usr/bin/mysql_secure_installation

Then, from the Ambari UI, you can install the Hive service and enter the MySQL server details.
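For step 4, the grep prints the whole log line, while only the part after the last colon is the password. A minimal sketch that trims it down is shown here, assuming the MySQL 5.7 log format in which the line ends with `root@localhost: <password>`. `temp_root_password` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: reads mysqld log text on stdin and prints only the
# password from the most recent "temporary password" line.
# Assumes the line ends with "root@localhost: <password>".
temp_root_password() {
    grep 'A temporary password is generated for root@localhost' \
        | tail -1 \
        | sed 's/.*root@localhost: //'
}

# Example usage on the MySQL host:
#   temp_root_password < /var/log/mysqld.log
```

If nothing is printed, the log has no such line, which may mean the server version does not generate a temporary password or mysqld has not been started yet.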


Created 04-16-2018 10:28 AM

I followed your recommendations, but I got the following conflict error when installing the MySQL server:

resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/bin/yum -d 0 -e 0 -y install mysql-community-release' returned 1. Error: mysql57-community-release conflicts with mysql-community-release-el7-7.noarch

You could try using --skip-broken to work around the problem

You could try running: rpm -Va --nofiles --nodigest
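A conflict like this suggests that two different MySQL repository release packages are in play on the host. One way to see which ones are already installed is to filter the `rpm -qa` output, as sketched below. `list_mysql_release_rpms` is a hypothetical helper name, and the pattern assumes the package naming seen in this thread (`mysql-community-release-...` and `mysql57-community-release-...`).

```shell
#!/bin/sh
# Hypothetical helper: filters `rpm -qa` style output on stdin down to the
# MySQL repository release packages (e.g. mysql-community-release-el7-7,
# mysql57-community-release-el7-8). Server and client packages are ignored.
list_mysql_release_rpms() {
    grep -E '^mysql[0-9]*-community-release-'
}

# Example usage on the host where the install fails:
#   rpm -qa | list_mysql_release_rpms
```

If more than one release package shows up, the package Ambari asks yum to install is likely to clash with the one installed by hand.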

Created 04-16-2018 12:40 PM

Your initial suggestion helped:

# wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

# sudo rpm -ivh mysql-community-release-el7-5.noarch.rpm

But the steps given below led either to a conflict or to the Ambari installation wizard being unable to run "yum install mysql-community-release":

# yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

# yum install mysql-community-server

# systemctl start mysqld.service