NIFI load data from CSV to database

Labels: Apache NiFi

Created on 04-24-2018 01:00 PM - edited 08-18-2019 02:31 AM

Created 04-24-2018 01:06 PM

PutDatabaseRecord lets you insert multiple records from a single flow file into a database at once, without first converting them to SQL (PutSQL can do the latter, but it is less efficient). In your case you only need GetFile -> PutDatabaseRecord. Your CSVReader holds the schema for the data, which tells PutDatabaseRecord the type of each field. The processor uses those types to bind the fields to the prepared statement and executes the whole flow file as a single batch.
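To make the "one prepared statement, one batch" idea concrete, here is a minimal Python sketch of the same pattern outside NiFi. It is not how PutDatabaseRecord is implemented; the function name `insert_csv_batch`, the in-memory SQLite database, and the sample table and rows are all assumptions chosen for illustration.

```python
import csv
import io
import sqlite3


def insert_csv_batch(conn, table, csv_text):
    """Insert every data row of csv_text into table as one batch.

    Hypothetical helper: the table and header names are trusted here and
    are interpolated into the SQL text; only the values are bound.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)  # the first line names the target columns
    rows = [tuple(r) for r in reader if r]  # skip blank lines
    columns = ", ".join(header)
    placeholders = ", ".join("?" for _ in header)
    sql = f"INSERT INTO {table} ({columns}) VALUES ({placeholders})"
    with conn:  # commits on success, rolls back if any row fails
        conn.executemany(sql, rows)  # one prepared statement, many rows
    return len(rows)


# Stand-in database and data (assumed, for demonstration only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE acct (acct_id TEXT, acct_name TEXT)")
inserted = insert_csv_batch(conn, "acct", "acct_id,acct_name\n1,A\n2,B\n")
```

The point of the sketch is that the statement is prepared once and the rows are sent together, rather than generating and executing one SQL string per record.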

Created 10-31-2018 10:42 PM

I am trying to insert a few sample records (.csv) into Teradata using NiFi. My current workflow is the same as you suggested:

GetFile -> PutDatabaseRecord

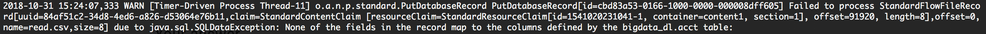

However, I am getting the error shown below. Please advise. I appreciate your help!

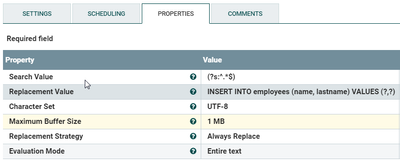

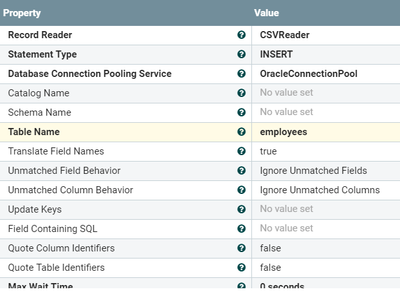

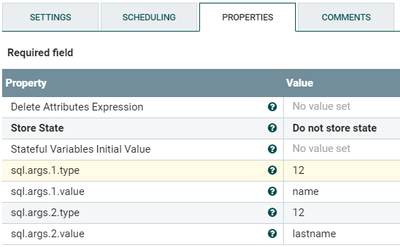

Please find the configurations for the 2 processors:

This is what the data looks like:

ACCT_ID,ACCT_NAME

1,A

2,B

Table definition:

create table bigdata_dl.acct(

ACCT_ID VARCHAR(30),

ACCT_NAME VARCHAR(30)

);

Created 11-01-2018 08:28 PM

Since your CSV headers match the column names exactly, try setting Translate Field Names to false.
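As a rough illustration of why this setting matters, the sketch below compares field names to column names with and without translation. The normalization rule used here (upper-casing and dropping underscores) is an assumption about how translation behaves, not something stated in this thread, and `normalize` and `match_fields` are hypothetical helpers.

```python
def normalize(name, translate):
    """Upper-case and drop underscores when translating; otherwise keep as is."""
    return name.upper().replace("_", "") if translate else name


def match_fields(field_names, column_names, translate):
    """Map each record field to a table column, or None when nothing matches."""
    columns = {normalize(c, translate): c for c in column_names}
    return {f: columns.get(normalize(f, translate)) for f in field_names}


# With translation, loosely matching names still find their column.
loose = match_fields(["acct_id", "AcctName"], ["ACCT_ID", "ACCT_NAME"], translate=True)

# Without translation, only exact names match.
strict = match_fields(["acct_id", "ACCT_NAME"], ["ACCT_ID", "ACCT_NAME"], translate=False)
```

When the CSV header already matches the column names character for character, the exact comparison is enough and no translation step is involved.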

Created on 10-21-2019 11:54 PM - edited 10-21-2019 11:56 PM

I have a CSV file that might contain corrupt data as well.

How can I still insert the correct data and collect the corrupted data in a separate flow?
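The split being asked about can be pictured with a small Python sketch that separates well-formed rows from rejected ones before any insert happens. This is only an illustration of the idea, not a NiFi configuration: `split_rows`, `looks_valid`, the validation rule, and the sample data are all hypothetical. Within NiFi, the ValidateRecord processor is one option to look at, since it routes records to separate valid and invalid relationships.

```python
import csv
import io


def split_rows(csv_text, is_valid):
    """Return (valid, invalid) lists of row dicts parsed from csv_text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    valid, invalid = [], []
    for row in reader:
        # DictReader stores extra fields under the key None and fills
        # missing fields with None, so both cases are detectable here.
        well_formed = None not in row and all(v is not None for v in row.values())
        if well_formed and is_valid(row):
            valid.append(row)
        else:
            invalid.append(row)
    return valid, invalid


def looks_valid(row):
    """Assumed rule: numeric ACCT_ID and a non-empty ACCT_NAME."""
    return row["ACCT_ID"].isdigit() and bool(row["ACCT_NAME"].strip())


# Sample with three bad rows: non-numeric id, missing field, extra field.
sample = "ACCT_ID,ACCT_NAME\n1,A\nabc,B\n3\n4,D,extra\n5,E\n"
valid, invalid = split_rows(sample, looks_valid)
```

The valid list can then go to the database insert, while the invalid list is written elsewhere for inspection.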