Need to understand why Job taking long time in reduce phase, approximately 2 hrs for one reduce task

Labels: Apache Hadoop, Apache Hive

Created 01-26-2016 04:54 AM

In the reduce task log below, there is a gap between the line at 2016-01-25 00:10:27,135 and the next line, which starts at 2016-01-25 02:18:46,599: approximately 2 hrs for one reduce task.

2016-01-25 00:10:27,133 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.Fetcher: for url=13562/mapOutput?job=job_1450565638170_43987&reduce=11&map=attempt_1450565638170_43987_m_000021_1 sent hash and received reply

2016-01-25 00:10:27,133 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.Fetcher: fetcher#22 about to shuffle output of map attempt_1450565638170_43987_m_000021_1 decomp: 592674 len: 118056 to MEMORY

2016-01-25 00:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput: Read 592674 bytes from map-output for attempt_1450565638170_43987_m_000021_1

2016-01-25 00:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 592674, inMemoryMapOutputs.size() -> 4, commitMemory -> 807365521, usedMemory ->1104745434

2016-01-25 00:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.ShuffleSchedulerImpl: XXXXXXXXXXXXXXXXXXXXXXXXXX:XXXX freed by fetcher#22 in 5ms

2016-01-25 02:18:46,599 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput: Read 296787239 bytes from map-output for attempt_1450565638170_43987_m_000010_0

2016-01-25 02:18:46,614 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 296787239, inMemoryMapOutputs.size() -> 5, commitMemory -> 807958195, usedMemory ->1104745434

2016-01-25 02:18:46,614 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl: Starting inMemoryMerger's merge since commitMemory=1104745434 > mergeThreshold=992137472. Current usedMemory=1104745434

2016-01-25 02:18:46,614 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.MergeThread: InMemoryMerger - Thread to merge in-memory shuffled map-outputs: Starting merge with 5 segments, while ignoring 0 segments

2016-01-25 02:18:46,615 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.ShuffleSchedulerImpl: am1plccmrhdd15.r1-core.r1.aig.net:13562 freed by fetcher#12 in 7726688ms

2016-01-25 02:18:46,615 INFO [EventFetcher for fetching Map Completion Events] org.apache.hadoop.mapreduce.task.reduce.EventFetcher: EventFetcher is interrupted.. Returning
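To put numbers on the gap in the log above, here is a small Python sketch (not part of the original post). It parses the two timestamps, converts the 7726688ms reported by ShuffleSchedulerImpl, and derives a rough transfer rate for the 296787239-byte map output. The rate assumes the whole held interval was spent on that single map output, which the log excerpt does not confirm.

```python
from datetime import datetime, timedelta

# Timestamp layout used by the log lines above (comma before the milliseconds).
LOG_TS_FORMAT = "%Y-%m-%d %H:%M:%S,%f"


def parse_log_ts(text):
    """Parse a timestamp such as '2016-01-25 00:10:27,135'."""
    return datetime.strptime(text, LOG_TS_FORMAT)


# Last line before the gap and first line after it (values copied from the log).
last_before_gap = parse_log_ts("2016-01-25 00:10:27,135")
first_after_gap = parse_log_ts("2016-01-25 02:18:46,599")
gap = first_after_gap - last_before_gap

# "freed by fetcher#12 in 7726688ms": how long fetcher#12 held the host.
held = timedelta(milliseconds=7726688)
freed_at = parse_log_ts("2016-01-25 02:18:46,615")
fetch_start = freed_at - held

# Rough rate, ASSUMING the held interval covers only the 296787239-byte output.
bytes_read = 296787239
bytes_per_second = bytes_read / held.total_seconds()

print("gap between log lines:", gap)
print("host held by fetcher#12:", held)
print("fetcher#12 acquired host at:", fetch_start)
print("approx. bytes per second:", round(bytes_per_second))
```

On these numbers, fetcher#12 held the host for about 2 h 8 min 47 s, starting at roughly 00:09:59.927, so that one fetch spans essentially the whole gap, at something on the order of 38 KB/s.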

Created 01-26-2016 08:23 PM

Have you enabled map-side compression to reduce the amount of data moved across the cluster when shuffling to the reducers? How long did your longest map task take to run (start time and end time)?

set mapreduce.map.output.compress=true;

set mapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.SnappyCodec;

Created 01-26-2016 03:56 PM

Created 01-26-2016 09:27 PM

@Joseph Niemiec, the longest-running mapper took 2 mins 54 secs.

Compression is currently set to false. As suggested above, it makes sense to compress the map output so that compressed data is sent across the network.

Since this is a Hive job, I believe the property below needs to be enabled:

hive.exec.compress.intermediate=true

I don't see an option to change the compression codec for intermediate data in Hive; it looks like it picks up the Hadoop default compression codec. That has not been defined in our environment, so I guess I will have to set this property and test it.

Is there a way to set the MapReduce intermediate compression from the job itself instead of making a global change?

Created 01-27-2016 01:08 AM

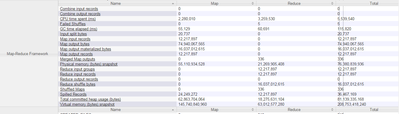

Because you're running the Hive query on the MR engine, the MR properties will be respected. You can tune slowstart, heap sizes, and compression just by setting MR properties in the Hive session/job as shown below; there is no need to set the Hive property you mentioned above if we explicitly set the MR ones. Also, can we get a screenshot of your counters page? You can get to it from the overview page on the left. I am most interested in the 'MapReduce Framework Counters'.

##Setting MR Props in Hive##

set mapreduce.map.output.compress=true;

set mapreduce.map.output.compress.codec=org.apache.hadoop.io.compress.SnappyCodec;
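If the job is launched from a script rather than typed into a session, the same per-job overrides can be generated programmatically. The sketch below is only an illustration, assuming the Hive CLI is used with its `--hiveconf` and `-f` options; the helper names, the script name `my_query.hql`, and the slowstart value of 0.8 are hypothetical and not from this thread.

```python
import shlex

# Per-job overrides. The first two come from the post above; the slowstart
# entry is an ILLUSTRATIVE value only, not a recommendation from the thread.
SESSION_PROPS = {
    "mapreduce.map.output.compress": "true",
    "mapreduce.map.output.compress.codec": "org.apache.hadoop.io.compress.SnappyCodec",
    "mapreduce.job.reduce.slowstart.completedmaps": "0.8",
}


def as_set_statements(props):
    """Render the properties as 'set key=value;' lines for a Hive session."""
    return "\n".join(f"set {key}={value};" for key, value in props.items())


def as_hive_cli_args(props, script_path):
    """Build an argument list that passes the properties via --hiveconf."""
    args = ["hive"]
    for key, value in props.items():
        args += ["--hiveconf", f"{key}={value}"]
    args += ["-f", script_path]
    return args


# Option 1: paste these lines at the top of the Hive script or session.
print(as_set_statements(SESSION_PROPS))

# Option 2: pass them on the command line (my_query.hql is a hypothetical file).
print(shlex.join(as_hive_cli_args(SESSION_PROPS, "my_query.hql")))
```

Either way the settings apply only to that session or invocation, so nothing has to change in the cluster-wide configuration.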

Created on 01-27-2016 04:01 AM - edited 08-19-2019 04:15 AM

Thanks, I will try setting those MR properties through Hive. Below is a screenshot of the MR framework counters.

Created 01-26-2016 09:28 PM

@Neeraj Sabharwal, this best practice document is really helpful, thank you.

Created 06-20-2016 11:15 PM

@Jagdish Saripella, @Joseph Niemiec

Have you figured out the problem? I ran into a similar issue. This is unlikely to be a data-skew issue.
