Support Questions

- Cloudera Community

- Support

- Support Questions

- Need to understand why Job taking long time in red...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

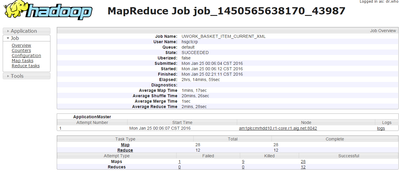

Need to understand why Job taking long time in reduce phase, approximately 2 hrs for one reduce task

- Labels:

-

Apache Hadoop

-

Apache Hive

Created 01-26-2016 04:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@ 2016-01-25 00:10:27,135 to next line which started @ 2016-01-25 02:18:46,599, approximately 2 hrs for one reduce task

2016-01-25 00:10:27,133 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.Fetcher: for url=13562/mapOutput?job=job_1450565638170_43987&reduce=11&map=attempt_1450565638170_43987_m_000021_1 sent hash and received reply 2016-01-25 00:10:27,133 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.Fetcher: fetcher#22 about to shuffle output of map attempt_1450565638170_43987_m_000021_1 decomp: 592674 len: 118056 to MEMORY 2016-01-25 00:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput: Read 592674 bytes from map-output for attempt_1450565638170_43987_m_000021_1 2016-01-25 00:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 592674, inMemoryMapOutputs.size() -> 4, commitMemory -> 807365521, usedMemory ->1104745434 2016-01-25 00:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.ShuffleSchedulerImpl: XXXXXXXXXXXXXXXXXXXXXXXXXX:XXXX freed by fetcher#22 in 5ms 2016-01-25 02:18:46,599 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput: Read 296787239 bytes from map-output for attempt_1450565638170_43987_m_000010_0 2016-01-25 02:18:46,614 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 296787239, inMemoryMapOutputs.size() -> 5, commitMemory -> 807958195, usedMemory ->1104745434 2016-01-25 02:18:46,614 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl: Starting inMemoryMerger's merge since commitMemory=1104745434 > mergeThreshold=992137472. Current usedMemory=1104745434 2016-01-25 02:18:46,614 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.MergeThread: InMemoryMerger - Thread to merge in-memory shuffled map-outputs: Starting merge with 5 segments, while ignoring 0 segments 2016-01-25 02:18:46,615 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.ShuffleSchedulerImpl: am1plccmrhdd15.r1-core.r1.aig.net:13562 freed by fetcher#12 in 7726688ms 2016-01-25 02:18:46,615 INFO [EventFetcher for fetching Map Completion Events] org.apache.hadoop.mapreduce.task.reduce.EventFetcher: EventFetcher is interrupted.. Returning

Created 01-26-2016 05:15 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

it depends on several parameters. Time it took to copy data to the reducer so networking can be a bottleneck, slow storage, etc. You can look at adding more reducers to your job. I can't tell whether you're running java program. There are different ways of setting number of reducers for specific types of jobs.

Created 01-26-2016 05:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What is the size of the dataset you are trying to process? Could you please paste you YARN/MapReduce container configurations as well as your hardware specs.?

Created 01-26-2016 12:57 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

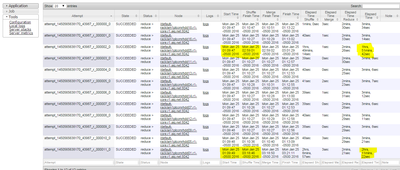

Please go to the RM UI, and for the Job go the the 'Counters' Under MapReduce counters you need to check both how many Reducer_Input_Groups there are and additionally how many of these Groups were assigned to each Reducer.

The Reducer_Input_Groups represents the number of distinct keys your Mappers produce, each distinct key will belong to a Reducer were a Reducer may have 1+N Keys it takes care of. therfore you can have as many reduces as you have reducer_input_groups, but not more then that.

Also you should check the number of Reducer_Output_Records and compare that to the SpilledRecords value for the reduces. If your spllied records to reducer output records are not 1:1 then you may want to consider making your Reducers alittle larger to prevent the extra IO Spill from taking place.

Created 01-26-2016 02:10 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Jonas Straub : hardware config : we have a 24 node cluster out of that 18 are the data nodes 4 master nodes & a edge & service node . The individual servers are 24 core where as master having 32 cores and over all compute power of 1.34TB .

Minimum yarn container size is 2048MB

Looks like the table was dropped so I donot have information on the amount of data that was worked on .

Created 01-26-2016 02:31 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

See @Jonas Straub comment. Data size plays a big role on understanding the time.

Created on 01-26-2016 02:37 PM - edited 08-19-2019 04:15 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Artem Ervits : I guess i have provided incomplete information , my apologizes. . This is a hive job which selects data from few tables unions them and then insert overwrites into one other table ( i donot have info for the amount of data the original tables, probably they were dropped ). The job actually spuns 28 mappers 12 reducers , out of this 10 reducers have completed the job under 3 mins expect for 2 which took approximately 2 hours .

This job is a cron and it has been running for quite few days , no config changes were done from infrastructure end .

One other odd thing about this is that some days it spun reducers and other days it doesn't . ( does this depend on the amount of data that is being parsed ?

The initial post yarn logs shows @ what point it took long and where it took long time

- 2016-01-2500:10:27,135 INFO [fetcher#22] org.apache.hadoop.mapreduce.task.reduce.ShuffleSchedulerImpl: XXXXXXXXXXXXXXXXXXXXXXXXXX:XXXX freed by fetcher#22 in 5ms

- 2016-01-2502:18:46,599 INFO [fetcher#12] org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput: Read 296787239 bytes from map-output for attempt_1450565638170_43987_m_000010_0

Created 01-26-2016 02:43 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Your problem is not in the Reduce stage, the problem shown here is network shuffle time and this can be 2 things

1) it could mean a heavy amount of key skew (I doubt it does in this case due to the reduce stage time)

2) it more likely is that these reduces have started before all the mappers have complete, so you have reduces stuck in the network shuffle phase for a long time, a reducer cannot complete until ALL Mappers are done so it can fetch all the keys it needs for processing.

Try changing your slow-start so reduces start later then maps.

set mapreduce.job.reduce.slowstart.completedmaps=0.5;

Created 01-27-2016 01:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also both of these log entries are shuffle related for the most part, its the Reduces fetching the Map outputs from nodes in the cluster. Which leads me to question things like network transfer rates the NICs had when this occurred, as its only 2 nodes that demonstrate the slow shuffle times. I am not sure if you have metrics available tho or not.

Created 01-26-2016 03:31 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Joseph Niemiec : Thanks for reply the current setting is as below :

hive> set mapreduce.job.reduce.slowstart.completedmaps;

mapreduce.job.reduce.slowstart.completedmaps=0.8

So i believe 80% of mappers should be completed before reducer kicks in ..