NiFi Flow xml/json getting corrupted in multi node setup

- Labels: Apache NiFi

Created 05-01-2023 11:49 PM

Hi All,

I have NiFi running on an EKS cluster with 3 nodes. Every now and then, flow.xml.gz gets corrupted on one of the nodes, and that node fails to start back up. The same thing happens to the other nodes sooner or later, and ultimately NiFi crashes.

The error from nodes startup logs -

schema validation Error parsing Flow configuration at line <line_no>, col <col no > : cvc-complex-type:2.4.d : Invalid content was found starting with element 'property'. No child element is expected at this point.

Maintaining NiFi has become painful because of this issue. Please suggest a solution.

Created 05-02-2023 11:34 PM

@srv1009, Welcome to our community! To help you get the best possible answer, I have tagged our NiFi experts @MattWho @SAMSAL @cotopaul @DigitalPlumber who may be able to assist you further.

Please feel free to provide any additional information or details about your query, and we hope that you will find a satisfactory solution to your question.

Regards,

Vidya Sargur, Community Manager

Created 05-03-2023 08:11 AM

I am doing root cause analysis, and the source of the issue seems to be an abrupt NiFi shutdown that corrupts the flow file (flow.xml.gz).

The logs before the first error -

Received Trapped Signal.. Shutting down

Apache Nifi has accepted the shutdown signal and is shutting down now.

Failed to determine if process 165 is running or not, assuming it is not.

Further information -

I am using a 3-node NiFi cluster with 32GB memory. The heap memory allocated is 24GB.

Created 05-03-2023 10:08 AM

@srv1009,

In my overall experience with NiFi, the problem you have reported has only happened for one of the following two reasons:

- As you already mentioned, an abrupt NiFi shutdown, which of course might corrupt your file. This is easily solved: stop doing such shutdowns 🙂

- The physical disk on which NiFi is located gets corrupted and, as a result, starts corrupting other files as well. This has an easy solution as well: test your hardware health and replace the hardware if necessary.

Either way, the reason this happens is recorded in either the NiFi logs or the server logs 🙂

Created 05-03-2023 09:49 PM

The logs I have access to say only what I mentioned: "Received trapped signal.. shutting down". I am trying to locate the source of the issue, but the logs are not helping.

Could you tell me which log files to look into? I will try to get my hands on them in a lower environment and see if I can locate the source.

Created 05-04-2023 12:44 AM

Assuming that you are running on Linux, you need to find your operating system logs. In most Linux distributions, those logs are found in /var/log. However, every sysadmin configures servers according to company rules and requirements, so I suggest you speak with the team responsible for the Linux server and ask them to provide you with the logs. In these logs, you might find out why you are receiving that error in the first place.

Unfortunately, this problem is not really related to NiFi, but to your infrastructure or to how somebody uses your NiFi instance. Somebody is doing something and you need to find out who and what 😞

Created on 05-04-2023 02:30 AM - edited 05-04-2023 02:40 AM

@cotopaul wrote: Assuming that you are running on Linux, you need to find your operating system logs. In most Linux distributions, those logs are found in /var/log. However, every sysadmin configures servers according to company rules and requirements, so I suggest you speak with the team responsible for the Linux server and ask them to provide you with the logs. In these logs, you might find out why you are receiving that error in the first place.

Unfortunately, this problem is not really related to NiFi, but to your infrastructure or to how somebody uses your NiFi instance. Somebody is doing something and you need to find out who and what 😞

I totally agree with you, but I just want to confirm one thing. I am running NiFi on EKS, and Kubernetes seems to restart the pod every once in a while based on memory usage or other metrics it tracks.

The pattern I see in the logs is that NiFi shuts down just before my flow file gets corrupted. So I am assuming that an abrupt shutdown triggered by EKS is probably causing the flow file to become corrupted.

Now, the bigger question: whatever the reason for the shutdown, if NiFi is receiving a shutdown signal and shutting down, isn't it supposed to shut down gracefully?

I tried replicating this in a lower environment. Whenever I terminate the running pod directly, it shuts down NiFi and corrupts the flow XML 8 out of 10 times, which makes me believe that in those 8 cases it was shut down while updating the flow XML.

Is there a way I can confirm whether NiFi keeps the flow intact during a regular shutdown, or is this expected behaviour?

We expect pods to be restarted by Kubernetes. One of the reasons we use a multi-node architecture is that if one node goes down, the others keep the application running while the down node is brought back up behind the scenes.

But here, if we lose a node altogether every time it runs into an issue, we eventually end up with 0 functional nodes, which is what is currently happening.

How are we supposed to address that?
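To check whether a given flow.xml.gz or flow.json.gz survived a shutdown, one option is to test that it still decompresses and parses. This is a minimal sketch, not a NiFi tool: `check_flow_file` is a hypothetical helper, and it only detects truncated gzip streams and malformed XML/JSON. It does not perform the XSD schema validation that produced the `cvc-complex-type` error reported at the start of this thread.

```python
import gzip
import json
import xml.etree.ElementTree as ET
import zlib
from pathlib import Path


def check_flow_file(path):
    """Return (ok, detail) for a gzip-compressed NiFi flow file."""
    path = Path(path)
    try:
        # Reading to the end forces the gzip trailer (CRC, size) to be checked.
        with gzip.open(path, "rb") as handle:
            payload = handle.read()
    except (OSError, EOFError, zlib.error) as exc:
        return False, f"gzip stream unreadable: {exc}"
    try:
        if path.name.endswith(".json.gz"):
            json.loads(payload.decode("utf-8"))
        else:
            # Well-formedness only; no schema validation is attempted here.
            ET.fromstring(payload)
    except (ValueError, ET.ParseError) as exc:
        return False, f"content not parseable: {exc}"
    return True, "ok"
```

Running this against the flow file after each test pod termination would show whether the file on disk is truncated or merely rejected by schema validation.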

Created 05-08-2023 09:52 AM

@srv1009

If NiFi is "restarting" itself, that means NiFi did not receive a graceful shutdown request.

When NiFi is launched, the bootstrap process is started. That bootstrap process then starts the NiFi core as a child process and monitors the child process pid. If that pid disappears or the NiFi core is not responding, the bootstrap process will assume the core has died and will restart it.

Typically in such scenarios, this is the result of the OS killing the NiFi core process. On Linux, the OOM Killer will do this when system memory gets too low and it sees that child process as the largest consumer of system memory. The latest versions of NiFi use a flow.json.gz to persist the dataflow. If you are still using a flow.xml.gz, what version of NiFi are you running?
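One way to test the OOM Killer theory is to search the node's kernel log (for example, `dmesg` output or a file under /var/log) for kill messages. The sketch below is a minimal example; the message format in the regex is an assumption based on typical Linux kernel output and can differ between kernel versions, and `find_oom_kills` is a hypothetical helper. On Kubernetes, a container killed for exceeding its memory limit is also usually reported with the reason `OOMKilled` in the pod status.

```python
import re

# Assumed shape of kernel OOM messages, e.g.
# "Out of memory: Killed process 165 (java) ..." or
# "Memory cgroup out of memory: Killed process 165 (java) ...".
OOM_PATTERN = re.compile(
    r"out of memory: kill(?:ed)? process (\d+) \(([^)]+)\)", re.IGNORECASE
)


def find_oom_kills(log_lines):
    """Return (pid, process_name) for each OOM kill message found."""
    kills = []
    for line in log_lines:
        match = OOM_PATTERN.search(line)
        if match:
            kills.append((int(match.group(1)), match.group(2)))
    return kills
```

The function accepts any iterable of lines, so it can be fed an open log file or the captured output of `dmesg`.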

Regardless, the NiFi flow is unpacked on startup and loaded into NiFi heap memory. When a change occurs on the canvas, the current flow.json.gz/flow.xml.gz is archived and a new one is created. Corruption in the newly written flow.json.gz/flow.xml.gz means the core NiFi process was killed while that file was being written out to disk. Or, as @cotopaul mentioned, you have some disk corruption going on.

With a graceful shutdown of the NiFi service, there is a 10 second grace period for current threads to complete before the core is killed. If you are having disk issues, high disk latency, or disk space issues, maybe the writing of your flow.xml.gz is taking a lot longer and the process is being killed before it completes. But the chances of that seem slim, as multiple things would need to happen in succession (a change on the canvas at almost the exact time you initiate a graceful shutdown). You could increase the graceful shutdown period in nifi.properties, but I doubt that is what is impacting you here.

If you found that the provided solution(s) assisted you with your query, please take a moment to login and click Accept as Solution below each response that helped.

Thank you,

Matt

Created 05-08-2023 10:38 AM

Thanks for your response. It was very helpful and informative.

I am using NiFi version 1.19. I have tried using flow.json.gz and ran into the same error there.

What I understand from the ShutdownHook code is that whenever a SIGTERM signal is issued from k8s, NiFi starts the shutdown, and if it doesn't shut down within the grace period configured in nifi.properties, the core process itself is forcefully destroyed.

As you said, the chances of the flow file getting corrupted are pretty slim, as many things need to happen in succession. I was wondering what else could cause the flow file to go bad.

Also, in the restart logs, I never saw an error saying that the flow file has gone bad; NiFi logs a warning with schema errors and just shuts down. Only when I replace the flow XML or rename the default flow file to something else does the warning message disappear and NiFi start up.

Another piece of information I would like to bring to your notice is that this happens in our prod environments too, where the flow is essentially read-only for everyone, so we are never making any change to the flow. Also, we have checkpointing enabled, and every few minutes it archives the current flow state. Does it function the same as making a change to the flow, i.e. archive the current flow XML and create a new one? If yes, then it could fit the rare sequence of events causing the flow to become corrupted.

Another thing:

Is it possible to configure NiFi startup behaviour such that, if the flow XML is corrupt, NiFi ignores it and starts up with a new flow XML? In higher environments, losing the flow is less of a concern than having to deploy NiFi again with a new flow XML.

We have NiFi Registry and our own flow deployment tools to sync the flow across environments and recover the flow quickly, but NiFi crashing every time something goes wrong with the flow XML doesn't help maintain high availability/resiliency.
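The thread does not identify a NiFi setting that skips a corrupt flow file. One possible workaround, sketched here under that assumption, is a pre-start step (for example, in the container entrypoint) that automates the manual rename described in this post: if the flow file cannot be decompressed, move it aside so NiFi does not try to load it. `sideline_unreadable_flow` and the `.corrupt-<timestamp>` suffix are hypothetical, and the check covers only gzip readability, not schema validity.

```python
import gzip
import time
import zlib
from pathlib import Path


def gzip_is_readable(path):
    """Return True if the whole gzip stream decompresses without error."""
    try:
        with gzip.open(path, "rb") as handle:
            # Read in chunks until the end so the trailer is verified too.
            while handle.read(1024 * 1024):
                pass
    except (OSError, EOFError, zlib.error):
        return False
    return True


def sideline_unreadable_flow(flow_path):
    """Rename an unreadable flow file; return the new path, or None if untouched."""
    flow_path = Path(flow_path)
    if not flow_path.exists() or gzip_is_readable(flow_path):
        return None
    stamp = time.strftime("%Y%m%d-%H%M%S")
    target = flow_path.with_name(f"{flow_path.name}.corrupt-{stamp}")
    flow_path.rename(target)
    return target
```

Keeping the renamed copy, rather than deleting it, leaves the damaged file available for later root cause analysis.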

Looking forward to your response.

Created 05-09-2023 09:16 AM

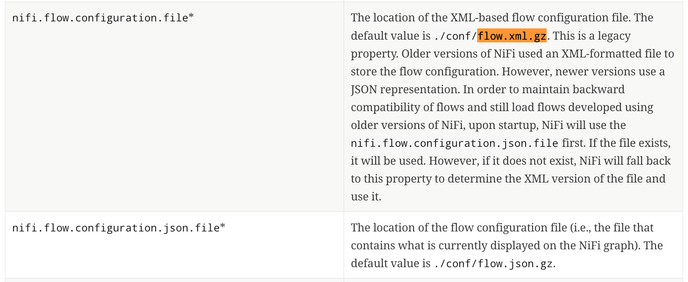

Are you sure you are removing or replacing a flow.xml.gz? Apache NiFi 1.19 uses the flow.json.gz.

The flow.json.gz is auto-generated from the flow.xml.gz if and only if the flow.json.gz does not already exist at startup.

As far as flow changes in your production environment go, all of the following are flow config changes:

1. Moving a component

2. Changing state of a component (start, stop, enable, disable)

3. Importing a flow from the nifi-registry or changing the version of a version controlled flow.

So you are saying none of the above ever happens in prod (seems unlikely)?

You mentioned that you have "checkpointing" enabled. The only "checkpointing" that NiFi does is NOT related to the flow.xml.gz or the flow.json.gz. It has to do with checkpointing the flowfile_repository, which contains metadata about NiFi FlowFiles queued within connections in your dataflows on the canvas.

The flow.json.gz is the only file used on startup once it has been generated. Are you having disk space issues?

Archive can be enabled for the flow.json.gz as part of the following properties:

Archiving of the flow.json.gz only happens when a configuration change is made on the NiFi canvas, or when some NiFi rest-api interaction results in changes to the dataflow(s). When enabled, archiving does not randomly generate archive copies of the flow.json.gz; it only happens with each change. So are you seeing an archive of the flow.json.gz generated every few minutes in the configured archive.dir? If so, then changes to your dataflow are happening.
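The property list referred to above did not survive in this copy of the thread. As a hedged sketch, the snippet below parses nifi.properties-style text and reports the flow archive settings. The `nifi.flow.configuration.archive.*` names and the sample values are recalled from a stock NiFi 1.x nifi.properties, not taken from this thread, so verify them against your own file; `archive_settings` is a hypothetical helper.

```python
ARCHIVE_PREFIX = "nifi.flow.configuration.archive."

SAMPLE = """\
# Assumed excerpt of conf/nifi.properties (values are illustrative)
nifi.flow.configuration.archive.enabled=true
nifi.flow.configuration.archive.dir=./conf/archive/
nifi.flow.configuration.archive.max.time=30 days
nifi.flow.configuration.archive.max.storage=500 MB
nifi.flow.configuration.archive.max.count=
"""


def archive_settings(properties_text):
    """Collect archive-related keys from nifi.properties-style text."""
    settings = {}
    for line in properties_text.splitlines():
        line = line.strip()
        # Skip blanks, comments, and anything that is not key=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        if key.startswith(ARCHIVE_PREFIX):
            settings[key[len(ARCHIVE_PREFIX):]] = value.strip()
    return settings


if __name__ == "__main__":
    for name, value in archive_settings(SAMPLE).items():
        print(f"{name} = {value or '(unset)'}")
```

Listing the directory named by the `dir` setting and comparing file timestamps would show how often the flow is actually being rewritten.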

Are you sure that the SIGTERM is executing a clean shutdown of the NiFi process (./nifi.sh stop), or is it just killing the NiFi process id? If a graceful shutdown is happening, you would see that in the nifi-bootstrap.log.

The Graceful shutdown period is configurable by this property:
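The property name is missing from this copy of the thread. It is likely `nifi.flowcontroller.graceful.shutdown.period` in nifi.properties (default `10 sec`, which would match the 10 second grace period mentioned earlier in the thread); treat that name as an assumption and confirm it in your own file. On Kubernetes, the pod's `terminationGracePeriodSeconds` (30 seconds by default) bounds the time between SIGTERM and SIGKILL, so a rough sanity check is that it exceeds NiFi's own grace period. `parse_period` and `pod_grace_is_sufficient` are hypothetical helpers, the 10 second margin is arbitrary, and the unit table covers only a few spellings.

```python
import re

# Seconds per unit; only a handful of spellings are handled in this sketch.
UNIT_SECONDS = {
    "sec": 1, "secs": 1, "second": 1, "seconds": 1,
    "min": 60, "mins": 60, "minute": 60, "minutes": 60,
}


def parse_period(value):
    """Convert a period such as '10 sec' into a number of seconds."""
    match = re.fullmatch(r"\s*(\d+)\s*([A-Za-z]+)\s*", value)
    if not match or match.group(2).lower() not in UNIT_SECONDS:
        raise ValueError(f"unsupported period: {value!r}")
    return int(match.group(1)) * UNIT_SECONDS[match.group(2).lower()]


def pod_grace_is_sufficient(nifi_period, pod_grace_seconds=30, margin_seconds=10):
    """True if the pod grace period covers NiFi's shutdown period plus a margin."""
    return pod_grace_seconds >= parse_period(nifi_period) + margin_seconds
```

If the NiFi period is raised, the pod's grace period would need to be raised with it, otherwise Kubernetes may kill the container before NiFi finishes shutting down.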

Thank you,

Matt