NiFi MergeContent generating 2 output files instead of 1 file

Labels: Apache NiFi

Created on 09-28-2020 06:43 AM - edited 09-28-2020 06:43 AM

I'm using:

- GetDateandServer processor to fetch the file names,

- decompress the files,

- remove the header using an ExecuteStreamCommand processor

Command Arguments: 1d

Command Path: sed

Ignore STDIN: false

- MergeContent processor to merge the files

Merge Strategy: Bin-Packing Algorithm

Merge Format: Binary Concatenation

Merge data strategy: Do Not Merge Uncommon Metadata

Minimum Number of Entries: 180

Maximum Number of Entries: 1000

Minimum Group Size: 60GB

Max Bin Age: 5 min

Maximum Number of Bins: 1

- UpdateAttribute to create the file name, then compress the files

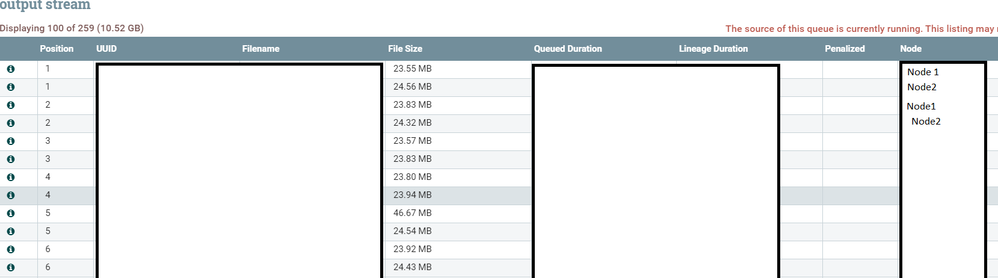

- PutHDFS to put the data into HDFS

My problem is that after the ExecuteStreamCommand processor is triggered, I can see two positions with the same value in the queue.

Every half hour I should get 1 output file, but I see 2 files as output. NiFi is running on 3 nodes, and the queue listing shows files on 2 of them. The files on node 1 are merged into one file and the files on node 2 are merged into a second file, so I get 2 files. Could you please let me know how to get one file? Thank you in advance for your help.
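The behaviour described above can be reproduced with a small toy model. In a NiFi cluster each node works only on the flowfiles it holds, so a merge step runs once per node that has data. The sketch below is plain Python, not NiFi code: the node names, the sample CSV content, and the functions `strip_header` and `merge_per_node` are made up for illustration.

```python
from collections import defaultdict


def strip_header(content: str) -> str:
    """Drop the first line of the content, like `sed 1d`."""
    _, _, rest = content.partition("\n")
    return rest


def merge_per_node(flowfiles):
    """Concatenate header-less contents separately for each node.

    flowfiles: iterable of (node_name, content) pairs.
    Returns a dict mapping node_name to its merged content.
    """
    bins = defaultdict(list)
    for node, content in flowfiles:
        bins[node].append(strip_header(content))
    return {node: "".join(parts) for node, parts in bins.items()}


# Hypothetical input: files ended up on two of the three nodes.
flowfiles = [
    ("node1", "id,value\n1,a\n"),
    ("node2", "id,value\n2,b\n"),
    ("node1", "id,value\n3,c\n"),
]

merged = merge_per_node(flowfiles)
print(len(merged))  # one merged output per node that held files
```

With files on two nodes the model yields two merged outputs, which matches the two files seen in HDFS.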

Note: I posted the same question previously, but I couldn't find it afterwards, so I am posting it again.

- @Nifi

Created 09-29-2020 12:06 PM

Hi @Sru111 ,

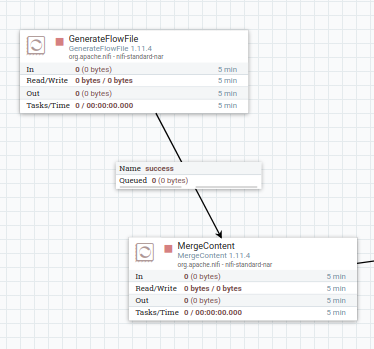

Consider the MergeContent processor in the picture as your MergeContent processor.

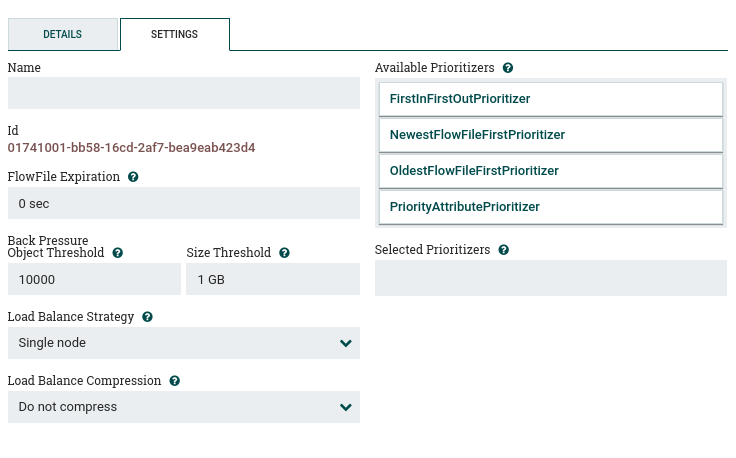

Configure the queue (here, 'success') that acts as the upstream queue for your MergeContent processor.

Set the load balance strategy to Single node; all the files will then be routed to only one of the nodes.

(Optional)

You can configure the downstream queue of the MergeContent processor with the Round Robin load balance strategy, so that the files are distributed among all the nodes in the cluster.
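A toy model of the two strategies may make the difference concrete. It is not NiFi code: the node names, the file names, and the functions `single_node` and `round_robin` are made up for illustration, and using the first node as the target is an arbitrary assumption (in NiFi the target node is not something you pick in this sketch's way).

```python
from itertools import cycle

NODES = ("node1", "node2", "node3")  # hypothetical cluster


def single_node(flowfiles, nodes=NODES):
    """Send every flowfile to one node (here simply the first one)."""
    target = nodes[0]
    return {n: (list(flowfiles) if n == target else []) for n in nodes}


def round_robin(flowfiles, nodes=NODES):
    """Deal flowfiles out to the nodes in turn."""
    assignment = {n: [] for n in nodes}
    for flowfile, node in zip(flowfiles, cycle(nodes)):
        assignment[node].append(flowfile)
    return assignment


files = [f"file{i}" for i in range(6)]

# Upstream of the merge: everything lands on one node, so one bin is filled.
before_merge = single_node(files)
nodes_with_data = [n for n, ffs in before_merge.items() if ffs]
print(nodes_with_data)

# Downstream of the merge: work is spread over the cluster again.
after_merge = round_robin(files)
print({n: len(ffs) for n, ffs in after_merge.items()})
```

Only one node holds data before the merge in this model, so only one merged output can be produced.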

Created on 09-30-2020 02:08 AM - edited 09-30-2020 04:58 AM

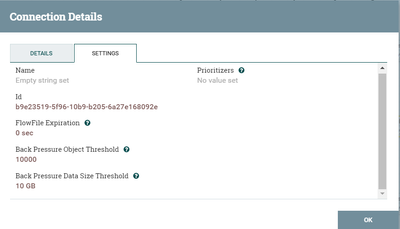

Thank you @PVVK for your solution. I am unable to see the load balance strategy option on the queue before the MergeContent processor, so I did the following: previously FetchSFTP was set to execute on all nodes, and I changed it to execute on the primary node. As a result, I am getting a single file now. Please correct me if I am wrong.

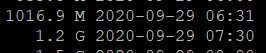

Also, there is a delay when loading data into HDFS with the PutHDFS processor. After compression, when the size changes from MB to GB, the file is loaded only after 1 hour.

Please find the screenshot below for your reference

Created on 09-30-2020 08:06 AM - edited 09-30-2020 08:08 AM

Hi @Sru111,

Setting the FetchSFTP processor to run on primary node is fine.

However, if the same processor has to fetch multiple files from SFTP, the second file is fetched only after the first one, and so on for the rest. With all 3 nodes running the processor, the files can be fetched simultaneously, so using all 3 nodes is preferred for the FetchSFTP processor.

May I know which version of NiFi you are using? I believe the load balance strategy was introduced in 1.8.0 (not sure), but it started working correctly in version 1.11.4.

Regarding PutHDFS, I don't have a clue about it! Sorry!

Created on 09-30-2020 08:55 AM - edited 09-30-2020 08:59 AM

Thank you @PVVK.

My NiFi version is 1.5.0.

If I set FetchSFTP to execute on all nodes, I sometimes get duplicate files fetched by different nodes.
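One plausible way such duplicates arise (an assumption, since the part of the flow that feeds FetchSFTP is not shown in full) is that every node builds the same list of file names and then fetches all of them. The sketch below contrasts that with building the list once and dealing the names out. It is a plain Python model, not NiFi code; the node names, file names, and function names are made up.

```python
from collections import Counter

NODES = ["node1", "node2", "node3"]               # hypothetical cluster
REMOTE_FILES = ["a.gz", "b.gz", "c.gz", "d.gz"]   # made-up file names


def every_node_lists(nodes, remote_files):
    """Each node builds the full list itself and fetches all of it."""
    return [(node, name) for node in nodes for name in remote_files]


def list_once_then_distribute(nodes, remote_files):
    """One node builds the list; names are dealt out so each is fetched once."""
    return [(nodes[i % len(nodes)], name) for i, name in enumerate(remote_files)]


def fetch_counts(fetches):
    """Count how many times each file name was fetched across the cluster."""
    return Counter(name for _, name in fetches)


dup = fetch_counts(every_node_lists(NODES, REMOTE_FILES))
once = fetch_counts(list_once_then_distribute(NODES, REMOTE_FILES))
print(max(dup.values()), max(once.values()))
```

In the first variant every file is fetched once per node; in the second each file is fetched exactly once while the fetch work is still spread over the nodes.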

Created 09-30-2020 09:27 AM

@Sru111,

If possible, please update your NiFi version to 1.11.4 or above; the load balancing option is available there. Otherwise, stick to your plan of running the FetchSFTP processor on the primary node only. You can still distribute the files in your NiFi version using Remote Process Groups, but the flow becomes really complex with that.