Nifi bash scripts execution and output

- Labels: Apache NiFi

Created on 10-10-2017 10:19 AM - edited 08-17-2019 09:10 PM

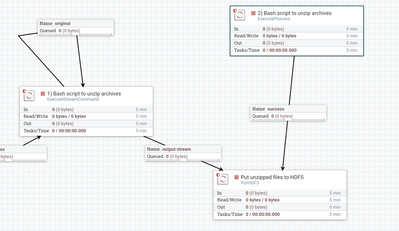

I have a directory of zip archives on the local filesystem of the NiFi server, and I would like to create a flow that unzips these archives with a bash script and then puts the extracted files in HDFS.

The problem is that I cannot route the output of the bash script to the PutHDFS processor correctly, so that it picks up the unzipped files.

1) With the ExecuteStreamCommand processor I have two options for the outgoing flow: the original relationship, which contains the initial zipped archive, and the output stream relationship, which should be what I am looking for but only transfers an empty file with the same name as the original. How should this processor be configured when it runs a bash script/command so that its output correctly contains the files the script/command produces?

2) With the ExecuteProcess processor there is only a success/failure relationship, and this does not help either in passing the outgoing flow to the PutHDFS processor so that the unzipped files are moved to HDFS.

Any help would be greatly appreciated!

Created 10-11-2017 06:20 AM

Why not use UnpackContent or CompressContent instead of ExecuteProcess?

- https://nifi.apache.org/docs/nifi-docs/components/org.apache.nifi/nifi-standard-nar/1.4.0/org.apache...

- https://nifi.apache.org/docs/nifi-docs/components/org.apache.nifi/nifi-standard-nar/1.4.0/org.apache...

Does UnpackContent suit your needs?

Created 10-12-2017 09:05 AM

CompressContent works fine for gzip archives. Thanks a lot, I'm still exploring what the NiFi processors can do.

Created 10-11-2017 06:22 PM

@Foivos A The output stream relationship of ExecuteStreamCommand contains the stdout of the executed command.

Unless your script ends with cat <unzipped_file>, you won't see anything on that relationship. And of course this only works if the archive unpacks to a single file.
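For illustration, a script along those lines could look like the sketch below. It is only a rough example, not the script I actually used: the function name `emit_single_file` is made up, and it assumes the archive path is passed as the first argument (for example through the processor's command arguments) and that `unzip` is installed on the NiFi host.

```bash
#!/usr/bin/env bash
# Hypothetical script for ExecuteStreamCommand: extract an archive that holds
# exactly one file and write that file's bytes to stdout.
set -eu

emit_single_file() {
  local archive="$1"   # assumed: path to the zip archive
  local workdir count
  workdir="$(mktemp -d)"

  # -q keeps unzip quiet; anything it still prints is sent to stderr so that
  # stdout carries nothing but the file content.
  unzip -q -o "$archive" -d "$workdir" >&2

  # Refuse anything other than exactly one extracted file.
  count=$(( $(find "$workdir" -type f | wc -l) ))
  if [ "$count" -ne 1 ]; then
    echo "expected 1 file, found $count" >&2
    rm -rf "$workdir"
    return 1
  fi

  # Whatever is written here ends up as the outgoing FlowFile content.
  cat "$(find "$workdir" -type f)"
  rm -rf "$workdir"
}

# Only run when an archive path was actually supplied.
if [ "$#" -ge 1 ]; then
  emit_single_file "$1"
fi
```

The important part is that stdout stays clean: any progress message printed there would end up inside the FlowFile. If the archive is known to hold a single file, `unzip -p` may be a shorter way to get the same result.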

The way I did this was to have the script echo the names of the extracted local files at the end, one per line. That output goes to the output stream relationship, and from there you can use SplitText to split it by line, followed by FetchFile -> PutHDFS.
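A minimal sketch of such a script is below. Again, this is not my exact script: `list_extracted_files` is a made-up name, and it assumes the archive path and an absolute destination directory are passed as arguments and that `unzip` is available.

```bash
#!/usr/bin/env bash
# Hypothetical script for ExecuteStreamCommand: extract an archive and print
# the path of every extracted file on stdout, one per line.
set -eu

list_extracted_files() {
  local archive="$1"   # assumed: path to the zip archive
  local dest="$2"      # assumed: absolute base directory for extracted files
  local target

  # One subdirectory per archive, so the listing never picks up files that
  # came from a different archive.
  target="$dest/$(basename "$archive" .zip)"
  mkdir -p "$target"

  # Keep stdout clean: only the file names should reach the FlowFile.
  unzip -q -o "$archive" -d "$target" >&2

  # One path per line; SplitText can then cut the output line by line.
  find "$target" -type f | sort
}

# Only run when both arguments were actually supplied.
if [ "$#" -ge 2 ]; then
  list_extracted_files "$1" "$2"
fi
```

After SplitText each FlowFile carries one path as its content. As far as I know FetchFile reads the path through its File to Fetch property, so the line probably has to be copied into an attribute first (for example with ExtractText). File names that contain newlines would break the one-per-line convention.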

If you're still interested, I can share my flow and the scripts. But as Abdelkrim mentioned, UnpackContent should do the job, even for very large files: UnpackContent followed by PutHDFS streams the content, so it will not affect the NiFi heap.

Created 10-12-2017 02:25 PM

Hi @Alexandru Anghel, I've uploaded a new question with my whole use case and logic here.

Any help is really appreciated!