Oozie work flow not finding main class

Labels: Apache Oozie

Created on 04-12-2022 08:47 AM - edited 04-12-2022 08:49 AM

Hello Community,

I am going through this tutorial https://www.cloudera.com/tutorials/setting-up-a-spark-development-environment-with-java.html and I am trying to submit it through an Oozie workflow. In Hue, I go to Query > Scheduler > Workflow, drop a Java program into the actions, upload the jar file, and set the main class to Hortonworks.SparkTutorial.Main. When I run the Oozie workflow job, I keep getting this error: "Caused by: java.lang.ClassNotFoundException: Class Hortonwork.SparkTutorial.Main not found". I'm using IntelliJ for this project; when I hold down Ctrl and hover over Main, it takes me to the MANIFEST.MF file, which says Main-Class: Hortonworks.SparkTutorial.Main, so I feel like I'm getting the main class definition right. I cannot figure out why it says it can't find my class.

Created 04-13-2022 02:03 AM

Hi @jarededrake, the "ClassNotFoundException: Class Hortonwork.SparkTutorial.Main not found" error suggests that the package name of the Java program's main class has a typo in your workflow definition: Hortonwork should be Hortonworks. Can you check that?
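To illustrate why a single missing letter matters: the JVM looks classes up by their exact, case-sensitive fully qualified name. The minimal sketch below is not part of the tutorial; it uses JDK classes instead of Hortonworks.SparkTutorial.Main so that it runs without the tutorial jar, and the ClassNameCheck class is a hypothetical name chosen for illustration.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: shows that class lookup needs the exact fully qualified name.
public class ClassNameCheck {

    // Returns true if the JVM can find a class with exactly this fully qualified name.
    static boolean canLoad(String fullyQualifiedName) {
        try {
            // initialize = false: only locate the class, do not run its static initializers
            Class.forName(fullyQualifiedName, false, ClassNameCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<String> candidates = Arrays.asList(
                "java.util.concurrent.ConcurrentHashMap", // correct package and class name
                "java.util.concurent.ConcurrentHashMap",  // one letter missing in a package segment
                "ConcurrentHashMap");                     // simple name only, no package
        for (String name : candidates) {
            String result = canLoad(name) ? "found" : "ClassNotFoundException";
            System.out.println(name + " -> " + result);
        }
    }
}
```

The same rule applies to the value typed into the workflow's main class field: it has to match the package and class name compiled into the jar, letter for letter.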

Created 04-14-2022 08:18 AM

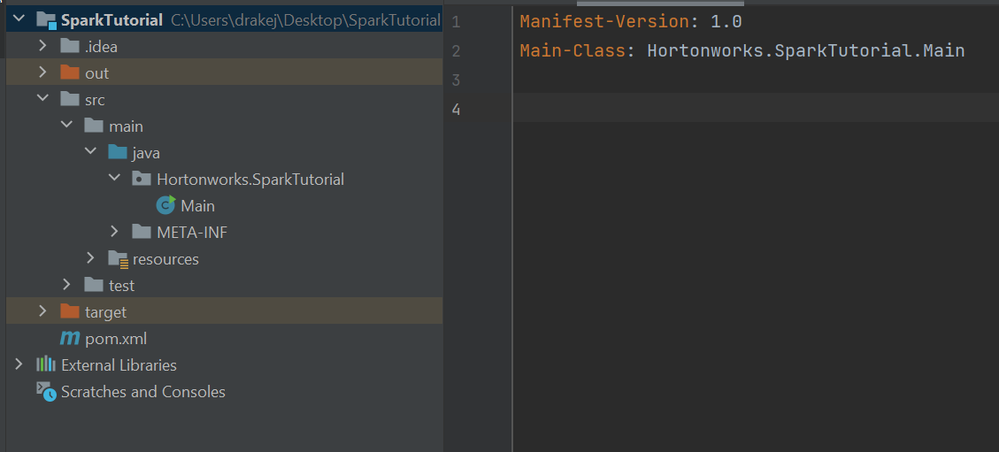

@mszurap You were right, I didn't have the "s" at the end 😐. However, I am still getting the problem in Oozie. I've added a screenshot so you can see the structure of my program; you can also see that the MANIFEST.MF file has my main class as Hortonworks.SparkTutorial.Main.

Created 04-14-2022 09:12 AM

I see. Have you verified that the built jar contains this package structure and these class names? Can you also show where the jar is uploaded and how it is referenced in the Oozie workflow?

Thanks, Miklos
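One way to do that verification without IntelliJ is to read the jar directly. A minimal sketch, assuming only a Java 8+ JDK: the hypothetical JarCheck class below reads the Main-Class header from the jar's MANIFEST.MF and checks whether the matching .class entry exists, roughly combining "jar tf" with a look at the manifest.

```java
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

// Hypothetical helper: does the jar contain the class named in its own Main-Class header?
public class JarCheck {

    // Maps a fully qualified class name to its entry path inside a jar,
    // e.g. "a.b.Main" -> "a/b/Main.class" (nested classes keep their '$').
    static String entryFor(String fullyQualifiedName) {
        return fullyQualifiedName.replace('.', '/') + ".class";
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java JarCheck <path-to-jar>");
            System.exit(2);
        }
        try (JarFile jar = new JarFile(args[0])) {
            Manifest manifest = jar.getManifest(); // null if the jar has no MANIFEST.MF
            String mainClass = (manifest == null)
                    ? null
                    : manifest.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
            System.out.println("Main-Class: " + mainClass);
            if (mainClass != null) {
                String entry = entryFor(mainClass.trim());
                boolean present = jar.getJarEntry(entry) != null;
                System.out.println(entry + (present ? " is present" : " is MISSING"));
            }
        }
    }
}
```

For example: java JarCheck C:\Users\drakej\Desktop\SparkTutorial\target\SparkTutorial-1.0-SNAPSHOT.jar. Note that the manifest IntelliJ shows in the source tree is not necessarily the one packaged into every jar that gets built.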

Created 04-15-2022 08:11 AM

Yes, I can. I will get those screenshots to you.

Created 04-15-2022 08:29 AM

So this is interesting: I run "mvn package" to package my application into a jar, and I get two different jars. One is SparkTutorial.jar; when I look at the contents of that jar file, I only see my dependencies, not my main class.

I run: jar tf C:\Users\drakej\Desktop\SparkTutorial\out\artifacts\SparkTutorial_jar\SparkTutorial.jar

A sample of the output is below; it only lists my dependencies:

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyRequestProto$Builder.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyRequestProto.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyRequestProtoOrBuilder.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyResponseProto$1.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyResponseProto$Builder.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyResponseProto.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos$TransitionToStandbyResponseProtoOrBuilder.class

org/apache/hadoop/ha/proto/HAServiceProtocolProtos.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$1.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveRequestProto$1.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveRequestProto$Builder.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveRequestProto.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveRequestProtoOrBuilder.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveResponseProto$1.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveResponseProto$Builder.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveResponseProto.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$CedeActiveResponseProtoOrBuilder.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$GracefulFailoverRequestProto$1.class

org/apache/hadoop/ha/proto/ZKFCProtocolProtos$GracefulFailoverRequestProto$Builder.class

The second jar file that gets built is SparkTutorial-1.0-SNAPSHOT.jar. I run:

jar tf C:\Users\drakej\Desktop\SparkTutorial\target\SparkTutorial-1.0-SNAPSHOT.jar

That jar does list my class; however, when I run this jar file in Oozie, I get the error:

org.apache.oozie.action.hadoop.JavaMainException: java.lang.NoClassDefFoundError: org/apache/spark/SparkConf

So one jar has my dependencies and the other jar has my class, but they are not together.
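The situation described above can be summarized as a check on the "jar tf" output. The sketch below is hypothetical: the JarDiagnosis name and its result strings are made up for illustration, the sample entries are abbreviated from the listings posted in this thread, and org/apache/spark/SparkConf.class is assumed to be the jar entry behind the NoClassDefFoundError for org/apache/spark/SparkConf.

```java
import java.util.Arrays;
import java.util.Collection;

// Hypothetical helper: classify a jar by what "jar tf" says it contains.
public class JarDiagnosis {

    // Entry names as they appear in "jar tf" output (slash-separated, ending in .class).
    static final String APP_MAIN = "Hortonworks/SparkTutorial/Main.class";
    static final String SPARK_CONF = "org/apache/spark/SparkConf.class";

    // Reports whether the jar bundles the app's main class and Spark's SparkConf.
    static String diagnose(Collection<String> entries) {
        boolean hasApp = entries.contains(APP_MAIN);
        boolean hasSpark = entries.contains(SPARK_CONF);
        if (!hasApp) {
            return "main class missing";
        }
        if (!hasSpark) {
            // Works only if the launcher puts Spark on the classpath;
            // otherwise loading SparkConf fails with NoClassDefFoundError.
            return "thin jar: SparkConf must come from the runtime classpath";
        }
        return "fat jar: main class and SparkConf both bundled";
    }

    public static void main(String[] args) {
        // Abbreviated from the SparkTutorial.jar listing (out/artifacts): dependencies only.
        Collection<String> artifactJar = Arrays.asList(
                "org/apache/hadoop/ha/proto/HAServiceProtocolProtos.class",
                "org/apache/hadoop/ha/proto/ZKFCProtocolProtos$1.class");
        // Abbreviated from the SparkTutorial-1.0-SNAPSHOT.jar listing (target): app classes only.
        Collection<String> targetJar = Arrays.asList(
                "META-INF/MANIFEST.MF",
                "Hortonworks/SparkTutorial/Main.class",
                "Main.class");
        System.out.println("SparkTutorial.jar: " + diagnose(artifactJar));
        System.out.println("SparkTutorial-1.0-SNAPSHOT.jar: " + diagnose(targetJar));
    }
}
```

A thin jar is not wrong by itself; it only needs a launcher that supplies the Spark classes at runtime.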

Created 04-15-2022 08:31 AM

I forgot to add the output of:

jar tf C:\Users\drakej\Desktop\SparkTutorial\target\SparkTutorial-1.0-SNAPSHOT.jar

META-INF/

META-INF/MANIFEST.MF

Hortonworks/

Hortonworks/SparkTutorial/

code.txt

Hortonworks/SparkTutorial/Main.class

Main.class

replacementValues.properties

shakespeareText.txt

ULAN-Test-IPSummary.csv

ULAN-Test-IPSummary.txt

META-INF/maven/

META-INF/maven/hortonworks/

META-INF/maven/hortonworks/SparkTutorial/

META-INF/maven/hortonworks/SparkTutorial/pom.xml

META-INF/maven/hortonworks/SparkTutorial/pom.properties

Created 04-26-2022 05:09 AM

Hi @jarededrake , sorry for the delay, I was away for a couple of days.

You should use your thin jar (application only, without the dependencies) from the target directory ("SparkTutorial-1.0-SNAPSHOT.jar"). The NoClassDefFoundError for SparkConf suggests that you tried a Java action. When running a Spark application, it is highly recommended to use a Spark action in the Oozie workflow editor, to make sure that the environment is set up properly for the application.

Created 04-26-2022 09:14 AM

Hey @mszurap, no problem at all, I totally understand. So I ran my "SparkTutorial-1.0-SNAPSHOT.jar" from Hue > Query > Scheduler > Workflow: I dragged the Spark action into the workflow and filled out the inputs like this:

Jar/py name: SparkTutorial-1.0-SNAPSHOT.jar

Main class: Main

Files + : /user/zzmdrakej2/SparkTutorial-1.0-SNAPSHOT.jar

I then got the following error. It's different, so I guess that's a good sign, right?

Failing Oozie Launcher, Main class [org.apache.oozie.action.hadoop.SparkMain], main() threw exception, Delegation Token can be issued only with kerberos or web authentication

Created 04-27-2022 12:54 AM

Hi @jarededrake, you're on a good track. The remaining issue seems to be that the cluster has Kerberos enabled, which needs some extra configuration.

In the workflow editor, in the upper right corner of the Spark action, you will find a cogwheel icon for advanced settings. There, on the Credentials tab, enable the "hcat" and "hbase" credentials to let the Spark client obtain delegation tokens for the Hive (Hive metastore) and HBase services, in case the Spark application wants to use those services (Spark does not know this in advance, so it obtains those delegation tokens up front). You can also disable this behavior if you are sure that the Spark application will not connect to Hive (using Spark SQL) or HBase; just add the following to the Spark action's options list:

--conf spark.security.credentials.hadoopfs.enabled=false --conf spark.security.credentials.hbase.enabled=false --conf spark.security.credentials.hive.enabled=false

But it's easier to just enable these credentials on the settings page.

For similar Kerberos related issues in other actions, please see the following guide:

https://gethue.com/hadoop-tutorial-oozie-workflow-credentials-with-a-hive-action-with-kerberos/
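If you generate workflow definitions from code, the options string quoted in the reply above can be assembled rather than typed by hand. The minimal sketch below assumes nothing beyond the JDK; CredentialOptions is a hypothetical name, and the spark.security.credentials.<service>.enabled pattern is taken from that reply. Only disable a service's credentials if you are sure the application never uses that service.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: builds "--conf" options that turn off delegation-token
// retrieval for the named services (pattern taken from the thread).
public class CredentialOptions {

    static String disableCredentials(List<String> services) {
        return services.stream()
                .map(s -> "--conf spark.security.credentials." + s + ".enabled=false")
                .collect(Collectors.joining(" "));
    }

    public static void main(String[] args) {
        // Same three services as the option list in the reply above.
        System.out.println(disableCredentials(Arrays.asList("hadoopfs", "hbase", "hive")));
    }
}
```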