Support Questions

- Cloudera Community

- Support

- Support Questions

- PutEmail processor dying frequently

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

PutEmail processor dying frequently

- Labels:

-

Apache NiFi

Created 02-18-2022 08:46 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

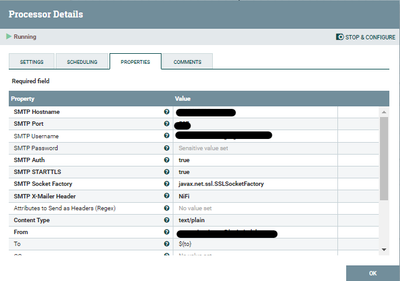

In my process I'm triggering some emails at certain points. My problem is that the PutEmail processor just keep dying frequently (even two or three times per day)!!!

Nifi Version 1.14.0

When processor dyes I have to terminate and restart it. This is the dump of the last crash:

"Timer-Driven Process Thread-1" Id=68 RUNNABLE (in native code)

at java.base@11.0.2/java.net.SocketInputStream.socketRead0(Native Method)

at java.base@11.0.2/java.net.SocketInputStream.socketRead(SocketInputStream.java:115)

at java.base@11.0.2/java.net.SocketInputStream.read(SocketInputStream.java:168)

at java.base@11.0.2/java.net.SocketInputStream.read(SocketInputStream.java:140)

at com.sun.mail.util.TraceInputStream.read(TraceInputStream.java:102)

at java.base@11.0.2/java.io.BufferedInputStream.fill(BufferedInputStream.java:252)

at java.base@11.0.2/java.io.BufferedInputStream.read(BufferedInputStream.java:271)

- waiting on java.io.BufferedInputStream@40399b64

at com.sun.mail.util.LineInputStream.readLine(LineInputStream.java:100)

at com.sun.mail.smtp.SMTPTransport.readServerResponse(SMTPTransport.java:2456)

at com.sun.mail.smtp.SMTPTransport.openServer(SMTPTransport.java:2172)

at com.sun.mail.smtp.SMTPTransport.protocolConnect(SMTPTransport.java:722)

- waiting on com.sun.mail.smtp.SMTPTransport@4fa5e86a

at jakarta.mail.Service.connect(Service.java:364)

- waiting on com.sun.mail.smtp.SMTPTransport@4fa5e86a

at jakarta.mail.Service.connect(Service.java:222)

at jakarta.mail.Service.connect(Service.java:171)

at jakarta.mail.Transport.send0(Transport.java:230)

at jakarta.mail.Transport.send(Transport.java:100)

at org.apache.nifi.processors.standard.PutEmail.send(PutEmail.java:524)

at org.apache.nifi.processors.standard.PutEmail.onTrigger(PutEmail.java:392)

at app//org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27)

at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1202)

at org.apache.nifi.controller.tasks.ConnectableTask.invoke(ConnectableTask.java:214)

at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run (TimerDrivenSchedulingAgent.java:103)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.base@11.0.2/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)

at java.base@11.0.2/java.util.concurrent.FutureTask.runAndReset(FutureTask.java:305)

at java.base@11.0.2/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run (ScheduledThreadPoolExecutor.java:305)

at java.base@11.0.2/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base@11.0.2/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base@11.0.2/java.lang.Thread.run(Thread.java:834)

Number of Locked Synchronizers: 1

- java.util.concurrent.ThreadPoolExecutor$Worker@39b265c9

"Timer-Driven Process Thread-10 <Terminated Task>" Id=92 RUNNABLE (in native code)

at java.base@11.0.2/java.net.SocketInputStream.socketRead0(Native Method)

at java.base@11.0.2/java.net.SocketInputStream.socketRead(SocketInputStream.java:115)

at java.base@11.0.2/java.net.SocketInputStream.read(SocketInputStream.java:168)

at java.base@11.0.2/java.net.SocketInputStream.read(SocketInputStream.java:140)

at com.sun.mail.util.TraceInputStream.read(TraceInputStream.java:102)

at java.base@11.0.2/java.io.BufferedInputStream.fill(BufferedInputStream.java:252)

at java.base@11.0.2/java.io.BufferedInputStream.read(BufferedInputStream.java:271)

- waiting on java.io.BufferedInputStream@7fe5124a

at com.sun.mail.util.LineInputStream.readLine(LineInputStream.java:100)

at com.sun.mail.smtp.SMTPTransport.readServerResponse(SMTPTransport.java:2456)

at com.sun.mail.smtp.SMTPTransport.openServer(SMTPTransport.java:2172)

at com.sun.mail.smtp.SMTPTransport.protocolConnect(SMTPTransport.java:722)

- waiting on com.sun.mail.smtp.SMTPTransport@17e56d13

at jakarta.mail.Service.connect(Service.java:364)

- waiting on com.sun.mail.smtp.SMTPTransport@17e56d13

at jakarta.mail.Service.connect(Service.java:222)

at jakarta.mail.Service.connect(Service.java:171)

at jakarta.mail.Transport.send0(Transport.java:230)

at jakarta.mail.Transport.send(Transport.java:100)

at org.apache.nifi.processors.standard.PutEmail.send(PutEmail.java:524)

at org.apache.nifi.processors.standard.PutEmail.onTrigger(PutEmail.java:392)

at app//org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27)

at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1202)

at org.apache.nifi.controller.tasks.ConnectableTask.invoke(ConnectableTask.java:214)

at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run (TimerDrivenSchedulingAgent.java:103)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.base@11.0.2/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)

at java.base@11.0.2/java.util.concurrent.FutureTask.runAndReset(FutureTask.java:305)

at java.base@11.0.2/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:305)

at java.base@11.0.2/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base@11.0.2/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base@11.0.2/java.lang.Thread.run(Thread.java:834)

Number of Locked Synchronizers: 1

- java.util.concurrent.ThreadPoolExecutor$Worker@176d79dc

I can find the reason (and the corrective action to take) why is this happening as the remain processors works fine. I'll appreciate your help pointing me in the right direction.

Created on 03-03-2022 04:19 PM - edited 03-03-2022 04:20 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @templarian ,

From your log I can see that thread 13 got stuck right after this line:

2022-03-02 16:31:37,479 DEBUG [Timer-Driven Process Thread 13 ] jakarta.mail getProvider() returning jakarta.mail.Provider[TRANSPORT,smtp,com.sun.mail.smtp.SMTPTransport,Oracle]

Looking at other threads, the line that should've come right after that would be one like this:

com.sun.mail.smtp connected to host "smtp.office365.com", port: 587

It seems to me that the thread got stuck during the attempt to connect to the SMTP server. I was able to reproduce this locally and confirmed that after the socked connection is established, if the server response is lost for some reason (or if the server doesn't respond at all) the processor thread gets stuck forever.

Apparently this is kind of by design, since the default value of the mail.smtp.timeout property is infinite, as you can see here.

I had a look at the PutEmail processor source code and unfortunately I don't think there is a way currently to set this in the processor configuration.

I'd suggest that you open a NiFi Jira in https://issues.apache.org/ asking for the configurable timeout to be added. If you have support with Cloudera, please open a ticket and we should be able to help with that.

Cheers,

André

--

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 02-18-2022 11:11 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Are there any messages in nifi-app.log related to the processor around the time of the crash?

It might help enabling DEBUG for NiFi and the processor and trying to reproduce the problem. Please share the debug logs if you manage to get them.

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 02-23-2022 02:54 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi @araujo,

Thanks for your reply.

I can't see anything relevant on nifi-app or in the PutEmail Debug.

Following logs are from an event that happened earlier today were 3 flow files were enqueued. I terminate/start the PutEmail processor and just 2 flow files were processed before the processor dyed again. I repeated the terminate/start action and the processor have been working without problem since then.

PutEmail Debug

Sent email as a result of receiving StandardFlowFileRecord[uuid=41e2361c-a94f-4b4c-b8d3-bdb558c92ae4,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1645643212828-491, container=default, section=491], offset=105253, length=104579],offset=0, name=33_76209474-6_41326.pdf, size=104579]

Sent email as a result of receiving StandardFlowFileRecord[uuid=e05651dd-a507-4873-a291-fc840ce60b89,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1645643170184-490, container=default, section=490], offset=511202, length=118027],offset=0,name=34_76209474-6_45376.pdf, size=118027] <the processor just dyed, no goodbye, no error, no nothing!!>

[Terminated Process] - DEBUG - Reloading component 8d061908-32f1-1b27-7653-ff7e9404c34a to type org.apache.nifi.processors.standard.PutEmail from bundle org.apache.nifi:nifi-standard-nar:1.14.0

Sent email as a result of receiving StandardFlowFileRecord[uuid=a3ec8b22-850a-4788-b191-cb1faef454f9,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1645643289194-492, container=default, section=492], offset=868, length=103410],offset=0,name=33_83574800-6_41327.pdf, size=103410]

Sent email as a result of receiving StandardFlowFileRecord[uuid=e43076fc-5baa-4d3b-8f50-e198524dff93,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1645643289194-492, container=default, section=492], offset=104278, length=116147],offset=0,name=34_83574800-6_45377.pdf, size=116147]

[Terminated Process] - DEBUG - Reloading component 8d061908-32f1-1b27-7653-ff7e9404c34a to type org.apache.nifi.processors.standard.PutEmail from bundle org.apache.nifi:nifi-standard-nar:1.14.0

Sent email as a result of receiving StandardFlowFileRecord[uuid=4478a2ce-e9f6-4bd5-b63b-6549531e697a,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1645641281073-449, container=default, section=449], offset=127565, length=102849],offset=0,name=33_79921700-7_41302.pdf, size=102849]

Sent email as a result of receiving StandardFlowFileRecord[uuid=880053b8-336b-4ceb-

a7eb-371118014b1b,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1645643289319-493, container=default, section=493], offset=620752, length=99647],offset=0,name= 2022-02-23T16_28_31_T34F8251_76450608-1.pdf, size=99647]

nifi-app

Does not exist any different message in the log while the event happened

INFO [Cleanup Archive for default] o.a.n.c.repository.FileSystemRepository Successfully deleted 0 files (0 bytes) from archive

INFO [pool-10-thread-1] o.a.n.c.r.WriteAheadFlowFileRepository Initiating checkpoint of FlowFile Repository

INFO [pool-10-thread-1] o.a.n.c.r.WriteAheadFlowFileRepository Successfully checkpointed FlowFile Repository with 124 records in 0 milliseconds

INFO [pool-10-thread-1] o.a.n.c.r.WriteAheadFlowFileRepository Initiating checkpoint of FlowFile Repository

INFO [pool-10-thread-1] o.a.n.c.r.WriteAheadFlowFileRepository Successfully checkpointed FlowFile Repository with 124 records in 0 milliseconds

INFO [Write-Ahead Local State Provider Maintenance] org.wali.MinimalLockingWriteAheadLog org.wali.MinimalLockingWriteAheadLog@5a5cd0af checkpointed with 334 Records and 0 Swap Files in 55 milliseconds (Stop-the-world time = 24 milliseconds, Clear Edit Logs time = 24 millis), max Transaction ID 38437

Thanks

Created 02-23-2022 03:15 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Would you be able to bump your NiFi log level to DEBUG and restart the service?

Your nifi-app.log only seems to have INFO level messages.

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 03-01-2022 08:16 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

After month close, I have an approved manteinance window for tomorrow night.

I tried to enable debug in in test instance, changing the root tag in the logback.xml

<root level="DEBUG">

<appender-ref ref="APP_FILE" />

</root>

But I got several hundreds of Mb in the log files in a few minutes. As I don't when the issue will appear; does exist some way to increase the log level in the nifi-app, but only for the PutEmail processor or its associate classes? I can see that related clasess to PutEmail are (until I can see):

com.sun.mail.smtp

jakarta.activation

com.sun.mail.smtp

jakarta.mail

thanks

Created 03-01-2022 07:00 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @templarian ,

Instead of changing the root log level, you can modify it for a single class or package using the following entry (one for each class/package):

<logger name="com.sun.mail.smtp" level="DEBUG" additivity="false">

<appender-ref ref="APP_FILE"/>

</logger>

Also note that if your configuration file has configuration scan enabled with the following, NiFi will scan the logback.xml file at the specified interval and if there are changes to it it will reload the configuration without the need for a restart:

# beggining of file

<configuration scan="true" scanPeriod="30 seconds">

...

</configuration>

# end of file

Cheers,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 03-03-2022 05:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @araujo ,

Here you can find the nifi-app.log in Debug mode.

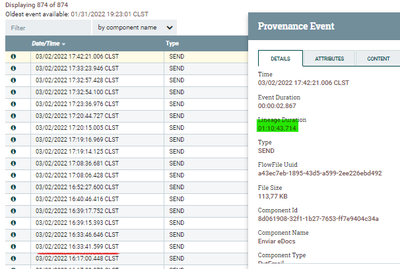

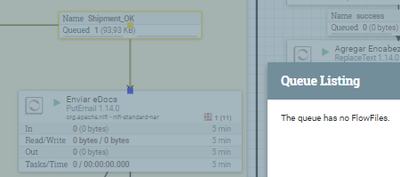

This is the flowfile that was enqueue (just one flowfile when I checked)

In the logs appears that the processor received the flowfile at 16:31:37,479 but almost instantly the process dyed -without clear reason- , and the mail only was send until 17:42:20,913 after I terminated/restarted the processor. The dump did not show anything different from my first post.

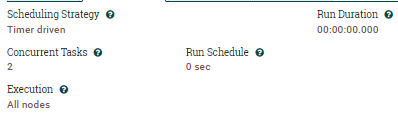

As I can see, in the mean time the other Processor's task (Concurrent tasks = 2) continue working without problem.

Also I noticed that while I was attempting to terminate the Processor the queue was appearing with one flow file enqueued, but when I tried to list the queue I got the message "The queue has no FlowFiles". I don't know if this is the normal behavior.

Thanks

Created on 03-03-2022 04:19 PM - edited 03-03-2022 04:20 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi, @templarian ,

From your log I can see that thread 13 got stuck right after this line:

2022-03-02 16:31:37,479 DEBUG [Timer-Driven Process Thread 13 ] jakarta.mail getProvider() returning jakarta.mail.Provider[TRANSPORT,smtp,com.sun.mail.smtp.SMTPTransport,Oracle]

Looking at other threads, the line that should've come right after that would be one like this:

com.sun.mail.smtp connected to host "smtp.office365.com", port: 587

It seems to me that the thread got stuck during the attempt to connect to the SMTP server. I was able to reproduce this locally and confirmed that after the socked connection is established, if the server response is lost for some reason (or if the server doesn't respond at all) the processor thread gets stuck forever.

Apparently this is kind of by design, since the default value of the mail.smtp.timeout property is infinite, as you can see here.

I had a look at the PutEmail processor source code and unfortunately I don't think there is a way currently to set this in the processor configuration.

I'd suggest that you open a NiFi Jira in https://issues.apache.org/ asking for the configurable timeout to be added. If you have support with Cloudera, please open a ticket and we should be able to help with that.

Cheers,

André

--

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 03-03-2022 06:07 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @araujo ,

It is major head ache for me your findings as this is a crucial step in the process. But, at least I know what is happening. I will try to use another smtp server to check if the problem decreases.

Unfortunately we don't have Cloudera support. I just opened the issue NIFI-9758

Thanks a lot for your help.

Regards,

Javier