PutSplunk processor and Splunk group multiple syslog jsons into one event

Created on 01-01-2017 07:54 PM - edited 08-19-2019 04:49 AM

Hi,

I'm using the PutSplunk processor to send syslog messages in JSON format to a Splunk server.

On the Splunk side, however, I see multiple JSON documents grouped into one event.

How can I configure PutSplunk and the Splunk server so that each JSON document appears as its own event?

Regards,

Wendell

Created 01-03-2017 04:06 PM

Make sure you split the data with the SplitJson processor in NiFi before sending it to Splunk. The syslog receiver may bundle incoming messages depending on the network setup, and it knows nothing about the actual data format, such as JSON.

Created 01-03-2017 04:06 PM

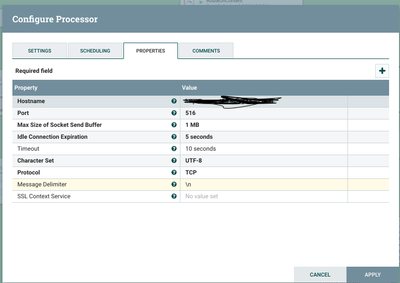

PutSplunk has two modes of operation: it can send the entire content of a flow file as a single message, or it can stream the content of a flow file and split it into messages on a delimiter. It chooses between these modes based on whether the "Message Delimiter" property is set in PutSplunk.

In your case, I am assuming you have multiple JSON documents in a flow file, so you probably want to set "Message Delimiter" to whatever separates them, most likely \n.
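To make the difference between the two modes concrete, here is a minimal Python sketch. It is not PutSplunk's actual implementation; the function name `frame_messages` and the sample documents are made up for illustration, and the choice to re-append the delimiter to each message is an assumption of this sketch.

```python
import json
from typing import List, Optional


def frame_messages(content: bytes, delimiter: Optional[str] = None) -> List[bytes]:
    """Return the messages this sketch would send for one flow file's content.

    Hypothetical helper: it only mimics the idea of "one message per flow
    file" versus "one message per delimited chunk".
    """
    if not delimiter:
        # No delimiter configured: the whole content goes out as one message.
        return [content]
    delim = delimiter.encode("utf-8")
    # Delimiter configured: split the content, drop empty chunks, and
    # re-append the delimiter so the receiver can see each boundary.
    return [part + delim for part in content.split(delim) if part.strip()]


# Two made-up JSON documents, newline-separated, in a single flow file.
docs = [{"host": "a", "msg": "one"}, {"host": "b", "msg": "two"}]
content = "\n".join(json.dumps(d) for d in docs).encode("utf-8")

whole = frame_messages(content)        # delimiter unset
split = frame_messages(content, "\n")  # delimiter set to newline

print(len(whole), len(split))
```

With the delimiter unset, both documents travel as one message; with it set to a newline, each document becomes its own newline-terminated message.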

Created 01-03-2017 04:22 PM

Hi,

Actually, the flow file in the queue before PutSplunk contains only one JSON document.

For some reason Splunk groups them together. If I choose a different JSON type (no timestamp) for the data in Splunk, then each JSON document becomes its own event. Still, @Bryan Bende's "Message Delimiter" is worth adding.

Regards,

Wendell
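For readers who hit the same grouping on the Splunk side, a configuration sketch along these lines may help. It assumes each JSON document arrives terminated by a newline and that the input is assigned a source type; the name `nifi_json` is hypothetical. `SHOULD_LINEMERGE` and `LINE_BREAKER` are standard `props.conf` settings, but check the Splunk documentation for your version before relying on them.

```
# props.conf (sketch; "nifi_json" is a hypothetical source type name)
[nifi_json]
# Do not merge several lines into one event.
SHOULD_LINEMERGE = false
# Break events on line endings, so each newline-terminated JSON
# document becomes its own event.
LINE_BREAKER = ([\r\n]+)
```

This would be consistent with the observation above: when line merging is on, Splunk can combine lines until it finds one it recognizes as starting a new event, for example by its timestamp.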

Created on 05-06-2019 10:05 PM - edited 08-19-2019 04:49 AM

Hello @Wendell Bu, I am trying to do the same thing: send events from NiFi to Splunk using the PutSplunk processor. I got stuck early on and cannot see any events in Splunk. My AttributesToJSON processor (in data provenance I can see that the raw logs are converted to JSON) is connected to the PutSplunk processor, which has the hostname, port, and message delimiter configured as in the screenshot below. On the Splunk side, the input port is defined. I am not sure whether I am missing something. Can you please let me know if there are any other steps I need to follow?

I appreciate your help in advance!