RandomForest causing Heap Space error

Labels: Apache Spark, Apache YARN

Created on 12-22-2016 12:32 PM - edited 08-18-2019 03:55 AM

HDP-2.5.0.0 using Ambari 2.4.0.1, Spark 2.0.1.

I have Scala code that reads a 108 MB CSV file and uses RandomForest.

I run the following command:

/usr/hdp/current/spark2-client/bin/spark-submit --class samples.FuelModel --master yarn --deploy-mode cluster --driver-memory 8g spark-assembly-1.0.jar
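As a rough check on what this command asks YARN for, here is a minimal sketch of the driver container sizing in cluster mode. It assumes the Spark 2.0 default for the driver memory overhead (10% of the heap, with a 384 MB floor); that rule is not stated in the post, and the function name `container_request_mb` is made up for illustration. With `--driver-memory 8g` it gives the same figures the console output reports ("9011 MB memory including 819 MB overhead").

```python
# Sketch of how Spark on YARN sizes the driver (AM) container in cluster mode.
# ASSUMPTION: the overhead rule below (10% of the heap, at least 384 MB) is the
# Spark 2.0 default for the driver memory overhead; the post does not state it.

OVERHEAD_FACTOR = 0.10   # assumed default overhead fraction
OVERHEAD_MIN_MB = 384    # assumed default minimum overhead in MB


def container_request_mb(heap_mb):
    """Return (overhead_mb, total_mb) requested from YARN for a given JVM heap."""
    overhead_mb = max(int(heap_mb * OVERHEAD_FACTOR), OVERHEAD_MIN_MB)
    return overhead_mb, heap_mb + overhead_mb


overhead, total = container_request_mb(8 * 1024)  # --driver-memory 8g
print(f"overhead={overhead} MB, total={total} MB")  # overhead=819 MB, total=9011 MB
```

Note that the command sets only the driver memory. No executor memory is passed, so the executors run with whatever the cluster's Spark defaults provide.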

The console output:

16/12/22 09:07:24 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/12/22 09:07:25 WARN DomainSocketFactory: The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.
16/12/22 09:07:26 INFO TimelineClientImpl: Timeline service address: http://l4326pp.sss.com:8188/ws/v1/timeline/
16/12/22 09:07:26 INFO AHSProxy: Connecting to Application History server at l4326pp.sss.com/138.106.33.132:10200
16/12/22 09:07:26 INFO Client: Requesting a new application from cluster with 4 NodeManagers
16/12/22 09:07:26 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (204800 MB per container)
16/12/22 09:07:26 INFO Client: Will allocate AM container, with 9011 MB memory including 819 MB overhead
16/12/22 09:07:26 INFO Client: Setting up container launch context for our AM
16/12/22 09:07:26 INFO Client: Setting up the launch environment for our AM container
16/12/22 09:07:26 INFO Client: Preparing resources for our AM container
16/12/22 09:07:27 INFO YarnSparkHadoopUtil: getting token for namenode: hdfs://prodhadoop/user/ojoqcu/.sparkStaging/application_1481607361601_8315
16/12/22 09:07:27 INFO DFSClient: Created HDFS_DELEGATION_TOKEN token 79178 for ojoqcu on ha-hdfs:prodhadoop
16/12/22 09:07:28 INFO metastore: Trying to connect to metastore with URI thrift://l4327pp.sss.com:9083
16/12/22 09:07:29 INFO metastore: Connected to metastore.
16/12/22 09:07:29 INFO YarnSparkHadoopUtil: HBase class not found java.lang.ClassNotFoundException: org.apache.hadoop.hbase.HBaseConfiguration
16/12/22 09:07:29 INFO Client: Source and destination file systems are the same. Not copying hdfs:/lib/spark2_2.0.1.tar.gz
16/12/22 09:07:29 INFO Client: Uploading resource file:/localhome/ojoqcu/code/debug/Rikard/spark-assembly-1.0.jar -> hdfs://prodhadoop/user/ojoqcu/.sparkStaging/application_1481607361601_8315/spark-assembly-1.0.jar
16/12/22 09:07:29 INFO Client: Uploading resource file:/tmp/spark-ff9db580-00db-476e-9086-377c60bc7e2a/__spark_conf__1706674327523194508.zip -> hdfs://prodhadoop/user/ojoqcu/.sparkStaging/application_1481607361601_8315/__spark_conf__.zip
16/12/22 09:07:29 WARN Client: spark.yarn.am.extraJavaOptions will not take effect in cluster mode
16/12/22 09:07:29 INFO SecurityManager: Changing view acls to: ojoqcu
16/12/22 09:07:29 INFO SecurityManager: Changing modify acls to: ojoqcu
16/12/22 09:07:29 INFO SecurityManager: Changing view acls groups to:
16/12/22 09:07:29 INFO SecurityManager: Changing modify acls groups to:
16/12/22 09:07:29 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(ojoqcu); groups with view permissions: Set(); users with modify permissions: Set(ojoqcu); groups with modify permissions: Set()
16/12/22 09:07:29 INFO Client: Submitting application application_1481607361601_8315 to ResourceManager
16/12/22 09:07:30 INFO YarnClientImpl: Submitted application application_1481607361601_8315
16/12/22 09:07:31 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:07:31 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: AM container is launched, waiting for AM container to Register with RM
    ApplicationMaster host: N/A
    ApplicationMaster RPC port: -1
    queue: dataScientist
    start time: 1482394049862
    final status: UNDEFINED
    tracking URL: http://l4327pp.sss.com:8088/proxy/application_1481607361601_8315/
    user: ojoqcu
16/12/22 09:07:32 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:07:33 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:07:34 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:07:35 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:35 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: N/A
    ApplicationMaster host: 138.106.33.145
    ApplicationMaster RPC port: 0
    queue: dataScientist
    start time: 1482394049862
    final status: UNDEFINED
    tracking URL: http://l4327pp.sss.com:8088/proxy/application_1481607361601_8315/
    user: ojoqcu
16/12/22 09:07:36 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:37 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:38 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:39 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:40 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:41 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:42 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:43 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:44 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:45 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:46 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:47 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:48 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:49 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:50 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:51 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:52 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:53 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:54 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:55 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:56 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:57 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:58 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:07:59 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:00 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:01 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:02 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:03 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:04 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:05 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:06 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:07 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:08 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:09 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:10 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:11 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:12 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:13 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:14 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:15 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:16 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:17 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:18 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:19 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:20 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:21 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:22 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:23 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:24 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:25 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:26 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:27 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:28 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:29 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:30 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:31 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:32 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:33 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:34 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:35 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:36 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:37 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:38 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:39 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:40 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:41 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:42 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:43 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:44 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:45 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:46 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:47 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:48 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:49 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:50 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:51 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:52 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:08:52 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: AM container is launched, waiting for AM container to Register with RM
    ApplicationMaster host: N/A
    ApplicationMaster RPC port: -1
    queue: dataScientist
    start time: 1482394049862
    final status: UNDEFINED
    tracking URL: http://l4327pp.sss.com:8088/proxy/application_1481607361601_8315/
    user: ojoqcu
16/12/22 09:08:53 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:08:54 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:08:55 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:08:56 INFO Client: Application report for application_1481607361601_8315 (state: ACCEPTED)
16/12/22 09:08:57 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:57 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: N/A
    ApplicationMaster host: 138.106.33.144
    ApplicationMaster RPC port: 0
    queue: dataScientist
    start time: 1482394049862
    final status: UNDEFINED
    tracking URL: http://l4327pp.sss.com:8088/proxy/application_1481607361601_8315/
    user: ojoqcu
16/12/22 09:08:58 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:08:59 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:00 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:01 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:02 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:03 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:04 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:05 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:06 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:07 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:08 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:09 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:10 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:11 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:12 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:13 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:14 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:15 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:16 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:17 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:18 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:19 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:20 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:21 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:22 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:23 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:24 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:25 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:26 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:27 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:28 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:29 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:30 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:31 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:32 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:33 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:34 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:35 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:36 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:37 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:38 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:39 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:40 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:41 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:42 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:43 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:44 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:45 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:46 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:47 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:48 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:49 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:50 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:51 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:52 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:53 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:54 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:55 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:56 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:57 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:58 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:09:59 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:00 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:01 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:02 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:03 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:04 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:05 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:06 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:07 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:08 INFO Client: Application report for application_1481607361601_8315 (state: RUNNING)
16/12/22 09:10:09 INFO Client: Application report for application_1481607361601_8315 (state: FINISHED)
16/12/22 09:10:09 INFO Client:
    client token: Token { kind: YARN_CLIENT_TOKEN, service: }
    diagnostics: User class threw exception: org.apache.spark.SparkException: Job aborted due to stage failure: Task 1 in stage 28.0 failed 4 times, most recent failure: Lost task 1.3 in stage 28.0 (TID 59, l4328pp.sss.com): ExecutorLostFailure (executor 3 exited caused by one of the running tasks) Reason: Container marked as failed: container_e63_1481607361601_8315_02_000005 on host: l4328pp.sss.com. Exit status: 143. Diagnostics: Container killed on request. Exit code is 143
    Container exited with a non-zero exit code 143
    Killed by external signal
    Driver stacktrace:
    ApplicationMaster host: 138.106.33.144
    ApplicationMaster RPC port: 0
    queue: dataScientist
    start time: 1482394049862
    final status: FAILED
    tracking URL: http://l4327pp.sss.com:8088/proxy/application_1481607361601_8315/
    user: ojoqcu
Exception in thread "main" org.apache.spark.SparkException: Application application_1481607361601_8315 finished with failed status
    at org.apache.spark.deploy.yarn.Client.run(Client.scala:1132)
    at org.apache.spark.deploy.yarn.Client$.main(Client.scala:1175)
    at org.apache.spark.deploy.yarn.Client.main(Client.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$runMain(SparkSubmit.scala:736)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
16/12/22 09:10:09 INFO ShutdownHookManager: Shutdown hook called
16/12/22 09:10:09 INFO ShutdownHookManager: Deleting directory /tmp/spark-ff9db580-00db-476e-9086-377c60bc7e2a
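For anyone reading the diagnostics: exit status 143 follows the usual shell convention of 128 plus a signal number, so it points to signal 15 (SIGTERM), which fits the "Killed by external signal" text. Below is a small sketch of that decoding. The helper name `describe_exit_status` is made up for illustration, and the output above does not say who sent the signal or why.

```python
import signal


def describe_exit_status(status):
    """Decode a shell-style exit status; values above 128 mean 'killed by signal (status - 128)'."""
    if status > 128:
        signum = status - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            # Signal number not known on this platform; report it numerically.
            name = f"signal {signum}"
        return f"terminated by {name}"
    return f"exited with code {status}"


print(describe_exit_status(143))  # terminated by SIGTERM
```

So the executor container did not crash on its own. Something asked it to stop, and the NodeManager log for the host running container_e63_1481607361601_8315_02_000005 is the place to look for the reason.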

Partial output from the YARN log on one of the nodes:

2016-12-22 09:08:54,205 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e63_1481607361601_8315_02_000001 transitioned from LOCALIZED to RUNNING
2016-12-22 09:08:54,206 INFO runtime.DelegatingLinuxContainerRuntime (DelegatingLinuxContainerRuntime.java:pickContainerRuntime(67)) - Using container runtime: DefaultLinuxContainerRuntime
2016-12-22 09:08:56,779 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(375)) - Starting resource-monitoring for container_e63_1481607361601_8315_02_000001
2016-12-22 09:08:56,810 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 545.1 MB of 12 GB physical memory used; 10.3 GB of 25.2 GB virtual memory used
2016-12-22 09:08:59,850 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 804.6 MB of 12 GB physical memory used; 10.4 GB of 25.2 GB virtual memory used
2016-12-22 09:09:02,886 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 973.3 MB of 12 GB physical memory used; 10.4 GB of 25.2 GB virtual memory used
2016-12-22 09:09:05,921 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:08,957 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:12,000 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:15,037 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:18,080 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:21,116 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:24,153 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.2 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:27,193 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.4 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:30,225 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.4 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:33,270 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.4 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:36,302 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.4 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:39,339 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.6 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:42,376 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.6 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:45,422 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.6 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:48,459 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.6 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:51,502 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.7 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:54,539 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.7 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:09:57,583 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.7 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:10:00,611 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.7 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:10:02,059 INFO ipc.Server (Server.java:saslProcess(1538)) - Auth successful for appattempt_1481607361601_8315_000002 (auth:SIMPLE)
2016-12-22 09:10:02,061 INFO authorize.ServiceAuthorizationManager (ServiceAuthorizationManager.java:authorize(137)) - Authorization successful for appattempt_1481607361601_8315_000002 (auth:TOKEN) for protocol=interface org.apache.hadoop.yarn.api.ContainerManagementProtocolPB
2016-12-22 09:10:02,062 INFO containermanager.ContainerManagerImpl (ContainerManagerImpl.java:startContainerInternal(810)) - Start request for container_e63_1481607361601_8315_02_000005 by user ojoqcu
2016-12-22 09:10:02,063 INFO application.ApplicationImpl (ApplicationImpl.java:transition(304)) - Adding container_e63_1481607361601_8315_02_000005 to application application_1481607361601_8315
2016-12-22 09:10:02,063 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e63_1481607361601_8315_02_000005 transitioned from NEW to LOCALIZING
2016-12-22 09:10:02,063 INFO containermanager.AuxServices (AuxServices.java:handle(215)) - Got event CONTAINER_INIT for appId application_1481607361601_8315
2016-12-22 09:10:02,063 INFO yarn.YarnShuffleService (YarnShuffleService.java:initializeContainer(184)) - Initializing container container_e63_1481607361601_8315_02_000005
2016-12-22 09:10:02,063 INFO yarn.YarnShuffleService (YarnShuffleService.java:initializeContainer(270)) - Initializing container container_e63_1481607361601_8315_02_000005
2016-12-22 09:10:02,063 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e63_1481607361601_8315_02_000005 transitioned from LOCALIZING to LOCALIZED
2016-12-22 09:10:02,063 INFO nodemanager.NMAuditLogger (NMAuditLogger.java:logSuccess(89)) - USER=ojoqcu IP=138.106.33.144 OPERATION=Start Container Request TARGET=ContainerManageImpl RESULT=SUCCESS APPID=application_1481607361601_8315 CONTAINERID=container_e63_1481607361601_8315_02_000005
2016-12-22 09:10:02,078 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e63_1481607361601_8315_02_000005 transitioned from LOCALIZED to RUNNING
2016-12-22 09:10:02,078 INFO runtime.DelegatingLinuxContainerRuntime (DelegatingLinuxContainerRuntime.java:pickContainerRuntime(67)) - Using container runtime: DefaultLinuxContainerRuntime
2016-12-22 09:10:03,612 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(375)) - Starting resource-monitoring for container_e63_1481607361601_8315_02_000005
2016-12-22 09:10:03,647 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 34005 for container-id container_e63_1481607361601_8315_02_000005: 452.4 MB of 4 GB physical memory used; 3.1 GB of 8.4 GB virtual memory used
2016-12-22 09:10:03,673 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.7 GB of 12 GB physical memory used; 10.5 GB of 25.2 GB virtual memory used
2016-12-22 09:10:06,708 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 34005 for container-id container_e63_1481607361601_8315_02_000005: 815.3 MB of 4 GB physical memory used; 3.1 GB of 8.4 GB virtual memory used
2016-12-22 09:10:06,738 INFO monitor.ContainersMonitorImpl (ContainersMonitorImpl.java:run(464)) - Memory usage of ProcessTree 32969 for container-id container_e63_1481607361601_8315_02_000001: 1.8 GB of 12 GB physical memory used; 10.6 GB of 25.2 GB virtual memory used
2016-12-22 09:10:09,094 WARN privileged.PrivilegedOperationExecutor (PrivilegedOperationExecutor.java:executePrivilegedOperation(170)) - Shell execution returned exit code: 143. Privileged Execution Operation Output:
main : command provided 1
main : run as user is ojoqcu
main : requested yarn user is ojoqcu
Getting exit code file...
Creating script paths...
Writing pid file...
Writing to tmp file /opt/hdfsdisks/sdh/yarn/local/nmPrivate/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005/container_e63_1481607361601_8315_02_000005.pid.tmp
Writing to cgroup task files...
Creating local dirs...
Launching container...
Getting exit code file...
Creating script paths...
Full command array for failed execution: [/usr/hdp/current/hadoop-yarn-nodemanager/bin/container-executor, ojoqcu, ojoqcu, 1, application_1481607361601_8315, container_e63_1481607361601_8315_02_000005, /opt/hdfsdisks/sdg/yarn/local/usercache/ojoqcu/appcache/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005, /opt/hdfsdisks/sdb/yarn/local/nmPrivate/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005/launch_container.sh, /opt/hdfsdisks/sdk/yarn/local/nmPrivate/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005/container_e63_1481607361601_8315_02_000005.tokens, /opt/hdfsdisks/sdh/yarn/local/nmPrivate/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005/container_e63_1481607361601_8315_02_000005.pid, /opt/hdfsdisks/sdb/yarn/local%/opt/hdfsdisks/sdc/yarn/local%/opt/hdfsdisks/sdd/yarn/local%/opt/hdfsdisks/sde/yarn/local%/opt/hdfsdisks/sdf/yarn/local%/opt/hdfsdisks/sdg/yarn/local%/opt/hdfsdisks/sdh/yarn/local%/opt/hdfsdisks/sdi/yarn/local%/opt/hdfsdisks/sdj/yarn/local%/opt/hdfsdisks/sdk/yarn/local%/opt/hdfsdisks/sdl/yarn/local%/opt/hdfsdisks/sdm/yarn/local, /opt/hdfsdisks/sdb/yarn/log%/opt/hdfsdisks/sdc/yarn/log%/opt/hdfsdisks/sdd/yarn/log%/opt/hdfsdisks/sde/yarn/log%/opt/hdfsdisks/sdf/yarn/log%/opt/hdfsdisks/sdg/yarn/log%/opt/hdfsdisks/sdh/yarn/log%/opt/hdfsdisks/sdi/yarn/log%/opt/hdfsdisks/sdj/yarn/log%/opt/hdfsdisks/sdk/yarn/log%/opt/hdfsdisks/sdl/yarn/log%/opt/hdfsdisks/sdm/yarn/log, cgroups=none]
2016-12-22 09:10:09,095 WARN runtime.DefaultLinuxContainerRuntime (DefaultLinuxContainerRuntime.java:launchContainer(107)) - Launch container failed. Exception:
org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationException: ExitCodeException exitCode=143:
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationExecutor.executePrivilegedOperation(PrivilegedOperationExecutor.java:175)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.runtime.DefaultLinuxContainerRuntime.launchContainer(DefaultLinuxContainerRuntime.java:103)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.runtime.DelegatingLinuxContainerRuntime.launchContainer(DelegatingLinuxContainerRuntime.java:89)
    at org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor.launchContainer(LinuxContainerExecutor.java:392)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:317)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:83)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at
java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) at java.lang.Thread.run(Thread.java:745) Caused by: ExitCodeException exitCode=143: at org.apache.hadoop.util.Shell.runCommand(Shell.java:933) at org.apache.hadoop.util.Shell.run(Shell.java:844) at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:1123) at org.apache.hadoop.yarn.server.nodemanager.containermanager.linux.privileged.PrivilegedOperationExecutor.executePrivilegedOperation(PrivilegedOperationExecutor.java:150) ... 9 more 2016-12-22 09:10:09,095 WARN nodemanager.LinuxContainerExecutor (LinuxContainerExecutor.java:launchContainer(400)) - Exit code from container container_e63_1481607361601_8315_02_000005 is : 143 2016-12-22 09:10:09,095 INFO container.ContainerImpl (ContainerImpl.java:handle(1163)) - Container container_e63_1481607361601_8315_02_000005 transitioned from RUNNING to EXITED_WITH_FAILURE 2016-12-22 09:10:09,095 INFO launcher.ContainerLaunch (ContainerLaunch.java:cleanupContainer(425)) - Cleaning up container container_e63_1481607361601_8315_02_000005 2016-12-22 09:10:09,095 INFO runtime.DelegatingLinuxContainerRuntime (DelegatingLinuxContainerRuntime.java:pickContainerRuntime(67)) - Using container runtime: DefaultLinuxContainerRuntime 2016-12-22 09:10:09,114 INFO nodemanager.LinuxContainerExecutor (LinuxContainerExecutor.java:deleteAsUser(537)) - Deleting absolute path : /opt/hdfsdisks/sdb/yarn/local/usercache/ojoqcu/appcache/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005 2016-12-22 09:10:09,114 INFO nodemanager.LinuxContainerExecutor (LinuxContainerExecutor.java:deleteAsUser(537)) - Deleting absolute path : /opt/hdfsdisks/sdc/yarn/local/usercache/ojoqcu/appcache/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005 2016-12-22 09:10:09,115 INFO nodemanager.LinuxContainerExecutor 
(LinuxContainerExecutor.java:deleteAsUser(537)) - Deleting absolute path : /opt/hdfsdisks/sdd/yarn/local/usercache/ojoqcu/appcache/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005 2016-12-22 09:10:09,115 INFO nodemanager.LinuxContainerExecutor (LinuxContainerExecutor.java:deleteAsUser(537)) - Deleting absolute path : /opt/hdfsdisks/sde/yarn/local/usercache/ojoqcu/appcache/application_1481607361601_8315/container_e63_1481607361601_8315_02_000005 2016-12-22 09:10:09,115 WARN nodemanager.NMAuditLogger (NMAuditLogger.java:logFailure(150)) - USER=ojoqcuOPERATION=Container Finished - FailedTARGET=ContainerImplRESULT=FAILUREDESCRIPTION=Container failed with state: EXITED_WITH_FAILUREAPPID=application_1481607361601_8315CONTAINERID=container_e63_1481607361601_8315_02_000005

At least one container was killed (exit code 143 in the log above), probably because of an OutOfMemoryError once the heap space was exhausted.

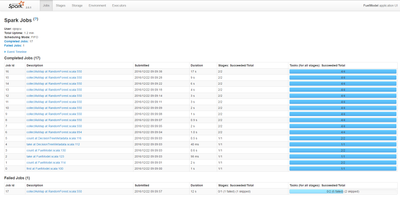

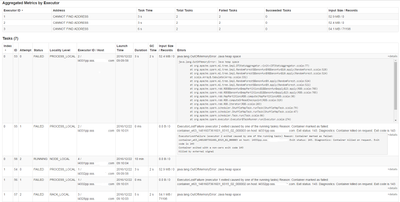

Attached are the screenshots from the SparkUI.

How should I proceed?

- Would using higher values for options like --driver-memory and --executor-memory help?

- Is there some Spark setting that needs to be changed via Ambari?

Created 12-23-2016 03:54 PM

Yes, increase driver and executor memory.

http://spark.apache.org/docs/latest/configuration.html

Also specify more executors.

In spark-submit:

--master yarn --deploy-mode cluster

In my code I like to set:

import org.apache.spark.SparkConf

import org.apache.spark.serializer.KryoSerializer

val sparkConf = new SparkConf().setAppName("Links")

// spark.cores.max applies to standalone and Mesos coarse-grained mode; on YARN use --num-executors and --executor-cores

sparkConf.set("spark.cores.max", "32")

sparkConf.set("spark.serializer", classOf[KryoSerializer].getName)

sparkConf.set("spark.sql.tungsten.enabled", "true")

sparkConf.set("spark.eventLog.enabled", "true")

sparkConf.set("spark.app.id", "MyApp")

sparkConf.set("spark.io.compression.codec", "snappy")

sparkConf.set("spark.rdd.compress", "false")

sparkConf.set("spark.shuffle.compress", "true")

See

http://spark.apache.org/docs/latest/submitting-applications.html

--executor-memory 32G \

--num-executors 50 \

Up these:

spark.driver.cores | 32 | Number of cores to use for the driver process, only in cluster mode. |

spark.driver.maxResultSize | 1g | Limit of total size of serialized results of all partitions for each Spark action (e.g. collect). Should be at least 1M, or 0 for unlimited. Jobs will be aborted if the total size is above this limit. Having a high limit may cause out-of-memory errors in driver (depends on spark.driver.memory and memory overhead of objects in JVM). Setting a proper limit can protect the driver from out-of-memory errors. |

spark.driver.memory | 32g | Amount of memory to use for the driver process, i.e. where SparkContext is initialized (e.g. 1g, 2g). Note: In client mode, this config must not be set through the SparkConf directly in your application, because the driver JVM has already started at that point. Instead, please set this through the --driver-memory command line option or in your default properties file. |

spark.executor.memory | 32g | Amount of memory to use per executor process (e.g. 2g, 8g). |
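Since settings such as spark.driver.memory have to be supplied at launch time rather than in application code, one option is to keep them in one place and render them as spark-submit `--conf key=value` arguments. The following is only a minimal sketch: `SubmitArgs` and `toConfArgs` are hypothetical helper names, not part of Spark, and the values in the usage note are placeholders.

```scala
object SubmitArgs {
  // Render settings as spark-submit "--conf key=value" arguments.
  // Keys are sorted so the output is stable across runs.
  // (Hypothetical helper for illustration; not a Spark API.)
  def toConfArgs(settings: Map[String, String]): Seq[String] =
    settings.toSeq.sortBy(_._1).flatMap { case (key, value) =>
      Seq("--conf", s"$key=$value")
    }
}
```

For example, `SubmitArgs.toConfArgs(Map("spark.executor.memory" -> "4g", "spark.driver.maxResultSize" -> "1g")).mkString(" ")` yields `--conf spark.driver.maxResultSize=1g --conf spark.executor.memory=4g`, which can be appended to the spark-submit command line.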

See http://spark.apache.org/docs/latest/running-on-yarn.html for running on YARN

--driver-memory 32g \

--executor-memory 32g \

--executor-cores 1 \

Use as much memory as you can for optimal Spark performance.
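When picking these values, it helps to see how a memory flag turns into a YARN container request. Below is a rough sketch, assuming the Spark 2.0 default overhead rule on YARN (the larger of 384 MB and 10% of the requested heap); `YarnSizing`, `overheadMb`, and `containerRequestMb` are hypothetical names for illustration, not Spark API. It reproduces the figure in the client log from the question ("9011 MB memory including 819 MB overhead" for --driver-memory 8g).

```scala
object YarnSizing {
  // Assumed defaults (Spark 2.0 on YARN): overhead = max(384 MB, 10% of heap).
  val MinOverheadMb = 384
  val OverheadFraction = 0.10

  // Off-heap overhead added on top of the JVM heap, in MB.
  def overheadMb(heapMb: Int): Int =
    math.max(MinOverheadMb, (heapMb * OverheadFraction).toInt)

  // Total memory requested from YARN for one container, in MB,
  // before YARN rounds it up according to its scheduler settings.
  def containerRequestMb(heapMb: Int): Int =
    heapMb + overheadMb(heapMb)
}
```

`YarnSizing.containerRequestMb(8192)` gives 9011, and a 4g executor would ask for 4505 MB. YARN then rounds the request up, so the container limit reported by the NodeManager can be larger than the requested amount.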

spark.yarn.am.memory | 16G | Amount of memory to use for the YARN Application Master in client mode, in the same format as JVM memory strings (e.g. 512m, 2g). In cluster mode, use spark.driver.memory instead. Use lower-case suffixes, e.g. |

spark.driver.memory | 16g | Amount of memory to use for the driver process, i.e. where SparkContext is initialized (e.g. 1g, 2g). Note: In client mode, this config must not be set through the SparkConf directly in your application, because the driver JVM has already started at that point. Instead, please set this through the --driver-memory command line option or in your default properties file. |

spark.driver.cores | 50 | Number of cores used by the driver in YARN cluster mode. Since the driver is run in the same JVM as the YARN Application Master in cluster mode, this also controls the cores used by the YARN Application Master. In client mode, use spark.yarn.am.cores to control the number of cores used by the YARN Application Master instead. |

Increase these values where you can, and run in YARN cluster mode.

Good references:

http://www.slideshare.net/pdx_spark/performance-in-spark-20-pdx-spark-meetup-81816

http://www.slideshare.net/JenAman/rearchitecting-spark-for-performance-understandability-63065166

See the Hortonworks Spark Tuning Guide

Created 01-03-2017 11:22 AM

The command that worked:

* Most conservative, takes the least of the cluster

/usr/hdp/current/spark2-client/bin/spark-submit --class samples.FuelModel --master yarn --deploy-mode cluster --executor-memory 4g --driver-memory 8g spark-assembly-1.0.jar

The time taken (40+ minutes) is too long to be acceptable. I tried increasing the number of cores and executors, but it didn't help much. I guess the overhead outweighs the performance benefit when processing a small file (< 200 MB) on 2 nodes. I would be glad if you could provide any pointers.
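One way to reason about why extra executors may not help: a stage runs at most one task per partition, so a small input split into few partitions leaves added cores idle. This is only a sketch; `Parallelism` and `maxConcurrentTasks` are hypothetical names, the partition counts in the usage note are made-up illustrations rather than values measured from this job, and it assumes the default of one core per task (spark.task.cpus = 1).

```scala
object Parallelism {
  // Upper bound on tasks running at the same time within one stage:
  // each task processes one partition and occupies one core.
  def maxConcurrentTasks(partitions: Int, executors: Int, coresPerExecutor: Int): Int =
    math.min(partitions, executors * coresPerExecutor)
}
```

With, say, 2 partitions and 50 single-core executors, `Parallelism.maxConcurrentTasks(2, 50, 1)` is 2, so 48 executors would sit idle during that stage. Checking the task count per stage in the Spark UI shows whether this bound applies here.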