Single Node Configuration Questions

Labels: Apache Ambari, Apache Hadoop, HDFS

Created on 01-25-2017 02:42 PM - edited 09-16-2022 03:57 AM

I am a complete noob to Hadoop and in need of some help. I am building a single-node cluster with four separate 2 TB hard drives. My hope is to dedicate the primary drive to the OS (CentOS 7) and use the other three for HDFS. However, when configuring the cluster through Ambari, I cannot find an option to do so. Am I missing something? How do I configure Hadoop to use the secondary drives to store and access data? Because of strict network policies I want to stay away from virtual machines, and I figure there is a way to keep the NameNode and DataNode on the OS drive while the actual data lives on the expendable drives. Simple, right? Please help. Any and all advice is greatly appreciated.

Created 01-25-2017 03:15 PM

You have to mount all 4 disks in the OS anyway. So mount the OS disk at / and the other 3 disks at /hadoop/hdfs/data1, /hadoop/hdfs/data2 and /hadoop/hdfs/data3.

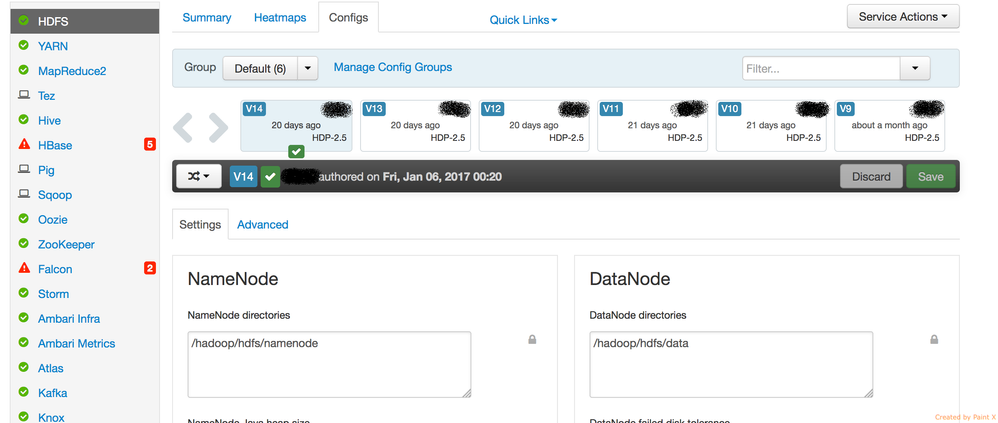

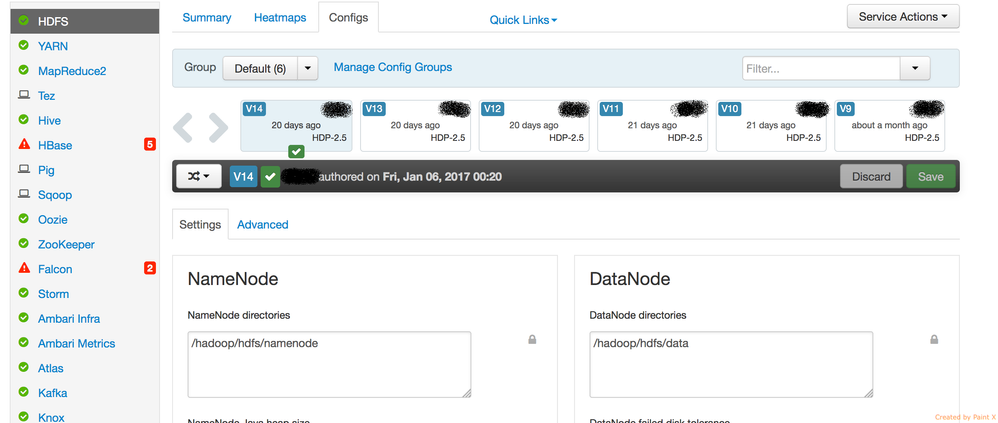

In Ambari you can set the OS-level local directories to be used as HDFS storage, as in the screenshot. The property is 'dfs.datanode.data.dir'.
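Before entering the directories in Ambari, it may help to confirm that each one exists and really is a separate mount point, so HDFS blocks do not end up on the OS disk by accident. The following is only a minimal sketch: the helper name `describe_data_dirs` is made up for illustration, and the paths are the ones suggested in this answer, so adjust them to your own layout.

```python
import os


def describe_data_dirs(paths):
    """Report, for each path, whether it is a directory and a mount point.

    Hypothetical helper for illustration; not part of Hadoop or Ambari.
    """
    report = []
    for path in paths:
        report.append({
            "path": path,
            "exists": os.path.isdir(path),      # directory is present
            "is_mount": os.path.ismount(path),  # a filesystem is mounted here
        })
    return report


if __name__ == "__main__":
    # Paths taken from the answer above; change them to match your mounts.
    candidates = [
        "/hadoop/hdfs/data1",
        "/hadoop/hdfs/data2",
        "/hadoop/hdfs/data3",
    ]
    for entry in describe_data_dirs(candidates):
        print(entry)
```

A directory that exists but is not reported as a mount point most likely lives on the OS disk, which is worth fixing before HDFS starts writing to it.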

Created 01-25-2017 03:28 PM

Thank you for your response.

I already have the other three mounted on the base system (/mnt/...), named Data1, Data2, Data3. How do I mount them to Hadoop? Is this a configuration in Ambari/HDFS, or is it something I must do in the terminal? Again, still learning the finer details of this system.

Thanks.

Created 01-25-2017 09:34 PM

Yes, just enter those OS-level paths (/mnt/data1,/mnt/data2,/mnt/data3) as a comma-separated value in the box for the DataNode directories ('dfs.datanode.data.dir') on the HDFS config page in Ambari.

HDFS is just a logical layer on top of the OS-level filesystem, so you just hand Ambari/Hadoop the locations on the native OS filesystem where HDFS should be hosted.
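To make the "comma-separated value" concrete, here is a small sketch that assembles the string to paste into the 'dfs.datanode.data.dir' box. The function name `build_data_dir_value` is hypothetical, and the example paths are the ones from this reply; on a case-sensitive filesystem they must match your actual mount points exactly.

```python
import posixpath


def build_data_dir_value(paths):
    """Join directory paths into one comma-separated string.

    Hypothetical helper for illustration: trims whitespace, drops trailing
    slashes and duplicates, and rejects relative paths.
    """
    cleaned = []
    for raw in paths:
        path = raw.strip()
        if not path:
            continue  # skip empty entries
        if not posixpath.isabs(path):
            raise ValueError(f"expected an absolute path, got {path!r}")
        path = posixpath.normpath(path)  # e.g. "/mnt/data2/" -> "/mnt/data2"
        if path not in cleaned:
            cleaned.append(path)
    return ",".join(cleaned)


if __name__ == "__main__":
    # Example paths from the reply above; replace with your own mounts.
    print(build_data_dir_value(["/mnt/data1", "/mnt/data2", "/mnt/data3"]))
```

The resulting string contains no spaces around the commas, which avoids stray whitespace being treated as part of a directory name.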

Created 01-26-2017 06:38 PM

Don't forget to mark the question as answered, if it is answered.