Snappy vs. Zlib - Pros and Cons for each compression in Hive/ Orc files

Labels: Apache Hive

Created 11-16-2015 08:32 PM

I had a couple of questions on file compression. We plan on using the ORC format for a data zone that will be heavily accessed by end users via Hive/JDBC.

What is the recommendation when it comes to compressing ORC files?

Do you think Snappy is a better option (over ZLIB) given Snappy’s better read-performance? (Snappy is more performant in a read-often scenario, which is usually the case for Hive data.) When would you choose zlib?

As a side note: compression is a double-edged sword, since you can also run into performance issues when going from larger files spread among multiple nodes to smaller files, because of how the smaller size interacts with the HDFS block size. You can blunt this by using a compression strategy.

Created 11-18-2015 06:00 AM

David's post is from 2014. Since then we switched away from standard Zlib in ORC.

See the slides from ORC 2015: Faster, Better, Smaller

Each column type (string, int, etc.) gets a different Zlib-compatible algorithm for compression (i.e., different trade-offs of RLE/Huffman/LZ77).

ORC+Zlib after the columnar improvements no longer has the historic weaknesses of Zlib, so it is faster than SNAPPY to read, smaller than SNAPPY on disk and only ~10% slower than SNAPPY to write it out.
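To make the "different trade-offs of RLE/Huffman/LZ77" point concrete, here is a minimal sketch using Python's standard `zlib` module. It is not ORC's actual writer code; the column payloads and the `deflate` helper are made up for illustration. It only shows that zlib's strategy knob changes how the data is encoded while every result stays readable by a plain zlib decoder.

```python
import zlib

def deflate(data: bytes, strategy: int, level: int = 6) -> bytes:
    """Compress data into a standard zlib stream using the given strategy."""
    compressor = zlib.compressobj(level=level, strategy=strategy)
    return compressor.compress(data) + compressor.flush()

# Strategies exposed by zlib; each favors a different mix of RLE/Huffman/LZ77.
STRATEGIES = {
    "default (LZ77 + Huffman)": zlib.Z_DEFAULT_STRATEGY,
    "filtered": zlib.Z_FILTERED,
    "rle": zlib.Z_RLE,
    "huffman_only": zlib.Z_HUFFMAN_ONLY,
}

# Hypothetical column payloads (assumptions, not data from this thread).
int_column = b"".join((i % 4).to_bytes(4, "big") for i in range(10_000))
string_column = b"".join(b"user-%05d@example.com\n" % (i % 500) for i in range(10_000))

sizes = {}
for column_name, payload in (("int", int_column), ("string", string_column)):
    for label, strategy in STRATEGIES.items():
        packed = deflate(payload, strategy)
        # Whatever strategy was used, an ordinary zlib decoder can read it back.
        assert zlib.decompress(packed) == payload
        sizes[(column_name, label)] = len(packed)
        print(f"{column_name:6s} {label:26s} {len(payload):7d} -> {len(packed):7d} bytes")
```

Running it on your own data shows how much the best strategy depends on the shape of the column, which is the reason for picking one per column type rather than one for the whole file.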

Created on 11-16-2015 08:47 PM - edited 08-19-2019 05:50 AM

@Ancil McBarnett Performance! Performance! and performance! 🙂

ORC + Zlib is the way to go.

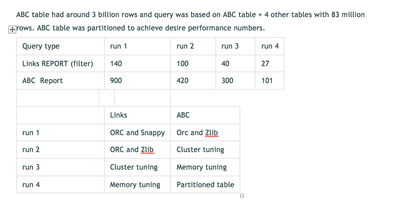

Here are the details based on a test done in my env.

run 1 vs. run 2

Created 11-16-2015 09:18 PM

Thanks for sharing! How many datasets were in the Links table? Is the dataset in Links a subset from the ABC dataset?

Created 11-16-2015 11:26 PM

ABC and Links were separate tables. @Jonas Straub

Created 11-16-2015 09:15 PM

ORC+ZLib seems to have the better performance. ZLib is also the default compression option; however, there are definitely valid cases for Snappy.

I like the comment from David (2014, before the ZLib update): "SNAPPY for time based performance, ZLIB for resource performance (Drive Space)." Make sure you check out David's post: https://streever.atlassian.net/wiki/display/HADOOP/Optimizing+ORC+Files+for+Query+Performance

As @gopal pointed out in the comment, we have switched to a new ZLib algorithm, hence the combination ORC + (new) ZLib is the way to go. The performance difference of ZLib and Snappy regarding disk writes is rather small.

Btw, ZLib is not always the better option. When it comes to HBase, Snappy is usually better 🙂
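For anyone who wants to try both codecs on their own tables, here is a minimal Python sketch that builds the Hive DDL with the `orc.compress` table property. The helper name `orc_table_ddl`, the example table, and its columns are hypothetical, and the allowed set is limited to the codecs discussed in this thread.

```python
from typing import Mapping

# Only the codecs discussed in this thread; an assumption, not the full ORC list.
ALLOWED_CODECS = {"NONE", "ZLIB", "SNAPPY"}

def orc_table_ddl(table: str, columns: Mapping[str, str], codec: str = "ZLIB") -> str:
    """Return a CREATE TABLE statement for an ORC table with the given codec."""
    codec = codec.upper()
    if codec not in ALLOWED_CODECS:
        raise ValueError(f"unsupported codec for this sketch: {codec}")
    column_list = ",\n  ".join(f"{name} {hive_type}" for name, hive_type in columns.items())
    return (
        f"CREATE TABLE {table} (\n  {column_list}\n)\n"
        "STORED AS ORC\n"
        f"TBLPROPERTIES ('orc.compress'='{codec}')"
    )

# Hypothetical table definition, used for both variants.
example_columns = {"id": "BIGINT", "url": "STRING"}
print(orc_table_ddl("links_zlib", example_columns, "ZLIB"))
print(orc_table_ddl("links_snappy", example_columns, "SNAPPY"))
```

Loading the same data into both tables and comparing file sizes and query times is the simplest way to check the trade-off for your workload.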

Created 11-18-2015 06:03 AM

Thanks @gopal. In this case we should definitely use ORC+(new)Zlib. I'll edit my answer 🙂

Created 11-24-2015 04:42 PM

@gopal just to confirm, these improvements would require HDP 2.3.x or later, correct?

Created 06-04-2016 05:07 AM

Any updates for 2016?

Created 06-04-2016 05:34 AM

ORC is considering adding a faster decompression codec in 2016: zstd (ZStandard). The enum value for it has already been reserved, but we still need to work through the trade-offs involved in ZStd; more on that sometime later this year.

https://issues.apache.org/jira/browse/ORC-46

But bigger wins are in motion for ORC with LLAP. The in-memory format for LLAP isn't compressed at all, so it performs like ORC without compression overheads, while letting the cold data on disk sit around in Zlib.