Spark num-executors setting

Labels: Apache Spark

Created on 09-13-2016 02:50 PM - edited 08-18-2019 06:06 AM

How does Spark allocate resources in Spark 1.6.1+ when using num-executors? This question comes up a lot, so I wanted to use a baseline example.

Take an 8-node cluster (2 name nodes, 1 edge node, 5 worker nodes), where each worker node has 20 cores and 256 GB of memory.

If num-executors = 5, will you get 5 executors in total or 5 on each node? The table below is for illustration.

| cores | executors per node | executors total |
|---|---|---|
| 25 | 5 | 25 |

Created 09-13-2016 03:15 PM

This applies to YARN mode. --num-executors defines the total number of executors (YARN containers) launched for the application, not a per-node count. You can also specify --executor-cores, which defines how many CPU cores are available to each executor. Given that, the answer is the first: you will get 5 total executors.
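To make the "total, not per node" reading concrete, here is a minimal Python sketch that assembles a spark-submit command line and counts the resulting task slots. The flags are standard spark-submit options for YARN; the helper names, the application file `my_app.py`, and the values 4 cores and `16g` are hypothetical and chosen only for illustration.

```python
def yarn_submit_args(num_executors, executor_cores, executor_memory, app):
    """Build a spark-submit argument list for YARN with a fixed executor count."""
    return [
        "spark-submit",
        "--master", "yarn",
        "--num-executors", str(num_executors),    # total for the application, not per node
        "--executor-cores", str(executor_cores),  # cores (task threads) per executor
        "--executor-memory", executor_memory,     # heap size per executor
        app,
    ]


def total_task_slots(num_executors, executor_cores):
    """Tasks that can run concurrently across the whole application."""
    return num_executors * executor_cores


# Hypothetical values: 5 executors with 4 cores and 16g each.
args = yarn_submit_args(5, 4, "16g", "my_app.py")
print(" ".join(args))
print(total_task_slots(5, 4))
```

With these example values the application gets 5 executors in total, wherever YARN places them, and 5 x 4 = 20 concurrent task slots.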

Created 09-13-2016 08:25 PM

@Kirk Haslbeck Michael is correct: you will get 5 total executors.

Created 09-13-2016 03:27 PM

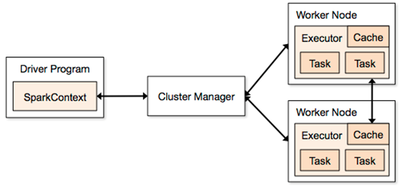

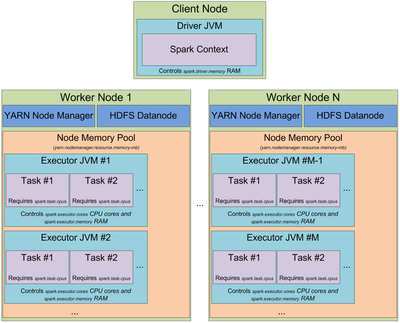

@Kirk Haslbeck Good question, and thanks for the diagrams. Here are some more details to consider.

It is a good point that each JVM-based executor can have multiple "cores" that run tasks in a multi-threaded environment. There are benefits to running multiple tasks within a single executor (a single JVM): it takes advantage of multi-core processing power and reduces the total JVM overhead, because every JVM has to start up and initialize certain data structures before it can begin running tasks.

From Spark docs, we configure number of cores using these parameters:

spark.driver.cores = Number of cores to use for the driver process

spark.executor.cores = The number of cores to use on each executor

You also want to watch out for this parameter, which limits the total cores used by a Spark application across the cluster (i.e., not on each worker). Note that it applies to standalone and Mesos coarse-grained deployments rather than YARN:

spark.cores.max = the maximum amount of CPU cores to request for the application from across the cluster (not from each machine)
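As a rough illustration of how these properties are passed to a job, the Python sketch below renders a dictionary of Spark properties as `--conf key=value` arguments for spark-submit. `spark.executor.instances` is the property form of `--num-executors`; the helper name `to_conf_args` and all values are hypothetical. `spark.cores.max` is left out because this example assumes a YARN deployment.

```python
def to_conf_args(props):
    """Turn a dict of Spark properties into spark-submit --conf arguments."""
    args = []
    for key, value in sorted(props.items()):
        args += ["--conf", f"{key}={value}"]
    return args


# Hypothetical values for illustration only.
props = {
    "spark.driver.cores": 1,        # cores for the driver process
    "spark.executor.cores": 4,      # cores on each executor
    "spark.executor.instances": 5,  # total executors (same as --num-executors)
}

conf_args = to_conf_args(props)
print(" ".join(conf_args))
```

Keeping the settings in one dictionary makes it easier to vary a single value between runs while experimenting.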

Finally, here is a description from Databricks, aligning the terms "cores" and "slots":

"Terminology: We're using the term “slots” here to indicate threads available to perform parallel work for Spark. Spark documentation often refers to these threads as “cores”, which is a confusing term, as the number of slots available on a particular machine does not necessarily have any relationship to the number of physical CPU cores on that machine."

Created 09-13-2016 04:03 PM

Is there any method to arrive at the num-executors and num-cores values for a particular Hadoop cluster size? For the configuration below (8 data nodes, each with 40 vCPUs and 160 GB of memory), I used the following:

Number of cores per executor <= 5 (assuming 5)

Num executors = floor((40-1)/5) * 8 = 7 * 8 = 56

Memory per executor = floor((160-1)/7) = 22 GB
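The arithmetic above can be written as a small Python sketch. It assumes that the 40 vCPUs and 160 GB are per data node and that every division rounds down. The subtraction of 1 vCPU and 1 GB is copied from the formulas as given; the post does not say what it is reserved for. The function name `size_executors` is hypothetical.

```python
def size_executors(nodes, vcpus_per_node, mem_gb_per_node, cores_per_executor=5):
    """Reproduce the sizing formulas from the post, rounding down at each step."""
    # (vCPUs - 1) / cores per executor, per node
    executors_per_node = (vcpus_per_node - 1) // cores_per_executor
    # Scale up to the whole cluster
    total_executors = executors_per_node * nodes
    # (memory - 1) split across the executors on one node
    mem_per_executor_gb = (mem_gb_per_node - 1) // executors_per_node
    return executors_per_node, total_executors, mem_per_executor_gb


print(size_executors(nodes=8, vcpus_per_node=40, mem_gb_per_node=160))
# (7, 56, 22)
```

Changing `cores_per_executor` shows how sensitive the result is to that choice, which is one reason to treat the output as a starting point for experiments rather than a final setting.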

Also, I was reading the Spark 2.0 documentation and it seems that num-executors is being removed and we will be using num-cores and num-cores-max.

Any suggestions for making an informed decision on these values would be really helpful.

Thanks, Jayadeep

Created 09-13-2016 04:12 PM

As with tuning most Hadoop jobs, I think it requires some experimenting. Performance will vary depending on the type of jobs you are running, the data volumes, etc.

This slideshare may also be helpful: http://www.slideshare.net/jcmia1/apache-spark-20-tuning-guide

This is a good article on LinkedIn: https://www.linkedin.com/pulse/tune-spark-jobs-2-chaaranpall-lambba

This is a good article on Spark and garbage collection: https://databricks.com/blog/2015/05/28/tuning-java-garbage-collection-for-spark-applications.html