Sqoop hook doesn't work for atlas?

Created 08-17-2016 04:01 AM

I installed Atlas and Sqoop separately; I am not using HDP.

After executing this command:

sqoop import -connect jdbc:mysql://master:3306/hive -username root -password admin -table TBLS -hive-import -hive-table sqoophook1

The output shows that Sqoop imported the data into Hive successfully, and no errors were reported.

Then I checked the Atlas UI and searched for the sqoop_process type, but nothing was found. Why?

Here is my configuration process:

Step 1: Set the following in <sqoop-conf>/sqoop-site.xml:

<property> <name>sqoop.job.data.publish.class</name> <value>org.apache.atlas.sqoop.hook.SqoopHook</value> </property>

Step 2: Copy <atlas-conf>/atlas-application.properties to <sqoop-conf>.

Step 3: Link <atlas-home>/hook/sqoop/*.jar into the Sqoop lib directory.

Are these configuration steps wrong?
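A quick way to rule out a setup mistake is to check the three steps mechanically. The following is a minimal sketch, not an official tool: `check_sqoop_atlas_setup` is a hypothetical helper name, its two arguments stand in for <sqoop-conf> and the Sqoop lib directory, and matching hook jars by the substring `atlas` is an assumption about how the files under <atlas-home>/hook/sqoop are named.

```shell
#!/bin/sh
# Sketch: verify the three Sqoop hook setup steps listed above.
# The helper name and the jar-name match are illustrative assumptions.

check_sqoop_atlas_setup() {
    conf_dir="$1"   # directory that holds sqoop-site.xml
    lib_dir="$2"    # Sqoop lib directory
    rc=0

    # Step 1: the hook class must be named in sqoop-site.xml.
    if ! grep -qF 'org.apache.atlas.sqoop.hook.SqoopHook' "$conf_dir/sqoop-site.xml" 2>/dev/null; then
        echo "step 1 missing: sqoop.job.data.publish.class not set"
        rc=1
    fi

    # Step 2: atlas-application.properties must sit in the Sqoop conf dir.
    if [ ! -f "$conf_dir/atlas-application.properties" ]; then
        echo "step 2 missing: atlas-application.properties not copied"
        rc=1
    fi

    # Step 3: at least one Atlas jar (file or symlink) must be in lib.
    # Assumption: the hook jars have "atlas" in their file names.
    if ! ls "$lib_dir" 2>/dev/null | grep -q 'atlas.*\.jar$'; then
        echo "step 3 missing: no Atlas hook jars in lib"
        rc=1
    fi

    [ "$rc" -eq 0 ] && echo "all three steps look complete"
    return "$rc"
}
```

Call it as `check_sqoop_atlas_setup /path/to/sqoop/conf /path/to/sqoop/lib`, with both paths replaced by your own. Passing all three checks only shows that the files are in place; the Sqoop build itself must also support the hook.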

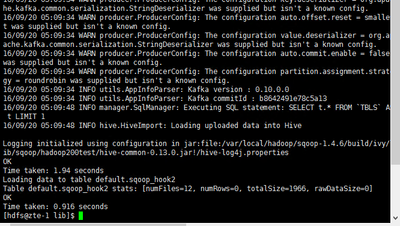

Here is the output

sqoop import -connect jdbc:mysql://zte-1:3306/hive -username root -password admin -table TBLS -hive-import -hive-table sqoophook2
Warning: /var/local/hadoop/sqoop-1.4.6/../hcatalog does not exist! HCatalog jobs will fail.
Please set $HCAT_HOME to the root of your HCatalog installation.
Warning: /var/local/hadoop/sqoop-1.4.6/../accumulo does not exist! Accumulo imports will fail.
Please set $ACCUMULO_HOME to the root of your Accumulo installation.
16/08/23 01:04:04 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6
16/08/23 01:04:04 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
16/08/23 01:04:04 INFO tool.BaseSqoopTool: Using Hive-specific delimiters for output. You can override
16/08/23 01:04:04 INFO tool.BaseSqoopTool: delimiters with --fields-terminated-by, etc.
16/08/23 01:04:05 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.
16/08/23 01:04:05 INFO tool.CodeGenTool: Beginning code generation
16/08/23 01:04:05 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `TBLS` AS t LIMIT 1
16/08/23 01:04:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `TBLS` AS t LIMIT 1
16/08/23 01:04:06 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /var/local/hadoop/hadoop-2.6.0
Note: /tmp/sqoop-hdfs/compile/2606be5f25a97674311440065aac302d/TBLS.java uses or overrides a deprecated API.
Note: Recompile with -Xlint:deprecation for details.
16/08/23 01:04:09 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hdfs/compile/2606be5f25a97674311440065aac302d/TBLS.jar
16/08/23 01:04:09 WARN manager.MySQLManager: It looks like you are importing from mysql.
16/08/23 01:04:09 WARN manager.MySQLManager: This transfer can be faster! Use the --direct
16/08/23 01:04:09 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.
16/08/23 01:04:09 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)
16/08/23 01:04:09 INFO mapreduce.ImportJobBase: Beginning import of TBLS
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/var/local/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/var/local/hadoop/hbase-1.1.5/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
16/08/23 01:04:10 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/08/23 01:04:10 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar
16/08/23 01:04:11 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps
16/08/23 01:04:11 INFO client.RMProxy: Connecting to ResourceManager at zte-1/192.168.136.128:8032
16/08/23 01:04:16 INFO db.DBInputFormat: Using read commited transaction isolation
16/08/23 01:04:16 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`TBL_ID`), MAX(`TBL_ID`) FROM `TBLS`
16/08/23 01:04:17 INFO mapreduce.JobSubmitter: number of splits:4
16/08/23 01:04:17 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1471882959657_0001
16/08/23 01:04:19 INFO impl.YarnClientImpl: Submitted application application_1471882959657_0001
16/08/23 01:04:19 INFO mapreduce.Job: The url to track the job: http://zte-1:8088/proxy/application_1471882959657_0001/
16/08/23 01:04:19 INFO mapreduce.Job: Running job: job_1471882959657_0001
16/08/23 01:04:37 INFO mapreduce.Job: Job job_1471882959657_0001 running in uber mode : false
16/08/23 01:04:37 INFO mapreduce.Job: map 0% reduce 0%
16/08/23 01:05:05 INFO mapreduce.Job: map 25% reduce 0%
16/08/23 01:05:07 INFO mapreduce.Job: map 100% reduce 0%
16/08/23 01:05:08 INFO mapreduce.Job: Job job_1471882959657_0001 completed successfully
16/08/23 01:05:08 INFO mapreduce.Job: Counters: 30
  File System Counters
    FILE: Number of bytes read=0
    FILE: Number of bytes written=529788
    FILE: Number of read operations=0
    FILE: Number of large read operations=0
    FILE: Number of write operations=0
    HDFS: Number of bytes read=426
    HDFS: Number of bytes written=171
    HDFS: Number of read operations=16
    HDFS: Number of large read operations=0
    HDFS: Number of write operations=8
  Job Counters
    Launched map tasks=4
    Other local map tasks=4
    Total time spent by all maps in occupied slots (ms)=102550
    Total time spent by all reduces in occupied slots (ms)=0
    Total time spent by all map tasks (ms)=102550
    Total vcore-seconds taken by all map tasks=102550
    Total megabyte-seconds taken by all map tasks=105011200
  Map-Reduce Framework
    Map input records=3
    Map output records=3
    Input split bytes=426
    Spilled Records=0
    Failed Shuffles=0
    Merged Map outputs=0
    GC time elapsed (ms)=1227
    CPU time spent (ms)=3640
    Physical memory (bytes) snapshot=390111232
    Virtual memory (bytes) snapshot=3376676864
    Total committed heap usage (bytes)=74018816
  File Input Format Counters
    Bytes Read=0
  File Output Format Counters
    Bytes Written=171
16/08/23 01:05:08 INFO mapreduce.ImportJobBase: Transferred 171 bytes in 57.2488 seconds (2.987 bytes/sec)
16/08/23 01:05:08 INFO mapreduce.ImportJobBase: Retrieved 3 records.
16/08/23 01:05:08 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `TBLS` AS t LIMIT 1
16/08/23 01:05:08 INFO hive.HiveImport: Loading uploaded data into Hive
16/08/23 01:05:19 INFO hive.HiveImport:
16/08/23 01:05:19 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/var/local/hadoop/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/var/local/hadoop/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/var/local/hadoop/hbase-1.1.5/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
16/08/23 01:05:19 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
16/08/23 01:05:31 INFO hive.HiveImport: OK
16/08/23 01:05:31 INFO hive.HiveImport: Time taken: 3.481 seconds
16/08/23 01:05:31 INFO hive.HiveImport: Loading data to table default.sqoophook2
16/08/23 01:05:33 INFO hive.HiveImport: Table default.sqoophook2 stats: [numFiles=4, totalSize=171]
16/08/23 01:05:33 INFO hive.HiveImport: OK
16/08/23 01:05:33 INFO hive.HiveImport: Time taken: 1.643 seconds
16/08/23 01:05:35 INFO hive.HiveImport: Hive import complete.
16/08/23 01:05:35 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

Created 08-17-2016 10:30 AM

Could you also confirm whether sqoop-site.xml has the REST address of the Atlas server configured?

Sample configuration is available here
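To see which Atlas server address the hook would pick up, you can read it out of the properties file that was copied into the Sqoop conf directory. This is a rough sketch under two assumptions: that the key is `atlas.rest.address` (the name Atlas clients commonly use; the linked sample is the authoritative source), and that `atlas_rest_address` is just a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the Atlas REST address from a properties file, if set.
# Assumption: the key is atlas.rest.address; verify against your Atlas docs.

atlas_rest_address() {
    # $1: path to atlas-application.properties
    # Strip "key =" from the matching line and print only the value.
    sed -n 's/^[[:space:]]*atlas\.rest\.address[[:space:]]*=[[:space:]]*//p' "$1" | head -n 1
}
```

For example, `atlas_rest_address /path/to/sqoop/conf/atlas-application.properties` (path is a placeholder) prints the configured URL, or nothing if the key is absent or commented out.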

Created 08-23-2016 06:13 AM

It looks like this version of Sqoop does not support integration with Atlas. You may have to upgrade to sqoop-1.4.6.2.3.99.1-5.jar (HDP 2.4.0 sandbox) or use sqoop-1.4.7 or later from Apache.

Do let me know if the upgrade resolves the issue.
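One way to tell whether a particular Sqoop jar can call the hook at all is to look for the publisher base class inside it. This sketch assumes, to the best of my knowledge of the Sqoop 1.4.7 source, that the class is `org.apache.sqoop.SqoopJobDataPublisher`; treat that name as an assumption and check it against the source you build from. `has_publisher_class` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: reads a jar listing (one entry per line) on stdin and succeeds
# if the publisher base class is present.
# Assumption: the class is org.apache.sqoop.SqoopJobDataPublisher.

has_publisher_class() {
    grep -q '^org/apache/sqoop/SqoopJobDataPublisher\.class$'
}

# Usage (the jar path is a placeholder for your own Sqoop jar):
#   jar tf /path/to/sqoop-1.4.6.jar | has_publisher_class \
#       && echo "publisher class found" \
#       || echo "publisher class missing"
```

If the class is missing, the `sqoop.job.data.publish.class` setting has nothing to hook into, which would match an import that succeeds without anything appearing in Atlas.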

Created 08-23-2016 06:55 AM

Thank you very much. But I can't find sqoop-1.4.7 on the official website: http://sqoop.apache.org/

The website shows that the latest stable release is 1.4.6. It doesn't offer a download for sqoop-1.4.7.

Could you give me a link to download version 1.4.7, or send the jar to my email: dreamcoding@outlook.com

Created 08-23-2016 04:27 PM

@Ethan Hsieh I have sent you the jar file. You can also build this jar yourself by cloning the Sqoop git repo: https://github.com/apache/sqoop.git

Details on how to compile are provided in https://github.com/apache/sqoop/blob/trunk/COMPILING.txt

Created 09-14-2016 03:06 PM

Thank you very much. Importing the 1.4.7 jar into Sqoop 1.4.6 does solve the problem. But I am worried that this may cause small problems in the future, so I came up with several options:

1. I found that the version of Sqoop in HDP is 1.4.6, but as I mentioned before, the Sqoop 1.4.6 from the official site is not complete. Could you give me a full version of that 1.4.6?

2. Can you provide me with a full version of sqoop-1.4.7, not just the 1.4.7 jar?

3. I also tried the latest release, sqoop-1.99.7, but the official documentation only covers importing data from a relational database into HDFS. I would like to know the steps for using it to import data from a relational database into Hive.

Created 09-19-2016 06:14 AM

1. You will now be able to find the Sqoop hook in http://hortonworks.com/tech-preview-hdp-2-5/

2. I could provide you with the full version, but that may not be a clean fix. Please build Sqoop from the latest branch on Apache, which has all the changes.

3. Could you please post this as a separate question, since it relates to the Sqoop client?

Created 09-02-2016 06:38 AM

The Sqoop hook for Atlas is not part of the 2.4.0 release.

Created 09-20-2016 12:44 PM

I have the same question. I used ant to compile the project downloaded from GitHub, but when I used Sqoop to import data from MySQL into Hive, the data couldn't be imported and the Atlas hook didn't work. So I want to know: was the 1.4.7 jar you sent built from the Sqoop source on GitHub?

Created on 09-20-2016 12:59 PM - edited 08-19-2019 04:47 AM

When the job finished, it gave me these messages, but I can't understand them.
