The heap memory usage of NameNode is much higher than expected

- Labels: Apache Ambari, HDFS

Created 01-16-2024 12:52 AM


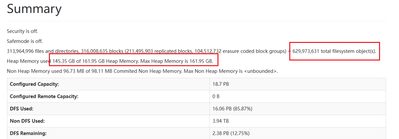

Hi, I have a Hadoop 3.1.1 cluster, and I recently noticed that the NameNode heap memory usage is very high. The web UI shows 629973631 file objects in the cluster, so by my calculation the NameNode should need no more than 90 GB of memory, right? Why is the current memory usage consistently above 140 GB? Is this related to my having enabled erasure coding?

Created 01-17-2024 01:07 AM


Hi @Meepoljd ,

File and block metadata consume the NameNode heap. Can you share how you did your calculation?

Per our docs:

the file count should be kept below 300 million files. The same page suggests that approximately 150 bytes are needed for each namespace object; I assume you based your calculation on that. The real NameNode heap consumption varies with path lengths, ACL counts, replication factors, snapshots, operational load, etc. For that reason, on our other page

we suggest allocating a larger heap: 1 GB of heap per 1 million blocks, which would be ~320 GB in your case.
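
To make the two rules of thumb concrete, here is a minimal Python sketch that compares them. The function names are hypothetical, and the block count of 320 million is an assumption back-derived from the ~320 GB figure; it is not stated in the thread. Neither estimate accounts for path lengths, ACLs, snapshots, or load, so treat both as rough bounds rather than exact values.

```python
# Rough NameNode heap estimates from the two rules of thumb discussed here.
# Both are approximations; real usage depends on path lengths, ACLs,
# replication factors, snapshots, operational load, etc.

def heap_bytes_from_objects(namespace_objects: int, bytes_per_object: int = 150) -> int:
    """Lower-bound style estimate: ~150 bytes per namespace object."""
    return namespace_objects * bytes_per_object


def heap_gb_from_blocks(blocks: int, gb_per_million_blocks: float = 1.0) -> float:
    """Empirical sizing guideline: ~1 GB of heap per 1 million blocks."""
    return blocks / 1_000_000 * gb_per_million_blocks


if __name__ == "__main__":
    objects = 629_973_631          # file objects reported in the question
    assumed_blocks = 320_000_000   # ASSUMPTION: implied by the ~320 GB figure

    est_bytes = heap_bytes_from_objects(objects)
    print(f"150 B/object rule: {est_bytes / 1e9:.1f} GB ({est_bytes / 2**30:.1f} GiB)")
    print(f"1 GB/1M blocks rule: {heap_gb_from_blocks(assumed_blocks):.0f} GB")
```

Under these assumptions, the observed usage of more than 140 GB sits between the per-object estimate and the per-block sizing guideline.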

Hope this helps,

Best regards, Miklos

Created 01-18-2024 12:48 AM


Thank you very much for your answer. I will try adjusting the heap memory allocation. In addition, I would like to ask how the guideline of 1 GB of memory per 1 million blocks was derived. Is there a more precise calculation method behind it?

Created 01-18-2024 05:52 AM


As far as I know, this is more of an empirical best practice. As mentioned, it cannot be calculated exactly because several factors (filename and path lengths, ACL counts, etc.) vary from environment to environment.
