Support Questions


Unable to fetch data from hive table using Apache NiFi (ExecuteSQL processor)

Labels: Apache NiFi

Created on 07-11-2016 10:01 AM - edited 08-19-2019 03:23 AM


Hi,

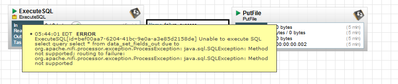

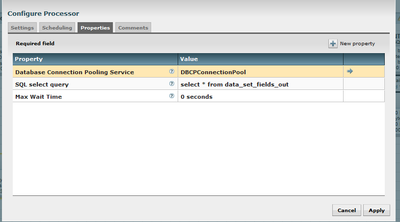

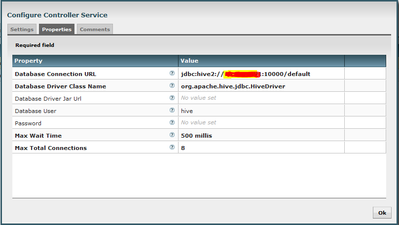

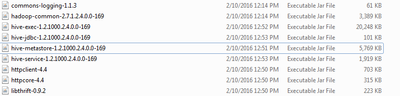

I am trying to fetch data from a Hive table (ExecuteSQL) and load it into the file system (PutFile). I have put all the required Hive JARs in NiFi's lib folder, but I am getting an error message in the ExecuteSQL processor. Please let me know if I have made a mistake anywhere and help me complete the job.

Created 07-11-2016 12:46 PM


If you are using HDF 1.2, unfortunately the ExecuteSQL processor doesn't work with Hive yet. A Hive processor is on the roadmap and may be included in the next release.


Created 09-01-2016 02:00 AM


Just an update: the 'SelectHiveQL' processor has been added as part of NiFi 0.7.

Created 08-04-2016 04:40 AM


There should be a new NiFi processor, 'SelectHiveQL', that queries Hive. There is also now a processor, 'PutHiveQL', to insert or update data directly in Hive.
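As far as I know, SelectHiveQL does not open its own connection; it is pointed at a HiveConnectionPool controller service, whose Database Connection URL is a HiveServer2 JDBC URL. A minimal sketch of that URL's shape, assuming HiveServer2 is on its usual default port 10000; `hive_jdbc_url` is a hypothetical helper, not part of NiFi:

```python
def hive_jdbc_url(host: str, port: int = 10000, database: str = "default") -> str:
    """Build a HiveServer2 JDBC URL: jdbc:hive2://<host>:<port>/<database>.

    Hypothetical helper; port 10000 is assumed to be the HiveServer2 default.
    """
    if not host:
        raise ValueError("host must not be empty")
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    return f"jdbc:hive2://{host}:{port}/{database}"
```

For example, `hive_jdbc_url("my-hive-host")` gives `jdbc:hive2://my-hive-host:10000/default`, where `my-hive-host` stands in for your own HiveServer2 host.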

Created 08-05-2016 07:28 AM


Hi @hduraiswamy, I need to know the steps involved in upgrading HDF NiFi (version 0.6.0.1.2.0.1-1) to version 0.7. My current version doesn't have the 'SelectHiveQL' and 'PutHiveQL' processors. Please share; it would help a lot.

Created 08-06-2016 03:19 AM


Follow the steps below:

- Save your HDF flows as XML templates.

- Download NiFi 0.7 from the Apache NiFi downloads site (https://nifi.apache.org/download.html).

- Unzip the file, edit the port (if you like), and start NiFi.

- Import the templates.
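The unzip step can be sanity-checked before starting NiFi. This is a minimal sketch, not an official tool: `unpack_nifi` is a hypothetical helper, and it assumes the binary zip has a single top-level folder (e.g. `nifi-0.7.0/`) containing the `bin/nifi.sh`, `bin/run-nifi.bat` and `conf/nifi.properties` files mentioned in this thread.

```python
import zipfile
from pathlib import Path

# Paths assumed to exist in a NiFi 0.7 binary install (taken from this thread).
EXPECTED = ["bin/nifi.sh", "bin/run-nifi.bat", "conf/nifi.properties"]


def unpack_nifi(archive: Path, dest: Path) -> Path:
    """Hypothetical helper: extract a NiFi binary zip into dest and return NiFi home."""
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(dest)
        # Assumption: the zip holds exactly one top-level folder, e.g. nifi-0.7.0/
        top_dirs = {name.split("/", 1)[0] for name in zf.namelist()}
    if len(top_dirs) != 1:
        raise ValueError(f"expected one top-level folder, found {sorted(top_dirs)}")
    home = dest / top_dirs.pop()
    # Check the launcher scripts and config file are where the steps expect them.
    missing = [p for p in EXPECTED if not (home / p).is_file()]
    if missing:
        raise FileNotFoundError(f"not a NiFi install, missing: {missing}")
    return home
```

Usage would look like `unpack_nifi(Path("nifi-0.7.0-bin.zip"), Path("C:/nifi"))`; the returned folder is the one whose `bin` directory you start NiFi from.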

If this answer and comment are helpful, please upvote my answer and/or select it as the best answer. Thank you!

Created 08-06-2016 09:52 AM


Hi @hduraiswamy

Thanks for your reply.

- My issue is how to start NiFi 0.7. I have HDF version 0.6.0.1.2.0.1-1, which I downloaded from Hortonworks.

- To start my current NiFi, I run \bin\run-nifi.bat.

- The 0.7 download doesn't have the same file structure as 0.6.0.1.2.0.1-1.

- Please explain briefly.

Thanks

Iyappan

Created on 08-06-2016 09:56 PM - edited 08-19-2019 03:22 AM


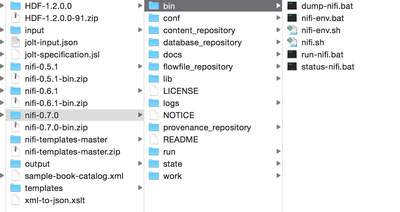

- Download the nifi-0.7.0-bin.zip file from the downloads link https://nifi.apache.org/download.html

- After that, if you unzip the file, you will see the folder structure similar to this one below:

- Then based on the OS, you can either use 'bin/run-nifi.bat' for windows or 'bin/nifi.sh start' for mac/linux. More details on how to start nifi is here https://nifi.apache.org/docs/nifi-docs/html/getting-started.html#starting-nifi

- You can tail the logs from logs/nifi-app.log (to see if it starts properly)

- OPTIONAL: By default, nifi starts on port 8080 - but if you see any port conflict or want to start this on a different port, you can change that by editing the file 'conf/nifi.properties', search for 8080 and update the port number.
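The optional port change can also be scripted. A minimal sketch, assuming the HTTP port lives in the `nifi.web.http.port` entry of `conf/nifi.properties` (the line that holds 8080 by default); `set_http_port` is a hypothetical helper, and NiFi must be restarted to pick up the new port.

```python
from pathlib import Path


def set_http_port(props_file: Path, port: int) -> None:
    """Hypothetical helper: rewrite nifi.web.http.port, leaving other lines untouched."""
    lines = props_file.read_text(encoding="utf-8").splitlines(keepends=True)
    found = False
    for i, line in enumerate(lines):
        # Match only the HTTP port key; nifi.web.https.port does not share this prefix.
        if line.strip().startswith("nifi.web.http.port="):
            lines[i] = f"nifi.web.http.port={port}\n"
            found = True
    if not found:
        raise KeyError("nifi.web.http.port not found in " + str(props_file))
    props_file.write_text("".join(lines), encoding="utf-8")
```

For example, `set_http_port(Path("nifi-0.7.0/conf/nifi.properties"), 9090)` and then restart NiFi and browse to port 9090 instead of 8080.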

If you like the answer, please make sure to upvote or accept the answer.

Created 08-08-2016 04:43 AM


Thanks @hduraiswamy

I made a mistake with the download itself; now it's working fine. Thanks once again!