Support Questions

Using NFS with Ambari 2.1 and above

Labels: Apache Ambari

Created 09-25-2015 06:14 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

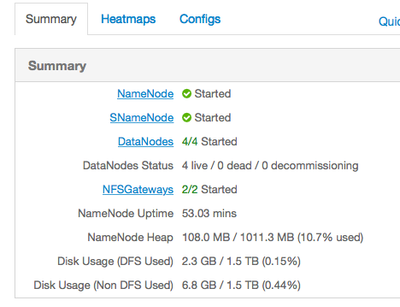

Before Ambari 2.1, we had to manage the NFS Gateway separately. Now it's "kind of" part of the Ambari process. At least it shows up in Ambari (HDFS Summary page) as installed and running.

But I don't see a way to control the bind, etc. And there aren't any processes running like that. So what is the process for using NFS with Ambari 2.1+?

Created on 09-28-2015 09:06 PM - edited 08-19-2019 06:00 AM

I'll answer my own question here since I was able to work through it on a new install.

With a fresh installation of HDP 2.3 with Ambari 2.1.1, you'll be prompted during the installation to select one or more servers to install the NFS Gateway on. This happens in the same configuration screens where you designate DataNodes, RegionServers, Phoenix Servers, etc.

After the installation has finished, you'll see indications that the NFS Gateway is running on the chosen servers.

Now what? If you go to one of the servers and do a

df -h

you won't see any new mount points. So how far down the path did Ambari get you? If you refer back to the HDP 2.2 docs on configuring NFS, you'll see that Ambari has started the nfs and rpcbind services for you. But now it's up to you to mount the export.
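Before mounting, it can help to confirm that the services Ambari started are actually registered with the portmapper. The sketch below is a hypothetical helper, not something Ambari or HDP ships: `gateway_services_up` is an illustrative name, and it simply scans `rpcinfo -p` output for the portmapper (program 100000), mountd (100005, version 3) and NFS version 3 over TCP (100003).

```shell
# Hypothetical helper (not part of Ambari/HDP).
# Reads `rpcinfo -p` output on stdin and succeeds only if the portmapper,
# mountd v3 and NFS v3 over TCP are all registered.
# Columns of `rpcinfo -p`: program vers proto port [service]
gateway_services_up() {
  awk '
    $1 == 100000                           { pm = 1 }   # portmapper
    $1 == 100005 && $2 == 3                { mnt = 1 }  # mountd v3
    $1 == 100003 && $2 == 3 && $3 == "tcp" { nfs = 1 }  # nfs v3/tcp
    END { exit !(pm && mnt && nfs) }'
}
```

On a gateway host, `rpcinfo -p localhost | gateway_services_up && echo registered` should succeed even while `df -h` still shows no new mount point: the services are up, but nothing has been mounted yet.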

Follow the remaining HDP 2.2 docs to complete the process and mount the NFS gateway.

Ambari starts the NFS Gateway as the 'hdfs' user, so the proxy-user settings covered in earlier documents are NOT necessary.

# Mount Example (to be run as root)

mkdir /hdfs

mount -t nfs -o vers=3,proto=tcp,nolock localhost:/ /hdfs
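If the mount step is scripted (for example, rerun by configuration management), a minimal sketch that skips it when something is already mounted at the target might look like this. The function name and the `MOUNTS_FILE`/`DRY_RUN` variables are illustrative assumptions added for this sketch; the mount options are the ones from the example above, and it must run as root to actually mount.

```shell
# Hypothetical helper (not part of Ambari/HDP): mount the NFS gateway export
# unless something is already mounted at the target directory.
# Arguments: gateway host (default localhost), mount point (default /hdfs).
# MOUNTS_FILE and DRY_RUN are illustrative knobs for this sketch only.
mount_hdfs_nfs() {
  gateway="${1:-localhost}"
  mnt="${2:-/hdfs}"
  mounts_file="${MOUNTS_FILE:-/proc/mounts}"

  # Field 2 of /proc/mounts is the mount point.
  if awk -v m="$mnt" '$2 == m { found = 1 } END { exit !found }' "$mounts_file"; then
    echo "already mounted: $mnt"
    return 0
  fi

  # Same options as the example above; the gateway does not support NLM, hence nolock.
  if [ -n "$DRY_RUN" ]; then
    echo "mkdir -p $mnt"
    echo "mount -t nfs -o vers=3,proto=tcp,nolock $gateway:/ $mnt"
    return 0
  fi
  mkdir -p "$mnt" && mount -t nfs -o vers=3,proto=tcp,nolock "$gateway:/" "$mnt"
}
```

Running `DRY_RUN=1 mount_hdfs_nfs` first prints the commands without touching the system, which is a cheap way to check the host and path before mounting for real.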

User interaction details are found here.

# /etc/fstab Example for NFS Gateway Automount

localhost:/ /hdfs nfs rw,vers=3,proto=tcp,nolock,timeo=600 0 0
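Appending the fstab line by hand is easy to get wrong if it is done twice. A minimal sketch, assuming you want exactly one `/hdfs` entry and using the line from the example above; `add_hdfs_fstab_entry` is a hypothetical name, and the fstab path is a parameter (default `/etc/fstab`, which needs root) so the logic can be tried on a scratch file first.

```shell
# Hypothetical helper (not part of Ambari/HDP): append the NFS gateway entry
# to an fstab file only if no uncommented entry for /hdfs exists yet.
add_hdfs_fstab_entry() {
  fstab="${1:-/etc/fstab}"
  entry="localhost:/ /hdfs nfs rw,vers=3,proto=tcp,nolock,timeo=600 0 0"

  # Match on the mount point (field 2), ignoring comment lines.
  if awk '$1 !~ /^#/ && $2 == "/hdfs" { found = 1 } END { exit !found }' "$fstab"; then
    echo "fstab already has an entry for /hdfs"
  else
    printf '%s\n' "$entry" >> "$fstab"
    echo "added: $entry"
  fi
}
```

After adding the entry, `mount /hdfs` (or `mount -a`) exercises it without a reboot.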

If you're using NFS as a quick way to traverse HDFS while avoiding the JVM startup time of each 'hdfs dfs ...' call, try out the hdfs-cli project.

Created 09-29-2015 01:29 PM

Same experience for me. Ambari went as far as getting things up and running, but mounting all shares was up to me.

Created 11-24-2015 12:35 AM

Regarding "you won't see any new mount points":

It's important to distinguish between the NFS Gateway service and the NFS client, even though both can run on the same machine. The NFS Gateway exports mount points, i.e., makes them available for clients to mount; NFS clients mount them and use them as file systems. For some applications it would be convenient to have the export mounted on the cluster nodes, but that is client functionality, not part of the Gateway setup. For many other applications it matters more that the export is available to hosts outside the Hadoop cluster, and those hosts can't be managed by Ambari.
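On a client host outside the cluster, the mount is entirely your responsibility. Since Ambari lets you place the gateway on more than one server, a client could try each gateway in turn. The sketch below is a hypothetical helper under that assumption: it asks each host's portmapper via `rpcinfo -p` whether NFS (program 100003) version 3 over TCP is registered and prints the first host that answers.

```shell
# Hypothetical client-side helper (not part of Ambari/HDP).
# Prints the first gateway host whose portmapper reports NFS v3 over TCP.
# Returns non-zero if none of the given hosts qualifies.
pick_gateway() {
  for gw in "$@"; do
    if rpcinfo -p "$gw" 2>/dev/null |
        awk '$1 == 100003 && $2 == 3 && $3 == "tcp" { ok = 1 } END { exit !ok }'; then
      echo "$gw"
      return 0
    fi
  done
  return 1
}
```

For example, `gw=$(pick_gateway nfs1.example.com nfs2.example.com) && mount -t nfs -o vers=3,proto=tcp,nolock "$gw:/" /hdfs`, where the hostnames are placeholders for your gateway servers.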