Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Using Nifi to do processing on CSV file before...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Using Nifi to do processing on CSV file before inserting into database

- Labels:

-

Apache NiFi

Created 01-03-2018 04:46 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a CSV file that is messy.

I need to:

1. Get the date from the filename and use that as my date and append that to one of the columns.

2. Parse the CSV file to get the columns as the very last column is a string which has separators in the string ",".

The data looks like this.

Filename: ExampleFile_2017-09-20.LOG

Content:

23:49:38.637,162929511757,$009389BF,36095,,,,,,,,,,Failed to fit max attempts (1=>3), fit failing entirely (Fit Failure=True),

23:49:38.638,162929512814,$008EE9F6,-16777208,,,,,,,,,,Command Measure, Targets complete - Elapsed: 76064 ms,

The following is what will need to be inserted into the database:

2017-09-20 23:49:38.637,162929511757,$009389BF,36095,,,,,,,,,,"Failed to fit max attempts (1=>3), fit failing entirely (Fit Failure=True)"

2017-09-20 23:49:38.638,162929512814,$008EE9F6,-16777208,,,,,,,,,,"Command Measure, Targets complete - Elapsed: 76064 ms"

Would I need to do this inside of NiFi or some external script by calling some type of ExecuteScript?

Created on 01-03-2018 07:43 PM - edited 08-18-2019 03:12 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes we can do your case using NiFi Processors without using any external scripts.

Here is what i tried:-

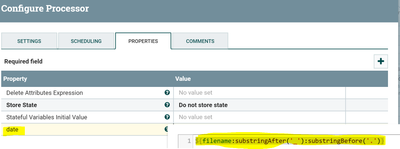

First you need to extract the date from filename and keep it as attribute to the flowfile by using

Update Attribute processor:-

add new property

date

${filename:substringAfter('_'):substringBefore('.')}Configs:-

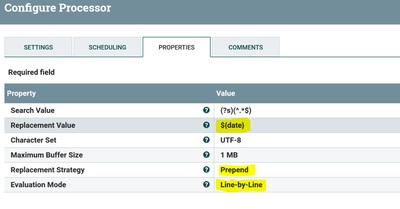

Then use Replace text processor to prepend the existing flowfile csv data with extracted date attribute

Change the

Replacement Value

${date}**keep space after ${date} in above.

Maximum Buffer Size

1 MB //if your flowfile content is more than 1 MB then you need to change this value.

Replacement Strategy

Prepend

Evaluation Mode

Line-by-Line

Configs:-

So in this processor we are just prepending the data with date attribute that is extracted in Update attribute processor and we are going to prepend date value Line-by-Line evoluation mode.

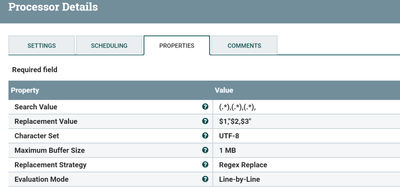

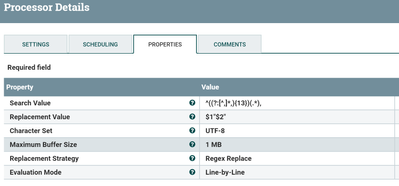

Then use another replace text processor to replace last , with double quotes "

Search Value

(.*),(.*),(.*),

Replacement Value

$1,"$2,$3"

Maximum Buffer Size

1 MB //if your flowfile content is more than 1 MB then you need to change this value.

Replacement Strategy

Regex Replace

Evaluation Mode

Line-by-Line

Configs:-

So in this processor we are extracting all the data between last 2 commas and then replacing first group as is and enclosing group2 and group3 with double quotes and removing , at last.

Output:-

2017-09-20 23:49:38.637,162929511757,$009389BF,36095,,,,,,,,,,"Failed to fit max attempts (1=>3), fit failing entirely (Fit Failure=True)" 2017-09-20 23:49:38.638,162929512814,$008EE9F6,-16777208,,,,,,,,,,"Command Measure, Targets complete - Elapsed: 76064 ms"

I have attached the xml file you can use the same xml file and change the configs as per your needs.

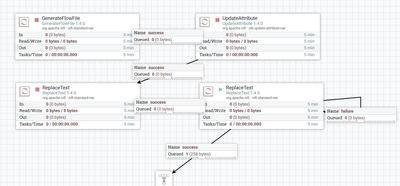

Flow:-

Reference xml:-

Let me know if you have any issues..!!

If the Answer helped to resolve your issue, Click on Accept button below to accept the answer, That would be great help to Community users to find solution quickly for these kind of errors.

Created on 01-03-2018 07:43 PM - edited 08-18-2019 03:12 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes we can do your case using NiFi Processors without using any external scripts.

Here is what i tried:-

First you need to extract the date from filename and keep it as attribute to the flowfile by using

Update Attribute processor:-

add new property

date

${filename:substringAfter('_'):substringBefore('.')}Configs:-

Then use Replace text processor to prepend the existing flowfile csv data with extracted date attribute

Change the

Replacement Value

${date}**keep space after ${date} in above.

Maximum Buffer Size

1 MB //if your flowfile content is more than 1 MB then you need to change this value.

Replacement Strategy

Prepend

Evaluation Mode

Line-by-Line

Configs:-

So in this processor we are just prepending the data with date attribute that is extracted in Update attribute processor and we are going to prepend date value Line-by-Line evoluation mode.

Then use another replace text processor to replace last , with double quotes "

Search Value

(.*),(.*),(.*),

Replacement Value

$1,"$2,$3"

Maximum Buffer Size

1 MB //if your flowfile content is more than 1 MB then you need to change this value.

Replacement Strategy

Regex Replace

Evaluation Mode

Line-by-Line

Configs:-

So in this processor we are extracting all the data between last 2 commas and then replacing first group as is and enclosing group2 and group3 with double quotes and removing , at last.

Output:-

2017-09-20 23:49:38.637,162929511757,$009389BF,36095,,,,,,,,,,"Failed to fit max attempts (1=>3), fit failing entirely (Fit Failure=True)" 2017-09-20 23:49:38.638,162929512814,$008EE9F6,-16777208,,,,,,,,,,"Command Measure, Targets complete - Elapsed: 76064 ms"

I have attached the xml file you can use the same xml file and change the configs as per your needs.

Flow:-

Reference xml:-

Let me know if you have any issues..!!

If the Answer helped to resolve your issue, Click on Accept button below to accept the answer, That would be great help to Community users to find solution quickly for these kind of errors.

Created 01-03-2018 10:25 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for the great detailed response.

The first part does work but I don't think the regex will work for my case.

(Side bit, no fault of yours, I just absolutely despise regex as its unreadable to me and extremely difficult to debug (if at all).)

I should have mentioned this, but the only thing I know about the CSV file is that there are X number of columns before the string.

So I could see something like..

23:49:38.637,162929511757,$009389BF,36095,,,,,,,,,,Failed to fit max, attempts,(1=>3), fit failing entrely,(FitFailure=True),

The only thing I know is that there are 13 columns (commas) before the string and the string will always have a trailing "," (It has always been the last column in the row from what I have seen).

The other issue is I tried doing

(.*),

for all of the columns so I could then put it into a database query to insert the data but the regex seems to blowup and not function with so many columns (the original data has about 150 columns in it and I just truncated it down here).

Created on 01-04-2018 02:39 AM - edited 08-18-2019 03:12 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you know 13 columns before the string then you can use Replace Text processor that matches until 13th comma then match everything after 13th comma until last comma(not including last comma).

Replace text processor Configs:-

Change the below properties as

Search Value

^((?:[^,]*,){13})(.*), //capture group 1 until 13th comma and capture group 2 after 13th comma until last comma

Replacement Value

$1"$2" //keep 1 group as is and add double quotes for 2 group

Maximum Buffer Size

1 MB //if your flowfile content is more than 1 MB then you need to change this value.

Replacement Strategy

Regex Replace

Evaluation Mode

Line-by-Line

Configs:-

By using above search value property we are just replacing as is until 13th comma and matching whole content after 13th comma until last comma and enclosing whole content in double quotes(").

If you are thinking that regex will blow up by using above replace text processor configurations then you can use

Split Text processor before Replace Text processor to split big csv file each flowfile having 1 line,Then use

Replace Text Processor will work with 1 small flowfile, After replace text you can use

Merge Content Processor(if you want to merge small flowfiles into 1 big file again).

Created 01-04-2018 05:25 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks! That seems to work correctly. I'll mark this as the answer as it produces the answer I'm looking for.