Support Questions

- Cloudera Community

What is the default value of --executor-memory when spark-submit runs in standalone cluster (pseudo-distributed) mode?

Labels: Apache Spark

Created on 04-04-2017 07:48 AM - edited 08-18-2019 01:12 AM

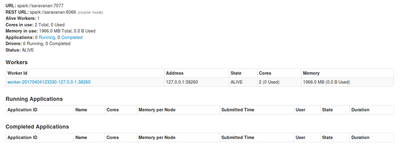

My setup is as follows: one laptop on which I run a word-count Scala program through the spark-submit command. The input for my application is a text file stored in HDFS. I'm using Spark's standalone cluster manager to manage my cluster, running in a kind of pseudo-distributed mode. I would like to know how much memory is allotted to an executor by default when the --executor-memory option is not given. My interface looks like the one in the attached image. When running spark-submit I don't use the --executor-memory option; my execution command looks like this:

spark-submit --class Wordcount --master spark://saravanan:7077 /home/hduser/sparkapp/target/scala-2.11/sparkapp_2.11-0.1.jar hdfs://127.0.0.1:9000//inp_wrd hdfs://127.0.0.1:9000//amazon_wrd_count1
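A minimal sketch of how the effective executor memory is chosen when the flag is missing, written as a plain Python model rather than Spark code. The function `resolve_executor_memory` is hypothetical. The order it checks (a SparkConf set in the application, then the --executor-memory flag, then spark.executor.memory in spark-defaults.conf, then the SPARK_EXECUTOR_MEMORY environment variable, then the documented 1g default) is an assumption based on Spark's documented property precedence and on how spark-submit reads these sources in Spark 2.x, so check it against your version.

```python
from typing import Mapping, Optional, Tuple

DEFAULT_EXECUTOR_MEMORY = "1g"  # documented default of spark.executor.memory


def resolve_executor_memory(
    app_conf: Mapping[str, str],       # properties set on SparkConf in the application code
    cli_flag: Optional[str],           # value of --executor-memory, or None if not passed
    defaults_file: Mapping[str, str],  # properties read from spark-defaults.conf
    env: Mapping[str, str],            # environment variables (e.g. from spark-env.sh)
) -> Tuple[str, str]:
    """Return (value, source) for the executor memory, highest precedence first (assumed order)."""
    candidates = [
        (app_conf.get("spark.executor.memory"), "SparkConf in application code"),
        (cli_flag, "--executor-memory flag"),
        (defaults_file.get("spark.executor.memory"), "spark-defaults.conf"),
        (env.get("SPARK_EXECUTOR_MEMORY"), "SPARK_EXECUTOR_MEMORY env var"),
    ]
    for value, source in candidates:
        if value:  # first non-empty source wins
            return value, source
    return DEFAULT_EXECUTOR_MEMORY, "built-in default"


# The command above passes no flag; assuming nothing else is configured either:
print(resolve_executor_memory({}, None, {}, {}))
# prints ('1g', 'built-in default')
```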

Created on 04-04-2017 09:30 AM - edited 08-18-2019 01:12 AM

The default executor memory is shown under Spark > Config > Advanced spark-env; see the attached image for reference.

Created 04-04-2017 11:56 AM

OK, thanks. Now I understand that by default an executor is allotted 1 GB of memory and that this value can be controlled with the --executor-memory option. Next, I would like to know: by default, how many executors will be created for an application on a node, and what is the total number of executors created in the cluster? How can I control the number of executors created on a node? Also, by default, how many cores will be allotted to an executor on a node? (I think the number of cores allotted to an executor on a node is unlimited. Am I right?)
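These questions concern the standalone cluster manager. The Spark configuration docs say spark.executor.cores defaults to all available cores on the worker in standalone mode, in which case each worker runs only one executor per application, and the standalone docs say an application gets all available cores unless spark.cores.max (or the master's spark.deploy.defaultCores) limits it. The sketch below is a simplified Python model of that behaviour, loosely following the round-robin "spread out" placement the standalone master uses by default. `plan_standalone_executors` and its parameters are hypothetical, and details such as dynamic allocation limits are ignored.

```python
from typing import Dict, List, Optional, Tuple


def plan_standalone_executors(
    workers: List[Tuple[int, int]],        # (free cores, free memory in MiB) per worker
    executor_mem_mb: int = 1024,           # spark.executor.memory (default 1g)
    executor_cores: Optional[int] = None,  # spark.executor.cores (None = unset)
    cores_max: Optional[int] = None,       # spark.cores.max (None = unlimited)
) -> Dict[int, Tuple[int, int]]:
    """Return {worker index: (number of executors, total cores)} for one application."""
    one_per_worker = executor_cores is None  # unset: one executor per worker grabs the cores
    step = executor_cores or 1               # cores handed out per scheduling step
    usable = [i for i, (c, m) in enumerate(workers) if c >= step and m >= executor_mem_mb]
    usable.sort(key=lambda i: workers[i][0], reverse=True)  # most free cores first (assumed)
    assigned_cores = {i: 0 for i in usable}
    assigned_execs = {i: 0 for i in usable}
    total_free = sum(workers[i][0] for i in usable)
    cores_left = total_free if cores_max is None else min(cores_max, total_free)

    def can_grow(i: int) -> bool:
        free_cores, free_mem = workers[i]
        if cores_left < step or free_cores - assigned_cores[i] < step:
            return False
        if one_per_worker and assigned_execs[i] > 0:
            return True  # widen the existing executor; no extra memory needed
        # Launching a new executor needs another executor_mem_mb on this worker.
        return free_mem - assigned_execs[i] * executor_mem_mb >= executor_mem_mb

    candidates = [i for i in usable if can_grow(i)]
    while candidates:
        for i in candidates:  # round-robin: at most one step per worker per pass
            if can_grow(i):
                cores_left -= step
                assigned_cores[i] += step
                assigned_execs[i] = 1 if one_per_worker else assigned_execs[i] + 1
        candidates = [i for i in candidates if can_grow(i)]
    return {i: (assigned_execs[i], assigned_cores[i]) for i in usable if assigned_execs[i] > 0}


# Pseudo-distributed laptop: one worker offering 4 cores and 6 GiB (hypothetical numbers).
print(plan_standalone_executors([(4, 6144)]))                    # {0: (1, 4)}
print(plan_standalone_executors([(4, 6144)], executor_cores=2))  # {0: (2, 4)}
```

In spark-submit terms, executor_cores corresponds to --executor-cores and cores_max to --total-executor-cores, so setting --executor-cores is what allows more than one executor of the same application on a single worker.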

Created 04-04-2017 12:30 PM

How many executors will be created for an application on a node? What is the total number of executors created in the cluster for an application?

By default, this is determined by SPARK_EXECUTOR_INSTANCES.

How many cores will be allotted to an executor on a node?

By default, this is determined by SPARK_EXECUTOR_CORES.

Created 07-26-2019 04:15 AM

Hello,

Below are the default values a Spark job uses when they are not overridden at submission time. They come from the comments in the spark-env.sh template, which lists them among the options read in YARN client mode:

- SPARK_EXECUTOR_INSTANCES: Number of workers to start (Default: 2)
- SPARK_EXECUTOR_CORES: Number of cores for the workers (Default: 1)
- SPARK_EXECUTOR_MEMORY: Memory per Worker (e.g. 1000M, 2G) (Default: 1G)

SPARK_EXECUTOR_INSTANCES -> the number of executors ("workers" in the template's wording) to start, i.e. how many executors the job requests from the cluster resource manager.

SPARK_EXECUTOR_CORES -> the number of cores per executor; Spark asks the cluster manager to reserve this many cores for each executor.

SPARK_EXECUTOR_MEMORY -> the amount of memory (JVM heap) requested for each executor.
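To see what these three settings add up to, here is a small worked calculation in Python. It is a sketch under stated assumptions: it uses the template defaults quoted above, takes memory in MiB through a hypothetical key SPARK_EXECUTOR_MEMORY_MB (the real variable takes strings such as 1G), and assumes YARN, where each executor container also reserves spark.executor.memoryOverhead, documented in Spark 2.x as 10% of executor memory with a 384 MiB minimum. YARN's rounding of containers up to its minimum allocation size is ignored, and `yarn_request_totals` is a hypothetical name.

```python
from typing import Dict, Mapping

# Defaults quoted from the spark-env.sh template comments.
DEFAULTS = {
    "SPARK_EXECUTOR_INSTANCES": 2,
    "SPARK_EXECUTOR_CORES": 1,
    "SPARK_EXECUTOR_MEMORY_MB": 1024,  # hypothetical key: the 1G default expressed in MiB
}


def yarn_request_totals(settings: Mapping[str, int]) -> Dict[str, int]:
    """Multiply per-executor settings out into what the job asks YARN for."""
    merged = {**DEFAULTS, **settings}  # unset values fall back to the template defaults
    instances = merged["SPARK_EXECUTOR_INSTANCES"]
    heap_mb = merged["SPARK_EXECUTOR_MEMORY_MB"]
    # Assumed memoryOverhead default: 10% of the heap, but at least 384 MiB.
    overhead_mb = max(384, int(heap_mb * 0.10))
    container_mb = heap_mb + overhead_mb
    return {
        "executors": instances,
        "total_cores": instances * merged["SPARK_EXECUTOR_CORES"],
        "container_mb": container_mb,
        "total_memory_mb": instances * container_mb,
    }


print(yarn_request_totals({}))
# {'executors': 2, 'total_cores': 2, 'container_mb': 1408, 'total_memory_mb': 2816}
```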

The TaskScheduler requests all of these resources from the cluster manager (Spark standalone, YARN, Mesos, or, starting with Spark 2.3, Kubernetes) before job execution actually starts.
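Which cluster manager receives the request is decided by the --master URL passed to spark-submit; the question uses spark://saravanan:7077, i.e. standalone. A hypothetical helper that classifies the documented master URL forms might look like this. It only checks prefixes and does not validate hosts or ports.

```python
def cluster_manager_for(master: str) -> str:
    """Map a spark-submit --master value to the cluster manager it selects."""
    if master == "yarn" or master.startswith("yarn-"):  # yarn-client / yarn-cluster: older forms
        return "YARN"
    if master.startswith("spark://"):
        return "Spark standalone"
    if master.startswith("mesos://"):
        return "Mesos"
    if master.startswith("k8s://"):
        return "Kubernetes"
    if master == "local" or master.startswith("local["):
        return "local mode (no cluster manager)"
    raise ValueError(f"unrecognised master URL: {master!r}")


print(cluster_manager_for("spark://saravanan:7077"))  # Spark standalone
```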

Also note that the initial number of executor instances depends on "--num-executors". When "spark.dynamicAllocation.enabled" is set to true, Spark starts with "spark.dynamicAllocation.initialExecutors" executors (or "--num-executors", if that is larger) and then adds executors when there is more data to process and releases idle ones.

Note: because the larger of the two values wins, "spark.dynamicAllocation.initialExecutors" only takes effect when it is configured greater than "--num-executors".
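The interaction between --num-executors and the dynamic allocation settings boils down to taking the largest value. The sketch below models that rule as described in the documentation row for spark.dynamicAllocation.initialExecutors (which defaults to minExecutors, with a larger --num-executors taking over). The function name is hypothetical, and the minExecutors default of 0 is taken from the Spark 2.x configuration docs.

```python
from typing import Optional


def initial_executors(min_executors: int = 0,
                      initial: Optional[int] = None,
                      num_executors: Optional[int] = None) -> int:
    """Initial executor count when spark.dynamicAllocation.enabled=true.

    min_executors -> spark.dynamicAllocation.minExecutors (default 0)
    initial       -> spark.dynamicAllocation.initialExecutors (default: minExecutors)
    num_executors -> --num-executors / spark.executor.instances (None if not set)
    """
    if initial is None:
        initial = min_executors
    return max(min_executors, initial, num_executors or 0)


print(initial_executors(initial=5, num_executors=2))   # 5
print(initial_executors(initial=2, num_executors=10))  # 10
```

After start-up, dynamic allocation moves the executor count between minExecutors and maxExecutors as the workload changes.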

| Property Name | Default | Meaning |
|---|---|---|
| spark.dynamicAllocation.initialExecutors | spark.dynamicAllocation.minExecutors | Initial number of executors to run if dynamic allocation is enabled. If `--num-executors` (or `spark.executor.instances`) is set and larger than this value, it will be used as the initial number of executors. |
| spark.executor.memory | 1g | Amount of memory to use per executor process, in the same format as JVM memory strings with a size unit suffix ("k", "m", "g" or "t") (e.g. 512m, 2g). |
| spark.executor.cores | 1 in YARN mode; all the available cores on the worker in standalone and Mesos coarse-grained modes | The number of cores to use on each executor. In standalone and Mesos coarse-grained modes, for more detail, see this description. |
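The memory format in the spark.executor.memory row can be illustrated with a small parser. This is a hypothetical helper, not Spark's own parser: it accepts only a whole number followed by one of the k/m/g/t suffixes listed in the table (case-insensitive), treats them as binary multiples as JVM memory strings do, and rejects anything else, although Spark itself may accept more forms.

```python
import re

# Binary multipliers, as in JVM memory strings such as -Xmx2g.
_MULTIPLIERS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}
_PATTERN = re.compile(r"^\s*(\d+)\s*([kmgt])\s*$", re.IGNORECASE)


def memory_string_to_bytes(value: str) -> int:
    """Convert a JVM-style memory string such as '512m' or '2g' to bytes."""
    match = _PATTERN.match(value)
    if match is None:
        raise ValueError(f"expected a number with a k/m/g/t suffix, got {value!r}")
    number, unit = match.groups()
    return int(number) * _MULTIPLIERS[unit.lower()]


for s in ["512m", "2g", "1G", "1t"]:
    print(s, memory_string_to_bytes(s) // 1024 ** 2, "MiB")
```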