Yarn Threshold error

Labels: Apache Spark, Apache YARN

Created on 06-12-2017 12:00 PM - edited 08-17-2019 09:46 PM


Hello!

I'm using a new HDP 2.6 cluster managed with Ambari. On it I have installed YARN, MapReduce, Spark2, Hadoop, etc.

I'm trying to start the Spark shell with --master yarn, but I keep getting this error:

bin/spark-shell --master yarn --deploy-mode client

Warning: Ignoring non-spark config property: spark-executor.memory=4g

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

17/06/12 13:38:38 ERROR SparkContext: Error initializing SparkContext.

java.lang.IllegalArgumentException: Required executor memory (8192+819 MB) is above the max threshold (8192 MB) of this cluster! Please check the values of 'yarn.scheduler.maximum-allocation-mb' and/or 'yarn.nodemanager.resource.memory-mb'.

at org.apache.spark.deploy.yarn.Client.verifyClusterResources(Client.scala:334)

at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:168)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:56)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:156)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:509)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2320)

at org.apache.spark.sql.SparkSession$Builder$anonfun$6.apply(SparkSession.scala:868)

at org.apache.spark.sql.SparkSession$Builder$anonfun$6.apply(SparkSession.scala:860)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:860)

at org.apache.spark.repl.Main$.createSparkSession(Main.scala:96)

at $line3.$read$iw$iw.<init>(<console>:15)

at $line3.$read$iw.<init>(<console>:42)

at $line3.$read.<init>(<console>:44)

at $line3.$read$.<init>(<console>:48)

at $line3.$read$.<clinit>(<console>)

at $line3.$eval$.$print$lzycompute(<console>:7)

at $line3.$eval$.$print(<console>:6)

at $line3.$eval.$print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at scala.tools.nsc.interpreter.IMain$ReadEvalPrint.call(IMain.scala:786)

at scala.tools.nsc.interpreter.IMain$Request.loadAndRun(IMain.scala:1047)

at scala.tools.nsc.interpreter.IMain$WrappedRequest$anonfun$loadAndRunReq$1.apply(IMain.scala:638)

at scala.tools.nsc.interpreter.IMain$WrappedRequest$anonfun$loadAndRunReq$1.apply(IMain.scala:637)

at scala.reflect.internal.util.ScalaClassLoader$class.asContext(ScalaClassLoader.scala:31)

at scala.reflect.internal.util.AbstractFileClassLoader.asContext(AbstractFileClassLoader.scala:19)

at scala.tools.nsc.interpreter.IMain$WrappedRequest.loadAndRunReq(IMain.scala:637)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:569)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:565)

at scala.tools.nsc.interpreter.ILoop.interpretStartingWith(ILoop.scala:807)

at scala.tools.nsc.interpreter.ILoop.command(ILoop.scala:681)

at scala.tools.nsc.interpreter.ILoop.processLine(ILoop.scala:395)

at org.apache.spark.repl.SparkILoop$anonfun$initializeSpark$1.apply$mcV$sp(SparkILoop.scala:38)

at org.apache.spark.repl.SparkILoop$anonfun$initializeSpark$1.apply(SparkILoop.scala:37)

at org.apache.spark.repl.SparkILoop$anonfun$initializeSpark$1.apply(SparkILoop.scala:37)

at scala.tools.nsc.interpreter.IMain.beQuietDuring(IMain.scala:214)

at org.apache.spark.repl.SparkILoop.initializeSpark(SparkILoop.scala:37)

at org.apache.spark.repl.SparkILoop.loadFiles(SparkILoop.scala:105)

at scala.tools.nsc.interpreter.ILoop$anonfun$process$1.apply$mcZ$sp(ILoop.scala:920)

at scala.tools.nsc.interpreter.ILoop$anonfun$process$1.apply(ILoop.scala:909)

at scala.tools.nsc.interpreter.ILoop$anonfun$process$1.apply(ILoop.scala:909)

at scala.reflect.internal.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:97)

at scala.tools.nsc.interpreter.ILoop.process(ILoop.scala:909)

at org.apache.spark.repl.Main$.doMain(Main.scala:69)

at org.apache.spark.repl.Main$.main(Main.scala:52)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$runMain(SparkSubmit.scala:745)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

I also tried this command:

bin/spark-shell --conf spark-executor.memory=4g --conf spark.executor.cores=2 --master yarn --deploy-mode client

But I still get exactly the same error.
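For reference, the numbers in the error message (8192+819 MB against a maximum of 8192 MB) can be reproduced with a small sketch. It assumes the Spark 2.x default for the YARN executor memory overhead, which is the larger of 384 MB and 10% of the executor memory. The function names are hypothetical and are not Spark APIs. Note also that the warning in the output says `spark-executor.memory=4g` is ignored as a non-Spark property (the documented key is `spark.executor.memory`), so the 8192 MB presumably comes from some other setting.

```python
# Sketch of the size check behind the "above the max threshold" error.
# Assumption: Spark 2.x default overhead = max(384 MB, 10% of executor memory).
# All names here are illustrative; none of them are Spark or YARN APIs.

MIN_OVERHEAD_MB = 384      # assumed minimum overhead per executor
OVERHEAD_FACTOR = 0.10     # assumed overhead fraction of executor memory


def executor_overhead_mb(executor_memory_mb: int) -> int:
    """Off-heap overhead that is added on top of the executor heap."""
    return max(MIN_OVERHEAD_MB, int(executor_memory_mb * OVERHEAD_FACTOR))


def required_container_mb(executor_memory_mb: int) -> int:
    """Total memory requested from YARN for one executor container."""
    return executor_memory_mb + executor_overhead_mb(executor_memory_mb)


def fits(executor_memory_mb: int, max_allocation_mb: int) -> bool:
    """True if the container request does not exceed the cluster maximum."""
    return required_container_mb(executor_memory_mb) <= max_allocation_mb


if __name__ == "__main__":
    max_allocation_mb = 8192  # the max threshold reported in the error message
    for memory_mb in (8192, 4096):
        total = required_container_mb(memory_mb)
        verdict = "fits" if fits(memory_mb, max_allocation_mb) else "too large"
        print(f"executor={memory_mb} MB, container={total} MB: {verdict}")
```

Under these assumptions, an 8192 MB executor needs 9011 MB and is rejected, while a 4096 MB executor would need 4505 MB and fit under the 8192 MB limit, provided the 4g setting is actually picked up.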

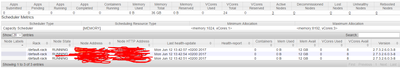

These are my YARN resources:

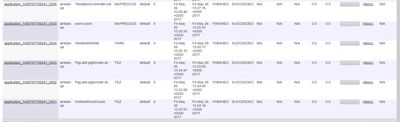

And these are the apps that succeeded in the Ambari test:

Can someone tell me what I'm doing wrong here? I'm going nuts. I've been trying to fix this for a week already and I can't anymore. Please, someone. 😞

@Matt Clarke

@Jay SenSharma

Created 06-12-2017 09:02 PM


@Ivan Majnaric - I think you accidentally posted the same question twice:

https://community.hortonworks.com/questions/107268/yarn-threshold-error.html
