Cloudbreak default blueprint installation on OpenStack has HDFS capacity issues

- Labels: Hortonworks Cloudbreak

Created 12-22-2016 09:40 AM

I have set up Cloudbreak on OpenStack and am trying to create a cluster with the hdp-small-default blueprint. The cluster comes up, but there are issues with HDFS capacity. In Ambari, I see this alert: HDFS Capacity Utilization Capacity Used:[100%, 24576], Capacity Remaining:[0].

In the HDFS config, the NameNode directory is set to /hadoopfs/fs1/hdfs/namenode. The NameNode is running on a host with 80 GB of space on the file system /dev/vda1, mounted on /. But /hadoopfs/fs1 is mounted on /dev/vdb, which has just 9.8 GB of space. I am not sure why the NameNode directory defaults to /hadoopfs/fs1/hdfs/namenode, since the blueprint does not specify anything.

I tried adding "properties": { "dfs.namenode.name.dir": "/grid/0/hadoop/hdfs/namenode", "dfs.datanode.data.dir": "/grid/0/hadoop/hdfs/data" } to the hdfs-site properties, both globally and in all host groups, but the default still points to /hadoopfs/fs1/hdfs/namenode.

The blueprint I used is attached (document.txt).
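
For anyone comparing the two mounts described above, a rough way to see how much space sits behind each directory is sketched below. It uses only the Python standard library; the helper names `nearest_existing` and `capacity_report` are made up for this illustration and are not part of Cloudbreak or Ambari. It is meant to be run on the NameNode host.

```python
import os
import shutil


def nearest_existing(path):
    """Walk up from path until an existing directory or file is found."""
    path = os.path.abspath(path)
    while not os.path.exists(path):
        parent = os.path.dirname(path)
        if parent == path:  # reached the filesystem root
            break
        path = parent
    return path


def capacity_report(paths):
    """Map each path to (checked_path, total_gib, free_gib).

    If a path does not exist yet, the closest existing ancestor is
    measured instead, since it lives on the same mount.
    """
    gib = 1024 ** 3
    report = {}
    for p in paths:
        base = nearest_existing(p)
        usage = shutil.disk_usage(base)
        report[p] = (base, usage.total / gib, usage.free / gib)
    return report


if __name__ == "__main__":
    # Paths taken from the post; adjust to the directories on your host.
    for path, (base, total, free) in capacity_report(
        ["/hadoopfs/fs1/hdfs/namenode", "/"]
    ).items():
        print(f"{path}: checked {base}, total {total:.1f} GiB, free {free:.1f} GiB")
```

This only reports what the operating system sees for each mount; it does not explain which directories HDFS itself counts toward its configured capacity.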

Created 12-22-2016 01:42 PM

Please try the blueprint small-default-hdp-corrected.txt. The issue was that the NameNode default heap size of 1024 was causing problems; overriding it to 2048 resolves the issue. As for the HDFS DataNode directories being configured under /hadoopfs by default: I believe that is how Cloudbreak is designed, so that DataNodes have separate dedicated disks.
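
The corrected blueprint itself is not reproduced in this thread, so the following is only a sketch of what a heap size override might look like when applied to a blueprint programmatically. It assumes the override lives in Ambari's `hadoop-env` config type under the `namenode_heapsize` property, and that the value is written as `2048m`; the thread gives only the numbers 1024 and 2048, so the property name and unit suffix are assumptions. The helper `set_config` and the skeleton blueprint are hypothetical.

```python
import copy
import json


def set_config(blueprint, config_type, key, value):
    """Return a copy of blueprint with configurations[config_type][key] set.

    Assumes the {"<type>": {"properties": {...}}} layout, matching the
    "properties" wrapper used in the question above.
    """
    bp = copy.deepcopy(blueprint)
    configs = bp.setdefault("configurations", [])
    for entry in configs:
        if config_type in entry:
            section = entry[config_type]
            break
    else:
        # No entry for this config type yet, so add one.
        section = {}
        configs.append({config_type: section})
    section.setdefault("properties", {})[key] = value
    return bp


if __name__ == "__main__":
    # Skeleton blueprint for illustration only; a real one also lists
    # host groups, components, and stack details.
    blueprint = {
        "Blueprints": {"blueprint_name": "hdp-small-default"},
        "host_groups": [],
    }
    # Assumed property name and unit suffix (see the note above).
    patched = set_config(blueprint, "hadoop-env", "namenode_heapsize", "2048m")
    print(json.dumps(patched["configurations"], indent=2))
```

The function returns a modified copy rather than editing the input, so the original blueprint stays available for comparison.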

Created on 12-22-2016 09:45 AM - edited 08-18-2019 04:02 AM

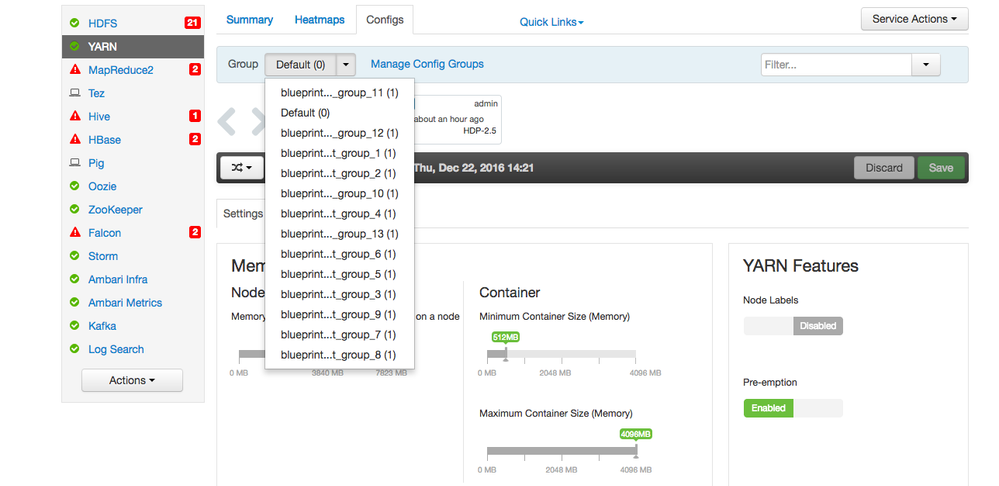

I am also facing the same issue. I see that Cloudbreak creates config groups for the HDFS and YARN services even though they are not mentioned in the blueprint definition. How do I tell Cloudbreak not to create config groups?
