file location in HDP

Created 12-14-2016 08:22 AM

This might come across as a very naive question, as I have never used Linux before. Where can I view the files inside the sandbox, such as the Hadoop configuration files? What is the path? And where are the data files on the DataNode?

Should I log on to the sandbox from the command line as root, and if so, where should I navigate from there?

I was thinking of configuring a GUI (GNOME) for the sandbox, but every simple task has proven more difficult than I expected. I do find answers online to the problems I face, but unfortunately most of the solutions don't work for me.

I have trouble turning off the firewall in the sandbox (CentOS 6.8).

I have trouble installing GNOME because I get "No package available" errors, etc.

Also, is this even necessary? If I have Ambari, do I not need to install the GUI?

I also have trouble pinging my machine from the guest.

And I have trouble copying files to the guest machine: "connection refused" 😞

Thanks

Created 12-14-2016 07:26 PM

@Arsalan Siddiqi, I'll try to answer the several pieces of your question.

- First, I encourage you to go through the Sandbox Tutorial at http://hortonworks.com/hadoop-tutorial/learning-the-ropes-of-the-hortonworks-sandbox/ . It will help you understand a great deal about the Sandbox: what it is intended to do and how to use it.

- The sandbox comes with Ambari and the Stack pre-installed. You shouldn't need to change OS system settings in the Sandbox VM, which is intended to act like an "appliance". Also, the sandbox already has everything Ambari needs to run successfully over its HTTP web interface, so there is no need to install graphics packages on the sandbox VM.

- Ambari provides a good GUI for a wide variety of things you might want to do with an HDP Stack, including viewing and modifying configurations, checking the health and activity levels of HDP services, stopping and restarting component services, and even viewing the contents of HDFS files.

- While you can view the component config files at (in most cases) /etc/<componentname>/conf/* in the sandbox VM's native file system, please DO NOT try to change configurations there. The configs are Ambari-managed, and like any Ambari installation, if you change the files yourself, the ambari-agent will just change them back! Instead, use the Ambari GUI to change any configurations you wish, then press the Save button, and restart the affected services (Ambari will prompt you).

- The data files for HDFS are stored, as usual, in the native filesystem location defined by the HDFS config parameter "dfs.datanode.data.dir". However, it won't do you much good to look there, because the block files stored there are not readily human-readable. As you may know, HDFS layers its own filesystem on top of the native filesystem, storing each replica of each block as a file on a DataNode. If you want to check the contents of HDFS directories, you're much better off using the HDFS file browser, as follows:

  - In Ambari, select the HDFS service view, and pull down the "Quick Links" menu at the top center. Select "Namenode UI".

  - In the Namenode UI, pull down the "Utilities" menu at the top right. Select "Browse the file system".

  - This will take you to the "Browse Directory" UI. You may click through the directory names at the right edge, or type an HDFS directory path into the text box at the top of the directory listing. If you click on a file name, you will see info about the blocks of that file (short files only have Block 0), and you may download the file if you want to see its contents.

- Note that data already written to an HDFS file cannot be changed: HDFS files may be appended to, but cannot be edited in place.

- For copying files to the Sandbox VM, first make sure you can access the sandbox through 'ssh', as documented near the beginning of the Tutorial under "Explore the Sandbox"; then see http://hortonworks.com/hadoop-tutorial/learning-the-ropes-of-the-hortonworks-sandbox/#send-data-btwn... .
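If you do want to peek at config values or block files from the command line (for example over ssh), the read-only inspection described in the points above can be sketched as two shell functions. This is a minimal sketch under stated assumptions: `hadoop_conf_value` and `list_block_files` are hypothetical helper names, the awk parsing assumes each `<value>` element sits on a single line (it is not a general XML parser), and the `blk_*` / `blk_*.meta` naming reflects how DataNodes usually name block replicas and their checksum files. Neither function writes anything, in keeping with the advice to change configs only through Ambari.

```shell
# Hypothetical read-only helpers; they never modify Ambari-managed files.

# Print the value of property $2 from a Hadoop *-site.xml file $1.
# Assumption: each <value>...</value> element fits on one line.
hadoop_conf_value() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
    found && /<\/property>/ { exit }
  ' "$1"
}

# List HDFS block replica files (skipping blk_*.meta checksum files) under
# each directory in $1, a comma-separated dfs.datanode.data.dir value.
list_block_files() {
  printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r dir; do
    dir=${dir#file://}           # entries may be URIs such as file:///hadoop/hdfs/data
    [ -d "$dir" ] || continue    # skip directories that do not exist on this node
    find "$dir" -type f -name 'blk_*' ! -name '*.meta'
  done
}
```

On the sandbox, usage might look like `hadoop_conf_value /etc/hadoop/conf/hdfs-site.xml dfs.datanode.data.dir`, or, letting Hadoop resolve the setting itself, `list_block_files "$(hdfs getconf -confKey dfs.datanode.data.dir)"`. The output is still just opaque block files, which is why the file browser is the more practical route.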

Hope this helps.

Created 12-14-2016 12:37 PM

Hi @Arsalan Siddiqi,

I recommend using Ambari, as it gives you access to all configuration parameters and also to the data files in HDFS through the Ambari Files View.

Please be aware that if you are using Ambari, any configuration changes need to be made through the Ambari GUI or API; editing the config files directly will not work (they are just a copy of the configuration that Ambari manages).

/Best regards, Mats

Created 12-14-2016 12:49 PM

Now that I am using NiFi inside HDP, say I am using the GetHDFS processor: what path should I specify for the HDFS configuration files, and how?

Where is the core-site.xml file located?

Created 12-14-2016 05:02 PM

I agree with using the Ambari Files View to navigate around the HDP cluster when learning; it makes life a little easier 🙂

To answer your question, the *-site.xml files for the core Hadoop components on an HDP cluster are located in /etc/hadoop/conf. Other frameworks/technologies will likely have their own folders, such as /etc/pig/conf for Pig.
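For the GetHDFS part of the question: NiFi's HDFS processors have a "Hadoop Configuration Resources" property that accepts a file or a comma-separated list of files, and pointing it at core-site.xml and hdfs-site.xml from that directory is the usual approach. A minimal sketch, assuming NiFi runs on the same node so these local paths are valid (otherwise the files would need to be copied to the NiFi host); `hadoop_config_resources` is a hypothetical helper that checks both files are readable and prints the value to paste into the property:

```shell
# Hypothetical helper: build the comma-separated value for NiFi's
# "Hadoop Configuration Resources" property from a Hadoop conf directory.
# Defaults to /etc/hadoop/conf, where HDP keeps the core Hadoop configs.
hadoop_config_resources() {
  conf_dir=${1:-/etc/hadoop/conf}
  value=""
  for f in core-site.xml hdfs-site.xml; do
    if [ ! -r "$conf_dir/$f" ]; then
      echo "missing or unreadable: $conf_dir/$f" >&2
      return 1
    fi
    value="${value:+$value,}$conf_dir/$f"   # append, comma-separated
  done
  printf '%s\n' "$value"
}
```

Run with no argument on the sandbox, it would print `/etc/hadoop/conf/core-site.xml,/etc/hadoop/conf/hdfs-site.xml` if both files are present.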

Created 12-15-2016 12:07 PM

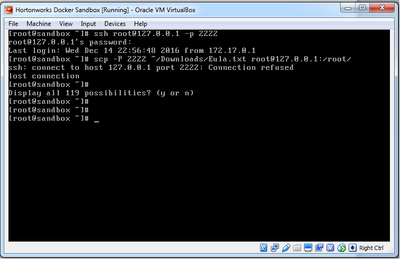

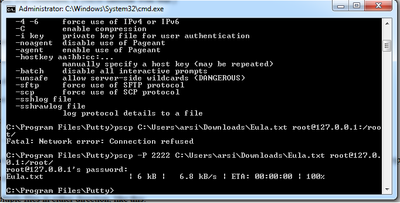

@Matt Foley Thank you for your detailed reply. Unfortunately, the ssh connection for transferring files does not work, and I keep getting the "connection refused" error. I have tried everything mentioned here (SCP Conn Refused), but still no luck.

The last thing I would like to clarify: why, when I connect via Putty to root@127.0.0.1 port 2222 in Windows and run a "dir" command, do I see different folders than when I run "dir" in the VirtualBox terminal?

Created 12-15-2016 10:40 PM

@Arsalan Siddiqi, sorry you're having difficulties. It's pretty hard to debug access problems remotely, as you know, but let's see what we can do.

Let me clarify a couple of things. Is it correct that you are able to use:

`ssh root@127.0.0.1 -p 2222`

to connect over ssh in Putty, but when you use:

`scp -P 2222 mylocalfile.txt root@127.0.0.1:/root/`

it rejects the connection?

- Are you using all the parts in the above statement?

  - The port: "-P 2222" (capital P, not lower case as with ssh).

  - The root user id "root@"

  - The colon after the IP address "127.0.0.1:"

  - The destination directory (/root/ in the example above, but you can use any absolute directory path)

- If you've been using "localhost" as in the tutorial, try "127.0.0.1" instead.

- If you've been cutting and pasting the command line, try typing it instead. The "-" symbol often doesn't copy and paste correctly, because formatted text may use an "em dash" or "en dash" character instead of a hyphen. It is much safer to type it than to paste it.

- So, did you actually configure Putty with the "ssh" request, the port number, and the user and host info, in a dialogue box rather than typing an ssh command line? And did that work correctly?

- Assuming the answers to the above are "yes" and "yes", the next question is: where are you typing the "scp" command? You can't type it into the Putty connection with the VM; that won't work. The scp command line is meant to be run on your native (host) box.

- Does Putty have a file transfer dialogue box that can use the scp protocol? Is that what you're trying to use? Or have you downloaded the "pscp.exe" file from putty.org, and are you using that? See http://www.it.cornell.edu/services/managed_servers/howto/file_transfer/fileputty.cfm . The full docs for Putty PSCP are at https://the.earth.li/~sgtatham/putty/0.67/htmldoc/Chapter5.html#pscp-usage , and they show that pscp also takes a capital "-P" to specify the port number.
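The copy-and-paste pitfall in the checklist above can be checked mechanically. A minimal sketch for a Unix-like shell (for example inside the sandbox, or a POSIX shell on Windows such as Git Bash, which is an assumption about your setup); `has_non_ascii` and `fix_dashes` are hypothetical helper names, and `fix_dashes` only handles the en and em dash substitutions mentioned above:

```shell
# Succeed (exit 0) if the command line in $1 contains any byte outside
# printable ASCII, such as the UTF-8 bytes of an en or em dash.
has_non_ascii() {
  printf '%s\n' "$1" | LC_ALL=C grep -q '[^ -~]'
}

# Print $1 with en dashes (U+2013) and em dashes (U+2014) replaced by
# ASCII hyphens. The dashes are built from their UTF-8 bytes in octal,
# so this script itself stays pure ASCII.
fix_dashes() {
  en=$(printf '\342\200\223')
  em=$(printf '\342\200\224')
  printf '%s\n' "$1" | LC_ALL=C sed "s/$en/-/g; s/$em/-/g"
}
```

Once the command line is clean, the Windows equivalent from a cmd window, following the PSCP docs linked above, would be `pscp -P 2222 mylocalfile.txt root@127.0.0.1:/root/`.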

Worst case, if you can't get any of these working: you've already established the VirtualBox connection for the VM, so with some effort you can configure a shared folder with your host machine and use it to pass files back and forth.

Created on 12-16-2016 09:07 AM - edited 08-18-2019 05:46 AM

Thanks again.

As you can see in the pictures below, I used Putty, then ran ssh from the sandbox terminal. I got the file transfer part working using PSCP.

Created 12-16-2016 10:24 PM

Hi @Arsalan Siddiqi, yes, this is what I expected for Putty. By using the Putty configuration dialogue as shown in your screenshot, you ALREADY have an ssh connection to the guest VM, so typing `ssh` in the Putty terminal window is unnecessary, and `scp` won't work there: instead of asking the host OS to talk to the guest OS, you would be asking the guest OS to talk to itself!

I'm glad `pscp` (in a cmd window) worked for you, and thanks for accepting the answer.

Your last question, "why when i connect via Putty to root@127.0.0.1 p 2222 in windows and do a "dir" command, i see different folders as to when i do a DIR in virtualbox terminal??", is a puzzler. I suggest typing the Linux command `pwd` ("print working directory") in each window (Putty terminal and VirtualBox terminal). It may be that the two tools leave you in different filesystem locations at login. You can also try the Linux `ls` command.
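A slightly extended version of that check, as a minimal sketch: running the hypothetical `whereami` function below in both the Putty terminal and the VirtualBox terminal prints the user, host name, working directory, and home directory, so any difference in where each login lands (including being on a different host) is easy to spot side by side.

```shell
# Hypothetical helper: print who and where this shell session is,
# so the output of two terminal sessions can be compared line by line.
whereami() {
  printf 'user=%s\n' "$(id -un)"
  printf 'host=%s\n' "$(uname -n)"
  printf 'cwd=%s\n'  "$(pwd)"
  printf 'home=%s\n' "$HOME"
}
```

Typing `whereami; ls -la` in each window would show both the location and the directory listing in one go.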

Created 12-15-2016 09:34 PM

@Arsalan Siddiqi Can you post screenshots of the VirtualBox network settings (specifically, adapter 1 and adapter 2), and also the output of `ifconfig`?