Support Questions

How to resolve the error "The directory item limit of /tmp/hive/hive is exceeded: limit=1048576 items=1048576"

- Labels: Apache Ambari, Apache Hadoop, Apache Hive

Created 06-16-2019 08:56 PM

Hi all,

We have an Ambari cluster (HDP version 2.5.4).

In the Spark Thrift Server log we see the error: The directory item limit of /tmp/hive/hive is exceeded: limit=1048576 items=1048576

We tried to delete the old files under /tmp/hive/hive, but there are about a million files and we can't delete them, because

hdfs dfs -ls /tmp/hive/hive doesn't return any output.

Any suggestions? How can we delete the old files even though there are a million of them?

Or is there any other solution?

* For now the Spark Thrift Server fails to start because of this error, and HiveServer2 does not start either.

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.protocol.FSLimitException$MaxDirectoryItemsExceededException): The directory item limit of /tmp/hive/hive is exceeded: limit=1048576 items=1048576

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2347)

Second question:

Can we purge the files periodically, by cron or some other way?

hdfs dfs -ls /tmp/hive/hive

Found 4 items

drwx------ - hive hdfs 0 2019-06-16 21:58 /tmp/hive/hive/2f95f6a5-76ad-487e-968c-1873264a3a9c

drwx------ - hive hdfs 0 2019-06-16 21:45 /tmp/hive/hive/368d201c-cedf-48dc-bbad-f13d6aed7016

drwx------ - hive hdfs 0 2019-06-16 21:58 /tmp/hive/hive/717fb013-535b-4279-a12e-4fc4261c4d68
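A periodic purge like the one asked about could be sketched roughly as below. This is only an illustration, not a tested cleanup job: the function name `old_hive_scratch_dirs`, the 7-day retention and the use of GNU `date` and `xargs -r` are all assumptions. It also assumes the listing format shown above (date in the 6th column, path in the 8th) and that no running Hive or Spark session still uses the directories being removed.

```shell
#!/bin/sh
# Sketch: print session directories older than a cutoff date (YYYY-MM-DD).
# Reads `hdfs dfs -ls` output on stdin. The function name is hypothetical.
old_hive_scratch_dirs() {
  cutoff="$1"
  # Directory lines have 8 fields: perms, repl, owner, group, size, date, time, path.
  # The "Found N items" header has fewer fields and is skipped.
  # ISO dates (YYYY-MM-DD) compare correctly as plain strings.
  awk -v cutoff="$cutoff" 'NF == 8 && $1 ~ /^d/ && $6 < cutoff { print $8 }'
}

# Possible use from cron (GNU date/xargs and the 7-day retention are assumptions):
#   cutoff=$(date -d '7 days ago' +%Y-%m-%d)
#   hdfs dfs -ls /tmp/hive/hive | old_hive_scratch_dirs "$cutoff" \
#     | xargs -r -n 100 hdfs dfs -rm -r -skipTrash
```

This only helps once the directory is small enough for the listing to return, so it is more of a way to keep the directory from filling up again than a fix for the current state.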

Created 06-17-2019 12:31 AM

"mycluster" needs to be replaced with the value of the "fs.defaultFS" parameter from your HDFS config.

Created 06-17-2019 12:34 AM

In a NameNode HA enabled cluster, "dfs.nameservices" is defined, so "fs.defaultFS" is determined based on "dfs.nameservices".

For example, if "dfs.nameservices=mycluster", then "fs.defaultFS" will ideally be "hdfs://mycluster".

If NameNode HA is not enabled, then "fs.defaultFS" will point to the NameNode host/port.
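Instead of looking the value up in the Ambari UI, it can also be read on a cluster node with `hdfs getconf`. A minimal sketch, assuming the node's client configuration matches the cluster (the helper name `qualified_path` is made up for illustration):

```shell
# Sketch: build a fully qualified HDFS URI from the configured default filesystem.
# `hdfs getconf -confKey` prints the value from the client-side configuration.
qualified_path() {
  fs=$(hdfs getconf -confKey fs.defaultFS) || return 1
  # Strip a trailing slash so "hdfs://host:8020/" + "/tmp" does not yield "//".
  printf '%s%s\n' "${fs%/}" "$1"
}

# Example: qualified_path /tmp/hive/hive
```

With "dfs.nameservices=mycluster" as in the example above, this would be expected to print hdfs://mycluster/tmp/hive/hive.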

Created 06-17-2019 04:53 AM

@Jay, in my cluster (HDFS -> Configs) I see dfs.nameservices=hdfsha,

so should it be like this?

hadoop fs -rm -r -skipTrash hdfs://hdfsha/tmp/hive/hive/

Created 06-17-2019 05:00 AM

@Jay, actually it should be like this:

hadoop fs -rm -r -skipTrash hdfs://hdfsha/tmp/hive/hive/*

The "*" after the slash is needed in order to delete only the folders under /tmp/hive/hive and not the parent folder itself (/tmp/hive/hive).
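Since `-skipTrash` deletes permanently, a small wrapper with a dry-run default can make the command a bit safer to use. The sketch below is an assumption-laden illustration: the name `purge_children` and the `DRY_RUN` switch are hypothetical, and it refuses to run when the argument has no path component, so that a bare "hdfs://hdfsha/" cannot turn into a delete of everything under the root.

```shell
# Sketch: delete everything under a directory but keep the directory itself.
# The function name and the DRY_RUN switch are hypothetical.
purge_children() {
  dir="${1%/}"   # drop one trailing slash, if any
  # Remove "scheme://authority" to see whether a real path remains.
  path_part=$(printf '%s' "$dir" | sed 's|^[a-z]*://[^/]*||')
  if [ -z "$path_part" ]; then
    echo "refusing to purge '$1': no directory path given" >&2
    return 1
  fi
  if [ "${DRY_RUN:-1}" = 1 ]; then
    # Default: only print the command that would be run.
    printf '%s\n' "hadoop fs -rm -r -skipTrash $dir/*"
  else
    # The glob is quoted so that the Hadoop client, not the local shell, expands it.
    hadoop fs -rm -r -skipTrash "$dir/*"
  fi
}

# Example: purge_children hdfs://hdfsha/tmp/hive/hive/
#          DRY_RUN=0 purge_children hdfs://hdfsha/tmp/hive/hive/
```

With around a million entries, expanding the glob on the client may need extra client heap and a lot of time.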

Created 06-17-2019 05:05 AM

Looks good. Yes, in your command "mycluster" needs to be replaced with "hdfsha".

Created 06-16-2019 11:48 PM

@Jay, nice.

I see this option there:

hadoop fs -rm -r -skipTrash hdfs://mycluster/tmp/hive/hive/

This option will remove all folders under /tmp/hive/hive.

But what is the value "mycluster"? What do I need to replace it with?

Created 06-17-2019 02:43 AM

Whenever you change this config parameter, the cluster needs to be made aware of the change. When you start Ambari, the underlying components don't get started unless you explicitly start them!

So you can start Ambari without starting YARN or HDFS.

Created 06-17-2019 05:11 AM

@Geoffrey Shelton Okot, do you mean restarting the Ambari server (as in "ambari-server restart") instead of restarting the HDFS and YARN services, after we set dfs.namenode.fs-limits.max-directory-items?

Created 06-17-2019 05:21 AM

The parameter "dfs.namenode.fs-limits.max-directory-items" is HDFS specific, hence HDFS and the HDFS-dependent services and service components need to be restarted. The Ambari UI will show the service components that need to be restarted.

No need to restart Ambari Server.
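Before saving a new value it may help to sanity-check it, because HDFS does not accept values below 1 or above 6400000 for this property. A minimal sketch (the helper name `valid_max_dir_items` is hypothetical):

```shell
# Sketch: check that a proposed dfs.namenode.fs-limits.max-directory-items
# value is a plain integer within the range HDFS accepts (1..6400000).
valid_max_dir_items() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty, or contains a non-digit character
  esac
  [ "$1" -ge 1 ] && [ "$1" -le 6400000 ]
}

# Example: valid_max_dir_items 2097152 && echo "value is in range"
#
# After the restart, the value in the client-side configuration can be read with:
#   hdfs getconf -confKey dfs.namenode.fs-limits.max-directory-items
```

Note that `hdfs getconf` reports what the local configuration files contain, which is not necessarily what a NameNode that has not been restarted is still running with.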

Created on 06-17-2019 05:22 AM - edited 08-17-2019 02:54 PM

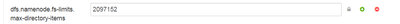

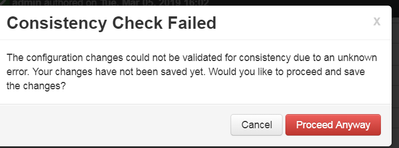

Second, when I saved the parameter in Ambari (Ambari -> HDFS -> Configs -> Advanced -> Custom hdfs-site):

dfs.namenode.fs-limits.max-directory-items=2097152

I get:

The configuration changes could not be validated for consistency due to an unknown error. Your changes have not been saved yet. Would you like to proceed and save the changes?

Is this parameter supported in HDP version 2.6.4?