org.apache.hadoop.util.DiskChecker$DiskErrorException(No space available in any of the local directories.)

Created on 01-16-2023 10:13 AM - edited 01-16-2023 10:14 AM

This error has been reported before.

I have a 3-node Hadoop cluster that I use for my research on medical side effects.

Each node has Ubuntu 18.04.5 LTS.

CDH 6.3.4-1.cdh6.3.4.p0.6751098

Basically, I have not been able to run any MR jobs or Hive queries.

A Hive select * works, of course, because select * does not launch an MR job. However, a query that does launch one fails, even against a Hive table with just 2 columns and 4 rows.

hive -e "show create table beatles"

CREATE EXTERNAL TABLE `beatles`(

`id` int,

`name` string)

COMMENT 'Beatles Group'

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'field.delim'=',',

'line.delim'='\n',

'serialization.format'=',')

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://hp8300one:8020/data/demo'

TBLPROPERTIES (

'transient_lastDdlTime'='1673760903')

Time taken: 1.711 seconds, Fetched: 18 row(s)

hive -e "select * from beatles"

OK

1 john

2 paul

3 george

4 ringo

Time taken: 1.983 seconds, Fetched: 4 row(s)

hive -e "select * from beatles where id > 0"

WARNING: Use "yarn jar" to launch YARN applications.

Logging initialized using configuration in jar:file:/opt/cloudera/parcels/CDH-6.3.4-1.cdh6.3.4.p0.6751098/jars/hive-common-2.1.1-cdh6.3.4.jar!/hive-log4j2.properties Async: false

Query ID = sanjay_20230116101202_b81b4798-e6e9-485f-9689-db33f6b313ec

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

23/01/16 10:12:05 INFO client.RMProxy: Connecting to ResourceManager at hp8300one/10.0.0.3:8032

23/01/16 10:12:05 INFO client.RMProxy: Connecting to ResourceManager at hp8300one/10.0.0.3:8032

Starting Job = job_1673779576753_0003, Tracking URL = http://hp8300one:8088/proxy/application_1673779576753_0003/

Kill Command = /opt/cloudera/parcels/CDH-6.3.4-1.cdh6.3.4.p0.6751098/lib/hadoop/bin/hadoop job -kill job_1673779576753_0003

Hadoop job information for Stage-1: number of mappers: 0; number of reducers: 0

2023-01-16 10:12:10,441 Stage-1 map = 0%, reduce = 0%

Ended Job = job_1673779576753_0003 with errors

Error during job, obtaining debugging information...

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 0 HDFS Write: 0 HDFS EC Read: 0 FAIL

Total MapReduce CPU Time Spent: 0 msec

Error in the NodeManager logs:

2023-01-15 02:47:37,463 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.ApplicationImpl: Application application_1673779576753_0001 transitioned from INITING to RUNNING

2023-01-15 02:47:37,467 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1673779576753_0001_01_000001 transitioned from NEW to LOCALIZING

2023-01-15 02:47:37,467 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_INIT for appId application_1673779576753_0001

2023-01-15 02:47:37,476 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService: Downloading public resource: { hdfs://hp8300one:8020/user/yarn/mapreduce/mr-framework/3.0.0-cdh6.3.4-mr-framework.tar.gz, 1672446065301, ARCHIVE, null }

2023-01-15 02:47:37,479 ERROR org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService: Local path for public localization is not found. May be disks failed.

org.apache.hadoop.util.DiskChecker$DiskErrorException: No space available in any of the local directories.

at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.getLocalPathForWrite(LocalDirAllocator.java:400)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:152)

at org.apache.hadoop.yarn.server.nodemanager.LocalDirsHandlerService.getLocalPathForWrite(LocalDirsHandlerService.java:589)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$PublicLocalizer.addResource(ResourceLocalizationService.java:883)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerTracker.handle(ResourceLocalizationService.java:781)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerTracker.handle(ResourceLocalizationService.java:723)

at org.apache.hadoop.yarn.event.AsyncDispatcher.dispatch(AsyncDispatcher.java:197)

at org.apache.hadoop.yarn.event.AsyncDispatcher$1.run(AsyncDispatcher.java:126)

at java.lang.Thread.run(Thread.java:750)

2023-01-15 02:47:37,479 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService: Created localizer for container_1673779576753_0001_01_000001

2023-01-15 02:47:37,481 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService: Localizer failed for container_1673779576753_0001_01_000001

org.apache.hadoop.util.DiskChecker$DiskErrorException: No space available in any of the local directories.

at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.getLocalPathForWrite(LocalDirAllocator.java:400)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:152)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:133)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:117)

at org.apache.hadoop.yarn.server.nodemanager.LocalDirsHandlerService.getLocalPathForWrite(LocalDirsHandlerService.java:584)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.ResourceLocalizationService$LocalizerRunner.run(ResourceLocalizationService.java:1205)

2023-01-15 02:47:37,481 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1673779576753_0001_01_000001 transitioned from LOCALIZING to LOCALIZATION_FAILED

2023-01-15 02:47:37,482 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.localizer.LocalResourcesTrackerImpl: Container container_1673779576753_0001_01_000001 sent RELEASE event on a resource request { hdfs://hp8300one:8020/user/yarn/mapreduce/mr-framework/3.0.0-cdh6.3.4-mr-framework.tar.gz, 1672446065301, ARCHIVE, null } not present in cache.

2023-01-15 02:47:37,482 WARN org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=sanjay OPERATION=Container Finished - Failed TARGET=ContainerImpl RESULT=FAILURE DESCRIPTION=Container failed with state: LOCALIZATION_FAILED APPID=application_1673779576753_0001 CONTAINERID=container_1673779576753_0001_01_000001

2023-01-15 02:47:37,483 WARN org.apache.hadoop.util.concurrent.ExecutorHelper: Execution exception when running task in DeletionService #1

2023-01-15 02:47:37,483 WARN org.apache.hadoop.util.concurrent.ExecutorHelper: Caught exception in thread DeletionService #1:

java.lang.NullPointerException: path cannot be null

at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)

at org.apache.hadoop.fs.FileContext.fixRelativePart(FileContext.java:270)

at org.apache.hadoop.fs.FileContext.delete(FileContext.java:768)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.deletion.task.FileDeletionTask.run(FileDeletionTask.java:109)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

2023-01-15 02:47:37,489 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.ContainerImpl: Container container_1673779576753_0001_01_000001 transitioned from LOCALIZATION_FAILED to DONE

Created 01-16-2023 11:50 PM

Spark, YARN, and Hive jobs use local directories for localization. The resources an application needs are copied to these local directories while the application is running.

Please manually delete older, unwanted data from the local /tmp directory. Also, follow the steps in this article to clear the local file cache and user cache from the local directories: https://community.cloudera.com/t5/Community-Articles/How-to-clear-local-file-cache-and-user-cache-fo...
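Before deleting anything, it may help to see how full each candidate directory's disk actually is. The following is a minimal Python sketch, not an official tool. The 90% threshold is an assumption that mirrors what I believe is the stock default of `yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage`, so check the value in your own cluster. The directory list is taken from paths mentioned in this thread and should be adjusted per node.

```python
import os
import shutil


def disk_report(paths, max_used_percent=90.0):
    """Return (path, used_percent, over_threshold) for each existing directory.

    max_used_percent is an assumed threshold, not read from any YARN config.
    """
    report = []
    for path in paths:
        if not os.path.isdir(path):
            # Skip directories that do not exist on this node.
            continue
        usage = shutil.disk_usage(path)
        if usage.total == 0:
            # Avoid dividing by zero on pseudo filesystems.
            continue
        # Utilization derived from the space still available to the caller.
        used_percent = 100.0 * (1.0 - usage.free / usage.total)
        report.append((path, round(used_percent, 1), used_percent > max_used_percent))
    return report


if __name__ == "__main__":
    # Example paths only; replace with the local dirs configured on your nodes.
    candidates = [
        "/tmp",
        "/media/sanjay/hdd02/yarn/nm",
        "/media/sanjay/hdd03/yarn/nm",
    ]
    for path, used, over in disk_report(candidates):
        flag = "OVER THRESHOLD" if over else "ok"
        print(f"{path}: {used}% used ({flag})")
```

Run it on every node as the user that owns the directories. A disk flagged as over the threshold is a likely candidate for cleanup, although this sketch cannot tell you whether the NodeManager itself has marked that directory as unusable.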

Created 01-17-2023 10:53 AM

Unfortunately, this does not solve the issue. I am asking for a clear, step-by-step explanation of where I need to set all the properties required to run an MR or Hive job. I have been using CDH since 2011, but this is the first time I cannot even run a WordCount program successfully! Very disappointed and discouraged.

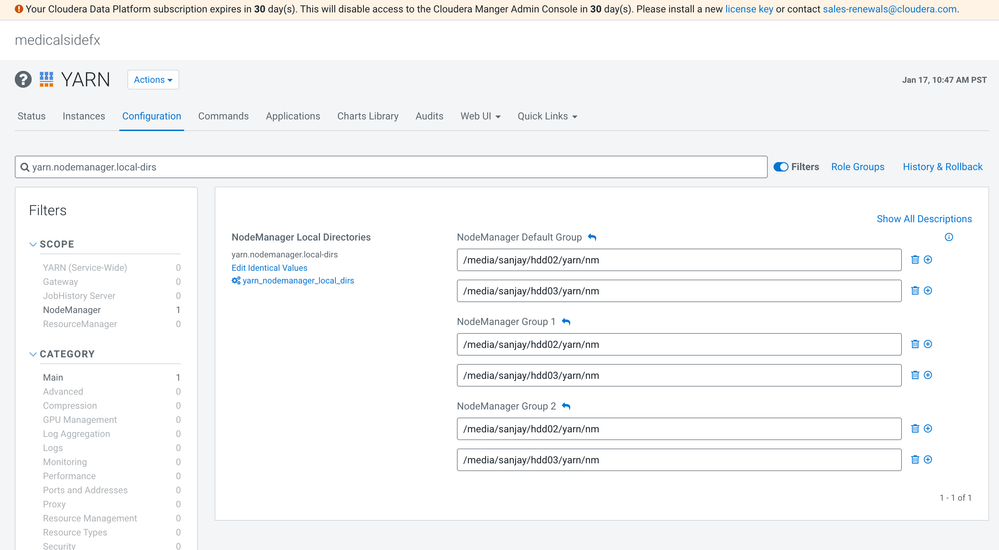

When I open the configuration for the failed job in the web UI, it shows this for the property:

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>${hadoop.tmp.dir}/nm-local-dir</value>

<final>false</final>

<source>yarn-default.xml</source>

</property>

However, Cloudera Manager shows different values.

Also, the local directories specified by "yarn.nodemanager.local-dirs" are empty:

ls -latr /media/sanjay/hdd0[23]/yarn/nm

/media/sanjay/hdd03/yarn/nm:

total 8

drwxrwxrwx 3 yarn hadoop 4096 Jan 2 11:25 ..

drwxr-xr-x 2 yarn hadoop 4096 Jan 2 11:25 .

/media/sanjay/hdd02/yarn/nm:

total 8

drwxrwxrwx 3 yarn hadoop 4096 Jan 2 11:25 ..

drwxr-xr-x 2 yarn hadoop 4096 Jan 2 11:25 .

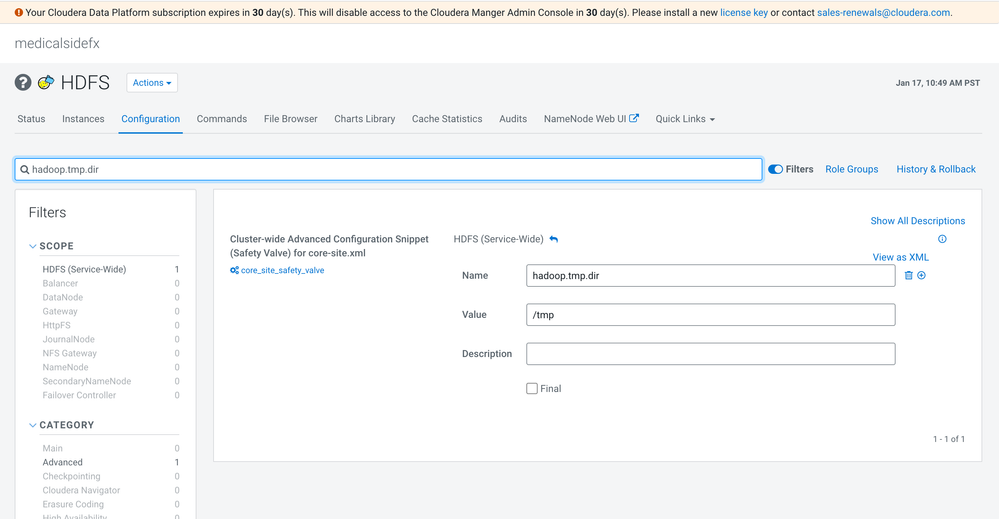

My "hadoop.tmp.dir" is defined here
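To make the mismatch above concrete, here is a rough Python sketch of how a Hadoop-style property such as `yarn.nodemanager.local-dirs` ends up with an effective value: later configuration files override earlier ones, and `${...}` references are expanded from other properties. This is an illustration only, not Hadoop's actual `Configuration` code. The `hadoop.tmp.dir` value shown is what I believe the stock default to be, and the override is simply the two directories listed in this post, so treat both as assumptions.

```python
import re
import xml.etree.ElementTree as ET

# Illustrative stand-in for the shipped defaults (values assumed, not copied from a cluster).
DEFAULTS_XML = """
<configuration>
  <property><name>hadoop.tmp.dir</name><value>/tmp/hadoop-${user.name}</value></property>
  <property><name>yarn.nodemanager.local-dirs</name><value>${hadoop.tmp.dir}/nm-local-dir</value></property>
</configuration>
"""

# Illustrative stand-in for a site-level override, using the directories from this post.
SITE_XML = """
<configuration>
  <property>
    <name>yarn.nodemanager.local-dirs</name>
    <value>/media/sanjay/hdd02/yarn/nm,/media/sanjay/hdd03/yarn/nm</value>
  </property>
</configuration>
"""


def load_properties(xml_text):
    """Parse one Hadoop-style configuration document into a dict."""
    root = ET.fromstring(xml_text.strip())
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def resolve(name, props, max_depth=20):
    """Expand ${other.property} references; unknown references are left untouched."""
    value = props.get(name)
    if value is None:
        return None
    pattern = re.compile(r"\$\{([^}]+)\}")
    for _ in range(max_depth):
        expanded = pattern.sub(lambda m: props.get(m.group(1), m.group(0)), value)
        if expanded == value:
            break
        value = expanded
    return value


if __name__ == "__main__":
    defaults = load_properties(DEFAULTS_XML)
    site = load_properties(SITE_XML)
    # Defaults only: roughly what a config sourced from yarn-default.xml would show.
    print(resolve("yarn.nodemanager.local-dirs", defaults))
    # Defaults plus site override: later entries win.
    print(resolve("yarn.nodemanager.local-dirs", {**defaults, **site}))
```

If the job configuration reports `yarn-default.xml` as the source, one possible reading is that the configuration loaded on that side never saw the override set in Cloudera Manager. Comparing the resolved value on each node against what Cloudera Manager shows may help narrow down which configuration is actually in effect.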

Created 03-30-2023 04:09 AM

@Kartik_Agarwal's suggestion worked for me.

I had the same problem as you when I tried to insert a row into a table.

I removed the /data/tmp/hadoop/mapred directory from all the nodes and restarted the service, and then it worked.

Created 03-30-2023 04:11 AM

@jjjjanine Thanks for providing your valuable inputs.