Cloudera Community: Support Questions

putHDFS no output - logs?

Labels: Apache Hadoop, Apache NiFi

Created on 09-24-2016 11:54 AM - edited 08-18-2019 03:53 AM

Hello,

I am a newbie at Hadoop and NiFi. Hopefully that'll change soon.

I have installed a Hortonworks sandbox and added HDF-2.0.0.0 to it, installing and running NiFi as a service.

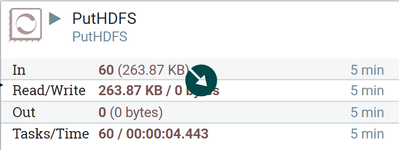

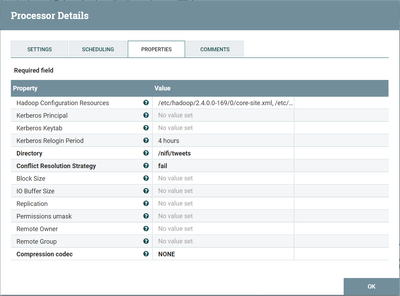

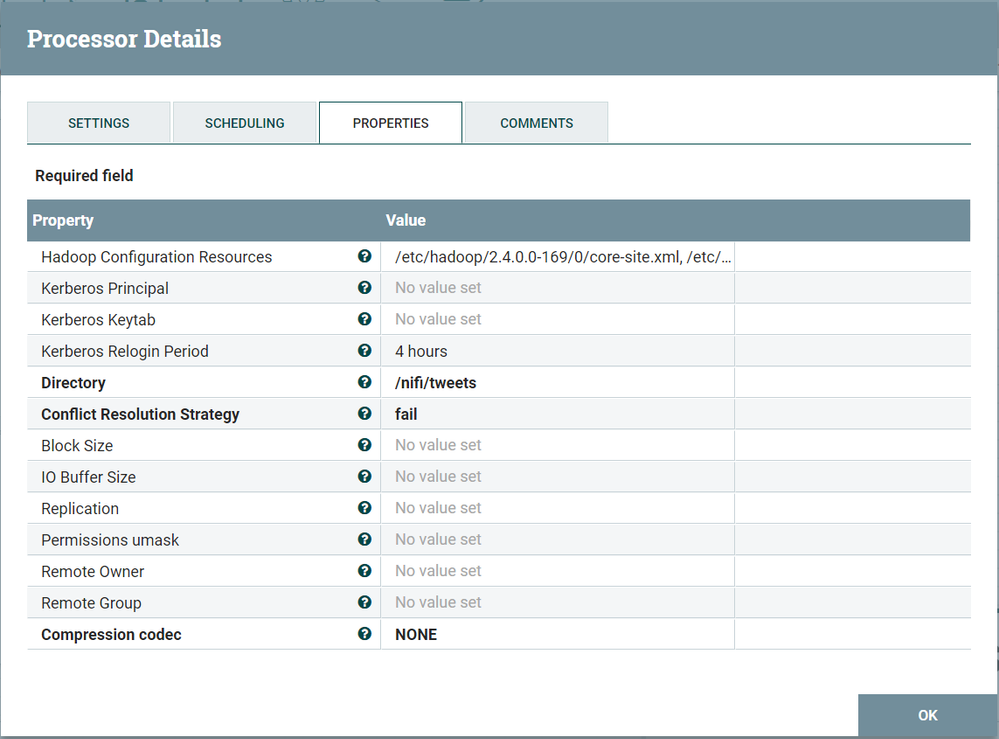

I have configured a dataflow based on the "Pull from Twitter gardenhose" template. It seems to work fine, except that there's no output from the putHDFS processor.

There are no messages or warnings in the NiFi UI, except when I changed the Directory property to "hdfs://sanbox.hortonworks.com/....".

I suspect that I have specified the wrong core-site.xml and/or hdfs-site.xml files (there are a dozen of each on the sandbox...), but I don't know where to look for error descriptions. It could also be related to the fact that I don't run the sandbox on localhost but on the LAN (with the VirtualBox network setting set to "bridged").

The files logs/nifi-app.log, nifi-bootstrap.log and nifi-user.log contain nothing about this process.

Update: I found the bulletin level setting and set it to DEBUG. That already helps. Can I view the bulletins somewhere outside the UI? Only the last three are shown...

Can anyone point out to me where to find the error logs for this processor?

Many thanks for reading this far already 🙂

Created 09-24-2016 01:47 PM

I believe you should be able to use /etc/hadoop/conf/core-site.xml and /etc/hadoop/conf/hdfs-site.xml in the PutHDFS "Hadoop Configuration Resources" property, and if you are running NiFi/HDF directly on the sandbox, there shouldn't be any connectivity problems.

Do you have the relationships of PutHDFS going anywhere, or are they auto-terminated?

It would be helpful to route them somewhere to see whether the processor considers each FlowFile a success or a failure. For example, you could create two LogAttribute processors, one for success and one for failure, and see which one receives the FlowFiles.
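Because the sandbox holds many copies of core-site.xml, one quick way to tell which copy points at the right NameNode is to read its `fs.defaultFS` property. The following is a minimal Python sketch under stated assumptions: it uses only the standard library, assumes the usual Hadoop layout of `<property>` elements each holding a `<name>` and a `<value>`, and ignores XInclude and `${var}` expansion. The helper name `read_hadoop_property` and the sample hostname and port are illustrative, not taken from this thread.

```python
import xml.etree.ElementTree as ET


def read_hadoop_property(xml_text, name):
    """Return the <value> of the named <property> in a Hadoop *-site.xml, or None."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        # Compare the trimmed <name> text against the requested key.
        if prop.findtext("name", default="").strip() == name:
            value = prop.findtext("value")
            return value.strip() if value is not None else None
    return None  # property not defined in this file


# Illustrative sample; on the sandbox you would instead read the real file, e.g.
# with open("/etc/hadoop/conf/core-site.xml") as f: text = f.read()
sample = """<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://sandbox.hortonworks.com:8020</value>
  </property>
</configuration>"""

print(read_hadoop_property(sample, "fs.defaultFS"))
```

Comparing the printed URI across the candidate files makes it easy to spot a copy whose hostname does not resolve from the machine running NiFi.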

Created 09-26-2016 07:55 AM

Hi Bryan,

Thanks for your sound advice. I entered the core-site.xml and hdfs-site.xml paths you suggested and created the LogAttribute processors. The output is now written to HDFS, and the LogAttribute "success" flow is populating accordingly.

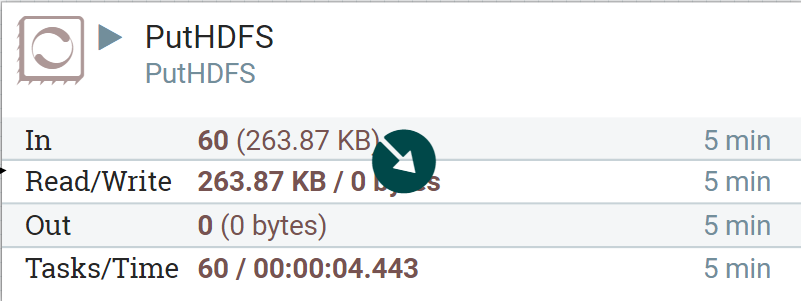

The bytes-written amount remains 0, though. Possibly a bug?

Created 09-26-2016 11:35 AM

The Read/Write stats on the face of each processor tell you how much content that processor read from or wrote to NiFi's content repository. They are not intended to tell you how much data was sent to the next processor or to an external system; their purpose is to help dataflow managers see which processors in their dataflows are disk-I/O intensive. The "Out" stat tells you how many FlowFiles were routed out of the processor onto one or more of its output relationships.

For a processor like PutHDFS, it is typical to auto-terminate the "success" relationship and loop the "failure" relationship back onto the PutHDFS processor itself. Any FlowFile routed to "success" has been confirmed as delivered to HDFS. FlowFiles routed to "failure" could not be delivered and should produce a bulletin and log messages explaining why. If the failure relationship is looped back onto PutHDFS, NiFi will try again to deliver the file once the FlowFile's penalty has expired.

Matt
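The retry behaviour Matt describes (failure looped back onto PutHDFS, retried only after the penalty expires) can be illustrated with a toy simulation. This Python sketch is not NiFi code: `FlowFile`, `run_put_loop`, and the `deliver` callback are hypothetical names, time is simulated in whole seconds, and the 30-second penalty is an assumption chosen to match NiFi's default Penalty Duration.

```python
from dataclasses import dataclass

PENALTY_SECONDS = 30.0  # assumption: mirrors NiFi's default "Penalty Duration" of 30 sec


@dataclass
class FlowFile:
    name: str
    penalized_until: float = 0.0  # simulated time before which it must not be retried
    attempts: int = 0


def run_put_loop(queue, deliver, max_time=300.0, tick=1.0):
    """Simulate a put processor whose failure relationship loops back to itself.

    Returns (delivered names, FlowFiles still queued, simulated end time).
    """
    delivered = []
    now = 0.0
    while queue and now <= max_time:
        # Only FlowFiles whose penalty has expired are eligible to run.
        ready = [ff for ff in queue if ff.penalized_until <= now]
        for ff in ready:
            queue.remove(ff)
            ff.attempts += 1
            if deliver(ff, now):
                # "success": confirmed delivery, relationship auto-terminated.
                delivered.append(ff.name)
            else:
                # "failure": penalize the FlowFile and route it back to the same processor.
                ff.penalized_until = now + PENALTY_SECONDS
                queue.append(ff)
        now += tick
    return delivered, queue, now


def flaky_deliver(ff, now):
    # Hypothetical target that only becomes reachable at t = 45 s.
    return now >= 45.0


tweets = [FlowFile("tweet-1"), FlowFile("tweet-2")]
done, left, end = run_put_loop(list(tweets), flaky_deliver)
print(done)                            # ['tweet-1', 'tweet-2']
print([ff.attempts for ff in tweets])  # [3, 3]: fails at t=0 and t=30, succeeds at t=60
```

The penalty is what stops a looped failure relationship from retrying an unreachable target on every scheduling cycle.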

Created 09-26-2016 12:06 PM

Hello Matt,

Thanks for clarifying. So, no need to report...