Cloudera Community: Support Questions

"org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version" error

Labels: Apache Ambari, Apache Hive

Created 02-08-2016 08:44 AM

Hi,

I am unable to start the "Hive Metastore" service through Apache Server; I keep getting the error below. The material I found online suggests that this can happen when schema information is read from an older metastore. However, I used Ambari to install the cluster and all the services, so I am sure the metastore should be up to date. I have also observed that the "HiveServer2" service is unstable: when I start it, it comes up and is shown as green in the dashboard, but after some time it stops on its own, and I do not know why. When I started writing this post it was green; by the time I finished, it was red, so I restarted it.

I am adding some more information. The "MySQL Server" service is always red, and its status is always "Install Failed". I found out through this forum that, because I had installed MySQL manually during the first step of the Ambari installation, Ambari cannot install this service. Could this be causing the issue? Here is the error:

Metastore connection URL: jdbc:mysql://mycomputer.mydomain.com/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: hiveuser

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Created 02-08-2016 09:13 AM

This occurs if the database exists but is somehow corrupted. "Failed to get schema version" means the tool cannot find the table entry that contains the Hive version. Can you run any query against it? I wouldn't think so.
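Hive keeps the metastore schema version in the `VERSION` table (column `SCHEMA_VERSION`) of the metastore database. A minimal Python sketch, assuming the standard `mysql` command-line client is installed, that turns the connection URL from the error output into a command that queries that table directly. `version_check_command` is a hypothetical helper; it only builds the command and connects to nothing.

```python
from __future__ import annotations

import shlex
from urllib.parse import urlparse


def version_check_command(jdbc_url: str, user: str) -> list[str]:
    """Build a mysql CLI command that reads the Hive metastore schema version."""
    prefix = "jdbc:"
    if not jdbc_url.startswith(prefix):
        raise ValueError(f"not a JDBC URL: {jdbc_url}")
    parsed = urlparse(jdbc_url[len(prefix):])  # mysql://host[:port]/db?params
    if parsed.scheme != "mysql" or not parsed.hostname:
        raise ValueError(f"expected jdbc:mysql://host/db, got {jdbc_url}")
    port = parsed.port or 3306  # MySQL default port when the URL omits it
    database = parsed.path.lstrip("/")
    return [
        "mysql",
        "-h", parsed.hostname,
        "-P", str(port),
        "-u", user,
        "-p",  # bare -p: prompt for the password instead of exposing it
        database,
        "-e", "SELECT SCHEMA_VERSION FROM VERSION;",
    ]


if __name__ == "__main__":
    url = "jdbc:mysql://mycomputer.mydomain.com/hive?createDatabaseIfNotExist=true"
    # Print a shell-quoted command to copy into a terminal.
    print(shlex.join(version_check_command(url, "hiveuser")))
```

If this query fails or the table is missing, the metastore database itself is the problem rather than the Hive services.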

How about recreating it and pointing Ambari to the new, correct one? Guidelines below:
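A minimal sketch of the recreate step, assuming the database and user names from the connection URL in the question (`hive`, `hiveuser`) and a MySQL host pattern of `'%'` (an assumption; use the host the metastore actually connects from). The hypothetical helper `recreate_metastore_sql` only prints SQL to paste into `mysql -u root -p`; it does not connect to anything, and it assumes the user already exists.

```python
from __future__ import annotations


def recreate_metastore_sql(db: str = "hive", user: str = "hiveuser",
                           host: str = "%") -> list[str]:
    """Return MySQL statements that recreate an empty Hive metastore database.

    WARNING: DROP DATABASE deletes all existing Hive metadata
    (table definitions, partitions, and so on).
    """
    # Keep quoting trivial: refuse names that would need escaping.
    for name in (db, user, host):
        if "`" in name or "'" in name:
            raise ValueError(f"refusing to quote unusual name: {name!r}")
    return [
        f"DROP DATABASE IF EXISTS `{db}`;",
        f"CREATE DATABASE `{db}`;",
        # Assumes the MySQL account already exists (it is in the Ambari config).
        f"GRANT ALL PRIVILEGES ON `{db}`.* TO '{user}'@'{host}';",
    ]


if __name__ == "__main__":
    print("\n".join(recreate_metastore_sql()))
```

Because the drop removes all metadata, this only makes sense on a fresh or disposable install. An empty database still needs its schema created, for example with `schematool -initSchema -dbType mysql`, which comes up later in this thread.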

Created 03-28-2016 06:19 PM

Hello guys,

I am facing the same issue, and I followed the same steps you took to resolve it.

But I am still facing the issue. I have the MySQL root password, but when I try to drop the hive database and run the command show databases;, it gives me this error:

ERROR 1820 (HY000): You must reset your password using ALTER USER statement before executing this statement.

Please tell me how to fix it as soon as possible.

Thanks in advance.

Created 03-28-2016 06:46 PM

That message indicates that your user's password may have expired. However, you should still be able to run SET statements to change it:

SET PASSWORD = PASSWORD('new_updated_password');

After this, your problem should go away.
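The error text itself points at `ALTER USER`, and which statement works depends on the server version: `ALTER USER ... IDENTIFIED BY` exists from MySQL 5.7.6, where `SET PASSWORD = PASSWORD(...)` became deprecated, and the `PASSWORD()` function is gone in MySQL 8.0. A small Python sketch that picks the statement for a given server version; `password_reset_sql` is a hypothetical helper, and the version cutoff is the only logic it encodes.

```python
from __future__ import annotations


def password_reset_sql(server_version: tuple[int, int, int], new_password: str) -> str:
    """Return a statement that resets the current user's expired password."""
    # Escape backslashes and single quotes for a MySQL string literal.
    literal = new_password.replace("\\", "\\\\").replace("'", "''")
    if server_version >= (5, 7, 6):
        # USER() refers to the account you are currently logged in as.
        return f"ALTER USER USER() IDENTIFIED BY '{literal}';"
    # Older servers (e.g. MySQL 5.6) only support the SET PASSWORD form.
    return f"SET PASSWORD = PASSWORD('{literal}');"
```

The server version appears on the `Server version:` line that the `mysql` client prints when it connects, so you can read it even while the expired password blocks other statements.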

Created 07-25-2016 03:40 PM

Hi, I am following this post, but when I execute the command "$HIVE_HOME/bin/schematool -initSchema -dbType mysql"

I get a "No such file or directory" error.

Created 07-25-2016 03:42 PM

You need to replace $HIVE_HOME with the actual path, /usr/hdp/<version>/hive/.

Also, the node where you run it needs a Hive client installed.
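To find that path on an HDP node, you can scan the versioned install directories. A minimal Python sketch, assuming the `/usr/hdp/<version>/hive/bin/schematool` layout described above and that the `current` entry holds only symlinks rather than a versioned install; `find_hive_homes` is a hypothetical helper.

```python
from __future__ import annotations

from pathlib import Path


def find_hive_homes(hdp_root: str = "/usr/hdp") -> list[Path]:
    """Return <hdp_root>/<version>/hive directories that contain bin/schematool."""
    root = Path(hdp_root)
    if not root.is_dir():
        return []
    homes = []
    for version_dir in sorted(root.iterdir()):
        if version_dir.name == "current":
            continue  # assumption: symlinks only, not a versioned install
        hive_home = version_dir / "hive"
        if (hive_home / "bin" / "schematool").is_file():
            homes.append(hive_home)
    return homes


if __name__ == "__main__":
    for home in find_hive_homes():
        print(home / "bin" / "schematool")
```

An empty result is consistent with the second point above: the Hive client is probably not installed on that node.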

Created 07-25-2016 04:06 PM

Thanks, I was able to find the actual path. But how do I replace it? Every time, I get "HIVE_HOME not found".

Please share how to install the Hive client.

Is this the right document to refer to? https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.0/bk_installing_manually_book/content/ch_inst...

Created on 07-25-2016 04:11 PM - edited 08-19-2019 02:29 AM

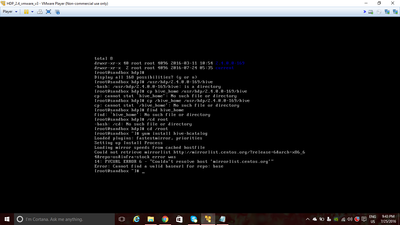

@Benjamin Leonhardi I tried to install it through "yum install hive-hcatalog".

Please see the attached screenshot.

Created 07-25-2016 04:35 PM

Are you using HDP? Then you would install the clients through Ambari: Host -> Add Client.

Created 07-25-2016 04:50 PM

Yes, I am using HDP

Created 07-25-2016 04:51 PM

So in Ambari, go to Hosts, select the host you want, and press the big Add+ button.
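The same step can also be scripted against the Ambari REST API: create the `HIVE_CLIENT` host component on the host, then set its state to `INSTALLED`. This sketch uses only the standard library and just builds the two requests. The Ambari URL (8080 is Ambari's default port), cluster name, host name, and credentials are placeholders, and `hive_client_requests` is a hypothetical helper.

```python
from __future__ import annotations

import base64
import json
import urllib.request


def hive_client_requests(ambari_url: str, cluster: str, host: str,
                         user: str, password: str) -> list[urllib.request.Request]:
    """Build the POST (add component) and PUT (install it) requests for HIVE_CLIENT."""
    url = (f"{ambari_url.rstrip('/')}/api/v1/clusters/{cluster}"
           f"/hosts/{host}/host_components/HIVE_CLIENT")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "Authorization": f"Basic {token}",
        # Ambari rejects state-changing requests without this header.
        "X-Requested-By": "ambari",
    }
    add = urllib.request.Request(url, method="POST", headers=headers)
    install = urllib.request.Request(
        url,
        data=json.dumps({"HostRoles": {"state": "INSTALLED"}}).encode(),
        method="PUT",
        headers=headers,
    )
    return [add, install]
```

Send them in order, for example with `urllib.request.urlopen(req)` for each request.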

Created 07-25-2016 04:52 PM

You still will not have HIVE_HOME set, because the scripts set it dynamically. You need to replace that placeholder with /usr/hdp/<version>/hive, where <version> is the version directory you look up on your Linux host (for example, with ls /usr/hdp).
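With the placeholder replaced, you can build the command from earlier in the thread with an explicit path. A minimal Python sketch; `schematool_init_argv` is a hypothetical helper. Failing early with a clearer message than "No such file or directory" is a design choice of this sketch, not something schematool itself does.

```python
from __future__ import annotations

from pathlib import Path


def schematool_init_argv(hive_home: str, db_type: str = "mysql") -> list[str]:
    """Build `schematool -initSchema -dbType <db_type>` with an explicit path.

    Raises FileNotFoundError if <hive_home>/bin/schematool does not exist,
    which usually means a wrong version directory or no Hive client on this node.
    """
    tool = Path(hive_home) / "bin" / "schematool"
    if not tool.is_file():
        raise FileNotFoundError(
            f"{tool} not found; check the HDP version directory and that "
            "a Hive client is installed on this node"
        )
    return [str(tool), "-initSchema", "-dbType", db_type]
```

For example, `subprocess.run(schematool_init_argv("/usr/hdp/<version>/hive"), check=True)`, with `<version>` replaced by the directory you found.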