"org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version" error

- Labels: Apache Ambari, Apache Hive

Created 02-08-2016 08:44 AM

Hi,

I am unable to start the "Hive Metastore" service through Ambari; I keep getting the error below. What I found online suggests it can be caused by an attempt to read schema information from an older metastore. However, I used Ambari to install the cluster and all the services, so the metastore should be up to date. I have also noticed that the "HiveServer2" service is unstable: when I start it, it comes up and shows green in the dashboard, but after some time it stops on its own, and I do not know why. It was green when I started writing this post and red by the time I finished, so I restarted it.

Some more information: the "MySQL server" service is always red and its status is always "install failed". I found out through this forum that, because I had installed MySQL manually during the first step of the Ambari installation, this service cannot be installed. Is this causing the issue? Here is the error:

Metastore connection URL: jdbc:mysql://mycomputer.mydomain.com/hive?createDatabaseIfNotExist=true

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: hiveuser

org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

Created 02-08-2016 09:13 AM

This occurs if the database exists but is somehow corrupted. Being unable to read the schema version means the metastore cannot find the table entry that contains the Hive version. Can you run any query? I wouldn't think so.

How about recreating it and pointing Ambari to the new, correct one? Guidelines below:

Created 02-08-2016 09:44 AM

Hi,

I am unable to find where Hive is installed. When I run echo $HIVE_HOME, it comes out blank. I need the Hive path so that I can edit "hive-site.xml", but I do not know where to find that file. When I ran the find command for "hive-site.xml", I got a huge list. (I cannot paste the list here in the comment because it exceeds the character limit.) I am confused now: which hive-site.xml should I edit?

Created 02-08-2016 12:02 PM

Everything is installed in /usr/hdp. For example, with my version it's /usr/hdp/2.3.4.0-3485/hive/bin/.

Normally you just need to initialize the schema in a database of your choice (MySQL?), following the commands with the init schema step.

After that you need to point Hive to that store. If your cluster is managed by Ambari, you do not change the configuration in hive-site.xml; you do it in Ambari. (You use HDP, right?) There you can change the database connection settings under the Hive/Config/Advanced section. You cannot change hive-site.xml manually, because Ambari would overwrite it.
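Since echo $HIVE_HOME comes out blank, here is a small sketch for guessing it from the /usr/hdp layout mentioned above. The function name find_hive_home is made up for illustration, and the "current/hive-client" symlink is an assumption about typical HDP installs; check what actually exists on your node.

```shell
# Hypothetical helper: guess HIVE_HOME on an HDP node.
# Assumes the /usr/hdp/<version>/hive layout described above; the
# "current/hive-client" symlink is an assumption and may not exist.
find_hive_home() {
  root="${1:-/usr/hdp}"
  for candidate in "$root/current/hive-client" "$root"/*/hive; do
    # Accept the first directory that actually ships schematool.
    if [ -x "$candidate/bin/schematool" ]; then
      printf '%s\n' "$candidate"
      return 0
    fi
  done
  return 1
}

# Export HIVE_HOME only if a candidate was found.
if HIVE_HOME="$(find_hive_home)"; then
  export HIVE_HOME
  echo "HIVE_HOME=$HIVE_HOME"
else
  echo "No Hive installation found under /usr/hdp" >&2
fi
```

This only helps with running the Hive command-line tools. The connection settings themselves should still be changed in Ambari, not in hive-site.xml.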

Created 02-08-2016 12:40 PM

Yes, I am using HDP. The log also showed a permission issue, so I went into the configuration section and updated the password of the hive user. After I did this, I no longer see the schema version exception! Now, when I start the Hive Metastore service, I see the two errors listed at the end of this comment. I also tried to create a new database, but the option "New MySQL Database" is not clickable; it is disabled. The errors are:

SLF4J: Class path contains multiple SLF4J bindings.

org.apache.hadoop.hive.metastore.HiveMetaException:Schema initialization FAILED!

Created 02-08-2016 12:50 PM

@Pradeep kumar run this

$HIVE_HOME/bin/schematool -initSchema -dbType mysql

Also, check the link that I shared in my response. It discusses a permission issue too.

Created 04-15-2018 05:50 PM

Could you explain a little more? I tried the command above and it is not working; please see the attached snapshot (hive.jpg).

Can you advise me, please?

Created 02-08-2016 01:32 PM

Thanks, Benjamin. I will accept your answer here, as you were the first to give me some guidance on going to the Ambari configuration section and trying out the command. I had to do some additional steps, which you can see in my posted answer.

Created on 02-08-2016 12:16 PM - edited 08-19-2019 02:30 AM

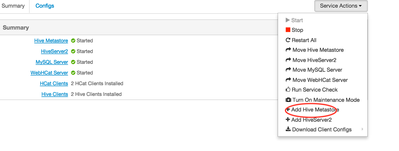

You can always add a new Hive metastore using Ambari if you don't want to use the existing MySQL that you installed.

I have experienced this issue in the past, and it had to do with the permissions. See link.

Created 02-08-2016 01:27 PM

I fixed all of these issues. This is what I did; I hope it helps users who are stuck in a similar situation. The error "org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version" was fixed by updating the password of the hive user in the Ambari configuration. It seems I had typed an incorrect password in the configuration section. After that I started getting schema reading errors, so I went to the mysql console, dropped the hive database, recreated it, and reassigned the hive user to the database. I also executed the command "$HIVE_HOME/bin/schematool -initSchema -dbType mysql" as suggested by Neeraj. After that it all worked perfectly fine! Special thanks to Neeraj Sabharwal, who has been helping me with all the errors. Thanks once again, Neeraj.
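For anyone repeating the drop/recreate step, a rough sketch of the statements involved is below. It is not the exact SQL that was run in this thread. The helper name metastore_reset_sql is made up, the database name and user are taken from the connection URL above, the '%' host pattern is an assumption, and the hive user is assumed to already exist in MySQL. The function only prints the statements so they can be reviewed first.

```shell
# Hypothetical helper: print the SQL to recreate the metastore database.
# Database name, user, and host pattern are placeholders; the MySQL user
# is assumed to exist already (this only re-grants privileges).
metastore_reset_sql() {
  db="$1"; user="$2"; host="$3"
  printf 'DROP DATABASE IF EXISTS %s;\n' "$db"
  printf 'CREATE DATABASE %s;\n' "$db"
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s';\n" "$db" "$user" "$host"
  printf 'FLUSH PRIVILEGES;\n'
}

# Review the statements first; nothing is executed against MySQL here.
metastore_reset_sql hive hiveuser '%'

# To apply (destructive: drops all existing metastore metadata):
#   metastore_reset_sql hive hiveuser '%' | mysql -u root -p
#   "$HIVE_HOME/bin/schematool" -initSchema -dbType mysql
```

Dropping the database discards every table definition stored in the metastore, so this only makes sense on a fresh cluster like the one in this thread, or after taking a backup.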