this version of libhadoop was built without snappy support.

Labels: Apache Hadoop, HDFS

Created on 02-23-2016 02:37 PM - edited 09-16-2022 03:05 AM

Hi,

I hope this is the right place to ask the following question 🙂

I am trying to put a file into HDFS with Snappy compression. I wrote some Java code for that, and when I run it on my cluster I get the following exception:

Exception in thread "main" java.lang.RuntimeException: native snappy library not available: this version of libhadoop was built without snappy support.

at org.apache.hadoop.io.compress.SnappyCodec.checkNativeCodeLoaded(SnappyCodec.java:65)

at org.apache.hadoop.io.compress.SnappyCodec.getCompressorType(SnappyCodec.java:134)

at org.apache.hadoop.io.compress.CodecPool.getCompressor(CodecPool.java:150)

at org.apache.hadoop.io.compress.CompressionCodec$Util.createOutputStreamWithCodecPool(CompressionCodec.java:131)

at org.apache.hadoop.io.compress.SnappyCodec.createOutputStream(SnappyCodec.java:99)

Apparently the Snappy library is not available. I checked on the OS with the command "rpm -qa | less | grep snappy", and both snappy and snappy-devel are installed.

In the HDFS configuration (core-site.xml), org.apache.hadoop.io.compress.SnappyCodec is present in the io.compression.codecs property.

Does anyone have an idea why it's not working?

Thanks in advance

Created on 03-17-2016 08:39 AM - edited 08-18-2019 05:34 AM

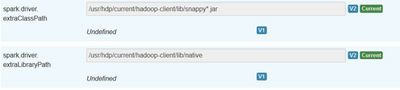

The problem is solve by making the following change in the spark config:

Thanks for the help guys!

Created 02-24-2016 08:59 AM

Thanks for the reply. In my case that is not the solution, because when I run

hadoop checknative -a

I see that snappy is reported as true, located at /usr/hdp/2.3.4.0-3485/hadoop/lib/native/libsnappy.so.1.

Created 03-03-2016 01:05 PM

We have the same problem.

> hadoop checknative -a

snappy: true /usr/hdp/2.3.4.0-3485/hadoop/lib/native/libsnappy.so.1

> rpm -qa snappy

snappy-1.1.0-3.el7.x86_64

What else can I check?

Created 03-08-2016 11:09 AM

Please confirm that you have the following property set correctly in hadoop-env.sh

Created 03-14-2016 10:31 AM

@Artem Ervits which property?

Created 03-14-2016 11:22 AM

Created 03-17-2016 09:18 AM

I have recompiled Hadoop with Snappy support:

svn checkout http://svn.apache.org/repos/asf/hadoop/common/tags/release-2.5.0

mvn package -Drequire.snappy -Pdist,native,src -DskipTests -Dtar

but I got the same exception again.

I have also checked hadoop-env.sh:

export JAVA_LIBRARY_PATH=${JAVA_LIBRARY_PATH}

Created 06-09-2016 01:15 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just want to add that spark.driver.extraClassPath does not seem to be necessary, at least in my case, when writing a Snappy-compressed file from Spark using:

rdd.saveAsTextFile(path, SnappyCodec.class)

Created 03-11-2018 08:32 PM

For me, adding the line below to spark-defaults.conf helped. The paths are based on the packages installed on my test cluster.

spark.executor.extraLibraryPath /usr/hdp/current/hadoop-client/lib/native/:/usr/hdp/current/share/lzo/0.6.0/lib/native/Linux-amd64-64/
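Several posts above show `hadoop checknative -a` reporting snappy as true while the job still fails, which suggests the JVM running the job may not be looking in the same native directory as the `hadoop` command. As a rough way to check this, here is a minimal sketch that lists which directories on a JVM's `java.library.path` contain files starting with `libhadoop` or `libsnappy`. The class name `NativeLibPathCheck` and the helper `findLibs` are made up for illustration and are not part of Hadoop or Spark. It only checks that files are present. It does not prove that libhadoop was built with Snappy support or that the libraries can actually be loaded.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical diagnostic helper, not part of Hadoop or Spark.
class NativeLibPathCheck {

    // Returns the paths of all files found in the directories of libraryPath
    // whose file name starts with namePrefix (e.g. "libsnappy" also matches
    // libsnappy.so.1). Missing or unreadable directories are skipped.
    public static List<String> findLibs(String libraryPath, String namePrefix) {
        List<String> hits = new ArrayList<>();
        if (libraryPath == null || libraryPath.isEmpty()) {
            return hits;
        }
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue;
            }
            File[] files = new File(dir).listFiles();
            if (files == null) {
                continue; // not a directory, or not readable
            }
            for (File f : files) {
                if (f.getName().startsWith(namePrefix)) {
                    hits.add(f.getPath());
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Library search path of the JVM that runs this code.
        String path = System.getProperty("java.library.path");
        System.out.println("java.library.path = " + path);
        for (String prefix : new String[] {"libhadoop", "libsnappy"}) {
            List<String> hits = findLibs(path, prefix);
            System.out.println(prefix + ": " + (hits.isEmpty() ? "not found" : hits));
        }
    }
}
```

Running the same check from inside the Spark driver or an executor (rather than from a plain shell) should show whether the native directory from `hadoop checknative -a` is visible to that particular JVM. If it is not, that would be consistent with the library-path settings mentioned in this thread making the difference.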
