Use of NiFi with Kafka and Ranger

Labels: Apache Kafka, Apache NiFi, Apache Ranger

Created on 10-07-2019 03:37 AM - last edited on 10-07-2019 08:08 AM by ask_bill_brooks


Hey, folks,

I have a Kerberized server and I use Ranger for policies. From the console I can publish and consume Kafka messages, but now I need to do the same through NiFi and I'm quite lost. Could someone help me?

Thank you

Created 10-08-2019 10:00 AM


You can try the flow below, which is for testing purposes only:

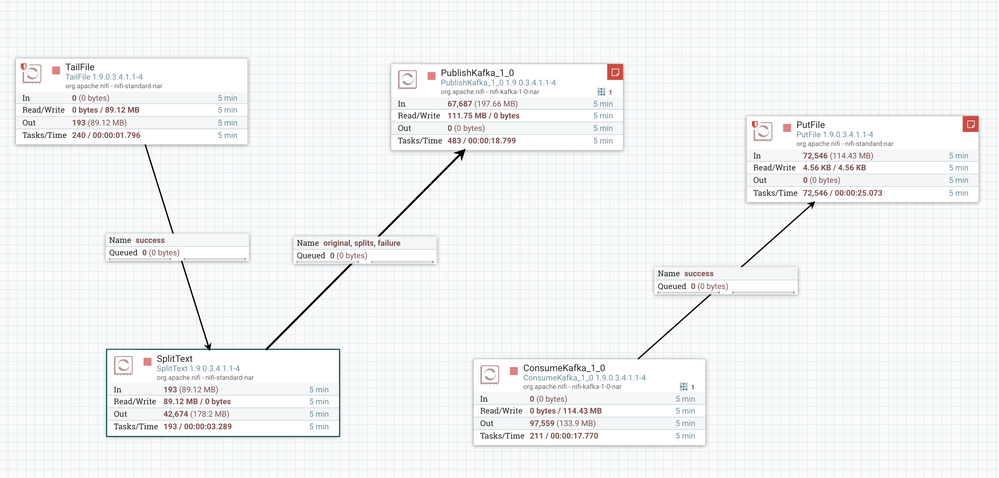

Basically, I have a TailFile processor passing data through SplitText; the resulting messages are sent to PublishKafka_1_0 (use this processor for this test). Finally, I created a consumer that reads from the same topic configured in PublishKafka_1_0 and stores the data in the file system with PutFile.

In PutFile I have set Maximum File Count to 10 to avoid excessive space usage in the file system.
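To get a feel for what the SplitText and PutFile steps do before building the flow, here is a rough Python sketch. It is not NiFi code: `split_text` and `put_file` are hypothetical helper names, skipping blank lines is a choice made for this sketch, and the refusal to write once the directory holds 10 files is only my understanding of how the Maximum File Count setting behaves.

```python
import tempfile
from pathlib import Path


def split_text(content: str) -> list[str]:
    """Turn a block of tailed text into one message per non-empty line."""
    return [line for line in content.splitlines() if line.strip()]


def put_file(directory: Path, name: str, data: str, max_files: int = 10) -> bool:
    """Write data to directory/name unless max_files files already exist.

    Returns False when the cap is reached (a stand-in for the message
    not being written by the real processor).
    """
    existing = sum(1 for p in directory.iterdir() if p.is_file())
    if existing >= max_files:
        return False
    (directory / name).write_text(data)
    return True


if __name__ == "__main__":
    # Twelve log lines stand in for what TailFile would pick up.
    tailed = "\n".join(f"log line {i}" for i in range(12))
    with tempfile.TemporaryDirectory() as tmp:
        out_dir = Path(tmp)
        results = [
            put_file(out_dir, f"msg-{i}.txt", msg)
            for i, msg in enumerate(split_text(tailed))
        ]
        print(f"written={sum(results)} rejected={results.count(False)}")
```

With the cap in place the output directory never grows past 10 files, so a test flow left running cannot fill the disk.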

Created 10-07-2019 11:59 AM


You can start by testing a flow like the one below:

TailFile --> PublishKafka_1_0 (or PublishKafka_2_0, depending on your Kafka version)

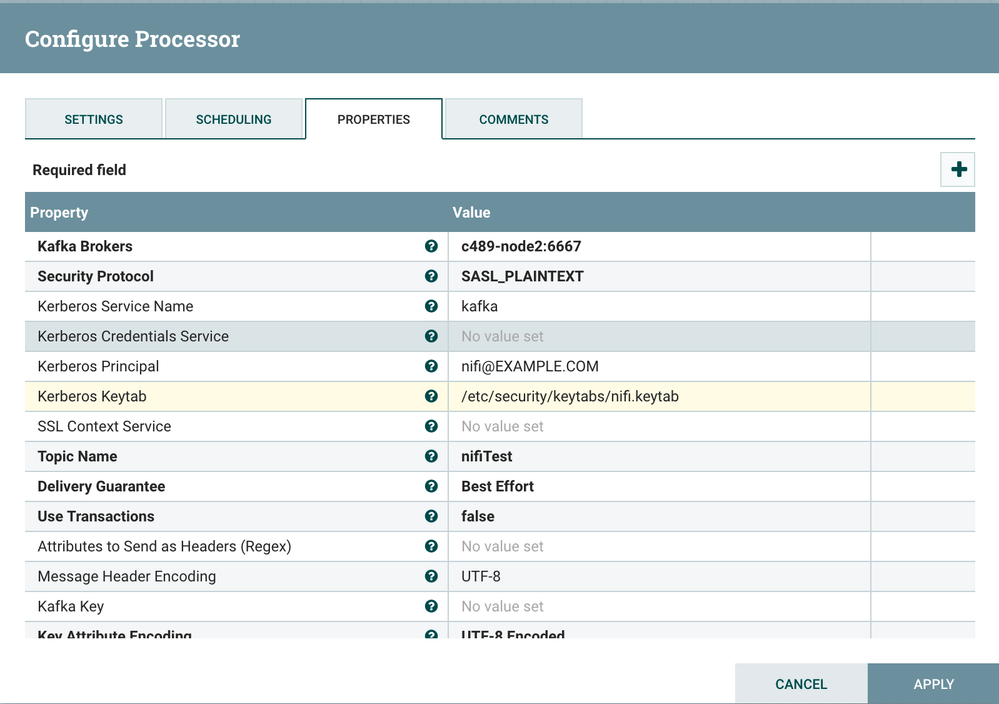

In PublishKafka you can use a configuration like the example below:

- Ensure that the principal has Ranger authorization to publish data to the topic.

- In Kafka Brokers, provide the brokers' FQDNs; do not use localhost or IP addresses.
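As a quick sanity check for the broker rule above, a small Python helper can flag entries that are not FQDNs before you paste the list into the processor. This is only a sketch: `check_brokers` is a hypothetical helper, and every value in `publish_kafka_settings` (hosts, port, principal, keytab path, topic) is a placeholder. The property names are written as I recall them from the PublishKafka_1_0 processor, so verify them against your NiFi version.

```python
import ipaddress


def check_brokers(brokers: str) -> list[str]:
    """Return the problems found in a comma-separated host:port broker list."""
    problems = []
    for entry in brokers.split(","):
        entry = entry.strip()
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            problems.append(f"{entry}: expected host:port")
            continue
        if host.lower() == "localhost":
            problems.append(f"{entry}: localhost is not an FQDN")
            continue
        try:
            # Succeeds only when the host part is a literal IPv4/IPv6 address.
            ipaddress.ip_address(host.strip("[]"))
            problems.append(f"{entry}: IP address used instead of an FQDN")
            continue
        except ValueError:
            pass
        if "." not in host:
            problems.append(f"{entry}: short hostname, not fully qualified")
    return problems


# Placeholder values only; adjust to your cluster.
publish_kafka_settings = {
    "Kafka Brokers": "broker1.example.com:6667,broker2.example.com:6667",
    "Security Protocol": "SASL_PLAINTEXT",
    "Kerberos Service Name": "kafka",
    "Kerberos Principal": "nifi@EXAMPLE.COM",
    "Kerberos Keytab": "/etc/security/keytabs/nifi.keytab",
    "Topic Name": "test-topic",
}

if __name__ == "__main__":
    for problem in check_brokers(publish_kafka_settings["Kafka Brokers"]):
        print(problem)
```

Kerberos builds service principals from the hostname the client connects to, which is why localhost or a raw IP tends to fail authentication even when the broker is reachable.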

Created 10-08-2019 12:26 AM


Hello ManuelCalvo

I hope it's not asking too much, but how would I set up the relationship between publisher and consumer? I'm a little new to big data. Thank you very much for the info.

Greetings
