- Apache Livy - Apache NiFi - Apache Spark : Execut...

Created on 03-14-2018 09:20 PM - edited 08-17-2019 08:22 AM

What: Executing Scala Apache Spark Code in JARS from Apache NiFi

Why: You don't want all of your Scala code in one continuous block, as in Apache Zeppelin

Tools: Apache NiFi - Apache Livy - Apache Spark - Scala

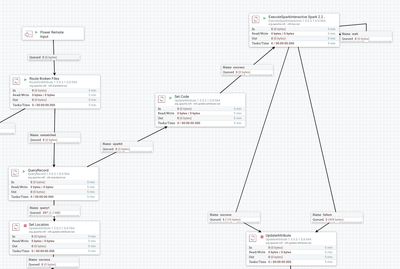

Flows:

Option 1: Inline Scala Code

Apache Zeppelin Running the Same Scala Job (you have to add the jar to the Spark interpreter and restart it)

Grafana Charts of Apache NiFi Run

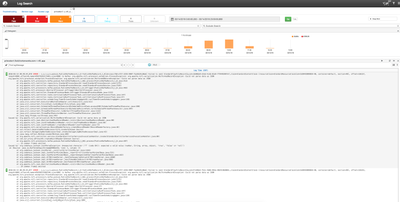

Log Search Helps You Find Errors

Run Code For Your Spark Class

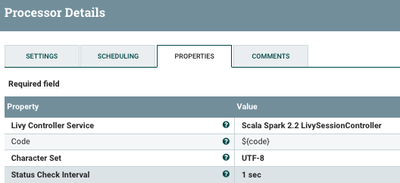

Setting up Your ExecuteSparkInteractive Processor

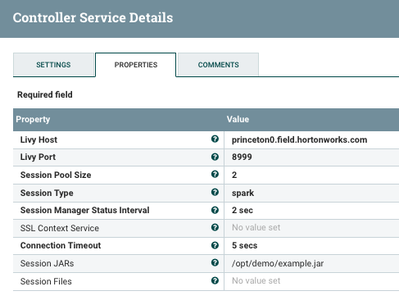

Setting Up Your Spark Service for Scala

Tracking the Job in Livy UI

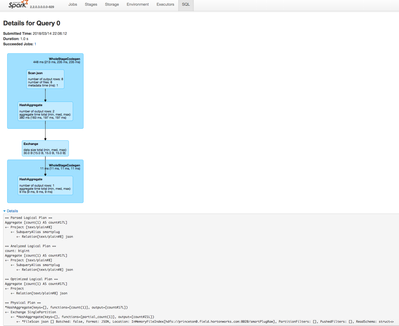

Tracking the Job in Spark UI

My first idea was to pull code from Git, put it into a NiFi attribute, and run it directly. Bigger projects, though, have many classes and dependencies and may require a full IDE and an SBT build cycle. Once I have built a Scala jar, I want to run against that.
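For the SBT build cycle mentioned above, a build definition might look roughly like the following sketch. The project name, version, and Scala version are assumptions inferred from the `example_2.11-1.0.jar` artifact name and the Spark 2.2.0 links that appear later in this post; the actual build file is in the GitHub repository linked below and may differ.

```scala
// build.sbt (sketch; values are assumptions, not copied from the author's project)
name := "example"
version := "1.0"
scalaVersion := "2.11.12"

// Spark is already on the cluster, so it is marked "provided" and left out of the jar.
libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.2.0" % "provided"
```

With settings like these, `sbt package` would write the jar to `target/scala-2.11/example_2.11-1.0.jar`.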

Example Code

package com.dataflowdeveloper.example

import org.apache.spark.sql.SparkSession

class Example () {

def run( spark: SparkSession) {

try {

println("Started")

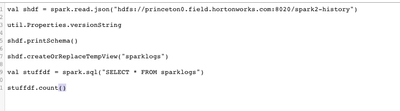

val shdf = spark.read.json("hdfs://princeton0.field.hortonworks.com:8020/smartPlugRaw")

shdf.printSchema()

shdf.createOrReplaceTempView("smartplug")

val stuffdf = spark.sql("SELECT * FROM smartplug")

stuffdf.count()

println("Complete.")

} catch {

case e: Exception =>

e.printStackTrace();

}

}

}

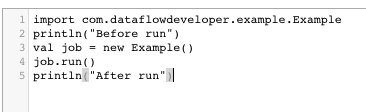

Run that with:

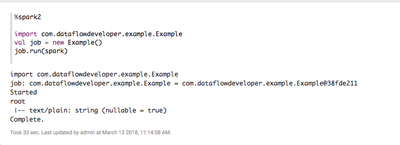

    import com.dataflowdeveloper.example.Example

    println("Before run")
    val job = new Example()
    job.run(spark)
    println("After run")

After the run:

    {"text\/plain":"After run"}

Important Tip

You need to list your jar in Session.jars on the Livy session controller service and put it at the same path on HDFS.

I used /opt/demo/example.jar on Linux and

hdfs:// /opt/demo/example.jar on HDFS.

Make sure Livy and NiFi have read permissions on both.
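Behind the NiFi processor, Livy exposes a REST API: a session is created with `POST /sessions` (where the `jars` list goes), and code is run with `POST /sessions/{sessionId}/statements`. The sketch below only builds the two JSON bodies so you can see what has to line up. The object name `LivyPayloads` and the `hdfs://server:8020` host are illustrative assumptions, and the escaping helper is deliberately minimal rather than a full JSON encoder.

```scala
object LivyPayloads {

  // Minimal JSON string escaping: enough for short code snippets and paths,
  // not a full JSON encoder (other control characters are not handled).
  def jsonString(s: String): String = {
    val sb = new StringBuilder("\"")
    s.foreach {
      case '"'  => sb.append("\\\"")
      case '\\' => sb.append("\\\\")
      case '\n' => sb.append("\\n")
      case '\r' => sb.append("\\r")
      case '\t' => sb.append("\\t")
      case c    => sb.append(c)
    }
    sb.append('"').toString
  }

  // Body for POST /sessions: a Scala ("spark") session with extra jars attached.
  def sessionBody(jars: Seq[String]): String = {
    val jarList = jars.map(jsonString).mkString(", ")
    s"""{"kind": "spark", "jars": [$jarList]}"""
  }

  // Body for POST /sessions/{sessionId}/statements: the code to run in that session.
  def statementBody(code: String): String = {
    val encoded = jsonString(code)
    s"""{"code": $encoded}"""
  }

  def main(args: Array[String]): Unit = {
    val code =
      """import com.dataflowdeveloper.example.Example
        |val job = new Example()
        |job.run(spark)""".stripMargin

    // Assumed HDFS location of the jar; use the path you actually uploaded to.
    println(sessionBody(Seq("hdfs://server:8020/opt/demo/example.jar")))
    println(statementBody(code))
  }
}
```

If the jar is missing from the session's `jars` list or is not readable, the `import` in the statement is the first thing that fails, which is why the permissions check above matters.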

GitHub: https://github.com/tspannhw/livysparkjob

GitHub Release: https://github.com/tspannhw/livysparkjob/releases/tag/v1.1

Apache NiFi Flow Example

Created on 03-15-2018 01:07 AM


It is also easy to run this code from the Spark shell, with no connection to NiFi:

runshell.sh

/usr/hdp/current/spark2-client/bin/spark-shell --packages org.apache.spark:spark-sql-kafka-0-10_2.11:2.2.0,org.apache.spark:spark-streaming-kafka-0-8_2.11:2.2.0 --jars /opt/demo/example.jar

Created on 03-15-2018 01:30 PM


https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.6.4/bk_spark-component-guide/content/spark-data...

http://spark.apache.org/docs/2.2.0/

http://spark.apache.org/docs/2.2.0/rdd-programming-guide.html

http://spark.apache.org/docs/2.2.0/quick-start.html

curl -H "Content-Type: application/json" -H "X-Requested-By: admin" -X POST -d '{"file": "/apps/example.jar","className": "com.dataflowdeveloper.example.Links"}' http://server:8999/batches

curl -H "Content-Type: application/json" -H "X-Requested-By: admin" -X POST -d '{"file": "hdfs://server:8020/apps/example_2.11-1.0.jar","className": "com.dataflowdeveloper.example.Links"}' http://server:8999/batches
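The two curl calls above submit the jar as a Livy batch job. The same request can be built from Scala; the following is a sketch that assumes JDK 11 or later for `java.net.http`. The object name `LivyBatchRequest` is hypothetical, and the body is assembled by plain interpolation, so it assumes the path and class name contain no characters that need JSON escaping.

```scala
import java.net.URI
import java.net.http.HttpRequest

object LivyBatchRequest {

  // Builds (but does not send) the POST /batches request that the curl commands issue.
  def build(livyUrl: String, jarPath: String, className: String): HttpRequest = {
    val body = s"""{"file": "$jarPath", "className": "$className"}"""
    HttpRequest.newBuilder(URI.create(s"$livyUrl/batches"))
      .header("Content-Type", "application/json")
      .header("X-Requested-By", "admin") // same header the curl commands send
      .POST(HttpRequest.BodyPublishers.ofString(body))
      .build()
  }

  def main(args: Array[String]): Unit = {
    val request = build(
      "http://server:8999",
      "hdfs://server:8020/apps/example_2.11-1.0.jar",
      "com.dataflowdeveloper.example.Links")
    println(s"${request.method()} ${request.uri()}")
  }
}
```

To actually submit the job, pass the request to `java.net.http.HttpClient.newHttpClient().send(request, java.net.http.HttpResponse.BodyHandlers.ofString())` and read the response body.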

FYI

18/03/14 11:54:54 INFO LineBufferedStream: stdout: 18/03/14 11:54:54 INFO Client: Source and destination file systems are the same. Not copying hdfs://server:8020/opt/demo/example.jar