Distributed training of neural networks on GPUs

Cloudera Community: Community Articles


Created on 04-09-2017 11:36 AM - edited 08-17-2019 01:20 PM

This article shows how simple it is to set up an HDP cluster that can train neural networks on GPUs in a distributed fashion. An explanation of how distributed training works is beyond the scope of this article, but if you are interested you can read about it here.

Training neural networks is computationally intensive. It involves many matrix operations, such as multiplication, addition and element-wise operations. These calculations are highly parallelizable and can be sped up significantly by using GPUs instead of general-purpose CPUs.
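To illustrate why these operations parallelize so well, here is a minimal sketch in plain Python, not GPU code. Each output of a dense layer y = Wx + b is an independent dot product, so the rows can be computed in any order or all at once. The thread pool is only a stand-in to show that independence. Because of Python's GIL it will not actually be faster, whereas a GPU runs such rows across thousands of CUDA cores.

```python
"""Sketch: each output neuron of a dense layer is independent work."""
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dense_row(row: Vector, x: Vector, bias: float) -> float:
    """One output neuron: dot(row, x) + bias; it reads no other row's result."""
    return sum(w * xi for w, xi in zip(row, x)) + bias


def dense_sequential(W: Matrix, x: Vector, b: Vector) -> Vector:
    """Compute the rows one after another."""
    return [dense_row(row, x, bi) for row, bi in zip(W, b)]


def dense_parallel(W: Matrix, x: Vector, b: Vector) -> Vector:
    """Compute the rows concurrently; the result is identical."""
    with ThreadPoolExecutor() as pool:
        # Executor.map keeps input order, so outputs line up with the rows of W
        return list(pool.map(dense_row, W, [x] * len(W), b))


if __name__ == "__main__":
    W = [[1.0, 2.0, 3.0], [0.5, -1.0, 0.0]]
    x = [1.0, 1.0, 2.0]
    b = [0.0, 1.0]
    print(dense_sequential(W, x, b))  # [9.0, 0.5]
    print(dense_parallel(W, x, b))    # [9.0, 0.5]
```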

Neural networks are often trained on very large datasets. When the training data is too large to fit on one machine, it is partitioned across several machines. If the data is already spread across machines, it is often preferable not to transfer it over the network during training. Instead, the processing is moved next to the data, and the neural network is trained in a distributed fashion. This distributed machine learning tutorial uses the following key components:
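The idea of moving the processing to the data can be shown with a toy example. This is a minimal sketch with hypothetical helper names, and it computes a mean rather than training a network. Each "machine" keeps its shard and sends back only a tiny summary (sum, count) instead of its raw records. Distributed training follows the same pattern: workers send small results, not training data.

```python
"""Sketch: ship a small summary from each shard instead of the shard itself."""
from typing import Iterable, List, Tuple


def partition(records: List[float], n_workers: int) -> List[List[float]]:
    """Round-robin split, standing in for data already spread across machines."""
    return [records[i::n_workers] for i in range(n_workers)]


def local_summary(shard: Iterable[float]) -> Tuple[float, int]:
    """Runs next to the data; only these two numbers leave the machine."""
    total, count = 0.0, 0
    for value in shard:
        total += value
        count += 1
    return total, count


def global_mean(summaries: List[Tuple[float, int]]) -> float:
    """Combine the per-shard summaries on the driver."""
    total = sum(s for s, _ in summaries)
    count = sum(c for _, c in summaries)
    return total / count


if __name__ == "__main__":
    data = [float(v) for v in range(1, 101)]        # 100 records
    shards = partition(data, n_workers=3)
    summaries = [local_summary(s) for s in shards]  # only 3 x (sum, count) cross the network
    print(global_mean(summaries))                   # 50.5
```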

- Deeplearning4j: open-source distributed ML library

- Apache Spark: open-source cluster-computing framework

- HDCloud: tool for provisioning and managing HDP clusters on AWS

Preparations

HDCloud 1.14.1 has recently been released and is required to launch a cluster for this tutorial. If you are not familiar with HDCloud, the HDCloud documentation guides you through setting up your HDCloud controller.

Once your HDCloud controller is up, you can launch a cluster with the CLI tool:

wget https://raw.githubusercontent.com/akanto/ml/hcc-cuda-blog/scripts/test-dl4j.json
hdc create-cluster --cli-input-json test-dl4j.json

If you are not familiar with the CLI, you can find a detailed description here and here. The first command above downloads the test-dl4j.json file. This is a template that describes your cluster, and it looks like this:

{
  "ClusterName": "test-dl4j",
  "HDPVersion": "2.6",
  "ClusterType": "Data Science: Apache Spark 1.6, Apache Zeppelin 0.7.0",
  "Master": {
    "InstanceType": "g2.2xlarge",
    "VolumeType": "ephemeral",
    "VolumeSize": 60,
    "VolumeCount": 1,
    "RecoveryMode": "MANUAL",
    "Recipes": [
      {
        "URI": "https://raw.githubusercontent.com/akanto/ml/hcc-cuda-blog/scripts/install-nvidia-driver.sh",
        "Phase": "post"
      },
      {
        "URI": "https://raw.githubusercontent.com/akanto/ml/hcc-cuda-blog/scripts/ka-mnist.sh",
        "Phase": "post"
      }
    ]
  },
  "Worker": {
    "InstanceType": "g2.2xlarge",
    "VolumeType": "ephemeral",
    "VolumeSize": 60,
    "VolumeCount": 1,
    "InstanceCount": 3,
    "RecoveryMode": "AUTO",
    "Recipes": [
      {
        "URI": "https://raw.githubusercontent.com/akanto/ml/hcc-cuda-blog/scripts/install-nvidia-driver.sh",
        "Phase": "post"
      }
    ]
  },
  "Compute": {
    "InstanceType": "g2.2xlarge",
    "VolumeType": "ephemeral",
    "VolumeSize": 60,
    "VolumeCount": 1,
    "InstanceCount": 0,
    "RecoveryMode": "AUTO",
    "Recipes": [
      {
        "URI": "https://raw.githubusercontent.com/akanto/ml/hcc-cuda-blog/scripts/install-nvidia-driver.sh",
        "Phase": "post"
      }
    ]
  },
  "SSHKeyName": "REPLACE-WITH-YOUR-KEY",
  "RemoteAccess": "0.0.0.0/0",
  "WebAccess": true,
  "HiveJDBCAccess": true,
  "ClusterComponentAccess": true,
  "ClusterAndAmbariUser": "admin",
  "ClusterAndAmbariPassword": "admin",
  "InstanceRole": "CREATE"
}

A few things in this cluster template file are worth noticing:

- g2.2xlarge instances are used because these machines come with a high-performance Nvidia GPU with 1536 CUDA cores and 4 GB of video memory

- multiple custom scripts run as part of the cluster setup: install-nvidia-driver.sh installs the required Nvidia drivers on each node, and ka-mnist.sh (a recipe of the master node only) checks out the Git repository that holds the example source code for training and evaluating the neural network

- SSHKeyName is only a placeholder; replace it with the name of your own SSH key before running hdc create-cluster
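If you prefer to edit the template programmatically rather than by hand, the following is a minimal sketch. It assumes a template shaped like test-dl4j.json, with only the fields used here reproduced inline. The key name "my-aws-key" is hypothetical. The sketch also counts the g2.2xlarge nodes per host group. Master has no InstanceCount field, so it is counted as one node.

```python
"""Sketch: fill in the SSHKeyName placeholder and count nodes per host group."""
import json
from typing import Dict

# Trimmed copy of test-dl4j.json: only the fields this sketch reads
TEMPLATE = """
{
  "ClusterName": "test-dl4j",
  "Master":  {"InstanceType": "g2.2xlarge"},
  "Worker":  {"InstanceType": "g2.2xlarge", "InstanceCount": 3},
  "Compute": {"InstanceType": "g2.2xlarge", "InstanceCount": 0},
  "SSHKeyName": "REPLACE-WITH-YOUR-KEY"
}
"""

PLACEHOLDER = "REPLACE-WITH-YOUR-KEY"


def fill_ssh_key(template: Dict, key_name: str) -> Dict:
    """Return a copy of the template with the SSH key name set."""
    if template.get("SSHKeyName") != PLACEHOLDER:
        raise ValueError("SSHKeyName has already been set")
    filled = dict(template)  # shallow copy; the input dict is left unchanged
    filled["SSHKeyName"] = key_name
    return filled


def instances_per_group(template: Dict) -> Dict[str, int]:
    """Node count per host group; a group without InstanceCount counts as 1."""
    counts = {}
    for group in ("Master", "Worker", "Compute"):
        if group in template:
            counts[group] = template[group].get("InstanceCount", 1)
    return counts


if __name__ == "__main__":
    filled = fill_ssh_key(json.loads(TEMPLATE), "my-aws-key")  # hypothetical key name
    counts = instances_per_group(filled)
    print(counts)                # {'Master': 1, 'Worker': 3, 'Compute': 0}
    print(sum(counts.values()))  # 4 g2.2xlarge nodes
```

To use it on the real file, load test-dl4j.json instead of the inline string and write the result back with json.dump before calling hdc create-cluster.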

After the cluster has been created successfully, you need to restart its nodes to load the Nvidia kernel modules and libraries. You can restart all nodes at the same time by running the following command on the master node:

sudo salt -G 'hostgroup:worker' cmd.run 'reboot' && sudo reboot

If you are wondering how the above Salt command works, take a look at this article.

After the restart has finished, SSH to the master node again and verify that the Nvidia drivers and libraries are loaded properly:

sudo salt '*' cmd.run 'nvidia-smi -q | head'
sudo salt '*' cmd.run 'ldconfig -p | grep cublas'

The output of the above commands should look like this for each node:

ip-10-0-91-181.eu-west-1.compute.internal:
    ==============NVSMI LOG==============
    Timestamp : Sun Apr 9 10:58:52 2017
    Driver Version : 352.99
    Attached GPUs : 1
    GPU 0000:00:03.0
        Product Name : GRID K520
        Product Brand : Grid
ip-10-0-91-181.eu-west-1.compute.internal:
    libcublas.so.7.5 (libc6,x86-64) => /opt/nvidia/cuda/lib64/libcublas.so.7.5
    libcublas.so (libc6,x86-64) => /opt/nvidia/cuda/lib64/libcublas.so
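On a larger cluster it is easy to miss a node in this output. The following is a minimal sketch that flags hosts where either check failed. It assumes Salt's default text output, in which a "hostname:" line is followed by that minion's output lines indented with spaces. The parser is illustrative and not part of Salt. You could, for example, paste the combined output of both commands into it.

```python
"""Sketch: flag nodes whose Salt output lacks a driver version or libcublas."""
from typing import Dict, List

SAMPLE = """\
ip-10-0-91-181.eu-west-1.compute.internal:
    ==============NVSMI LOG==============
    Timestamp : Sun Apr 9 10:58:52 2017
    Driver Version : 352.99
    Attached GPUs : 1
ip-10-0-91-181.eu-west-1.compute.internal:
    libcublas.so.7.5 (libc6,x86-64) => /opt/nvidia/cuda/lib64/libcublas.so.7.5
    libcublas.so (libc6,x86-64) => /opt/nvidia/cuda/lib64/libcublas.so
"""


def group_by_host(output: str) -> Dict[str, List[str]]:
    """Collect the indented lines under each unindented 'hostname:' line."""
    hosts: Dict[str, List[str]] = {}
    current = None
    for line in output.splitlines():
        if line and not line[0].isspace() and line.endswith(":"):
            current = line[:-1]
            hosts.setdefault(current, [])  # same host may appear once per command
        elif current is not None and line.strip():
            hosts[current].append(line.strip())
    return hosts


def hosts_missing_gpu_stack(output: str) -> List[str]:
    """Hosts that report no 'Driver Version' line or no libcublas entry."""
    missing = []
    for host, lines in group_by_host(output).items():
        has_driver = any(line.startswith("Driver Version") for line in lines)
        has_cublas = any("libcublas" in line for line in lines)
        if not (has_driver and has_cublas):
            missing.append(host)
    return missing


if __name__ == "__main__":
    print(hosts_missing_gpu_stack(SAMPLE))  # [] means every node looks fine
```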

Before continuing, also make sure that all HDP services are running again after the restart. You can track the status of the HDP services in the Ambari UI.
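The same check can also be scripted against Ambari's REST API. The following is a minimal sketch with several assumptions:

- The base URL AMBARI_URL is hypothetical. On HDCloud, Ambari may sit behind a gateway with a different host and path.
- The Ambari cluster name is assumed to be "test-dl4j".
- The admin/admin credentials come from the template above.
- Each returned item is assumed to carry ServiceInfo/service_name alongside the requested state.

Client-only services have no daemons and may legitimately report INSTALLED rather than STARTED.

```python
"""Sketch: list services that Ambari does not report as STARTED."""
import base64
import json
import urllib.request
from typing import Dict, List

AMBARI_URL = "http://localhost:8080"  # hypothetical; adjust for your cluster
CLUSTER = "test-dl4j"                 # assumed Ambari cluster name


def fetch_services(user: str, password: str) -> Dict:
    """GET the service list with each service's state (Basic auth)."""
    url = f"{AMBARI_URL}/api/v1/clusters/{CLUSTER}/services?fields=ServiceInfo/state"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def not_started(response: Dict) -> List[str]:
    """Names of services whose state is anything other than STARTED."""
    return [item["ServiceInfo"]["service_name"]
            for item in response.get("items", [])
            if item["ServiceInfo"].get("state") != "STARTED"]


if __name__ == "__main__":
    # Offline example; on the cluster use not_started(fetch_services("admin", "admin"))
    sample = {"items": [
        {"ServiceInfo": {"service_name": "HDFS", "state": "STARTED"}},
        {"ServiceInfo": {"service_name": "SPARK", "state": "INSTALLED"}},
    ]}
    print(not_started(sample))  # ['SPARK']
```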

Execution

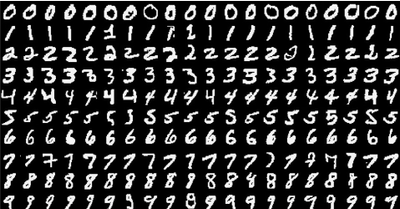

The neural network in this tutorial is trained on MNIST dataset, which is a simple computer vision dataset and consists of images of handwritten digits. An image of a handwritten digit is 28 * 28 pixels large and looks like these:
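To make the shapes concrete, the following is a minimal sketch of how a 28 x 28 image can become the 784 inputs of the network, and how a digit label can map onto a 10-neuron output layer. It uses plain Python with hypothetical helper names. Deeplearning4j's own MNIST data loading is not reproduced here, and pixel scaling is left out.

```python
"""Sketch: MNIST image -> 784 inputs, digit label -> 10 output targets."""
from typing import List

IMAGE_SIDE = 28
NUM_CLASSES = 10


def flatten(image: List[List[float]]) -> List[float]:
    """Row-major flattening of a 28 x 28 image into 784 input values."""
    if len(image) != IMAGE_SIDE or any(len(row) != IMAGE_SIDE for row in image):
        raise ValueError("expected a 28 x 28 image")
    return [pixel for row in image for pixel in row]


def one_hot(digit: int) -> List[float]:
    """Target vector for the output layer: 1.0 at the digit's index, else 0.0."""
    if not 0 <= digit < NUM_CLASSES:
        raise ValueError(f"not a digit: {digit}")
    return [1.0 if i == digit else 0.0 for i in range(NUM_CLASSES)]


if __name__ == "__main__":
    image = [[0.0] * IMAGE_SIDE for _ in range(IMAGE_SIDE)]
    image[1][2] = 1.0                 # a single "ink" pixel at row 1, column 2
    inputs = flatten(image)
    print(len(inputs))                # 784
    print(inputs.index(1.0))          # 30, i.e. 1 * 28 + 2
    print(one_hot(3))
```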

MnistSpark is the main class for both training and evaluating the neural network. It creates a network with two hidden layers. The network has 784 (28 x 28) inputs, and its output layer has 10 neurons, one for each class (digit). The implementation is based on the synchronous parameter averaging method provided by the TrainingMaster class. From a bird's-eye view, it repeats the following steps in a loop:

- distributes the global model parameters to each worker

- trains on all workers in parallel, each worker using only its own subset of the data

- fetches the updated parameters from each worker and sets the global parameters to their average
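The loop above can be sketched in plain Python on a toy model. This is not Deeplearning4j's implementation. It only shows the same broadcast, local-training and averaging cycle under simplifying assumptions:

- The model is a hypothetical linear model y = w * x + b.
- Each worker runs plain SGD on a noise-free shard.
- The workers run one after another rather than as parallel Spark tasks.

```python
"""Toy sketch of synchronous parameter averaging (illustrative, not DL4J code)."""
from typing import List, Tuple

Params = Tuple[float, float]  # (w, b) of the hypothetical model y = w * x + b
Sample = Tuple[float, float]  # (x, y)


def local_sgd(params: Params, shard: List[Sample], lr: float, epochs: int) -> Params:
    """Worker step: train a private copy of the global parameters on one shard."""
    w, b = params
    for _ in range(epochs):
        for x, y in shard:
            err = (w * x + b) - y  # prediction error for this sample
            w -= lr * err * x      # gradient of 0.5 * err^2 w.r.t. w is err * x
            b -= lr * err          # gradient of 0.5 * err^2 w.r.t. b is err
    return w, b


def average(all_params: List[Params]) -> Params:
    """Driver step: element-wise mean of the workers' parameters."""
    n = len(all_params)
    return (sum(p[0] for p in all_params) / n,
            sum(p[1] for p in all_params) / n)


def train(shards: List[List[Sample]], rounds: int = 50,
          lr: float = 0.05, local_epochs: int = 5) -> Params:
    global_params: Params = (0.0, 0.0)
    for _ in range(rounds):
        # 1. every worker starts from the same global parameters
        # 2. each worker trains only on its own shard
        #    (sequential here; on a cluster these would run in parallel)
        worker_params = [local_sgd(global_params, s, lr, local_epochs)
                         for s in shards]
        # 3. the new global parameters are the average of the workers' results
        global_params = average(worker_params)
    return global_params


if __name__ == "__main__":
    data = [(i / 10, 2 * (i / 10) + 1) for i in range(40)]  # noise-free y = 2x + 1
    shards = [data[k::4] for k in range(4)]                  # 4 hypothetical workers
    w, b = train(shards)
    print(f"w = {w:.3f}, b = {b:.3f}")
```

Because only parameters travel between the driver and the workers, the per-round network traffic depends on the model size, not on the size of each worker's data.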

To see it in action, submit the Spark job as the spark user:

sudo su spark
cd /home/spark/ml && ./submit.sh

submit.sh runs the training and then the evaluation. Finally, it prints the evaluation results of the network on the test dataset:

==========================Scores========================================
 Accuracy:  0.9499
 Precision: 0.9505
 Recall:    0.9489
 F1 Score:  0.9497
========================================================================
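The F1 score is the harmonic mean of precision and recall, and the reported F1 is consistent with the reported precision and recall. The following is a minimal sketch of that arithmetic. For this 10-class problem, the precision and recall printed above are presumably averages over the classes. Exactly how Deeplearning4j aggregates them is not reproduced here.

```python
"""Sketch: F1 as the harmonic mean of precision and recall."""


def f1_score(precision: float, recall: float) -> float:
    """2PR / (P + R); defined as 0.0 when both inputs are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(round(f1_score(0.9505, 0.9489), 4))  # 0.9497, matching the output above
```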

While the Spark job is running, you can check the GPU utilisation on the worker nodes:

sudo nvidia-smi -l 1

+------------------------------------------------------+
| NVIDIA-SMI 352.99     Driver Version: 352.99         |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GRID K520           On   | 0000:00:03.0     Off |                  N/A |
| N/A   45C    P0    50W / 125W |    646MiB /  4095MiB |     31%      Default |
+-------------------------------+----------------------+----------------------+
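To log utilisation rather than watch the table, nvidia-smi can print selected fields as CSV. The following is a minimal sketch for Python 3.7+. It uses nvidia-smi's --query-gpu and --format options. Check `nvidia-smi --help-query-gpu` on your driver version, and note that a field reported as "[Not Supported]" would make the integer parsing fail. The sample values are taken from the table above.

```python
"""Sketch: poll GPU utilisation and memory as CSV instead of parsing the table."""
import shutil
import subprocess
import time
from typing import List, NamedTuple


class GpuSample(NamedTuple):
    utilization_pct: int
    memory_used_mib: int
    memory_total_mib: int


QUERY = ["nvidia-smi",
         "--query-gpu=utilization.gpu,memory.used,memory.total",
         "--format=csv,noheader,nounits"]


def parse(csv_text: str) -> List[GpuSample]:
    """One line per GPU, e.g. '31, 646, 4095'."""
    samples = []
    for line in csv_text.strip().splitlines():
        util, used, total = (int(field.strip()) for field in line.split(","))
        samples.append(GpuSample(util, used, total))
    return samples


def poll(interval_s: float = 1.0, count: int = 5) -> None:
    """Print one reading per GPU every interval_s seconds, count times."""
    for _ in range(count):
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        for i, s in enumerate(parse(out)):
            print(f"GPU {i}: {s.utilization_pct}% util, "
                  f"{s.memory_used_mib}/{s.memory_total_mib} MiB")
        time.sleep(interval_s)


if __name__ == "__main__":
    if shutil.which("nvidia-smi"):
        poll()
    else:
        print(parse("31, 646, 4095"))  # sample values from the table above
```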

If you are interested, you can find more information about Deeplearning4j running on Spark here.