Community Articles

- Cloudera Community

- Powering Apache MiNiFi Flows with a Movidius Neura...


Created on 12-28-2017 06:51 PM - edited on 03-12-2020 04:38 AM by VidyaSargur

Adding a Movidius NCS to a Raspberry Pi is a great way to augment your deep learning edge programming.

Hardware Setup

- Raspberry Pi 3

- Movidius NCS

- PS3 Eye Camera

- 128GB USB Stick

Software Setup

- Standard NCS Setup

- Python 3

- sudo apt-get update

- sudo apt-get install python-pip python-dev

- sudo apt-get install python3-pip python3-dev

- sudo apt-get install fswebcam

- sudo apt-get install curl wget unzip

- sudo apt-get install oracle-java8-jdk

- sudo apt-get install sense-hat

- sudo apt-get install octave -y

- sudo apt install python3-gst-1.0

- pip3 install psutil

- Apache MiNiFi 0.3.0: https://www.apache.org/dyn/closer.lua?path=/nifi/minifi/0.3.0/minifi-0.3.0-bin.zip

- git clone https://github.com/movidius/ncsdk.git

- git clone https://github.com/movidius/ncappzoo.git
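Before moving on, it can help to confirm that the command-line tools from the list above actually ended up on the PATH. The following is a minimal sketch, not part of the original setup: the helper name `check_tools` and the exact list of executable names are assumptions chosen for illustration.

```python
import shutil

# Executable names assumed to be provided by the packages installed above.
REQUIRED_TOOLS = ["python3", "pip3", "fswebcam", "curl", "wget", "unzip", "java", "git"]


def check_tools(tools):
    """Return a dict mapping each tool name to True if it is found on the PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    for tool, found in check_tools(REQUIRED_TOOLS).items():
        print("{:10s} {}".format(tool, "ok" if found else "MISSING"))
```

Anything reported as MISSING points at an install step that may need to be repeated.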

Run Example

~/workspace/ncappzoo/apps/image-classifier

Shell Script

/root/workspace/ncappzoo/apps/image-classifier/run.sh

#!/bin/bash
DATE=$(date +"%Y-%m-%d_%H%M")
fswebcam -r 1280x720 --no-banner /opt/demo/images/$DATE.jpg
python3 -W ignore image-classifier2.py /opt/demo/images/$DATE.jpg
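To make the naming scheme of the shell script explicit, here is a rough Python sketch that builds the same two commands as argument lists without running them. The function name `capture_commands` is hypothetical; the paths, flags and timestamp format are taken from the script above.

```python
from datetime import datetime

IMAGE_DIR = "/opt/demo/images"  # same directory the shell script writes to


def capture_commands(now=None):
    """Build the two commands from run.sh as argument lists (nothing is executed)."""
    now = now or datetime.now()
    # Same format string as date +"%Y-%m-%d_%H%M" in the shell script
    image_path = "{}/{}.jpg".format(IMAGE_DIR, now.strftime("%Y-%m-%d_%H%M"))
    capture = ["fswebcam", "-r", "1280x720", "--no-banner", image_path]
    classify = ["python3", "-W", "ignore", "image-classifier2.py", image_path]
    return capture, classify


capture, classify = capture_commands(datetime(2017, 12, 28, 14, 52))
print(capture[-1])  # /opt/demo/images/2017-12-28_1452.jpg
```

Because the timestamp has minute resolution, two captures within the same minute would write to the same file name.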

Forked Python Code

I added some extra variables that I like to send from IoT devices (time, IP address, CPU temperature) and formatted the output as JSON so it is easy to use in flows.

#!/usr/bin/python3

# ****************************************************************************

# Copyright(c) 2017 Intel Corporation.

# License: MIT See LICENSE file in root directory.

# ****************************************************************************

# How to classify images using DNNs on Intel Neural Compute Stick (NCS)

import mvnc.mvncapi as mvnc

import skimage

from skimage import io, transform

import numpy

import os

import sys

import json

import time

import socket

import subprocess

import datetime

from time import sleep

from time import gmtime, strftime

starttime= strftime("%Y-%m-%d %H:%M:%S",gmtime())

# User modifiable input parameters

NCAPPZOO_PATH = os.path.expanduser( '~/workspace/ncappzoo' )

GRAPH_PATH = NCAPPZOO_PATH + '/caffe/GoogLeNet/graph'

IMAGE_PATH = sys.argv[1]

LABELS_FILE_PATH = NCAPPZOO_PATH + '/data/ilsvrc12/synset_words.txt'

IMAGE_MEAN = [ 104.00698793, 116.66876762, 122.67891434]

IMAGE_STDDEV = 1

IMAGE_DIM = ( 224, 224 )

# ---- Step 1: Open the enumerated device and get a handle to it -------------

# Look for enumerated NCS device(s); quit program if none found.

devices = mvnc.EnumerateDevices()

if len( devices ) == 0:
    print( 'No devices found' )
    quit()

# Get a handle to the first enumerated device and open it

device = mvnc.Device( devices[0] )

device.OpenDevice()

# ---- Step 2: Load a graph file onto the NCS device -------------------------

# Read the graph file into a buffer

with open( GRAPH_PATH, mode='rb' ) as f:
    blob = f.read()

# Load the graph buffer into the NCS

graph = device.AllocateGraph( blob )

# ---- Step 3: Offload image onto the NCS to run inference -------------------

# Read & resize image [Image size is defined during training]

img = print_img = skimage.io.imread( IMAGE_PATH )

img = skimage.transform.resize( img, IMAGE_DIM, preserve_range=True )

# Convert RGB to BGR [skimage reads image in RGB, but Caffe uses BGR]

img = img[:, :, ::-1]

# Mean subtraction & scaling [A common technique used to center the data]

img = img.astype( numpy.float32 )

img = ( img - IMAGE_MEAN ) * IMAGE_STDDEV

# Load the image as a half-precision floating point array

graph.LoadTensor( img.astype( numpy.float16 ), 'user object' )

# ---- Step 4: Read & print inference results from the NCS -------------------

# Get the results from NCS

output, userobj = graph.GetResult()

labels = numpy.loadtxt( LABELS_FILE_PATH, str, delimiter = '\t' )

order = output.argsort()[::-1][:6]

#### Initialization

external_IP_and_port = ('198.41.0.4', 53) # a.root-servers.net

socket_family = socket.AF_INET

host = os.uname()[1]

def getCPUtemperature():
    res = os.popen('vcgencmd measure_temp').readline()
    return(res.replace("temp=","").replace("'C\n",""))

def IP_address():
    try:
        s = socket.socket(socket_family, socket.SOCK_DGRAM)
        s.connect(external_IP_and_port)
        answer = s.getsockname()
        s.close()
        return answer[0] if answer else None
    except socket.error:
        return None

cpuTemp=int(float(getCPUtemperature()))

ipaddress = IP_address()

# yyyy-mm-dd hh:mm:ss

currenttime= strftime("%Y-%m-%d %H:%M:%S",gmtime())

row = { 'label1': labels[order[0]], 'label2': labels[order[1]], 'label3': labels[order[2]], 'label4': labels[order[3]], 'label5': labels[order[4]], 'currenttime': currenttime, 'host': host, 'cputemp': round(cpuTemp,2), 'ipaddress': ipaddress, 'starttime': starttime }

json_string = json.dumps(row)

print(json_string)

# ---- Step 5: Unload the graph and close the device -------------------------

graph.DeallocateGraph()

device.CloseDevice()

# ==== End of file ===========================================================
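The CPU temperature handling in the script can be tried without a Raspberry Pi. The sketch below assumes that `vcgencmd measure_temp` prints a line shaped like `temp=49.4'C`, which is what the two `replace` calls in the script imply; the function name `parse_vcgencmd_temp` is hypothetical.

```python
def parse_vcgencmd_temp(raw):
    """Turn a line such as temp=49.4'C into a float in degrees Celsius."""
    value = raw.strip().replace("temp=", "").replace("'C", "")
    return float(value)


reading = parse_vcgencmd_temp("temp=49.4'C\n")
print(reading)       # 49.4
print(int(reading))  # 49, the script keeps only the integer part
```

Since the script wraps the value in `int(float(...))`, the `cputemp` field it emits is always a whole number.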

This same device also has a Sense-Hat, so we grab its sensor readings as well.

Then I combined the two sets of code to produce a row of Sense-Hat sensor data and NCS AI results.
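Combining the two sets of values can be as simple as merging two dictionaries before serializing. This is only a sketch of the idea, not the code from the repository linked in this article; `merge_rows` and the sample values are hypothetical.

```python
import json


def merge_rows(sensor_row, ncs_row):
    """Combine sensor readings and classifier output into one flat record."""
    merged = dict(sensor_row)
    merged.update(ncs_row)  # NCS keys win if a name appears in both rows
    return merged


# Hypothetical values for illustration only
sensor_row = {"humidity": 17.2, "pressure": 1032.7, "temp": 34.9}
ncs_row = {"label1": "n03794056 mousetrap", "cputemp": 50, "host": "sensehatmovidius"}

print(json.dumps(merge_rows(sensor_row, ncs_row), sort_keys=True))
```

Keeping the record flat, with one key per reading, makes later schema inference straightforward.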

Source Code

https://github.com/tspannhw/rpi-minifi-movidius-sensehat/tree/master

Example Output JSON

{"label1": "b'n03794056 mousetrap'", "humidity": 17.2, "roll": 94.0, "yaw": 225.0, "label4": "b'n03127925 crate'", "cputemp2": 49.39, "label3": "b'n02091134 whippet'", "memory": 16.2, "z": -0.0, "diskfree": "15866.3 MB", "cputemp": 50, "pitch": 3.0, "temp": 34.9, "y": 1.0, "currenttime": "2017-12-28 19:09:32", "starttime": "2017-12-28 19:09:29", "tempf": 74.82, "label5": "b'n04265275 space heater'", "host": "sensehatmovidius", "ipaddress": "192.168.1.156", "pressure": 1032.7, "label2": "b'n02091032 Italian greyhound'", "x": -0.0}
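The label values in this output carry a `b'...'` wrapper, which looks like the text form of a Python bytes value that was converted to a string before serialization. If you want clean values downstream, a small helper can split each label into its synset ID and name. This is a sketch under that assumption; `clean_label` is a hypothetical name.

```python
import re

# Matches strings such as b'n03794056 mousetrap'
LABEL_PATTERN = re.compile(r"^b'(?P<synset>n\d{8}) (?P<name>.+)'$")


def clean_label(raw):
    """Split a wrapped label into (synset_id, name); leave other formats untouched."""
    match = LABEL_PATTERN.match(raw)
    if match is None:
        return None, raw
    return match.group("synset"), match.group("name")


print(clean_label("b'n03794056 mousetrap'"))  # ('n03794056', 'mousetrap')
```

The same cleanup could instead be done in the NiFi flow; doing it on the device keeps the stored records smaller.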

At the time of writing, the current version of Apache MiNiFi is 0.3.0.

Make sure you download version 0.3.0 of the MiNiFi Toolkit to convert the template you created in the Apache NiFi UI into a proper config.yml. This version is compatible with Apache NiFi 1.4.

MiNiFi (Java) Version 0.3.0 Release Date: 2017 December 22 (https://cwiki.apache.org/confluence/display/MINIFI/Release+Notes#ReleaseNotes-Version0.3.0)

To Build Your MiNiFi YAML

Run the helper script with your exported template:

buildconfig.sh YourConfigFileName.xml

The script contains:

minifi-toolkit-0.3.0/bin/config.sh transform $1 config.yml
scp config.yml pi@192.168.1.156:/opt/demo/minifi-0.3.0/conf/

MiNiFi Config Version: 3

Flow Controller:

name: Movidius MiniFi

comment: ''

Core Properties:

flow controller graceful shutdown period: 10 sec

flow service write delay interval: 500 ms

administrative yield duration: 30 sec

bored yield duration: 10 millis

max concurrent threads: 1

variable registry properties: ''

FlowFile Repository:

partitions: 256

checkpoint interval: 2 mins

always sync: false

Swap:

threshold: 20000

in period: 5 sec

in threads: 1

out period: 5 sec

out threads: 4

Content Repository:

content claim max appendable size: 10 MB

content claim max flow files: 100

always sync: false

Provenance Repository:

provenance rollover time: 1 min

implementation: org.apache.nifi.provenance.MiNiFiPersistentProvenanceRepository

Component Status Repository:

buffer size: 1440

snapshot frequency: 1 min

Security Properties:

keystore: ''

keystore type: ''

keystore password: ''

key password: ''

truststore: ''

truststore type: ''

truststore password: ''

ssl protocol: ''

Sensitive Props:

key:

algorithm: PBEWITHMD5AND256BITAES-CBC-OPENSSL

provider: BC

Processors: []

Controller Services: []

Process Groups:

- id: 9fdb6f99-2e9f-3d86-0000-000000000000

name: Movidius MiniFi

Processors:

- id: ceab4537-43cb-31c8-0000-000000000000

name: Capture Photo and NCS Image Classifier

class: org.apache.nifi.processors.standard.ExecuteProcess

max concurrent tasks: 1

scheduling strategy: TIMER_DRIVEN

scheduling period: 60 sec

penalization period: 30 sec

yield period: 1 sec

run duration nanos: 0

auto-terminated relationships list: []

Properties:

Argument Delimiter: ' '

Batch Duration:

Command: /root/workspace/ncappzoo/apps/image-classifier/run.sh

Command Arguments:

Redirect Error Stream: 'false'

Working Directory:

- id: 9fbeac0f-1c3f-3535-0000-000000000000

name: GetFile

class: org.apache.nifi.processors.standard.GetFile

max concurrent tasks: 1

scheduling strategy: TIMER_DRIVEN

scheduling period: 0 sec

penalization period: 30 sec

yield period: 1 sec

run duration nanos: 0

auto-terminated relationships list: []

Properties:

Batch Size: '10'

File Filter: '[^\.].*'

Ignore Hidden Files: 'false'

Input Directory: /opt/demo/images/

Keep Source File: 'false'

Maximum File Age:

Maximum File Size:

Minimum File Age: 5 sec

Minimum File Size: 10 B

Path Filter:

Polling Interval: 0 sec

Recurse Subdirectories: 'true'

Controller Services: []

Process Groups: []

Input Ports: []

Output Ports: []

Funnels: []

Connections:

- id: 1bd103a9-09dc-3121-0000-000000000000

name: Capture Photo and NCS Image Classifier/success/0d6447ee-be36-3ec4-b896-1d57e374580f

source id: ceab4537-43cb-31c8-0000-000000000000

source relationship names:

- success

destination id: 0d6447ee-be36-3ec4-b896-1d57e374580f

max work queue size: 10000

max work queue data size: 1 GB

flowfile expiration: 0 sec

queue prioritizer class: ''

- id: 3cdac0a3-aabf-3a15-0000-000000000000

name: GetFile/success/0d6447ee-be36-3ec4-b896-1d57e374580f

source id: 9fbeac0f-1c3f-3535-0000-000000000000

source relationship names:

- success

destination id: 0d6447ee-be36-3ec4-b896-1d57e374580f

max work queue size: 10000

max work queue data size: 1 GB

flowfile expiration: 0 sec

queue prioritizer class: ''

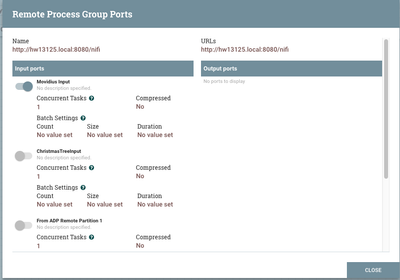

Remote Process Groups:

- id: c8b85dc1-0dfb-3f01-0000-000000000000

name: ''

url: http://hostname.local:8080/nifi

comment: ''

timeout: 60 sec

yield period: 10 sec

transport protocol: HTTP

proxy host: ''

proxy port: ''

proxy user: ''

proxy password: ''

local network interface: ''

Input Ports:

- id: befe3bd8-b4ac-326c-becf-fc448e4a8ff6

name: MiniFi From TX1 Jetson

comment: ''

max concurrent tasks: 1

use compression: false

- id: 0d6447ee-be36-3ec4-b896-1d57e374580f

name: Movidius Input

comment: ''

max concurrent tasks: 1

use compression: false

- id: e43fd055-eb0c-37b2-ad38-91aeea9380cd

name: ChristmasTreeInput

comment: ''

max concurrent tasks: 1

use compression: false

- id: 4689a182-d7e1-3371-bef2-24e102ed9350

name: From ADP Remote Partition 1

comment: ''

max concurrent tasks: 1

use compression: false

Output Ports: []

Input Ports: []

Output Ports: []

Funnels: []

Connections: []

Remote Process Groups: []

NiFi Properties Overrides: {}
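In this config, both connections point at a remote input port by ID rather than by name. The sketch below resolves those IDs to port names using a hand-copied subset of the values above. The dictionary layout, the shortened connection names and the function name `resolve_destinations` are assumptions for illustration, not a MiNiFi API.

```python
# Hand-copied subset of the config, as plain Python data (layout is illustrative).
config = {
    "connections": [
        {"name": "Capture Photo and NCS Image Classifier/success",
         "destination id": "0d6447ee-be36-3ec4-b896-1d57e374580f"},
        {"name": "GetFile/success",
         "destination id": "0d6447ee-be36-3ec4-b896-1d57e374580f"},
    ],
    "remote input ports": {
        "befe3bd8-b4ac-326c-becf-fc448e4a8ff6": "MiniFi From TX1 Jetson",
        "0d6447ee-be36-3ec4-b896-1d57e374580f": "Movidius Input",
        "e43fd055-eb0c-37b2-ad38-91aeea9380cd": "ChristmasTreeInput",
        "4689a182-d7e1-3371-bef2-24e102ed9350": "From ADP Remote Partition 1",
    },
}


def resolve_destinations(cfg):
    """Map each connection name to the remote input port it targets (None if unknown)."""
    ports = cfg["remote input ports"]
    return {c["name"]: ports.get(c["destination id"]) for c in cfg["connections"]}


for name, port in resolve_destinations(config).items():
    print("{} -> {}".format(name, port))
```

Both the classifier JSON and the captured images resolve to the `Movidius Input` port, so they arrive at the same entry point on the NiFi server.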

Start Apache MiNiFi on Your RPI Box

root@sensehatmovidius:/opt/demo/minifi-0.3.0/logs# ../bin/minifi.sh start

Log output:

[...]1686],offset=0,name=2017-12-28_1452.jpg,size=531686], StandardFlowFileRecord[uuid=ca84bb5f-b9ff-40f2-b5f3-ece5278df9c2,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514490624975-1, container=default, section=1], offset=1592409, length=531879],offset=0,name=2017-12-28_1453.jpg,size=531879], StandardFlowFileRecord[uuid=6201c682-967b-4922-89fb-d25abbc2fe82,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514490624975-1, container=default, section=1], offset=2124819, length=527188],offset=0,name=2017-12-28_1455.jpg,size=527188]] (2.53 MB) to http://hostname.local:8080/nifi-api in 639 milliseconds at a rate of 3.96 MB/sec

2017-12-28 15:08:35,588 INFO [Timer-Driven Process Thread-8] o.a.nifi.remote.StandardRemoteGroupPort RemoteGroupPort[name=Movidius Input,targets=http://hostname.local:8080/nifi] Successfully sent [StandardFlowFileRecord[uuid=670a2494-5c60-4551-9a59-a1859e794cfe,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514490883824-2, container=default, section=2], offset=530145, length=531289],offset=0,name=2017-12-28_1456.jpg,size=531289], StandardFlowFileRecord[uuid=39cad0fd-ccba-4386-840d-758b39daf48e,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514491306665-2, container=default, section=2], offset=0, length=504600],offset=0,name=2017-12-28_1501.jpg,size=504600], StandardFlowFileRecord[uuid=3c2d4a2b-a10b-4a92-8c8b-ab73e6960670,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514491298789-1, container=default, section=1], offset=0, length=527543],offset=0,name=2017-12-28_1502.jpg,size=527543], StandardFlowFileRecord[uuid=92cbfd2b-e94a-4854-b874-ef575dcf3905,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514490883824-2, container=default, section=2], offset=0, length=529618],offset=0,name=2017-12-28_1454.jpg,size=529618]] (2 MB) to http://hostname.local:8080/nifi-api in 439 milliseconds at a rate of 4.54 MB/sec

2017-12-28 15:08:41,341 INFO [Timer-Driven Process Thread-2] o.a.nifi.remote.StandardRemoteGroupPort RemoteGroupPort[name=Movidius Input,targets=http://hostname.local:8080/nifi] Successfully sent [StandardFlowFileRecord[uuid=3fb7ca68-3e10-40c6-8f37-49532134689d,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514491710195-1, container=default, section=1], offset=0, length=567],offset=0,name=21532511724407,size=567]] (567 bytes) to http://hostname.local:8080/nifi-api in 113 milliseconds at a rate of 4.88 KB/sec
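These log entries are dense, so a short parser can help when checking what was actually sent. The regular expressions below were written against the lines shown here and are only a sketch; they are not an official log format definition, and `summarize` is a hypothetical helper name.

```python
import re

# Total size, target URL, duration and rate at the end of a "Successfully sent" entry
TRANSFER_PATTERN = re.compile(
    r"\((?P<size>[\d.]+ (?:bytes|KB|MB|GB))\) to (?P<url>\S+) "
    r"in (?P<millis>\d+) milliseconds at a rate of (?P<rate>[\d.]+ \S+)"
)
# File name and size in bytes of each flow file in the entry
FILE_PATTERN = re.compile(r"name=(?P<name>[^,\]]+),size=(?P<size>\d+)")


def summarize(line):
    """Extract the flow file names and sizes plus the transfer totals from one entry."""
    transfer = TRANSFER_PATTERN.search(line)
    files = [(m.group("name"), int(m.group("size"))) for m in FILE_PATTERN.finditer(line)]
    return {
        "files": files,
        "total": transfer.group("size") if transfer else None,
        "millis": int(transfer.group("millis")) if transfer else None,
        "rate": transfer.group("rate") if transfer else None,
    }


sample = (
    "Successfully sent [StandardFlowFileRecord[uuid=3fb7ca68-3e10-40c6-8f37-49532134689d,"
    "claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1514491710195-1, "
    "container=default, section=1], offset=0, length=567],offset=0,name=21532511724407,"
    "size=567]] (567 bytes) to http://hostname.local:8080/nifi-api "
    "in 113 milliseconds at a rate of 4.88 KB/sec"
)
print(summarize(sample))
```

In the entries above, the 567-byte flow file is the JSON row from the classifier script, while the larger `.jpg` flow files are the captured images picked up by GetFile.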

Our Apache MiNiFi flow sends that data via HTTP to our Apache NiFi server, which processes it and converts it into a standard Apache ORC file in HDFS for Apache Hive queries.

Using InferAvroSchema we build a schema and upload it to the Hortonworks Schema Registry for processing.

Schema

{
  "type": "record",
  "name": "movidiussense",
  "fields": [
    {"name": "cputemp2", "type": "double", "doc": "Type inferred from '54.77'"},
    {"name": "pitch", "type": "double", "doc": "Type inferred from '4.0'"},
    {"name": "label5", "type": "string", "doc": "Type inferred from '\"b'n04238763 slide rule, slipstick'\"'"},
    {"name": "starttime", "type": "string", "doc": "Type inferred from '\"2017-12-28 20:08:37\"'"},
    {"name": "label2", "type": "string", "doc": "Type inferred from '\"b'n02871525 bookshop, bookstore, bookstall'\"'"},
    {"name": "y", "type": "double", "doc": "Type inferred from '1.0'"},
    {"name": "currenttime", "type": "string", "doc": "Type inferred from '\"2017-12-28 20:08:39\"'"},
    {"name": "x", "type": "double", "doc": "Type inferred from '-0.0'"},
    {"name": "label4", "type": "string", "doc": "Type inferred from '\"b'n03482405 hamper'\"'"},
    {"name": "roll", "type": "double", "doc": "Type inferred from '94.0'"},
    {"name": "temp", "type": "double", "doc": "Type inferred from '35.31'"},
    {"name": "host", "type": "string", "doc": "Type inferred from '\"sensehatmovidius\"'"},
    {"name": "diskfree", "type": "string", "doc": "Type inferred from '\"15817.0 MB\"'"},
    {"name": "memory", "type": "double", "doc": "Type inferred from '39.2'"},
    {"name": "label3", "type": "string", "doc": "Type inferred from '\"b'n02666196 abacus'\"'"},
    {"name": "label1", "type": "string", "doc": "Type inferred from '\"b'n02727426 apiary, bee house'\"'"},
    {"name": "yaw", "type": "double", "doc": "Type inferred from '225.0'"},
    {"name": "cputemp", "type": "int", "doc": "Type inferred from '55'"},
    {"name": "humidity", "type": "double", "doc": "Type inferred from '17.2'"},
    {"name": "tempf", "type": "double", "doc": "Type inferred from '75.56'"},
    {"name": "z", "type": "double", "doc": "Type inferred from '-0.0'"},
    {"name": "pressure", "type": "double", "doc": "Type inferred from '1033.2'"},
    {"name": "ipaddress", "type": "string", "doc": "Type inferred from '\"192.168.1.156\"'"}
  ]
}
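To see roughly how a schema like this follows from a single JSON record, here is a simplified sketch of value-based type inference. It is not NiFi's InferAvroSchema implementation, which handles more cases (nulls, nested records, longs and so on); the function names are hypothetical.

```python
import json


def avro_type(value):
    """Pick a simple Avro type name for a flat JSON value."""
    # bool must be tested before int because bool is a subclass of int in Python
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "int"
    if isinstance(value, float):
        return "double"
    return "string"


def infer_schema(name, record):
    """Build an Avro-style record schema from one flat JSON record."""
    fields = [
        {"name": key, "type": avro_type(value),
         "doc": "Type inferred from '{}'".format(json.dumps(value))}
        for key, value in record.items()
    ]
    return {"type": "record", "name": name, "fields": fields}


record = {"cputemp": 50, "humidity": 17.2, "host": "sensehatmovidius"}
print(json.dumps(infer_schema("movidiussense", record), indent=2))
```

This also shows why `cputemp` ends up as `int` while `cputemp2` is `double`: the type follows from whether the sample value had a fractional part.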

Our data is now in HDFS, and Apache NiFi has generated the following CREATE TABLE DDL for the corresponding Apache Hive table:

CREATE EXTERNAL TABLE IF NOT EXISTS movidiussense (cputemp2 DOUBLE, pitch DOUBLE, label5 STRING, starttime STRING, label2 STRING, y DOUBLE, currenttime STRING, x DOUBLE, label4 STRING, roll DOUBLE, temp DOUBLE, host STRING, diskfree STRING, memory DOUBLE, label3 STRING, label1 STRING, yaw DOUBLE, cputemp INT, humidity DOUBLE, tempf DOUBLE, z DOUBLE, pressure DOUBLE, ipaddress STRING) STORED AS ORC LOCATION '/movidiussense'
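The DDL maps each Avro field to a Hive column of the matching type. The sketch below reproduces that mapping for a few of the fields. It illustrates the relationship between schema and table only; it is not how NiFi produces the statement internally, and the type table and function name `hive_ddl` are assumptions.

```python
# Assumed mapping from simple Avro types to Hive column types
HIVE_TYPES = {"string": "STRING", "int": "INT", "long": "BIGINT",
              "float": "FLOAT", "double": "DOUBLE", "boolean": "BOOLEAN"}


def hive_ddl(schema, location):
    """Render a CREATE EXTERNAL TABLE statement for a flat Avro record schema."""
    columns = ", ".join(
        "{} {}".format(field["name"], HIVE_TYPES[field["type"]])
        for field in schema["fields"]
    )
    return ("CREATE EXTERNAL TABLE IF NOT EXISTS {} ({}) STORED AS ORC LOCATION '{}'"
            .format(schema["name"], columns, location))


schema = {"type": "record", "name": "movidiussense", "fields": [
    {"name": "cputemp2", "type": "double"},
    {"name": "pitch", "type": "double"},
    {"name": "label5", "type": "string"},
]}
print(hive_ddl(schema, "/movidiussense"))
```

Because the table is external, dropping it in Hive leaves the ORC files under the HDFS location in place.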

Resources