How to deploy HDP 2.6 with Cloudbreak 2.5 CLI

Created on 04-20-2018 12:40 AM - edited 08-17-2019 07:43 AM

HDP deploy with CB CLI

This article provides step-by-step instructions for installing the Cloudbreak 2.5 release candidate on OSX and launching an HDP cluster.

Environment

- OSX High Sierra (laptop)

- OpenStack environment, keystone-v3, via VPN (internal facing)

Prerequisites

- Homebrew

- Java 1.8+

If you start from scratch on OSX High Sierra

# install homebrew
/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"
# install cask
brew tap caskroom/cask
# install pre-req
brew cask install java8

Optionally, install docker. While the official docs state that docker and docker-machine are required, you can skip installing them because cbd brings its own copies into the ".deps" folder in the cloudbreak home.

brew cask install docker
brew install docker-machine-driver-xhyve

Concepts and Components

We are going to use the following 3 components

- Cloudbreak Deployer (cbd)

- Cloudbreak Application (14 docker containers with cloudbreak application at the center)

- Cloudbreak Command Line Interface (cb)

Cloudbreak Deployer

Cloudbreak deployer (cbd) is a collection of bash scripts wrapped in a single Go executable. cbd provides an easy mechanism to download, deploy and manage the cloudbreak application. I am going to use release candidate 2.5 in this tutorial. The master is currently at 2.6; if you remove the "-s $branch" you can test with the latest build.

# get cloudbreak deployer (cbd)
export branch='rc-2.5'
curl -s https://raw.githubusercontent.com/hortonworks/cloudbreak-deployer/master/install-dev | sh -s $branch
mkdir cbd25
cd cbd25
# optionally - the single go executable is placed in /usr/bin or /usr/local/bin but I prefer it isolated
mv $(which cbd) .
alias cbd=$PWD/cbd
# version check
cbd --version

Cloudbreak is an application composed of a number of services running as docker containers. Since we are running cloudbreak on OSX and the deployer was built before native docker support was available, it requires docker-machine, a lightweight VM that runs the docker engine and hosts all cloudbreak services. (I believe it is possible to change the cloudbreak deployer code to use OSX native docker; I will test this and report on it in a future post.)

cbd machine create
docker-machine ls

We need to tell the docker cli to connect to the docker engine in the VM and not the native OSX docker engine (in case it is installed). The following env setting is supplied by docker-machine...

docker-machine env cbd
# output
# export DOCKER_TLS_VERIFY="1"
# export DOCKER_HOST="tcp://192.168.64.10:2376"
# export DOCKER_CERT_PATH="/Users/eden/.docker/machine/machines/cbd"
# export DOCKER_MACHINE_NAME="cbd"
# Run this command to configure your shell:
# eval $(docker-machine env cbd)
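A quick way to confirm which engine the docker client will talk to is to inspect the variables that docker-machine env exports. The helper below is a minimal sketch; the function name docker_target is an assumption made for illustration and is not part of cbd or docker.

```shell
# Hypothetical helper: report which docker engine the current shell targets.
# It only inspects environment variables; it does not contact docker.
docker_target() {
  if [ -n "${DOCKER_MACHINE_NAME:-}" ] && [ -n "${DOCKER_HOST:-}" ]; then
    echo "docker-machine '${DOCKER_MACHINE_NAME}' at ${DOCKER_HOST}"
  else
    echo "native docker engine (no docker-machine variables set)"
  fi
}
```

Run docker_target before and after eval $(docker-machine env cbd) to see the target change.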

Before we run cbd pull, which downloads all the containers for the cloudbreak application, let's look at what our docker cli thinks about the platform it is running on. This illustrates the distinction between the OSX native docker and the docker-machine that cbd installed for you. The first check only works if native docker is installed on OSX.

docker info | grep Kernel
# before eval, if docker is installed on OSX
# Kernel Version: 4.9.87-linuxkit-aufs
eval $(docker-machine env cbd)
docker info | grep Kernel
# after eval regardless...
# Kernel Version: 4.9.89-boot2docker

Notice that the docker containers now run inside the docker-machine VM, and the docker client talks to the docker engine in that VM. We are now ready to pull the containers for the services that compose cloudbreak. This may take some time…

cbd pull

# list all containers

docker ps

# a nicer way to look at it - as you can see there are 14 containers which together make up cloudbreak

docker ps --format '{{.Names}}' | cut -d_ -f2
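To see what the cut step does, here is the same pipeline applied to a few sample names. The names are assumptions for illustration, following the project_service_index pattern that docker-compose uses; your actual list comes from docker ps.

```shell
# Sample container names (assumed for illustration), one per line.
sample_names='cbreak_cloudbreak_1
cbreak_uluwatu_1
cbreak_identity_1'

# Field 2 of an underscore-separated container name is the service name.
service_names() {
  cut -d_ -f2
}

# print the service names, then count them
printf '%s\n' "$sample_names" | service_names
printf '%s\n' "$sample_names" | service_names | wc -l
```

With the real list, docker ps --format '{{.Names}}' | service_names | wc -l gives the number of running cloudbreak services.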

Cloudbreak deployer uses a file called Profile to generate the docker-compose.yml. Cloudbreak deployer will create a default Profile for you, but at the very least you need to add a default password by adding UAA_DEFAULT_USER_PW=mypss. The default user is admin@example.com; I am going to change that, but this is an optional step.

cat << EOF >> Profile
export ULU_SUBSCRIBE_TO_NOTIFICATIONS=true
export UAA_DEFAULT_USER_PW='admin'
export UAA_DEFAULT_USER_FIRSTNAME='first'
export UAA_DEFAULT_USER_LASTNAME='last'
export UAA_DEFAULT_USER_EMAIL='email@domain.com'
export UAA_ZONE_DOMAIN='domain.com'
EOF
# use cloudbreak deployer to start cloudbreak
cbd start
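Because the heredoc above appends with >>, running it twice leaves duplicate export lines in Profile. A minimal sketch of an idempotent alternative follows; profile_set is a hypothetical helper name, and the sketch assumes values contain no single quotes.

```shell
# Hypothetical helper: set KEY to VALUE in a Profile-style file made of
# "export KEY='VALUE'" lines, replacing any earlier line for the same KEY.
# Assumes VALUE contains no single quotes.
profile_set() {
  key=$1
  value=$2
  file=${3:-Profile}
  touch "$file"
  # keep every line except earlier exports of this key
  grep -v "^export ${key}=" "$file" > "${file}.tmp" || true
  printf "export %s='%s'\n" "$key" "$value" >> "${file}.tmp"
  mv "${file}.tmp" "$file"
}
```

For example, profile_set UAA_DEFAULT_USER_PW admin can be run any number of times and still leaves a single line for that key.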

Now, when all the containers are up and running, you can open the cloudbreak UI

eval $(./cbd env export | grep ULU_HOST_ADDRESS) ; open $ULU_HOST_ADDRESS

That's enough UI for one day. Let's go back to the command line and download the Cloudbreak CLI.

cbd env export provides a list of the environment variables that are set and used by cbd. ULU_HOST_ADDRESS is the address of the cloudbreak login UI. You can log in with UAA_DEFAULT_USER_EMAIL and UAA_DEFAULT_USER_PW - in my case this will be enuriel@hortonworks.com / admin

Cloudbreak command line interface (cb)

Cloudbreak cli (cb) is the command line wrapper for the cloudbreak REST API, which makes automating deployments easier. It resembles aws, openstack and other common clis that wrap REST APIs. As such, the first thing we need to do is download the client and configure it to talk to the REST endpoint.

# Download the Cloudbreak CLI
curl -Ls https://s3-us-west-2.amazonaws.com/cb-cli/cb-cli_2.5.0_$(uname -s)_$(uname -m).tgz | sudo tar -xz
# Configure the client so we don't have to provide this every time
cb configure --server $ULU_HOST_ADDRESS --username email@domain.com --password admin
# this is where it is stored
cat ~/.cb/conf
# test cli is working
cb blueprint list
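The download URL embeds the OS name and CPU architecture reported by uname. The sketch below prints the URL the curl command above would request on the current machine; cb_cli_url is a hypothetical helper name, and whether archives exist at that location for versions other than 2.5.0 is not something this article confirms.

```shell
# Hypothetical helper: print the CLI download URL for a given version,
# following the URL pattern used in the curl command above.
cb_cli_url() {
  version=${1:-2.5.0}
  echo "https://s3-us-west-2.amazonaws.com/cb-cli/cb-cli_${version}_$(uname -s)_$(uname -m).tgz"
}

cb_cli_url 2.5.0
```

Printing the URL first makes it easy to check the OS and architecture parts before piping the download into tar.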

Creating a Cluster on openstack IaaS

Create Credentials

A credential is a logical container storing the information required to interface with a specific IaaS to provision and manage resources. Cloudbreak can store multiple credentials for the same IaaS, for example two different users or projects on an openstack cloud, or different IaaS providers (rackspace, internal), as well as credentials for other IaaS types (aws, gcp, azure, openstack). Cloudbreak's architecture allows for extending the support to other infrastructure targets.

We are now ready to configure credentials for the IaaS (openstack in this example). For openstack, at the minimum we require: user/pass, authentication URL and user domain.

The above information can be obtained from the keystone UI "Access and Security" view credential, or from an osrc file with the relevant environment variables if you have one.

# read the required information
for i in endpoint domain user pass; do read -p "os$i: " $([[ $i == pass ]] && echo -s) os$i; export os$i ; done

This little snippet prompts you for the information needed for the openstack credentials and stores it in environment variables (osendpoint, osdomain, osuser and ospass).
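Before passing these variables to cb, it can help to check that none of them was left empty at the prompt. The following is a minimal sketch; require_vars is a hypothetical helper name and not part of the cb cli.

```shell
# Hypothetical helper: fail if any named variable is unset or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # look up the value of the variable whose name is in $name
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: require_vars osendpoint osdomain osuser ospass || echo "re-run the prompt loop"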

# use cb cli to create the credentials
cb credential create openstack keystone-v3 --tenant-user $osuser --tenant-password $ospass --endpoint $osendpoint --user-domain $osdomain --name "cred"
# list the credentials stored in cloudbreak
cb credential list
# you can delete a credential by name, like this...
cb credential delete --name cred

# you can also create credentials from a saved json file - for example:
# (written with > rather than >> so that re-running does not append a second JSON document)
cat << EOF > cred.json
{
  "cloudPlatform": "OPENSTACK",
  "description": "json credentials for field cloud - internal facing",
  "name": "fieldCloud",
  "parameters": {
    "endpoint": "$osendpoint",
    "facing": "internal",
    "keystoneAuthScope": "cb-keystone-v3-default-scope",
    "selector": "cb-keystone-v3-default-scope",
    "keystoneVersion": "cb-keystone-v3",
    "password": "$ospass",
    "userDomain": "$osdomain",
    "userName": "$osuser"
  }
}
EOF
cb credential create from-file --cli-input-json cred.json --name cred

# We can now test the credential by listing instance types available in the IaaS. Notice that your region should be entered for --region instead of SE

cb cloud instances --region SE --credential cred

We now have a local setup of cloudbreak deployer, cloudbreak and the cloudbreak cli, configured with our openstack credentials. Next we can launch our clusters. The cb cli command for cluster creation requires a JSON file with all the information describing the cluster, including:

- blueprint name

- admin user/pass

- hostgroups and count per group

- network setting - existing, or new network/subnet and security groups

- instances flavor (ram,cpus) per group

- image catalog, and image for the cluster nodes

- credential (private/public key pairs) for accessing the cluster nodes (stored in openstack and referenced by name)

- SW repositories, GW, Authenticators, and more…

There are 4 blueprints available out of the box. Additional blueprints can be added using the cli or the UI (whether exported from an existing cluster or created with a script/template). There is only one HDF blueprint, and it is for a NiFi cluster. To list the available blueprints:

# default JSON output

cb blueprint list

# or as a shell function to list all available blueprints

bps() { cb blueprint list --output yaml | grep Name | cut -d"'" -f2; }

bps
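The function relies on each blueprint name appearing on a Name line wrapped in single quotes. The sample input below is an assumption about that YAML shape, used only to show what the grep and cut pipeline extracts; check the real output of cb blueprint list --output yaml on your install.

```shell
# Extract the text between the first pair of single quotes on each Name line.
names_from_yaml() {
  grep Name | cut -d"'" -f2
}

# Assumed sample of the YAML output (not captured from a real run).
printf "%s\n" \
  "- Name: 'EDW-ETL: Apache Hive, Apache Spark 2'" \
  "  Description: 'sample description'" \
  "- Name: 'Flow Management: Apache NiFi'" | names_from_yaml
```

Splitting on the single quote rather than on the colon matters here, because the blueprint names themselves contain colons.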

There are many ways to create the input json file for the cb cluster create command, a few options are:

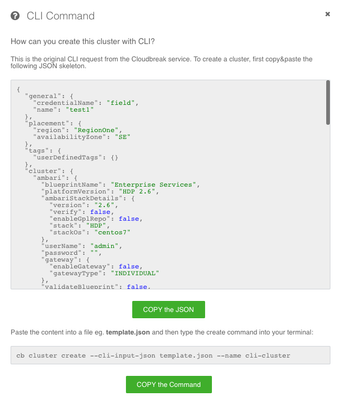

- Use the cloudbreak UI cluster creation wizard: fill in the details and, just before creating the cluster, click on show-cli, which lets you copy the cluster creation JSON

- Use the cb cluster generate-template command and fill in the required information in the template

- Use environment variables with the cb template, or hand-write the JSON

- Use jinja2 templates or some other template engine

I chose to use the cloudbreak UI to create a JSON payload, save it and template it with environment variables. My environment has the following constraints:

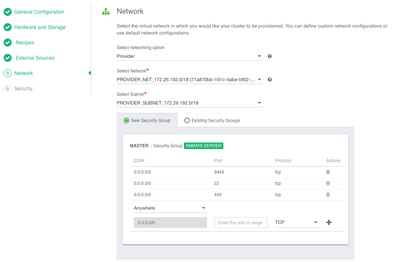

- I cannot create new networks and have to use an existing network and subnet ids

- I cannot create or attach volumes to my instances (which forces me to use instance storage)

- I am using an existing security group id
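Templating a saved JSON payload with environment variables can be as simple as replacing placeholder tokens. The sketch below is one hedged way to do it and is not the author's script: the __TOKEN__ placeholder convention, the render_template name and the two-key JSON fragment are all assumptions for illustration, and the fragment is not the Cloudbreak cluster schema.

```shell
# Hypothetical sketch: replace __TOKEN__ placeholders in a template read
# from stdin with the values of the same-named shell variables.
# Assumes values contain no newlines.
render_template() {
  out=$(cat)
  for token in "$@"; do
    eval "value=\${$token:-}"
    # escape characters that are special in a sed replacement
    safe=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')
    out=$(printf '%s\n' "$out" | sed "s|__${token}__|${safe}|g")
  done
  printf '%s\n' "$out"
}

# illustrative fragment only, not the real cluster JSON
name=hdpedw
workers=3
printf '{"cluster": "__name__", "count": __workers__}\n' | render_template name workers
```

Existing ids such as the network, subnet and security group can be passed the same way, which keeps environment-specific values out of the saved template.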

I am using a parameter file with key/value pairs as the default values for setting up a new automated cluster deployment script. I then use create_hdp.sh to read the defaults, allow the user to override the parameters, and create a set of files that will be used to automate launching clusters with the provided parameters. This setup allows me to have a predefined set of cluster profiles that I can launch at will.

The following file is used as a starting point; it is called prms

cred="cred" # Cloudbreak credential we created
#name="hdfnifi" # Cluster name (HDF default)
name="hdpedw" # Cluster name (HDP default - one of the 3 built-in)
#bp="Flow Management: Apache NiFi" # Blueprint name (built-in HDF default)
bp="EDW-ETL: Apache Hive, Apache Spark 2" # Blueprint name
user="admin" # Ambari user
pass="admin" # Ambari password
sgid="" # Security group ID from openstack
nid="" # Existing network ID from openstack
snid="" # Existing subnet ID from openstack
key="mykey" # Existing key-pair from openstack
workers=3 # Number of worker nodes
masters=1 # Number of master nodes
workerType="m3.xlarge" # Instance flavor for workers
masterType="m3.xlarge" # Instance flavor for masters
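One way such a defaults file can be consumed is to source it and then apply key=value overrides from the command line. This is a minimal sketch of that idea and not the code of create_hdp.sh; the function name load_params and the override syntax are assumptions.

```shell
# Hypothetical sketch: source a defaults file, then apply key=value overrides.
load_params() {
  defaults_file=$1
  shift
  # the defaults file is plain shell assignments, so sourcing it sets them
  . "$defaults_file"
  for pair in "$@"; do
    key=${pair%%=*}
    val=${pair#*=}
    case $pair in
      *=*) ;;
      *) echo "ignoring override without '=': $pair" >&2; continue ;;
    esac
    case $key in
      ''|[0-9]*|*[!A-Za-z0-9_]*) echo "ignoring invalid name: $key" >&2; continue ;;
    esac
    # assign the override to the variable named by $key
    eval "$key=\$val"
  done
}
```

For example, load_params ./prms workers=5 name=hdpbig keeps every default from the file except the two values given on the command line.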

To use my workflow, just use git clone, make your mods to parms, then execute create_hdp.sh

git clone https://github.com/edennuriel/auto-cbcli.git
cd auto-cbcli
bin/create_hdp.sh

Now, every time I need a cluster with the specified blueprint and number of workers, I just cd into clustername and execute ./create_clustername.sh

You do not have to use this workflow or these scripts to get your cluster running; they are provided as an example of a workflow that works for me. You can instead manually edit the cluster JSON file with the relevant information for your setup and call cb cluster create.

If you prefer to follow the wizard in the UI and save the JSON file (for example as clstr.json), follow these steps in the cloudbreak UI:

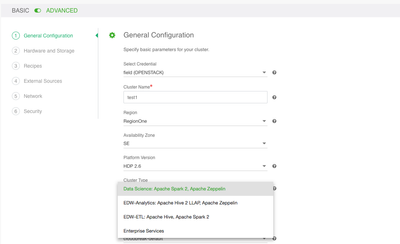

Create cluster - select the Advanced option

Choose general properties like name, platform placement and, most importantly, the blueprint to use

Configure HW and Storage - instance type, volumes and node count per host group

Skip recipes and external sources (we will go over those in a future how-to) and configure the network

Finish with security and then click "SHOW CLI COMMAND"

Copy the JSON and paste it to a file, for example as clstr.json. You can then create the cluster with the following command.

cb cluster create --cli-input-json clstr.json --wait