NiFi: Easy custom logging of diverse sources in me...

Created on 11-05-2016 01:57 AM - edited 08-17-2019 08:31 AM

Overview

In this article I show how to very quickly build custom logging against source log files. Adding new custom logging to the flow takes only seconds: each new custom log is copy-pasted from an existing one and 3 configuration properties are changed. The result is a single flow with many diverse custom logs against your system.

Use Cases

Use cases include:

- Application development: permanent or throwaway logging focused on debugging software issues or NiFi flows.

- Production: logging for focused metrics gathering, auditing, faster troubleshooting, and alerting.

- All environments: prefiltering Splunk ingests to save on cost and to filter out unneeded log data.

- Use your imagination … how can this flow pattern make your life better / more effective?

Flow Design

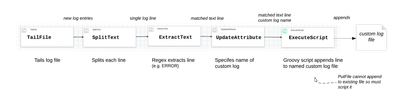

Single customized log

A single customized log is achieved by tailing a log file, splitting the lines of text, extracting lines that match a regex, specifying a custom log filename for the regex-extracted lines, and appending the lines to that custom log file. The diagram below shows more details, which are elaborated later in the article.

For example, you could extract all lines that are WARN and write them to their own log file, or all lines with a specific Java class, or all lines with a specific processor name or id … or a particular operation, or user, or something more complex.
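As a rough illustration of the matching step, the sketch below applies a regex to individual log lines in plain Groovy, outside of NiFi. The sample lines, the pattern, and the `matchingLines` helper are hypothetical; in the flow itself this work is done by SplitText and ExtractText, not by script code.

```groovy
// Hypothetical sample of source log lines (not taken from a real log).
def sourceLines = [
    '2016-11-05 01:57:00,001 INFO [main] o.a.nifi.Example Started',
    '2016-11-05 01:57:01,002 WARN [Timer-1] o.a.nifi.Example Queue is filling up',
    '2016-11-05 01:57:02,003 ERROR [Timer-2] o.a.nifi.Example Failed to write',
    '2016-11-05 01:57:03,004 WARN [Timer-1] o.a.nifi.Example Back pressure applied'
]

// Hypothetical helper: keep only the lines that fully match the regex,
// similar in spirit to matching one split line at a time.
List<String> matchingLines(List<String> lines, String regex) {
    lines.findAll { it ==~ regex }
}

// Assumed pattern for WARN lines; adjust it to the layout of your own log.
def warnLines = matchingLines(sourceLines, /.*\sWARN\s.*/)
warnLines.each { println it }
```

Swapping the pattern (for a class name, a processor id, or a username) is the only change needed to target a different custom log.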

Multiple customized logs in same flow

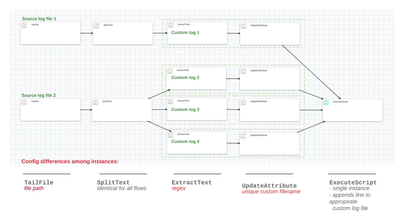

The above shows only one custom logging flow. You can build many custom logs against the same source log file (e.g. one for WARN, one for ERROR, one for pid, and another for username … whatever).

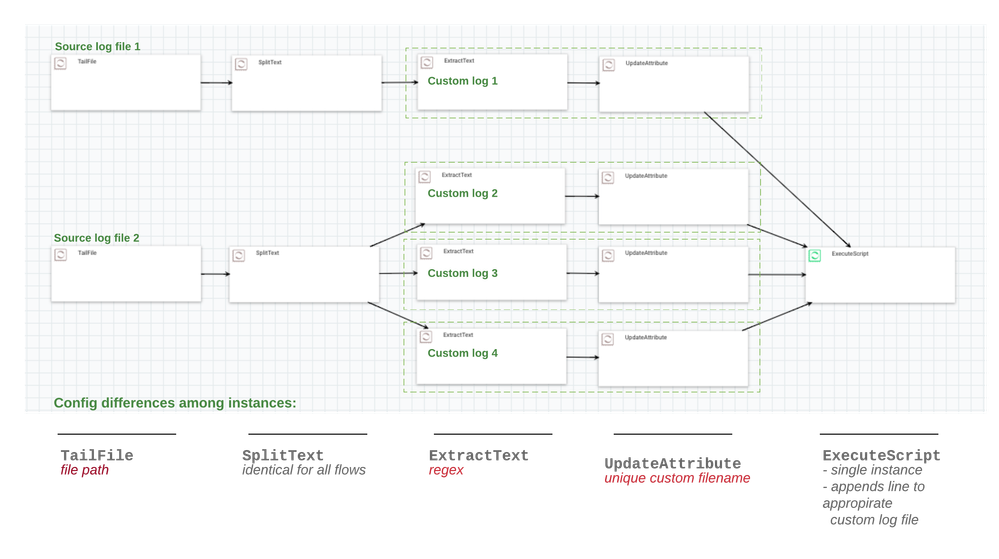

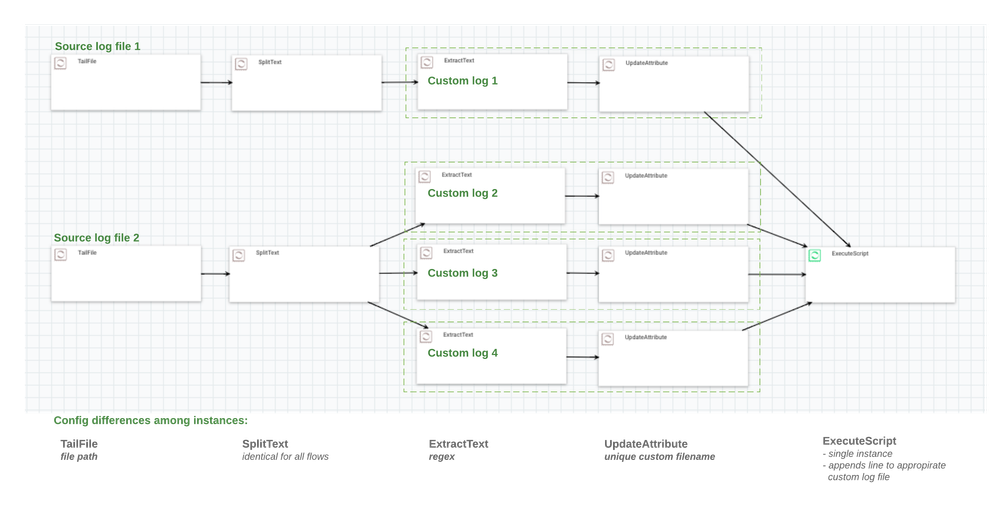

You can also build many custom log files against multiple source logs. The diagram below shows multiple customized logs in the same flow.

The above flow will create 4 separate custom log files … all written out by the same ExecuteScript.

Add a new custom log: Good ol’ copy-paste

Note in the above that:

- each custom log flow is identical except for 3 configuration property changes (if you are adding a custom log against the same source log, only 2 configuration properties are different)

- all custom logging reuses ExecuteScript to append to a custom log file: ExecuteScript knows which custom file to append to because each FlowFile it receives carries the matched text and its associated custom filename
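The idea of one shared writer can be sketched in plain Groovy, outside of NiFi. The maps below are hypothetical stand-ins for FlowFiles (matched text plus a `customfilename` attribute), and a temporary directory stands in for the staging directory; `appendToCustomLog` is an illustrative name, not part of NiFi.

```groovy
import java.nio.file.Files

// Hypothetical stand-ins for FlowFiles arriving from different custom log flows.
def flowFiles = [
    [content: 'WARN Queue is filling up',   customfilename: 'warn.log'],
    [content: 'ERROR Failed to write',      customfilename: 'error.log'],
    [content: 'WARN Back pressure applied', customfilename: 'warn.log']
]

// Temporary directory used here in place of a real staging directory.
File stagingDir = Files.createTempDirectory('custom-logs').toFile()

// One writer for every custom log: the target file is chosen from the
// filename that travels with each piece of matched text.
void appendToCustomLog(File dir, Map flowFile) {
    new File(dir, flowFile.customfilename as String)
        .append(flowFile.content + '\n', 'UTF-8')
}

flowFiles.each { appendToCustomLog(stagingDir, it) }
```

Because the filename travels with the data, adding another custom log requires no change to the writer itself.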

Because all custom log flows are identical except for 3 configs, it is super-fast to create a new flow. Just:

- copy an existing custom log flow (TailFile through UpdateAttribute) by Shift-clicking each processor and connection, or by lassoing them with a Shift-mouse drag

- paste on palette and connect to ExecuteScript

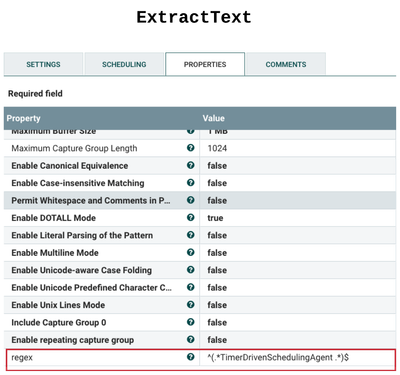

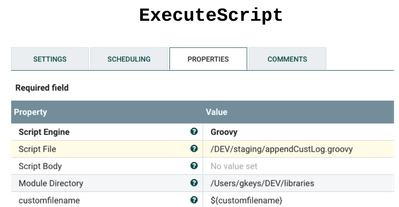

- change the 3 configs (TailFile > File to Tail; ExtractText > regex; UpdateAttribute > customfilename)

Technical note: when you paste to the palette, each processor and connection retains the same name but is assigned a new UUID, thereby guaranteeing a new instance of each.

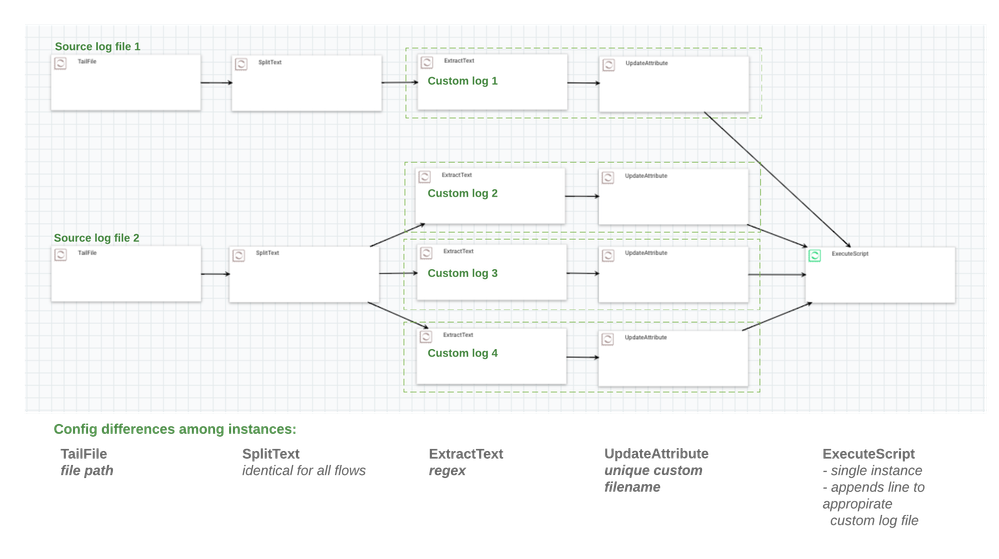

Tidying up

Because you will likely add many custom log flows to the same flow, and each source log file may split into multiple custom log flows (e.g. Source log file 2 in the diagram above), your palette may get a bit busy. Grouping each custom log flow into the same logical process groups helps manage the palette. It also makes copy-pasting new flows and deleting other custom log flows easier.

Here is what it will look like.

Now you have your choice of managing entire process groups (copy-paste, delete) or processors inside of them (copy-paste, delete), thus giving you more power to quickly add or remove custom logging to your flow:

- To add a custom log to an existing TailFile-SplitText: open the process group and copy-paste ExtractText-UpdateAttribute, then make one config change to each processor.

- To add a custom log against a new source log: copy-paste any two process groups in the subflow, make 1 config change in the first (TailFile), and make the necessary changes in the second process group (add/delete custom log flows, 2 config changes for each custom log).

Implementation specifics

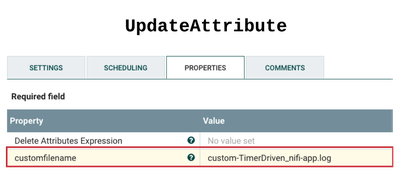

The config changes for each new custom log file

The configs that do not change for each new custom log

The groovy script

    import org.apache.commons.io.IOUtils
    import org.apache.nifi.processor.io.StreamCallback
    import java.nio.charset.StandardCharsets

    def flowFile = session.get()
    if (!flowFile) return

    // customfilename is a property on ExecuteScript; resolve it against this FlowFile
    def filename = customfilename.evaluateAttributeExpressions(flowFile).value
    def f = new File("/Users/gkeys/DEV/staging/${filename}")

    flowFile = session.write(flowFile, { inputStream, outputStream ->
        try {
            // Append the matched text to the custom log file
            f.append(IOUtils.toString(inputStream, StandardCharsets.UTF_8) + '\n')
        } catch (e) {
            log.error("Error during processing custom logging: ${filename}", e)
        }
    } as StreamCallback)

    session.transfer(flowFile, REL_SUCCESS)

Note that the jar for org.apache.commons.io.IOUtils is placed in the Module Directory set in ExecuteScript.

Summary

That's it. You can build custom logging from the basic flow shown in the first diagram, and then quickly add new ones in just a few seconds. Because they are so easy and fast to build, you could easily build them as throwaways used only during the development of a piece of code or a project. On the other hand this is NiFi, a first-class enterprise technology for data in motion. Surely custom logging has a place in your production environments.

Extensions

The following could easily be modified or extended:

- for the given Groovy code, it is easy to add rolling logic for your custom log files (it would be applied to all custom logs)

- instead of appending to a local file, you could stream to a Hive table (see link below)

- you could build NiFi alerts against the custom log files that are written out (probably best as another flow that tails the custom log and responds to its content)
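As one possible reading of the rolling idea, here is a minimal sketch of size-based rolling in plain Groovy. It is not the article's implementation: `rollIfNeeded`, `appendWithRolling`, `maxBytes`, and `maxBackups` are hypothetical names, the demo writes to a temporary directory, and the code assumes a single writer (it makes no attempt to be safe under concurrent tasks).

```groovy
import java.nio.file.Files

// Hypothetical helper: roll the log once it has reached maxBytes.
void rollIfNeeded(File logFile, long maxBytes, int maxBackups) {
    if (!logFile.exists() || logFile.length() < maxBytes) return
    // Drop the oldest backup, then shift name.(n-1) -> name.n, ..., name.1 -> name.2.
    new File(logFile.path + '.' + maxBackups).delete()
    for (int i = maxBackups - 1; i >= 1; i--) {
        File older = new File(logFile.path + '.' + i)
        if (older.exists()) older.renameTo(new File(logFile.path + '.' + (i + 1)))
    }
    // The current log becomes name.1; the next append creates a fresh file.
    logFile.renameTo(new File(logFile.path + '.1'))
}

// Hypothetical helper: check the size first, then append one line.
void appendWithRolling(File logFile, String text, long maxBytes, int maxBackups) {
    rollIfNeeded(logFile, maxBytes, maxBackups)
    logFile.append(text + '\n', 'UTF-8')
}

// Demo with a deliberately tiny limit (40 bytes) so rolling happens quickly.
File demoDir = Files.createTempDirectory('rolling-demo').toFile()
File demoLog = new File(demoDir, 'warn.log')
(1..5).each { appendWithRolling(demoLog, "WARN sample line ${it}", 40L, 2) }
```

In the script from this article, a check like `rollIfNeeded` could be called just before the existing `f.append(...)`, with the size limit chosen to suit your environment.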

References

- http://hortonworks.com/apache/nifi/

- https://nifi.apache.org/docs/nifi-docs/html/user-guide.html

- https://nifi.apache.org/docs/nifi-docs/html/expression-language-guide.html

- https://community.hortonworks.com/articles/60868/enterprise-nifi-implementing-reusable-components-a....

- https://community.hortonworks.com/articles/52856/stream-data-into-hive-like-a-king-using-nifi.html

Created on 02-26-2019 04:24 PM

Very helpful article - thank you. I am implementing this approach right now but I need to rotate my custom log file when it reaches # MB. Can anyone show me how to do that in the Groovy code above? The article says it can be done (for the given groovy code, it is easy to build rolling logic to your custom log files) but leaves it at that.

Created on 02-26-2019 04:34 PM

Hi Jim, use the log4j library: it can be configured with an appender that defines how the logs rotate. Log4j is pretty standard in the Java world.

Here is a good tutorial: https://www.journaldev.com/10689/log4j-tutorial