Community Articles (Cloudera Community)

Using Solr's Extracting Request Handler with Apach...

Created on 06-28-2016 10:21 PM - edited 08-17-2019 11:52 AM

The PutSolrContentStream processor in Apache NiFi makes use of Solr's ContentStreamUpdateRequest, which means it can stream arbitrary data to Solr. Typically this processor is used to insert JSON documents, but it can be used to stream any kind of data. The following tutorial shows how to use NiFi to stream data to Solr's Extracting Request Handler.

Setup Solr

- Download the latest version of Solr (6.0.0 at the time of writing) and extract the distribution

- Start Solr with the cloud example: ./bin/solr start -e cloud -noprompt

- Verify Solr is running by going to http://localhost:8983/solr in your browser

Setup NiFi

- Download the latest version of NiFi (0.6.1 at the time of writing) and extract the distribution

- Start NiFi: ./bin/nifi.sh start

- Verify NiFi is running by going to http://localhost:8080/nifi in your browser

- Create a directory under the NiFi home that GetFile will watch for new files:

cd nifi-0.6.1
mkdir data
mkdir data/input

Create the NiFi Flow

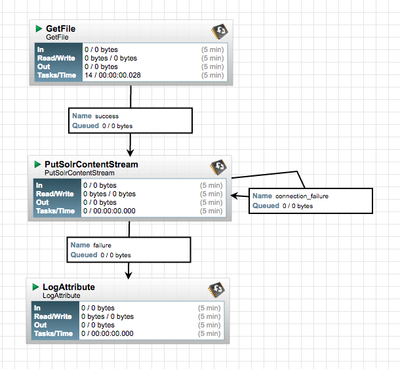

Create a simple flow of GetFile -> PutSolrContentStream -> LogAttribute:

The GetFile Input Directory should be ./data/input corresponding the directory created earlier.

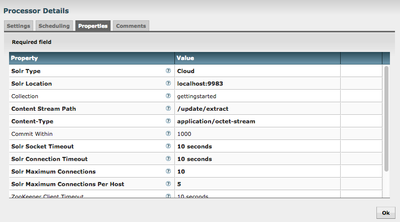

The configuration for PutSolrContentStream should be the following:

- The Solr Type is set to Cloud since we started the cloud example

- The Solr Location is the ZooKeeper connection string for the embedded ZK started by Solr

- The Collection is the example gettingstarted collection created by Solr

- The Content Stream Path is the path of the Solr request handler used for extracting text; this corresponds to a handler path defined in solrconfig.xml

- The Content-Type is application/octet-stream so that we can stream arbitrary data
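The settings above can be summarized as a plain Python mapping, which may help when recreating the flow. This is a minimal sketch under stated assumptions: the screenshot with the exact values is not reproduced here, so the Solr Location of `localhost:9983` assumes the cloud example's embedded ZooKeeper, which listens on the Solr port plus 1000, and the Content Stream Path of `/update/extract` assumes the extracting handler mapping in Solr's default solrconfig.xml. The names `embedded_zk_address` and `PUT_SOLR_CONTENT_STREAM_PROPERTIES` are hypothetical.

```python
def embedded_zk_address(host: str = "localhost", solr_port: int = 8983) -> str:
    """Address of the ZooKeeper embedded by `bin/solr start -e cloud`.

    Assumption: with no external ZooKeeper (-z), Solr runs an embedded
    ZooKeeper on its own port + 1000.
    """
    return f"{host}:{solr_port + 1000}"


# Hypothetical summary of the PutSolrContentStream settings described above.
# Keys are the property names used in the text; values are assumed defaults.
PUT_SOLR_CONTENT_STREAM_PROPERTIES = {
    "Solr Type": "Cloud",                        # the cloud example was started
    "Solr Location": embedded_zk_address(),      # ZooKeeper connect string
    "Collection": "gettingstarted",              # created by the cloud example
    "Content Stream Path": "/update/extract",    # handler path in solrconfig.xml
    "Content-Type": "application/octet-stream",  # stream arbitrary bytes
}

if __name__ == "__main__":
    for name, value in PUT_SOLR_CONTENT_STREAM_PROPERTIES.items():
        print(f"{name}: {value}")
```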

The extracting request handler is described in detail here: https://wiki.apache.org/solr/ExtractingRequestHandler

That page shows that a parameter called "literal.id" is normally passed on the URL. Any user-defined properties on PutSolrContentStream are passed to Solr as URL parameters, so by clicking the + icon in the top-right corner we can add a "literal.id" property and set its value to the UUID of the flow file.
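To make the parameter passing concrete, here is a rough Python equivalent of the request that reaches the extracting handler: the file bytes are POSTed with the configured Content-Type, and each user-defined property becomes a query parameter. It is a sketch, not how the processor is implemented; in Cloud mode PutSolrContentStream locates Solr nodes through ZooKeeper rather than a fixed URL. The base URL `http://localhost:8983/solr` and the helper `build_extract_request` are assumptions for illustration. In NiFi itself, the literal.id value is presumably set with the Expression Language reference `${uuid}`.

```python
import uuid
from urllib.parse import urlencode
from urllib.request import Request


def build_extract_request(base_url, collection, body, doc_id, extra_params=None):
    """Build (but do not send) a POST to Solr's /update/extract handler.

    doc_id is sent as literal.id, mirroring the user-defined property on
    PutSolrContentStream; extra_params stands in for any other dynamic
    properties, each of which becomes a URL parameter.
    """
    params = {"literal.id": doc_id}
    params.update(extra_params or {})
    url = f"{base_url.rstrip('/')}/{collection}/update/extract?{urlencode(params)}"
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


if __name__ == "__main__":
    flowfile_uuid = str(uuid.uuid4())  # stands in for NiFi's ${uuid}
    req = build_extract_request(
        "http://localhost:8983/solr", "gettingstarted", b"<html></html>", flowfile_uuid
    )
    print(req.get_method(), req.full_url)
    # Against a running Solr, urllib.request.urlopen(req) would send it.
```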

Ingest & Query

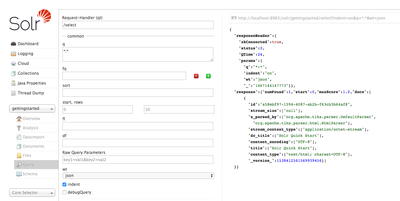

At this point we can copy any document into <nifi_home>/data/input and see if Solr can identify it. For this example I copied quickstart.html file from the Solr docs directory. After going to the Solr Admin UI and querying the "gettingstarted" collection for all documents, you should see the following results:

We can see that Solr identified the document as "text/html", extracted the title as "Solr Quick Start", and set the id to the UUID of the FlowFile from NiFi. We can also see that the extraction was done by Tika behind the scenes.
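The same check can be scripted instead of using the Admin UI. The sketch below builds a select URL that matches all documents (`q=*:*`) and pulls a few of the fields mentioned above out of the JSON response. It assumes the base URL `http://localhost:8983/solr`, and the helper names are hypothetical; fields are read defensively because a schemaless collection such as gettingstarted may store them as multivalued lists.

```python
import json
from urllib.parse import urlencode


def build_select_url(base_url, collection, query="*:*", rows=10):
    """URL for a Solr select request that returns JSON."""
    params = urlencode({"q": query, "rows": rows, "wt": "json"})
    return f"{base_url.rstrip('/')}/{collection}/select?{params}"


def first(value):
    """First element of a multivalued field, or the value itself."""
    if isinstance(value, list):
        return value[0] if value else None
    return value


def summarize_docs(response_text):
    """Extract id, content_type and title from a select response body."""
    docs = json.loads(response_text)["response"]["docs"]
    return [
        {
            "id": doc.get("id"),
            "content_type": first(doc.get("content_type")),
            "title": first(doc.get("title")),
        }
        for doc in docs
    ]


if __name__ == "__main__":
    print(build_select_url("http://localhost:8983/solr", "gettingstarted"))
    # The body returned by that URL (for example via urllib.request.urlopen)
    # can then be passed to summarize_docs().
```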

From here you can send in any type of document (PDF, Word, Excel, etc.) and have Solr extract the text using Tika.

Created on 08-21-2017 03:45 PM

I passed in a text file with data in the input format below, and created the corresponding fields for that input in the Solr Admin UI for the "gettingstarted" collection.

I selected the indexed and stored options when creating the fields, but I still can't see them when querying.

Example:

"response":{"numFound":3,"start":0,"maxScore":1.0,"docs":[
  {
    "stream_size":["null"],
    "x_parsed_by":["org.apache.tika.parser.DefaultParser",
      "org.apache.tika.parser.html.HtmlParser"],
    "stream_content_type":["text/html"],
    "content_encoding":["ISO-8859-1"],
    "content_type":["text/html; charset=ISO-8859-1"],
    "id":"df359cd5-ce8f-44c6-9eb9-681e44eba102",
    "_version_":1576353324702105600},

Input data format (sample.txt):

johnd00,pc-1234,john doe,john.doe@dtaa.com,engineer,08/21/2018 00:00:00,deviceplugged,1000.00

Fields created in managed-schema: userid,pcname,employeename,email,role,date,activity,score

All of the data is available under the _text_ field, but not under the fields above.

What would be the right approach? Do I need to add the fields to the extract update handler?

Please let me know.

Created on 08-21-2017 04:06 PM

I think the results you got are the expected behavior. The extracting request handler has no way to know the field names for the data you sent in. It is generally used to extract text from files like PDFs or Word documents, where you basically have a title and content, and most of the text just goes into the content.

For your scenario, you basically have a CSV where you know the field names. Take a look at Solr's CSV update handler.

You can use this from NiFi by setting the Content Stream Path to /update, setting the Content-Type to application/csv, and then adding a property called fieldnames with your comma-separated list of fields.

I'd recommend playing around with the update handler outside of NiFi first, using curl or a browser tool like Postman. Once the request works the way you want, get it working in NiFi.
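Following that advice, a minimal Python sketch of the CSV request might look like this: it builds (without sending) a POST to /update with Content-Type application/csv and the fieldnames parameter, and previews locally how a row such as the sample.txt line would map onto those fields. The base URL `http://localhost:8983/solr` and the helper names are assumptions; the field names come from the question above and must already exist in the schema.

```python
import csv
import io
from urllib.parse import urlencode
from urllib.request import Request

# Field names from the question above.
FIELDNAMES = ["userid", "pcname", "employeename", "email",
              "role", "date", "activity", "score"]


def build_csv_update_request(base_url, collection, csv_bytes, fieldnames):
    """Build (but do not send) a CSV POST to Solr's /update handler.

    fieldnames is sent as one comma-separated URL parameter, the same
    thing a "fieldnames" dynamic property on PutSolrContentStream would do.
    """
    params = urlencode({"fieldnames": ",".join(fieldnames)})
    url = f"{base_url.rstrip('/')}/{collection}/update?{params}"
    return Request(url, data=csv_bytes,
                   headers={"Content-Type": "application/csv"}, method="POST")


def preview_docs(csv_text, fieldnames):
    """Local preview: pair each CSV column with its field name."""
    rows = csv.reader(io.StringIO(csv_text))
    return [dict(zip(fieldnames, row)) for row in rows if row]


if __name__ == "__main__":
    line = ("johnd00,pc-1234,john doe,john.doe@dtaa.com,engineer,"
            "08/21/2018 00:00:00,deviceplugged,1000.00\n")
    req = build_csv_update_request("http://localhost:8983/solr", "gettingstarted",
                                   line.encode("utf-8"), FIELDNAMES)
    print(req.get_method(), req.full_url)
    print(preview_docs(line, FIELDNAMES))
```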

Created on 08-22-2017 03:44 PM

Thanks for the guidance. I will look into it.

Created on 08-22-2017 03:48 PM

It worked. 🙂