Support Questions

- Cloudera Community

- Support

- Support Questions

- Apache NIFI - How to wait for SQL Insert full comp...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Apache NIFI - How to wait for SQL Insert full completion

- Labels:

-

Apache NiFi

Created 12-19-2022 09:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I wanted to wait until 'ExecuteSQL' processor finishes inserting big file into SQL database (table: bu_service_template), then move to other section. 'ExecuteSQL' processor inserts row by row content of a file. I wanted to wait until file is fully inserted and then move to a next file.

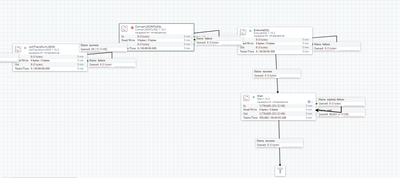

My current flow:

Created 12-19-2022 10:20 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@anton123

It may be helpful if you described your use case in detail.

Looking at your dataflow above, I am not clear on what you are trying to accomplish.

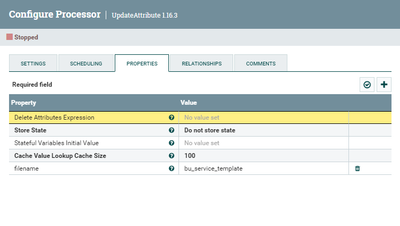

1. Your updateAttribute processor is changing the filename on every NiFi FlowFile that passes through it to "bu_service_template". Why are you doing this?

2. It makes no sense to me that you are looping the "original" relationship in a connection back on the MergeContent processor. All FlowFiles that go into a merged FlowFile get sent to this relationship. This means this loop would just grow and grow in size. Each Merged FlowFile that gets sent to the "merged" relationship connection would just get larger and larger.

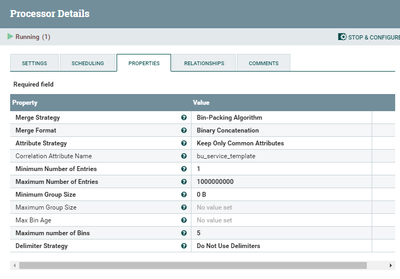

3. Your MergeContent processor configuration is not ideal and in worst case scenario where it actually tries to merge the max configure number of Entries is likely to cause your NiFi to run out of memory since the FlowFile attributes/metadata for every FlowFile allocated to a merge bin is held in heap memory.

4. When trying to merge that many FlowFile, it is important to handle this in a series of mergeContent processors (one after another). Configure first to produce merged FlowFiles of maybe 10,000. Then another that merges yet another 10,000 and finally one last mergeContent that merges 10. The final merged FlowFiles would be 1 billion.

5. I see you are trying to use a correlation attribute in your MergeContent with attribute name "bu_service_template". Where in your dataflow is this attribute getting added to the inbound FlowFiles?

6. Keep in ind that MergeContent will execute as fats as possible and you have min entries set to 1. So it is very possible that at time of execution is sees only one new unbinned FlowFile in inbound connection and adds that to bin. Well now that bin has satisfied the min and thus would be merged with only a single FlowFile in it. So 1 FlowFile goes to merge relationship and 1 FlowFile that made up the merged FlowFile goes to "original" relationship to get merged again. A better configuration would be to set min to 10000 and max to 10000. Then you can also set a "max bin age". The max bin age is used to force a merge even if mins have not been satisfied after x configured amount of time.

3. I am not sure the role you are trying to accomplish with the wait processor after the MergeContent.

I hope some of this configuration guidance helps you with yoru use case.

If you found that the provided solution(s) assisted you with your query, please take a moment to login and click Accept as Solution below each response that helped.

Thank you,

Matt

Created on 12-20-2022 02:12 AM - edited 12-20-2022 02:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Matt,

My flow is basically reading big file and loading it into a SQL DB. I'm simply looking to wait until insert statement is fully completed and after that move to some other task.

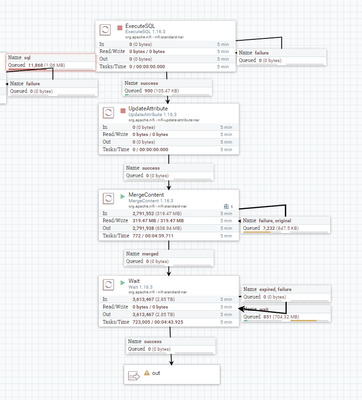

My current flow ( doesn't work as expected right now):

Convert SQL processor:

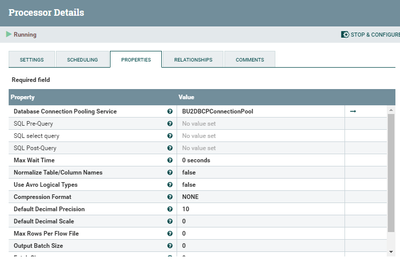

Execute SQL processor:

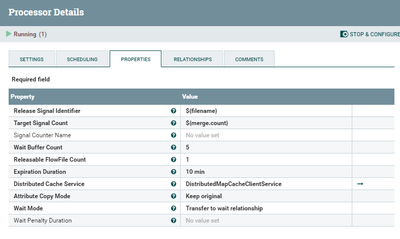

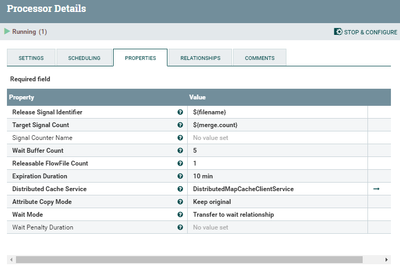

Wait processor:

My question is how to properly configure wait processor to fill success queue only once full insert statement is done?

Full flow:

Created 12-21-2022 06:18 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@anton123

I am still not completely clear on your use, but correct me if below is not accurate:

1. You fetch a single large file.

2. That file is unpacked in to many smaller files.

3. Each of these smaller files are converted in to SQL and inserted via the putSQL processor.

4. You then have unrelated downstream processing you don't want to start until all files produced by the unpackContent processor have been successfully processed by the putSQL processor.

Correct?

If so, the following exampe use case for the NiFi Wait and Notify processor is probably what you are looking to implement for this use case:

https://pierrevillard.com/2018/06/27/nifi-workflow-monitoring-wait-notify-pattern-with-split-and-mer...

If you found that the provided solution(s) assisted you with your query, please take a moment to login and click Accept as Solution below each response that helped.

Thank you,

Matt