Apache Zeppelin Tech Preview Live

Created on 10-23-2015 10:12 PM - edited 09-16-2022 02:45 AM

http://hortonworks.com/hadoop-tutorial/apache-zeppelin/

The Zeppelin Tech Preview (TP) is built against Spark 1.4.1 in HDP. We are about to publish the Spark 1.5.1 TP, and once that is out, the Zeppelin TP will be revised to carry instructions for Spark 1.5.1.

Please play with it and post here if you run into any issues.

Created 10-24-2015 06:13 PM

Added a couple of sentences of clarification to get folks to Spark 1.4.1.

If you have an HDP 2.3.0 cluster, it came with Spark 1.3.1. You can either upgrade the entire cluster with Ambari to 2.3.2 to get Spark 1.4.1, or manually upgrade only Spark to 1.4.1.

Created on 10-24-2015 10:42 AM - edited 08-19-2019 06:01 AM

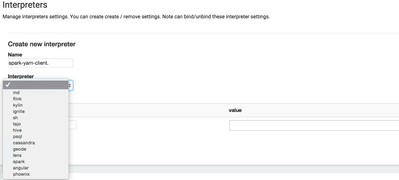

Looks good. Installation was simple.

Blog needs minor editing.

Add the following properties and settings:

spark.driver.extraJavaOptions -Dhdp.version=2.3.2.0-2950 spark.yarn.am.extraJavaOptions -Dhdp.version=2.3.2.0-2950

this should be

spark.driver.extraJavaOptions -Dhdp.version=2.3.2.0-2950

spark.yarn.am.extraJavaOptions -Dhdp.version=2.3.2.0-2950

For newbies, we may want to share this in the blog, as it works like a charm.
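To make the intent of the two settings easier to see, here is a minimal Python sketch that builds them as two separate name/value pairs and prints one per line. It assumes the correction above is that they are two distinct properties sharing the same `-Dhdp.version` value; the helper names `hdp_version_properties` and `format_properties` are hypothetical and not part of Zeppelin or Spark.

```python
def hdp_version_properties(hdp_version):
    """Build the two Spark properties from this post as name -> value pairs.

    hdp_version is the full HDP build string, e.g. "2.3.2.0-2950".
    (Hypothetical helper for illustration only.)
    """
    option = "-Dhdp.version=" + hdp_version
    return {
        "spark.driver.extraJavaOptions": option,
        "spark.yarn.am.extraJavaOptions": option,
    }


def format_properties(props):
    """Render each property on its own line as 'name value'."""
    return "\n".join(f"{name} {value}" for name, value in props.items())


props = hdp_version_properties("2.3.2.0-2950")
print(format_properties(props))
```

The build string should match the HDP version actually installed on the cluster; `2.3.2.0-2950` is simply the value quoted in this post.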

Created 10-24-2015 06:17 PM

Check the sample notebooks section for a link to a few notebooks.

Created on 10-24-2015 05:54 PM - edited 08-19-2019 06:01 AM

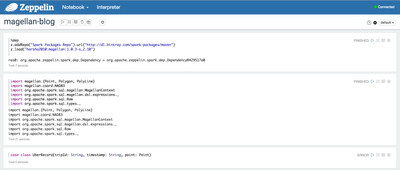

Installed this through the Ambari service for testing, and basic Spark, SparkSQL, and PySpark seem OK.

A couple of issues:

1. Tried out the Magellan blog notebook (after modifying it to include the %dep from the blog), and the UberRecord cell errors out:

From the log:

15/10/24 10:48:06 INFO SchedulerFactory: Job remoteInterpretJob_1445708886505 started by scheduler org.apache.zeppelin.spark.SparkInterpreter313266037
15/10/24 10:48:06 ERROR Job: Job failed
scala.reflect.internal.Types$TypeError: bad symbolic reference. A signature in Shape.class refers to term geometry in value com.core which is not available. It may be completely missing from the current classpath, or the version on the classpath might be incompatible with the version used when compiling Shape.class.
    at scala.reflect.internal.pickling.UnPickler$Scan.toTypeError(UnPickler.scala:847)
    at scala.reflect.internal.pickling.UnPickler$Scan$LazyTypeRef.complete(UnPickler.scala:854)
    at scala.reflect.internal.pickling.UnPickler$Scan$LazyTypeRef.load(UnPickler.scala:863)
    at scala.reflect.internal.Symbols$Symbol.typeParams(Symbols.scala:1489)
    at scala.tools.nsc.transform.SpecializeTypes$$anonfun$scala$tools$nsc$transform$SpecializeTypes$$normalizeMember$1.apply(SpecializeTypes.scala:798)
    at scala.tools.nsc.transform.SpecializeTypes$$anonfun$scala$tools$nsc$transform$SpecializeTypes$$normalizeMember$1.apply(SpecializeTypes.scala:798)
    at scala.reflect.internal.SymbolTable.atPhase(SymbolTable.scala:207)
    at scala.reflect.internal.SymbolTable.beforePhase(SymbolTable.scala:215)
    at scala.tools.nsc.transform.SpecializeTypes.scala$tools$nsc$transform$SpecializeTypes$$norma

(Side note: unlike the other notebooks, this one doesn't seem to have much documentation on what it's doing. It would be good to add some.)

2. The blog currently says:

This technical preview can be installed on any HDP 2.3.x cluster

However, HDP 2.3.0 comes with Spark 1.3.1, which will not work unless users manually install the Spark 1.4.1 TP. So either:

a) we may want to include steps for those users too (especially since the current version of the sandbox comes with 1.3.1), or

b) explicitly ask users to try the Zeppelin TP with 2.3.2.
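The compatibility concern above can be sketched as a small check. This is only an illustration under the assumptions stated in this thread at the time of this post: HDP 2.3.0 bundles Spark 1.3.1, HDP 2.3.2 bundles Spark 1.4.1, and the Zeppelin TP is built against Spark 1.4.1. The names `BUNDLED_SPARK`, `TP_SPARK_VERSION`, and `needs_spark_upgrade` are hypothetical.

```python
# Bundled Spark versions as stated in this thread; other HDP releases are
# deliberately not listed because the thread does not mention them.
BUNDLED_SPARK = {"2.3.0": "1.3.1", "2.3.2": "1.4.1"}

# Spark version the Zeppelin TP was built against, per the original post.
TP_SPARK_VERSION = "1.4.1"


def needs_spark_upgrade(hdp_version):
    """Return True if the HDP release's bundled Spark differs from the TP's.

    Raises ValueError for HDP releases this thread says nothing about.
    """
    spark_version = BUNDLED_SPARK.get(hdp_version)
    if spark_version is None:
        raise ValueError("no bundled Spark version known for HDP " + hdp_version)
    return spark_version != TP_SPARK_VERSION


for hdp in ("2.3.0", "2.3.2"):
    print(hdp, "needs Spark upgrade:", needs_spark_upgrade(hdp))
```

On a running cluster, the Spark version in use can also be read from the Spark context (`sc.version` in PySpark) rather than inferred from the HDP release.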

Created 10-25-2015 10:15 AM

The Zeppelin Ambari service has been updated to install the updated TP Zeppelin bits for Spark 1.4.1 and 1.3.1. The update for 1.5.1 will be made this week, after the TP is out.

The Magellan notebook has also been updated with documentation and can now run standalone on 1.4.1.

Created 10-24-2015 11:03 PM

Tried this on a brand-new 2.3.2 cluster with Spark 1.4.1 and had the same problem with Zeppelin and Magellan. It seems like Zeppelin is doing something to the context.