Community Articles


Created on 01-03-2017 01:38 PM - edited 08-17-2019 06:13 AM

Apache Zeppelin is a flexible, multi-purpose notebook used for code editing and data visualization. It supports a variety of languages (using interpreters) and enables users to quickly prototype, write code, share code, and visualize the results using standard charts.

But as you move toward data apps and front-end development, you may need custom visualizations that go beyond the standard charts.

Fortunately, Zeppelin comes with built-in support for Angular through both a front-end API and back-end API.

I recently worked on a project to identify networking issues, which could range from weak network signals and wiring problems to nodes that are down. To replicate this, I created an example project that maps the path a packet takes from my home Wi-Fi connection to the google.com servers (using traceroute). The complete project is on my GitHub, but for this post I want to focus on the steps I took to display the Spark output within Google Maps, all within Zeppelin.
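The post doesn't show how raw traceroute output becomes network nodes, and the project itself does that step in Spark. As a rough plain-JavaScript sketch, assuming the common Linux/macOS traceroute text format, each responding hop can become a node and each pair of consecutive hops a parent-to-child edge. The function name `parseTraceroute` and the record shapes are hypothetical, and the addresses are documentation-range placeholders.

```javascript
// Hypothetical sketch: turn raw traceroute text into hop records and
// parent -> child edges. Assumes the common Linux/macOS output format:
//   " 3  gw.example.net (203.0.113.9)  9.8 ms  9.1 ms  9.4 ms"
//   " 4  * * *"
const IPV4 = /\b(\d{1,3}(?:\.\d{1,3}){3})\b/;

function parseTraceroute(output) {
  const hops = [];
  for (const line of output.split('\n')) {
    const m = line.match(/^\s*(\d+)\s+(.*)$/);
    if (!m) continue;           // header ("traceroute to ...") or blank line
    const ip = m[2].match(IPV4);
    if (!ip) continue;          // hop did not answer ("* * *")
    hops.push({ hop: Number(m[1]), ip: ip[1] });
  }
  // Consecutive responding hops become parent -> child edges.
  const edges = [];
  for (let i = 1; i < hops.length; i++) {
    edges.push({ parent: hops[i - 1].ip, child: hops[i].ip });
  }
  return { hops, edges };
}

// Example with placeholder (documentation-range) addresses.
const sample = [
  'traceroute to example.com (198.51.100.14), 64 hops max, 52 byte packets',
  ' 1  192.168.1.1 (192.168.1.1)  1.9 ms  1.5 ms  1.4 ms',
  ' 2  * * *',
  ' 3  gw.example.net (203.0.113.9)  9.8 ms  9.1 ms  9.4 ms',
  ' 4  198.51.100.14 (198.51.100.14)  14.2 ms  13.9 ms  14.0 ms',
].join('\n');
const { hops, edges } = parseTraceroute(sample);
console.log(edges);
```

Hops that time out (`* * *`) are skipped here, so their neighbours end up linked directly; a real pipeline might keep them as "unknown" nodes instead.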

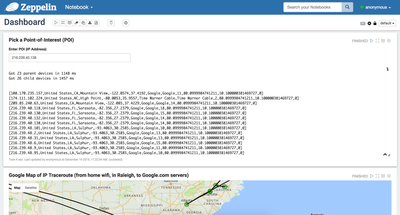

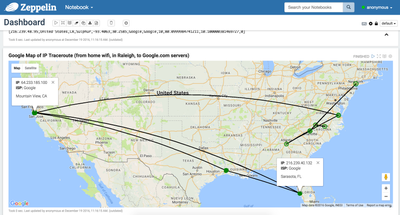

Here's a couple screenshots showing the end result of the traceroute analysis and Google Map within Zeppelin:

Figure 1: Dashboard showing and input (Point-of-Interest, POI) and the Spark output

Figure 2: Google Map created via Angular within Zeppelin Notebook

As Figure 1 shows, the user can enter a point-of-interest (POI): the node, or IP address, that they want to analyze. From there, the Spark code parses the data, retrieves all parent and child nodes, checks the health status of each node, and then uses that data within Angular to generate the Google Map.
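To make "retrieves all parent and child nodes" concrete, here is a minimal sketch, assuming the topology is a list of parent-to-child edges and health is a plain IP-to-status object (both hypothetical; the actual project does this in Spark). It walks upstream and downstream from the POI breadth-first and attaches each node's status.

```javascript
// Hypothetical sketch: collect every upstream (parent) and downstream (child)
// node of a point-of-interest from parent -> child edges, then attach each
// node's health status from a plain { ip: status } object.
function neighborhood(edges, poi, health) {
  const up = new Map();   // child  -> [parents]
  const down = new Map(); // parent -> [children]
  for (const { parent, child } of edges) {
    if (!down.has(parent)) down.set(parent, []);
    if (!up.has(child)) up.set(child, []);
    down.get(parent).push(child);
    up.get(child).push(parent);
  }

  // Breadth-first walk in one direction; `seen` guards against cycles.
  function walk(start, adjacency) {
    const seen = new Set([start]);
    const queue = [start];
    const found = [];
    while (queue.length > 0) {
      const node = queue.shift();
      for (const next of adjacency.get(node) || []) {
        if (!seen.has(next)) {
          seen.add(next);
          found.push(next);
          queue.push(next);
        }
      }
    }
    return found;
  }

  const withHealth = (ip) => ({ ip, status: health[ip] || 'unknown' });
  return {
    poi: withHealth(poi),
    parents: walk(poi, up).map(withHealth),
    children: walk(poi, down).map(withHealth),
  };
}

// Example: a simple four-hop path, analyzing the second hop.
const path = [
  { parent: '192.168.1.1', child: '10.0.0.1' },
  { parent: '10.0.0.1', child: '203.0.113.9' },
  { parent: '203.0.113.9', child: '198.51.100.14' },
];
const result = neighborhood(path, '10.0.0.1', { '192.168.1.1': 'ok', '203.0.113.9': 'bad' });
console.log(result);
```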

Here are the steps that I took to generate this output:

Step 1: Create a JS function to bind all required variables to a global object.

I started with a helper JS function, thanks to @randerzander, which binds my exposed variables to a globally accessible JS object (window.angularVars). In this code, you'll notice that I've included 7 vars, which correspond to the variables I will bind within my Spark code.

```html
%angular
<!-- Avoid constantly editing JS: list the Angular vars you want exposed in an HTML attribute -->
<div id="dummy" vars="id,parents,children,poidevice,childrenStr,parentsStr,devicetopology"></div>

<script type="text/javascript">
  // Given an element in the note and a list of values to fetch from Spark,
  // window.angularVars.myVal will hold the current value of the backend Spark val of the same name.
  function hoist(element) {
    var varNames = element.attr('vars').split(',');
    window.angularVars = {};
    // Scope of the enclosing Zeppelin paragraph, where the bound variables live
    var scope = angular.element(element.parent('.ng-scope')).scope().compiledScope;
    $.each(varNames, function(i, v) {
      // $watch returns a deregistration function; keep it on window so the watcher can be removed later
      window[v + '-watcher'] = scope.$watch(v, function(newVal, oldVal) {
        window.angularVars[v] = newVal;
      });
    });
  }

  hoist($('#dummy'));
</script>
```
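The key to `hoist()` is Angular's `scope.$watch`: the listener runs whenever the watched scope variable changes, so every update pushed from Spark ends up in `window.angularVars`. The sketch below reproduces that pattern outside Zeppelin. `FakeScope` is a hypothetical stand-in with a single-pass `$digest` (real AngularJS repeats the digest until values stabilize), and `hoistInto` is an illustrative variant of `hoist()` that writes to a target object instead of `window`.

```javascript
// Hypothetical stand-in for an Angular scope (NOT AngularJS itself):
// $watch registers a listener for a named property and returns a
// deregistration function; $digest makes a single pass and calls the
// listener of every property whose value changed since the last pass.
class FakeScope {
  constructor() {
    this.$$watchers = [];
  }

  $watch(name, listener) {
    const watcher = { name, listener, last: undefined, initialized: false };
    this.$$watchers.push(watcher);
    return () => {
      this.$$watchers = this.$$watchers.filter((w) => w !== watcher);
    };
  }

  $digest() {
    for (const w of this.$$watchers) {
      const value = this[w.name];
      if (!w.initialized || value !== w.last) {
        // Like Angular, the first call passes the same value as new and old.
        const old = w.initialized ? w.last : value;
        w.last = value;
        w.initialized = true;
        w.listener(value, old);
      }
    }
  }
}

// Same idea as hoist(): mirror each named scope variable into `target`
// and keep the deregistration functions so the watchers can be removed.
function hoistInto(scope, varNames, target) {
  const unwatch = {};
  for (const v of varNames) {
    unwatch[v] = scope.$watch(v, (newVal) => {
      target[v] = newVal;
    });
  }
  return unwatch;
}

// Example: Spark "pushes" a new value and the watcher copies it over.
const scope = new FakeScope();
const angularVars = {};
const unwatch = hoistInto(scope, ['id', 'parents'], angularVars);
scope.id = 7;
scope.$digest();
console.log(angularVars.id); // 7
```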

Step 2: Use Spark to process the data, then bind each required variable to Angular using z.angularBind.

In this example, Spark is used to parse and transform the traceroute data. I chose Spark Streaming with stateful in-memory processing, using mapWithState, to maintain the current health status of each node within the network topology. The health status and enriched data for each node were then persisted to HBase (via Phoenix), which supports additional queries and fast random-access reads and writes.
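mapWithState keeps one piece of state per key across micro-batches. The project's Spark code isn't shown here, so the following is only a plain-JavaScript illustration of that per-key update, assuming hypothetical hop records of the form `{ ip, healthy, ts }` keyed by IP address; a `Map` stands in for Spark's state store.

```javascript
// Plain-JavaScript illustration of a per-key stateful update, the idea behind
// Spark Streaming's mapWithState. NOT Spark code: a Map stands in for the
// state store and each "batch" is an array of hypothetical records
// { ip, healthy, ts } keyed by IP address.
function processBatch(stateStore, batch) {
  const emitted = [];
  for (const rec of batch) {
    const prev = stateStore.get(rec.ip); // state from earlier batches, if any
    const status = rec.healthy ? 'ok' : 'bad';
    const next = {
      ip: rec.ip,
      status,
      lastSeen: rec.ts,
      // Flag transitions (new node, or ok <-> bad) so only changes need attention.
      changed: prev === undefined || prev.status !== status,
    };
    stateStore.set(rec.ip, next); // state carries over to the next batch
    emitted.push(next);           // enriched record, e.g. to persist downstream
  }
  return emitted;
}

// Example: three micro-batches for two nodes.
const store = new Map();
const b1 = processBatch(store, [{ ip: '10.0.0.1', healthy: true, ts: 1 }]);
const b2 = processBatch(store, [
  { ip: '10.0.0.1', healthy: false, ts: 2 },
  { ip: '203.0.113.9', healthy: true, ts: 2 },
]);
const b3 = processBatch(store, [{ ip: '10.0.0.1', healthy: false, ts: 3 }]);
console.log(b3[0]);
```

In Spark the state would be partitioned across executors and checkpointed; here it simply lives in the `Map` between calls.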

At this point, I have generated all of my Spark variables, but I need to bind them to the Angular object in order to create a custom Google Map visualization. Within Spark, here's how you bind the variables:

```scala
// z is the ZeppelinContext available in Zeppelin's Spark interpreter
z.angularBind("poidevice", POIDevice)
z.angularBind("parents", parentDevicesInfo)
z.angularBind("parentsStr", parentDevicesStr)
z.angularBind("children", childrenDevicesInfo)
z.angularBind("childrenStr", childrenDevicesStr)
z.angularBind("devicetopology", devicetopology)
z.angularBind("id", id)
```

The code above binds each Spark variable (such as parentDevicesInfo) to an Angular variable (such as parents). Now that all of my Spark variables are available to my Angular code, I can produce the Google Maps visualization (shown in Figure 2).
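On the JavaScript side, the Step 3 snippet reads each bound row through a positional `values` array (for example `v.values[0]` for the IP address), which suggests each Spark Row arrives as a JSON object with a `values` field. A small, hypothetical helper can name those positions once, so the drawing code doesn't depend on magic indices. The positions come from the Step 3 snippet; the field names are illustrative.

```javascript
// Hypothetical helper: name the positional fields that the Step 3 snippet
// reads from each bound row ({ values: [...] }). Positions come from that
// snippet; the field names are illustrative.
const FIELD_INDEX = { ip: 0, lng: 4, lat: 5, isp: 6, healthFlag: 11 };

function toNamedRows(rows) {
  return (rows || []).map((row) => {
    const named = {};
    for (const [name, idx] of Object.entries(FIELD_INDEX)) {
      named[name] = row.values[idx];
    }
    // Coordinates are parsed with parseFloat, as in the snippet.
    named.lat = parseFloat(named.lat);
    named.lng = parseFloat(named.lng);
    // The snippet draws a node red when the flag equals 1.0 ("bad").
    named.healthy = Number(named.healthFlag) !== 1;
    return named;
  });
}

// Example row with placeholder values.
const rows = [{
  values: ['203.0.113.9', '', 'Region', 'City', '-97.5', '35.4', 'ExampleISP',
           null, null, null, null, '1.0'],
}];
const [device] = toNamedRows(rows);
console.log(device.ip, device.lat, device.lng, device.healthy);
```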

Step 3: Write AngularJS code to display the Google Map

Within Angular, I used the code below to read each variable via window.angularVars.<variable_name>. Then, to map each node or point-of-interest, I drew a circle and colored it green (health status = ok) or red (health status = bad).

```javascript
function initMap() {
  // Read the values bound from Spark (mirrored into window.angularVars by hoist())
  var id = window.angularVars.id;
  var poidevice = window.angularVars.poidevice;
  var children = window.angularVars.children;
  var childrenStr = window.angularVars.childrenStr;
  var parents = window.angularVars.parents;
  var parentsStr = window.angularVars.parentsStr;
  var devicetopology = window.angularVars.devicetopology;
  var POIs = {};

  console.log('POI Value: ' + poidevice[0].values);
  console.log('Topology Value: ' + devicetopology[0].values.toString().split("|")[0]);

  // Center the map on the geographic center of the contiguous USA
  var USA = {lat: 39.8282, lng: -98.5795};
  var map = new google.maps.Map(document.getElementById('map'), {zoom: 5, center: USA});

  //**********************************************************************************
  //
  // Draw circle POI Device
  //
  //**********************************************************************************
  $.each(poidevice, function(i, v) {
    POIs[v.values[0]] = v.values;

    // Create a marker for each POI (values[5] = latitude, values[4] = longitude)
    var pos = {lat: parseFloat(v.values[5]), lng: parseFloat(v.values[4])};
    // Red when the health flag is 1.0 (bad), green otherwise (ok)
    var color = (v.values[11] == '1.0') ? '#FF0000' : '#008000';
    var info = '<b>IP</b>: ' + v.values[0] + '<p><b>ISP:</b> ' + v.values[6] + '<p>' + v.values[3] + ", " + v.values[2];
    console.log('Drawing POI device: ' + v.values[0] + ' ' + JSON.stringify(pos) + ' ' + v.values[5] + ',' + v.values[4]);
    // circle() is a helper defined in the full project (not shown here)
    circle(pos, color, info, map);
  });

  // ...end of code snippet...
}
```
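The snippet calls a `circle()` helper that isn't shown. A possible version, using the Google Maps JavaScript API's `google.maps.Circle` and `google.maps.InfoWindow` classes, might look like this. It is a hypothetical reconstruction, not the project's code: the radius and opacity values are arbitrary, and the optional `maps` parameter (defaulting to `google.maps`) exists only so the function can be exercised without loading the Maps script.

```javascript
// Hypothetical reconstruction of the circle() helper (the original lives in
// the full project and may differ). Uses google.maps.Circle and
// google.maps.InfoWindow from the Google Maps JavaScript API; `maps`
// defaults to the global google.maps namespace.
function circle(pos, color, info, map, maps = google.maps) {
  const shape = new maps.Circle({
    map: map,
    center: pos,        // {lat, lng} literal built in initMap()
    radius: 50000,      // meters; arbitrary size that is visible at zoom 5
    strokeColor: color,
    strokeOpacity: 0.8,
    strokeWeight: 2,
    fillColor: color,
    fillOpacity: 0.35,
  });
  // Show the IP / ISP / location HTML when the circle is clicked.
  const infoWindow = new maps.InfoWindow({ content: info });
  shape.addListener('click', () => {
    infoWindow.setPosition(pos);
    infoWindow.open(map);
  });
  return shape;
}
```

In the notebook it would be called exactly as in the snippet, `circle(pos, color, info, map)`, once the Maps script has loaded.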

The full project is on my GitHub. It includes the complete Angular code used to draw each edge connection between the parent and child nodes, as well as the Spark Streaming code (which I'll detail in a follow-up HCC article).