Cloudera Community - Support Questions

Configure Storage capacity of Hadoop cluster

Labels: Apache Hadoop

Created on 03-04-2016 10:22 AM - edited 08-19-2019 04:17 AM

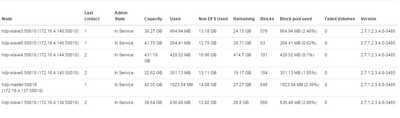

We have a 5-node cluster with the following configuration for the master and slaves:

| Node | RAM | Hard disk |
|---|---|---|
| HDPMaster | 35 GB | 500 GB |
| HDPSlave1 | 15 GB | 500 GB |
| HDPSlave2 | 15 GB | 500 GB |
| HDPSlave3 | 15 GB | 500 GB |
| HDPSlave4 | 15 GB | 500 GB |
| HDPSlave5 | 15 GB | 500 GB |

However, the cluster is not using much of that space. I am aware that some space is reserved for non-DFS use, but the capacity is uneven across the slave nodes. Is there a way to reconfigure HDFS?

Please see the attached screenshot.

Even though all the nodes have the same hard disk, only slave 4 is taking 431 GB; all the remaining nodes use very little space. Is there a way to resolve this?

Created 03-05-2016 10:26 AM

I have never seen the same numbers on all the slave nodes, because of the way data is distributed.

To correct an uneven block distribution across the cluster, use the utility program called the balancer:

http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#balancer
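As a rough illustration of what the balancer checks, here is a minimal Python sketch, assuming the default threshold of 10 percent (the -threshold option): a DataNode is treated as over- or under-utilized when its utilization (DFS Used divided by configured capacity) differs from the cluster-wide average by more than the threshold. The Node class, the classify function, and any node figures are hypothetical, and the sketch only labels nodes; it does not plan or move blocks.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    capacity_gb: float  # configured capacity reported for the DataNode
    dfs_used_gb: float  # DFS Used reported for the DataNode


def classify(nodes, threshold_pct=10.0):
    """Label each node against the cluster-average utilization (sketch, hypothetical helper)."""
    total_used = sum(n.dfs_used_gb for n in nodes)
    total_cap = sum(n.capacity_gb for n in nodes)
    avg = 100.0 * total_used / total_cap  # cluster-wide utilization in percent
    labels = {}
    for n in nodes:
        util = 100.0 * n.dfs_used_gb / n.capacity_gb
        if util > avg + threshold_pct:
            labels[n.name] = "over-utilized"
        elif util < avg - threshold_pct:
            labels[n.name] = "under-utilized"
        else:
            labels[n.name] = "within threshold"
    return labels
```

For example, with three hypothetical 100 GB nodes holding 60, 10, and 35 GB, the average is 35 percent, so the first node is over-utilized, the second under-utilized, and the third within the threshold. Note that the balancer only moves blocks between nodes; it does not change any node's configured capacity.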

Created 03-04-2016 10:28 AM

Is your replication factor set to 3? Are you using a single reducer for your ingestion? You can run hdfs balancer to spread the data across your cluster: https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/HDFSCommands.html#Administrat...
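To show why a single reducer can skew usage, here is a simplified Python simulation of HDFS default replica placement, assuming replication factor 3 and a single rack: when the writer runs on a DataNode, the first replica of each block lands on that local node, and the remaining replicas go to other nodes. Rack awareness and the free-space and load checks the real placement policy performs are left out, and place_blocks and the node names are hypothetical.

```python
import random
from collections import Counter


def place_blocks(num_blocks, nodes, writer, replication=3, seed=0):
    """Count replicas per node when every block is written from `writer` (simplified model)."""
    rng = random.Random(seed)
    counts = Counter({n: 0 for n in nodes})
    others = [n for n in nodes if n != writer]
    for _ in range(num_blocks):
        # First replica: the writer's local DataNode.
        targets = [writer]
        # Remaining replicas: distinct other nodes picked at random (rack awareness ignored).
        targets += rng.sample(others, replication - 1)
        counts.update(targets)
    return counts
```

In this model the writing node receives one replica of every block, about twice the share of each other node when there are five DataNodes.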

Created 03-04-2016 10:31 AM

I think you're reading it the wrong way round; it's the opposite. Only slave 4 is not taking up data, and the other nodes are filled.

Created 03-04-2016 10:32 AM

Yes, the replication factor is 3. But how does spreading the data around the cluster help us change the capacity of the nodes?

Created 03-04-2016 10:36 AM

That's remaining capacity, not total capacity.

Created 03-04-2016 10:39 AM

Yeah, probably. I am new to this and I don't really understand the whole configuration. If I am not wrong, capacity is the available space, and non-DFS is the space available for Linux system use. So I still don't understand the answer to my question: why is the capacity (the available space) larger for slave 4 alone, when all the nodes, including the master, have the same hard disk capacity?
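The figures in the NameNode UI fit together roughly as follows. This Python sketch assumes the Hadoop 2.x behaviour, where Configured Capacity is the size of the volumes holding the data directories minus dfs.datanode.du.reserved, and Non DFS Used is derived as Configured Capacity minus DFS Used minus DFS Remaining (clamped at zero); newer releases account for reserved space differently. The function name and example numbers are hypothetical.

```python
def capacity_breakdown(raw_volume_gb, reserved_gb, dfs_used_gb, dfs_remaining_gb):
    """Derive the NameNode UI figures for one DataNode (Hadoop 2.x-style sketch)."""
    # Configured Capacity: raw size of the data-dir volumes minus dfs.datanode.du.reserved.
    configured = raw_volume_gb - reserved_gb
    # Non DFS Used: the part of Configured Capacity that is neither HDFS data nor free for HDFS.
    non_dfs_used = max(configured - dfs_used_gb - dfs_remaining_gb, 0)
    return {
        "configured_capacity": configured,
        "dfs_used": dfs_used_gb,
        "non_dfs_used": non_dfs_used,
        "dfs_remaining": dfs_remaining_gb,
    }
```

For example, a hypothetical 100 GB volume with 10 GB reserved, 5 GB of HDFS data, and 60 GB still free for HDFS gives a Configured Capacity of 90 GB and 25 GB of Non DFS Used. In other words, DFS Remaining is the free space, while Configured Capacity is the total.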

Created 03-04-2016 10:56 AM

Go to the node and inspect the data directories you specified. Run the hdfs fsck / command to see whether HDFS has any issues, and post a screenshot of the Ambari main page with all the widgets.

Created 03-04-2016 12:23 PM

The cluster is new. It hardly contains any data.

Created 03-04-2016 01:10 PM

OK, you need to confirm which directories you specified for the DataNode in Ambari > HDFS > Configs.
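In Ambari, the DataNode directories setting corresponds to the dfs.datanode.data.dir property in hdfs-site.xml. If you prefer to check it on the node itself, here is a minimal Python sketch that pulls that property out of the file's contents; the function name is hypothetical, and the file location (often /etc/hadoop/conf/hdfs-site.xml on HDP) is an assumption to verify on your hosts.

```python
import xml.etree.ElementTree as ET


def datanode_dirs(hdfs_site_xml):
    """Return the dfs.datanode.data.dir entries from hdfs-site.xml content (str or bytes)."""
    root = ET.fromstring(hdfs_site_xml)
    for prop in root.findall("property"):
        if (prop.findtext("name") or "").strip() == "dfs.datanode.data.dir":
            value = prop.findtext("value") or ""
            # The value is a comma-separated list; trim whitespace and drop empty entries.
            return [d.strip() for d in value.split(",") if d.strip()]
    return []  # property not set in this file


# Example (the path is an assumption; adjust for your hosts):
# with open("/etc/hadoop/conf/hdfs-site.xml", "rb") as f:
#     print(datanode_dirs(f.read()))
```

Entries may carry a storage-type prefix such as [DISK] or [SSD]; this sketch returns them unchanged.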

Created 03-07-2016 05:55 AM

/opt/hadoop/hdfs/data,/tmp/hadoop/hdfs/data,/usr/hadoop/hdfs/data,/usr/local/hadoop/hdfs/data
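With four data directories like these, the capacity a node reports depends on which filesystems those paths actually live on, since each DataNode volume's capacity is derived from the filesystem its directory sits on. The following Python sketch, a hypothetical helper rather than a Hadoop tool, groups the configured directories by device (st_dev) and reports each filesystem's total and free bytes, so the layout can be compared across nodes.

```python
import os
import shutil


def summarize_data_dirs(data_dirs):
    """Group DataNode data directories by the filesystem (device) they live on.

    Returns (by_device, missing): by_device maps st_dev to
    (dirs, total_bytes, free_bytes); missing lists paths that are not directories.
    """
    by_device = {}
    missing = []
    for d in data_dirs:
        if not os.path.isdir(d):
            missing.append(d)
            continue
        dev = os.stat(d).st_dev  # same st_dev means same filesystem
        if dev not in by_device:
            usage = shutil.disk_usage(d)  # size and free space of the filesystem holding d
            by_device[dev] = ([], usage.total, usage.free)
        by_device[dev][0].append(d)
    return by_device, missing


if __name__ == "__main__":
    # The dfs.datanode.data.dir value posted in this thread.
    dirs = ("/opt/hadoop/hdfs/data,/tmp/hadoop/hdfs/data,"
            "/usr/hadoop/hdfs/data,/usr/local/hadoop/hdfs/data").split(",")
    groups, missing = summarize_data_dirs(dirs)
    for dev, (paths, total, free) in groups.items():
        print(f"device {dev}: {total / 1e9:.1f} GB total, {free / 1e9:.1f} GB free -> {paths}")
    for path in missing:
        print(f"missing: {path}")
```

If the partitions behind these paths differ in size from node to node, the configured capacity will differ too, even when the physical disks are identical.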