Consistency check failed on Ambari

Labels: Apache Ambari

Created on 02-09-2016 06:11 PM - edited 08-19-2019 02:08 AM

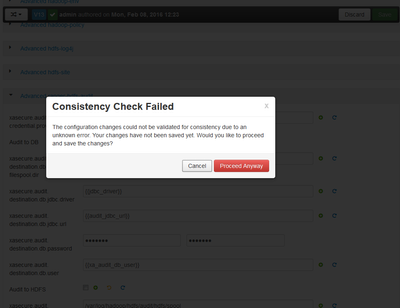

I installed Ambari 2.1.2.1 and HDP 2.3.2 on RH Linux 6.6. The installation went fine and all services are up and running, but when I try to make any config change in the Ambari UI, I see the following message:

The configuration changes could not be validated for consistency due to an unknown error. Your changes have not been saved yet. Would you like to proceed and save the changes?

How do I get rid of this error?

Created 02-09-2016 06:34 PM

What happens when you hit Proceed? While doing that, run tail -f on /var/log/ambari-server/ambari-server.log and watch what the server logs.
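Watching the whole log can be noisy, so it may help to pull out only the error entries after clicking Proceed. The following is a minimal sketch, not an Ambari tool: the function name recent_ambari_errors and its arguments are illustrative, and it assumes the log marks errors with the word ERROR surrounded by spaces.

```shell
# Print the most recent ERROR entries from the Ambari server log.
# $1: log file (defaults to the path mentioned in this thread)
# $2: maximum number of entries to show (defaults to 20)
recent_ambari_errors() {
    log_file="${1:-/var/log/ambari-server/ambari-server.log}"
    max_lines="${2:-20}"
    # -n prefixes each match with its line number in the log
    grep -n ' ERROR ' "$log_file" | tail -n "$max_lines"
}
```

Calling recent_ambari_errors with no arguments reads the default log path. To follow the log live instead, tail -f piped into grep ' ERROR ' gives a similar filtered view.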

Created 02-09-2016 06:13 PM

@rbalam This is the first time I have seen this. You might have a problem in the Ambari database. Can you inspect the browser console and see what calls it makes when the validation fails?

Created 02-09-2016 06:33 PM

I am using Firefox 44.0, and here is the trace from its console.

TRACE: The url is: /api/v1/clusters/hwtest?fields=Clusters/health_report,Clusters/total_hosts,alerts_summary_hosts&minimal_response=true app.js:154162:7

Status code 200: Success. app.js:54173:3

App.componentsStateMapper execution time: timer started app.js:55315

App.componentsStateMapper execution time: 29.04ms app.js:55360

TRACE: The url is: /api/v1/clusters/hwtest/host_components?fields=HostRoles/component_name,HostRoles/host_name&minimal_response=true app.js:154162:7

Status code 200: Success. app.js:54173:3

TRACE: The url is: /api/v1/clusters/hwtest/configurations?type=cluster-env app.js:154162:7

Status code 200: Success. app.js:54173:3

App.componentConfigMapper execution time: timer started app.js:55076

App.componentConfigMapper execution time: 5.95ms app.js:55125

Config validation failed: Object { readyState: 4, setRequestHeader: .ajax/v.setRequestHeader(), getAllResponseHeaders: .ajax/v.getAllResponseHeaders(), getResponseHeader: .ajax/v.getResponseHeader(), overrideMimeType: .ajax/v.overrideMimeType(), abort: .ajax/v.abort(), done: f.Callbacks/p.add(), fail: f.Callbacks/p.add(), progress: f.Callbacks/p.add(), state: .Deferred/h.state(), 14 more… } error Internal Server Error Object { type: "POST", timeout: 180000, dataType: "json", statusCode: Object, headers: Object, url: "/api/v1/stacks/HDP/versions/2.3/val…", data: "{"hosts":["falbdcdq0001v.farmersinsurance.com","falbdcdq0002v.farmersinsurance.com","falbdcdq0003v.farmersinsurance.com","falbdcdq0004v.farmersinsurance.com","falbdcdq0005v.farmersinsurance.com","falbdcdq0006v.farmersinsurance.com","falbdcdq0007v.farmersinsurance.com"],"services":["AMBARI_METRICS","ATLAS","FALCON","FLUME","HBASE","HDFS","HIVE","KAFKA","KNOX","MAPREDUCE2","OOZIE","PIG","RANGER","RANGER_KMS","SPARK","SQOOP","STORM","TEZ","YARN","ZOOKEEPER"],"validate":"configurations","recommendations":{"blueprint":{"host_groups":[{"name":"host-group-1","components":[{"name":"FALCON_CLIENT"},{"name":"HBASE_CLIENT"},{"name":"HDFS_CLIENT"},{"name":"HCAT"},{"name":"HIVE_CLIENT"},{"name":"MAPREDUCE2_CLIENT"},{"name":"OOZIE_CLIENT"},{"name":"PIG"},{"name":"SPARK_CLIENT"},{"name":"SQOOP"},{"name":"TEZ_CLIENT"},{"name":"YARN_CLIENT"},{"name":"ZOOKEEPER_CLIENT"},{"name":"METRICS_MONITOR"},{"name":"FALCON_CLIENT"},{"name":"HBASE_CLIENT"},{"name":"HCAT"},{"name":"HDFS_CLIENT"},{"name":"HIVE_CLIENT"[…], context: Object, beforeSend: ajax<.send/opt.beforeSend(), success: ajax<.send/opt.success(), 2 more… } app.js:64175:5

Error code 500: Internal Error on server side. app.js:54195:3

Config validation failed. Going ahead with saving of configs app.js:64188:7

TRACE: The url is: /api/v1/clusters?fields=Clusters/provisioning_state app.js:154162:7

Status code 200: Success. app.js:54173:3

TRACE: The url is: /api/v1/clusters/hwtest/requests?to=end&page_size=10&fields=Requests app.js:154162:7

Status code 200: Success. app.js:54173:3

Created 02-09-2016 06:36 PM

Created 02-09-2016 06:42 PM

@rbalam Clear the browser cache and refresh the Ambari console to see whether the behavior persists before making any other change.

Created 02-09-2016 07:45 PM

@rbalam You may want to check a few permission settings. I had the same issue with this:

[root@sandbox ~]# ls -l /etc/login.defs

-rw-r--r--. 1 root root 1816 2015-03-27 13:14 /etc/login.defs

[root@sandbox ~]#
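The listing above shows /etc/login.defs as readable by everyone (-rw-r--r--). The post does not say which permissions caused the problem, so the following is only a sketch under the assumption that the file needs to be readable by users other than root. The function name is_world_readable is illustrative, and stat -c is the GNU coreutils form.

```shell
# Succeed if the "other" read bit is set on the given file.
# Returns 2 if the file cannot be inspected.
is_world_readable() {
    mode=$(stat -c '%a' "$1") || return 2   # octal mode, e.g. 644
    other=$((mode % 10))                    # last digit = "other" permissions
    [ $((other & 4)) -ne 0 ]                # 4 is the read bit
}

# Example:
#   is_world_readable /etc/login.defs || echo "not readable by other users"
```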

Created 02-09-2016 06:35 PM

Try restarting the Ambari server and agents, then try again. HTTP code 500 means a problem on the server side.

Created 09-13-2018 01:29 AM

I experienced this problem after changing Ambari Server to run as a non-privileged user, "ambari-server". In my case I can see the following in the log (/var/log/ambari-server/ambari-server.log):

12 Sep 2018 22:06:57,515 ERROR [ambari-client-thread-6396] BaseManagementHandler:61 - Caught a system exception while attempting to create a resource: Error occured during stack advisor command invocation: Cannot create /var/run/ambari-server/stack-recommendations

This error happens because on CentOS/RedHat /var/run is really a symlink to /run, which is a tmpfs filesystem held in RAM and mounted at boot time. If I manually create the folder with the required privileges, it won't survive a reboot, and because the unprivileged user running Ambari Server is unable to create the required directory itself, the error occurs.
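To confirm this on a given host, one can check where /var/run really points and which filesystem backs it. This is a minimal sketch assuming GNU coreutils (readlink -f and stat -f); the function names resolve_dir and fs_type are illustrative.

```shell
# Print the real path behind a directory, following any symlinks.
resolve_dir() {
    readlink -f "$1"
}

# Print the filesystem type that backs a path (for example tmpfs).
fs_type() {
    stat -f -c '%T' "$1"
}

# Example:
#   resolve_dir /var/run
#   fs_type /run
```

If the first command prints /run and the second prints tmpfs, anything created under /var/run is lost at the next reboot.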

I was able to partially fix this with the systemd-tmpfiles feature, by creating a file /etc/tmpfiles.d/ambari-server.conf with the following content:

d /run/ambari-server 0775 ambari-server hadoop -

d /run/ambari-server/stack-recommendations 0775 ambari-server hadoop -

With this file in place, running "systemd-tmpfiles --create" will create the folders with the required privileges. According to the RedHat documentation, this should run automatically at boot time to set everything up.
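The two steps described here, writing the tmpfiles.d entry and then applying it, could be scripted roughly as follows. This is a sketch rather than a tested procedure: the function name write_ambari_tmpfiles_conf is illustrative, and the user, group, and mode are the ones from this post, so adjust them if your setup differs.

```shell
# Write the tmpfiles.d entries for the Ambari Server runtime directories.
# $1: destination file (defaults to the path used in this post)
write_ambari_tmpfiles_conf() {
    conf_path="${1:-/etc/tmpfiles.d/ambari-server.conf}"
    # Fields: type, path, mode, user, group, age ("-" means no cleanup age)
    cat > "$conf_path" <<'EOF'
d /run/ambari-server 0775 ambari-server hadoop -
d /run/ambari-server/stack-recommendations 0775 ambari-server hadoop -
EOF
}

# Example, as root:
#   write_ambari_tmpfiles_conf
#   systemd-tmpfiles --create /etc/tmpfiles.d/ambari-server.conf
```

Passing the file name to systemd-tmpfiles --create limits the run to these entries instead of processing every tmpfiles.d configuration on the host.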

However, sometimes this doesn't happen (I don't know why), and I have to run the previous command manually to fix this error.
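Since the directory is occasionally missing after a reboot, a quick check before starting Ambari Server can catch it early. This is a minimal sketch: the function name stack_dir_ready is illustrative, and the writability test is only meaningful when run as the user that runs Ambari Server.

```shell
# Succeed if the stack-recommendations directory exists and is writable
# by the current user.
# $1: directory to check (defaults to the path from the error message,
#     written under /run since /var/run is a symlink to it)
stack_dir_ready() {
    dir="${1:-/run/ambari-server/stack-recommendations}"
    [ -d "$dir" ] && [ -w "$dir" ]
}

# Example, run as the ambari-server user:
#   stack_dir_ready || echo "directory missing: run systemd-tmpfiles --create as root"
```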