Support Questions


HDFS is almost full (90%), but DataNode disks are only around 50% used

Created on 09-03-2018 05:05 PM - edited 08-18-2019 02:05 AM


Hi all,

We have an Ambari 2.6.1 cluster running HDP 2.6.4.

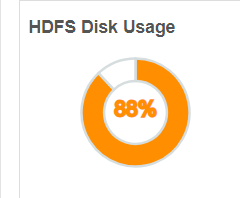

From the dashboard we can see that HDFS Disk Usage is almost 90%, but all DataNode disks are only around 50% used.

So why does HDFS show 90% while the DataNode disks are only at about 50%?

    Filesystem  Size  Used  Avail  Use%  Mounted on
    /dev/sdc    20G   11G   8.7G   56%   /data/sdc
    /dev/sde    20G   11G   8.7G   56%   /data/sde
    /dev/sdd    20G   11G   9.0G   55%   /data/sdd
    /dev/sdb    20G   8.9G  11G    46%   /data/sdb
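As a quick sanity check on the df figures quoted above, the following Python sketch hard-codes those four rows as sample input and computes the aggregate usage across the data disks. It assumes every size carries a `G` suffix and computes usage as Used / (Used + Avail), the way df's Use% column does; since `df -h` rounds its figures, the result is approximate.

```python
# Hypothetical helper: aggregate usage across the DataNode data disks from
# `df -h` rows. Assumes every size column uses a "G" suffix, as in the thread.

DF_ROWS = """\
/dev/sdc 20G 11G 8.7G 56% /data/sdc
/dev/sde 20G 11G 8.7G 56% /data/sde
/dev/sdd 20G 11G 9.0G 55% /data/sdd
/dev/sdb 20G 8.9G 11G 46% /data/sdb
"""


def parse_gib(value: str) -> float:
    """Convert a df -h size such as '8.7G' to a float number of GiB."""
    if not value.endswith("G"):
        raise ValueError(f"unsupported unit in {value!r}")
    return float(value[:-1])


def aggregate_usage(rows: str) -> tuple[float, float, float, float]:
    """Return (total size, total used, total avail, used %) over all rows."""
    size = used = avail = 0.0
    for line in rows.strip().splitlines():
        _dev, s, u, a, _pct, _mount = line.split()
        size += parse_gib(s)
        used += parse_gib(u)
        avail += parse_gib(a)
    # Like df's Use%, divide by Used + Avail (filesystem-reserved blocks
    # are not part of the denominator).
    pct = 100.0 * used / (used + avail)
    return size, used, avail, pct


if __name__ == "__main__":
    size, used, avail, pct = aggregate_usage(DF_ROWS)
    print(f"size={size:.1f}G used={used:.1f}G avail={avail:.1f}G use={pct:.1f}%")
```

With these rows it reports 80.0G of raw disk with about 53% used, which matches the roughly 50% seen on the disks rather than the 90% on the dashboard.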

Is this a tuning problem, or something else?

We also ran a rebalance from the Ambari GUI, but it didn't help.

Created on 09-05-2018 12:56 AM - edited 08-18-2019 02:05 AM


As the NameNode report and UI (including the Ambari UI) show, your DFS Used is reaching almost 87% to 90%, so it would be really good to increase the DFS capacity.

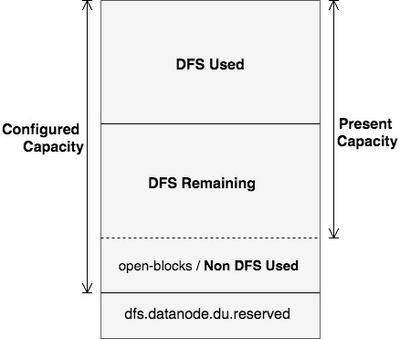

To understand Non DFS Used in detail, note that Non DFS Used = Configured Capacity - DFS Remaining - DFS Used.
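The formula above can be written out as a small Python sketch. It also derives Present Capacity, which (to my understanding of the `hdfs dfsadmin -report` output) equals DFS Used + DFS Remaining, i.e. Configured Capacity minus Non DFS Used. The example figures are hypothetical, not taken from this cluster; all values are assumed to be in one unit (e.g. GB).

```python
# Minimal sketch of the space accounting behind `hdfs dfsadmin -report`.
# Inputs are plain numbers in a single unit; the example values are hypothetical.


def non_dfs_used(configured: float, dfs_remaining: float, dfs_used: float) -> float:
    """Non DFS Used = Configured Capacity - DFS Remaining - DFS Used."""
    return configured - dfs_remaining - dfs_used


def present_capacity(configured: float, dfs_remaining: float, dfs_used: float) -> float:
    """Present Capacity = Configured Capacity - Non DFS Used (= DFS Used + DFS Remaining)."""
    return configured - non_dfs_used(configured, dfs_remaining, dfs_used)


if __name__ == "__main__":
    configured, remaining, used = 100.0, 10.0, 85.0  # hypothetical GB figures
    print("Non DFS Used:", non_dfs_used(configured, remaining, used))          # 5.0
    print("Present Capacity:", present_capacity(configured, remaining, used))  # 95.0
```

A large Non DFS Used value means something other than HDFS block data (logs, local files, temp data) is consuming the same disks.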

You can refer to the following article, which explains the concepts of Configured Capacity, Present Capacity, DFS Used, DFS Remaining, and Non DFS Used in HDFS. Its diagram explains these output space parameters, treating HDFS as a single disk.

https://community.hortonworks.com/articles/98936/details-of-the-output-hdfs-dfsadmin-report.html


The article above is one of the best for understanding DFS and Non-DFS calculations and their remedies.

You add capacity by giving dfs.datanode.data.dir more mount points or directories. In Ambari, I believe this setting appears either on the right side of the configs page or in the Advanced section, depending on the Ambari version; the property itself lives in hdfs-site.xml. The more disks you add to this comma-separated list, the more capacity you will have. Preferably, every machine should have the same disk and mount-point structure.
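To illustrate how the comma-separated list translates into capacity, here is a hypothetical Python sketch (these helpers are not part of Hadoop). It assumes each listed directory sits on its own disk of the same size, and that `reserved_gb` mirrors the per-volume dfs.datanode.du.reserved setting (0 if unset). The sample value is the four-directory dfs.datanode.data.dir posted later in this thread.

```python
# Hypothetical helpers (not part of Hadoop): split dfs.datanode.data.dir and
# estimate one DataNode's configured capacity. Assumes one equally sized disk
# per directory; reserved_gb stands in for dfs.datanode.du.reserved per volume.

VALUE = ("/data/sdb/hadoop/hdfs/data,/data/sdc/hadoop/hdfs/data,"
         "/data/sdd/hadoop/hdfs/data,/data/sde/hadoop/hdfs/data")


def data_dirs(value: str) -> list[str]:
    """Split the comma-separated property value, ignoring blanks and spaces."""
    return [d.strip() for d in value.split(",") if d.strip()]


def node_capacity_gb(value: str, disk_size_gb: float, reserved_gb: float = 0.0) -> float:
    """Each listed directory contributes its disk size minus the reserved space."""
    return len(data_dirs(value)) * max(disk_size_gb - reserved_gb, 0.0)


if __name__ == "__main__":
    print(data_dirs(VALUE))
    print(node_capacity_gb(VALUE, 20.0), "GB per DataNode")  # 80.0 GB per DataNode
```

Under these assumptions, four 20 GB disks should give each DataNode roughly 80 GB of configured capacity, slightly less once reserved space is subtracted.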


Created 09-04-2018 10:54 AM


Yes, possibly, @Michael Bronson. You could also check the size of the .Trash files, as @Geoffrey Shelton Okot suggests.

In general, it is advisable not to store too many small files in HDFS; HDFS is designed for storing large files.

Created 09-05-2018 09:46 AM


Created 09-05-2018 10:01 AM


@Karthik Palanisamy: Sorry if I have this wrong. Consider two blocks (64 MB each) in which I've used only 2 MB and 3 MB respectively. Can the remaining 123 MB be reused for another 64 MB block?

Created 09-05-2018 10:25 AM


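Yes, the remaining space can be used for other blocks. An HDFS block occupies only as much disk space as the data actually written to it, so a 2 MB file in a 64 MB block consumes about 2 MB on each replica.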
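The arithmetic behind this answer can be sketched in Python, using the 2 MB and 3 MB files and the 64 MB block size from the question. The replication factor of 3 is an assumption (it is the HDFS default for dfs.replication); the sketch ignores the small checksum files HDFS keeps next to each block.

```python
# Sketch: HDFS blocks are not preallocated, so a file occupies only its actual
# size on each replica, not the full block size. The file sizes and block size
# come from the question; replication = 3 is an assumed (default) value.

import math


def blocks_needed(file_mb: float, block_mb: float) -> int:
    """Number of blocks a file is split into; the last one may be partial."""
    return math.ceil(file_mb / block_mb)


def raw_usage_mb(file_sizes_mb: list[float], replication: int) -> float:
    """Raw disk consumed across the cluster: actual bytes times replicas."""
    return sum(file_sizes_mb) * replication


if __name__ == "__main__":
    files = [2.0, 3.0]
    block_mb = 64.0
    replication = 3
    print("blocks:", [blocks_needed(f, block_mb) for f in files])  # [1, 1]
    print("raw usage:", raw_usage_mb(files, replication), "MB")    # 15.0 MB
    print("if blocks were preallocated:",
          len(files) * block_mb * replication, "MB")               # 384.0 MB
```

So the two small files cost about 15 MB of raw disk, not 384 MB, and the rest of each disk stays available for other blocks.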

Created 09-05-2018 06:38 PM


So what is the final conclusion? Why is there a gap between the disk usage and the HDFS usage displayed on the Ambari dashboard?

Created 09-05-2018 07:10 PM


As I said earlier, it's hard to tell you the exact cause without reviewing the NameNode and DataNode logs for disk registration. The UI shows a configured capacity of 154 GB, which means only two disks from each DataNode were registered.
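The reasoning here can be made explicit with a small Python sketch: divide the Configured Capacity shown in the UI by the size of one data disk to estimate how many volumes the NameNode has registered, then divide by the number of DataNodes. The DataNode count below is a hypothetical input, since the thread does not state it. Configured Capacity is usually a little under the raw disk total (reserved space per volume), so the result is rounded.

```python
# Sketch of the estimate above: how many 20 GB volumes does a Configured
# Capacity of 154 GB correspond to? The DataNode count is hypothetical.


def registered_volumes(configured_gb: float, volume_gb: float) -> int:
    """Round Configured Capacity to a whole number of volumes."""
    return round(configured_gb / volume_gb)


if __name__ == "__main__":
    volumes = registered_volumes(154.0, 20.0)
    datanodes = 4  # hypothetical: the thread does not state the DataNode count
    print(volumes, "volumes total,", volumes / datanodes, "per DataNode")
```

If that assumption held, each DataNode would be contributing two volumes instead of the four listed in dfs.datanode.data.dir, which is why the disk-registration lines in the DataNode logs are worth checking.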

If you don't have any concerns, please share your logs from after the service restart.

I'm still waiting for your reply to my previous question:

Did you validate hdfs-site.xml on the local machine, outside of Ambari?

    # grep dfs.datanode.data.dir -A1 /etc/hadoop/conf/hdfs-site.xml
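As an alternative to the grep, which assumes the `<value>` line immediately follows the `<name>` line, the property can be read with Python's standard XML parser. This is a sketch: the default path is the one used in the grep command, and the helper name `get_property` is made up for illustration.

```python
# Read a property from hdfs-site.xml with the standard library XML parser,
# instead of relying on `grep -A1` line layout. get_property is a hypothetical
# helper; the default path matches the grep command in the thread.

import os
import sys
import xml.etree.ElementTree as ET
from typing import Optional


def get_property(root: ET.Element, name: str) -> Optional[str]:
    """Return the <value> of the <property> whose <name> matches, else None."""
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "/etc/hadoop/conf/hdfs-site.xml"
    if not os.path.exists(path):
        print(f"{path} not found")
    else:
        value = get_property(ET.parse(path).getroot(), "dfs.datanode.data.dir")
        print("dfs.datanode.data.dir =", value)
        # Print each configured data directory on its own line.
        for d in (value or "").split(","):
            if d.strip():
                print("  ", d.strip())
```

Run it on each DataNode (for example `python3 check_conf.py`, with any file name you like) to confirm that all four directories are present locally.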

Created 09-05-2018 09:03 PM


This is the file, and it looks fine:

    <name>dfs.datanode.data.dir</name>
    <value>/data/sdb/hadoop/hdfs/data,/data/sdc/hadoop/hdfs/data,/data/sdd/hadoop/hdfs/data,/data/sde/hadoop/hdfs/data</value>
    --
    <name>dfs.datanode.data.dir.perm</name>
    <value>750</value>

Created 09-05-2018 09:10 PM


Let me know if you reach any conclusions. As you saw, the configuration in HDFS and in the XML is correct, so I have shown you the real status: the disks are correctly configured in HDFS.

Created 09-04-2018 10:36 AM


If you have deleted a lot of files, they could still be in .Trash.

HDFS trash is just like the Recycle Bin. Its purpose is to prevent you from unintentionally deleting something. You can enable this feature by setting this property:

fs.trash.interval

to a number greater than 0 (the retention time in minutes) in core-site.xml. After the trash feature is enabled, when you remove something from HDFS with the rm command, files or directories are not wiped out immediately; instead, they are moved to a trash directory (/user/${username}/.Trash; see the example below).

    $ hdfs dfs -rm -r /user/bob/5gb
    15/09/18 20:34:48 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 360 minutes, Emptier interval = 0 minutes.
    Moved: 'hdfs://hdp2.6/user/bob/5gb' to trash at: hdfs://hdp2.6/user/bob/.Trash/Current
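The log line in this example can be interpreted with a short Python sketch. "Deletion interval" corresponds to fs.trash.interval and "Emptier interval" to fs.trash.checkpoint.interval, both in minutes. The fallback rule in the code (an emptier interval of 0, or one larger than the deletion interval, is replaced by the deletion interval) is my reading of the core-default.xml description and Hadoop's TrashPolicyDefault, so treat it as an assumption.

```python
# Sketch: interpret a TrashPolicyDefault configuration log line.
# Assumption: an emptier (checkpoint) interval of 0, or one larger than the
# deletion interval, falls back to the deletion interval (fs.trash.interval).

import re

LOG = ("INFO fs.TrashPolicyDefault: Namenode trash configuration: "
       "Deletion interval = 360 minutes, Emptier interval = 0 minutes.")


def trash_intervals(line: str) -> tuple[int, int]:
    """Return (deletion, effective emptier) intervals in minutes."""
    m = re.search(
        r"Deletion interval = (\d+) minutes, Emptier interval = (\d+) minutes", line)
    if m is None:
        raise ValueError("not a TrashPolicyDefault configuration line")
    deletion, emptier = int(m.group(1)), int(m.group(2))
    if emptier <= 0 or emptier > deletion:
        emptier = deletion
    return deletion, emptier


if __name__ == "__main__":
    deletion, emptier = trash_intervals(LOG)
    print(f"checkpoints older than {deletion} min ({deletion / 60:.0f} h) are deleted; "
          f"emptier runs every {emptier} min")
```

With this configuration, deleted data keeps occupying DFS space for at least about six hours, which can noticeably inflate DFS Used after a large cleanup.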

If you want to empty the trash, or just delete the entire trash directory, use the HDFS command-line utility:

    $ hdfs dfs -expunge

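Note that -expunge removes only trash checkpoints older than fs.trash.interval and then checkpoints the current trash, so recently deleted files may not be freed right away. Alternatively, use the -skipTrash option so that files bypass the trash entirely: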

    $ hdfs dfs -rm -skipTrash /path/to/file/you/want/to/remove/permanently

Can you check the hidden .Trash directory?

Created 09-04-2018 11:06 AM
