Support Questions

- Cloudera Community

HDFS is almost full 90% but data node disks are around 50%

Created on 09-03-2018 05:05 PM - edited 08-18-2019 02:05 AM

Hi all,

We have an Ambari cluster, version 2.6.1, with HDP version 2.6.4.

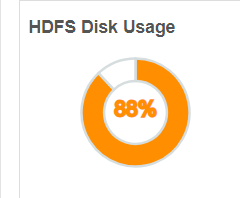

From the dashboard we can see that HDFS Disk Usage is almost 90%,

but all the DataNode disks are only around 50% used.

So why does HDFS show 90% while the DataNode disks are only at about 50%?

/dev/sdc 20G 11G 8.7G 56% /data/sdc

/dev/sde 20G 11G 8.7G 56% /data/sde

/dev/sdd 20G 11G 9.0G 55% /data/sdd

/dev/sdb 20G 8.9G 11G 46% /data/sdb

Is this a tuning problem, or something else?

We also ran a rebalance from the Ambari GUI, but it did not help.
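
For reference, the "around 50%" figure can be checked with a little arithmetic on the df numbers quoted above. This is only a sketch: the sizes are the rounded values df printed, and the function name is made up for illustration.

```python
# df figures copied from the post above: (mount point, size in GB, used in GB).
DISKS = [
    ("/data/sdc", 20.0, 11.0),
    ("/data/sde", 20.0, 11.0),
    ("/data/sdd", 20.0, 11.0),
    ("/data/sdb", 20.0, 8.9),
]


def overall_usage_percent(disks):
    """Return total used space as a percentage of total disk size."""
    total_size = sum(size for _, size, _ in disks)
    total_used = sum(used for _, _, used in disks)
    return 100.0 * total_used / total_size


print(f"{overall_usage_percent(DISKS):.1f}% of raw disk space is used")
```

That works out to roughly 52% across the four disks, which is the gap against the HDFS figure that this question is about.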

Created on 09-05-2018 12:56 AM - edited 08-18-2019 02:05 AM

The NameNode report and the UI (including the Ambari UI) show that your DFS Used is reaching almost 87% to 90%, so it would be a good idea to increase the DFS capacity.

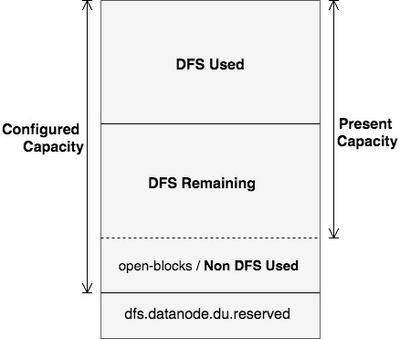

To understand this in detail, note how Non DFS Used is calculated: Non DFS Used = Configured Capacity - DFS Remaining - DFS Used
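
As a rough illustration of that formula, here is a minimal sketch. The numbers are hypothetical and only show the arithmetic; they are not taken from this cluster, and the function name is invented for the example.

```python
def non_dfs_used(configured_capacity, dfs_remaining, dfs_used):
    """Non DFS Used = Configured Capacity - DFS Remaining - DFS Used.

    All three arguments must be in the same unit (bytes, GB, ...).
    """
    return configured_capacity - dfs_remaining - dfs_used


# Hypothetical values in GB, chosen only to illustrate the arithmetic.
configured_capacity = 80
dfs_used = 40
dfs_remaining = 10

print(non_dfs_used(configured_capacity, dfs_remaining, dfs_used), "GB of Non DFS Used")
```

You would substitute the real values from the output of hdfs dfsadmin -report.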

You can refer to the following article, which explains the concepts of Configured Capacity, Present Capacity, DFS Used, DFS Remaining, and Non DFS Used in HDFS. The diagram clearly explains these output space parameters, treating HDFS as a single disk.

https://community.hortonworks.com/articles/98936/details-of-the-output-hdfs-dfsadmin-report.html

It is one of the best articles for understanding the DFS and Non-DFS calculations and the remedy.

You add capacity by giving dfs.datanode.data.dir more mount points or directories. The property lives in hdfs-site.xml; in Ambari I believe you will find it in the HDFS configs, either in the main settings section or in the advanced section, depending on the Ambari version. The more new disks you list in the comma-separated value, the more capacity you will have. Preferably every machine should have the same disk and mount point structure.
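
A small sketch of how the comma-separated value could be assembled. The /data/sdb to /data/sde mount points come from the df output earlier in this thread; /data/sdf is a hypothetical new disk, and the hadoop/hdfs/data subdirectory and the helper name are assumptions made for illustration.

```python
def data_dir_value(mount_points, subdir="hadoop/hdfs/data"):
    """Build a comma-separated dfs.datanode.data.dir value, one directory per mount point."""
    return ",".join(f"{mount.rstrip('/')}/{subdir}" for mount in mount_points)


current_mounts = ["/data/sdb", "/data/sdc", "/data/sdd", "/data/sde"]
# /data/sdf is a hypothetical new disk, used here only as an example.
new_mounts = current_mounts + ["/data/sdf"]

print(data_dir_value(new_mounts))
```

The resulting string is what would go into the property value in hdfs-site.xml (or the matching field in Ambari) on each DataNode.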

Created 09-04-2018 10:16 AM

@jay, yes, I agree. But how can the DataNode disks be at 50% of capacity while HDFS shows 88%? And why are we not using the full size of the DataNode disks? I really do not understand that.

Created 09-05-2018 09:01 PM

Hi,

Per your request, this is the relevant part of the file:

<name>dfs.datanode.data.dir</name>

<value>/data/sdb/hadoop/hdfs/data,/data/sdc/hadoop/hdfs/data,/data/sdd/hadoop/hdfs/data,/data/sde/hadoop/hdfs/data</value>

--

<name>dfs.datanode.data.dir.perm</name>

<value>750</value>

Created 09-06-2018 06:48 AM

Looks good to me. Just do one more check: which config is actually loaded into the NameNode's memory? Open

http://<active nn host>:50070/conf

and search for "dfs.datanode.data.dir".

You must share the logs with us. There is no point in going on assumptions. 🙂
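
If the page is too long to search by eye, something like the following sketch could pull the property out. It assumes the /conf page returns the usual Hadoop configuration XML (property elements with name, value and source children); the sample XML and the function name are made up for illustration.

```python
import xml.etree.ElementTree as ET


def find_property(conf_xml, name):
    """Return (value, source) for a property in Hadoop configuration XML, or None if absent."""
    root = ET.fromstring(conf_xml)
    for prop in root.iter("property"):
        if prop.findtext("name") == name:
            value = (prop.findtext("value") or "").strip()
            return value, prop.findtext("source")
    return None


# Hypothetical sample in the shape the /conf page is assumed to have.
SAMPLE = """<configuration>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/data/sdb/hadoop/hdfs/data,/data/sdc/hadoop/hdfs/data</value>
    <source>hdfs-site.xml</source>
  </property>
</configuration>"""

value, source = find_property(SAMPLE, "dfs.datanode.data.dir")
for directory in value.split(","):
    print(directory, "(from " + source + ")")

# To check a live NameNode, fetch the page first (the host name is a placeholder):
# from urllib.request import urlopen
# conf_xml = urlopen("http://<active nn host>:50070/conf").read()
```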

Created 09-06-2018 07:04 AM

This is the relevant info from the file (it is a long file, but I think you want to look at the relevant disks):

<value> /data/sdb/hadoop/hdfs/data,/data/sdc/hadoop/hdfs/data,/data/sdd/hadoop/hdfs/data,/data/sde/hadoop/hdfs/data </value> <source>hdfs-site.xml</source> </property>

Created 09-06-2018 07:06 AM

About the logs, please remind me which logs you want to look at?

Created 09-06-2018 07:12 AM

Thanks for the confirmation. I need the NameNode and DataNode logs from after the HDFS service restart.

Created 09-06-2018 07:23 AM

Because the logs are huge, do you want me to search for a specific string in them?

Created 09-06-2018 07:28 AM

You can tail the NameNode and DataNode logs, and redirect the output to a temporary log file during the restart (run as root):

tail -f <namenode log> > /tmp/namenode-$(hostname).log

tail -f <datanode log> > /tmp/datanode-$(hostname).log

Created 09-04-2018 10:16 AM

HDFS splits files into blocks and stores those blocks on the DataNodes. The default block size is 64 MB or 128 MB, depending on your HDFS version. Note that a block only occupies as much disk space as the data it holds: a 2 MB file stored in one block uses 2 MB on disk per replica, not the full 64 MB. So small files by themselves should not make HDFS used space larger than the space actually used on the disks.
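
A quick sketch of the block arithmetic. It rests on my understanding that a block holding less data than the block size only occupies the data's actual length on the DataNode disk, once per replica. The replication factor of 3 is assumed as the usual default, and the function names are invented for the example.

```python
import math

MB = 1024 * 1024


def block_count(file_size, block_size):
    """Number of blocks a file of file_size bytes is split into."""
    return math.ceil(file_size / block_size)


def raw_bytes_on_disk(file_size, replication):
    """Raw DataNode disk space: the file's actual length once per replica, with no padding."""
    return file_size * replication


# A 2 MB file with a 64 MB block size and an assumed replication factor of 3.
print(block_count(2 * MB, 64 * MB), "block")
print(raw_bytes_on_disk(2 * MB, 3) // MB, "MB of raw disk across all replicas")
```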

Hope this is relevant to what you are asking.

Created 09-04-2018 10:32 AM

@Rabbit, so in our case, given that HDFS usage is at 88%, the only option for getting more HDFS space is to add a disk to each DataNode? Am I right?