Support Questions


Hiveserver 2 shutdown

Created on 10-11-2021 10:58 PM, last edited on 10-12-2021 04:44 AM by VidyaSargur


Hi everyone,

I am new to Cloudera software. I am practicing Hive and Impala queries from Hue, but I get this error from Hue when I start a query:

Could not connect to any of [('10.4.150.58', 10000)] (code THRIFTTRANSPORT): TTransportException("Could not connect to any of [('10.4.150.58', 10000)]",)
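This Thrift error means nothing accepted a TCP connection on 10.4.150.58:10000, the port HiveServer2 listens on in this setup. A minimal Python sketch to confirm whether anything is listening there, assuming you can run Python on a host that can reach the cluster network:

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

For example, `can_connect("10.4.150.58", 10000)` returning `False` is consistent with HiveServer2 not running, which points you to its log rather than to Hue.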

After some searching, I learned that this happens because HiveServer2 is down. I checked its log file and found this:

12:18:07.280 PM ERROR HiveServer2

[main]: Error starting HiveServer2

java.lang.Error: Max start attempts 5 exhausted

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1084) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.access$1400(HiveServer2.java:138) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2$StartOptionExecutor.execute(HiveServer2.java:1333) [hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.main(HiveServer2.java:1177) [hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_232]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_232]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_232]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_232]

at org.apache.hadoop.util.RunJar.run(RunJar.java:318) [hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.util.RunJar.main(RunJar.java:232) [hadoop-common-3.1.1.7.1.7.0-551.jar:?]

Caused by: org.apache.hive.service.ServiceException: org.apache.hive.service.ServiceException: Unable to setup tez session pool

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:721) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1059) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

... 9 more

Caused by: org.apache.hive.service.ServiceException: Unable to setup tez session pool

at org.apache.hive.service.server.HiveServer2.initAndStartTezSessionPoolManager(HiveServer2.java:825) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startOrReconnectTezSessions(HiveServer2.java:795) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:718) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1059) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

... 9 more

Caused by: org.apache.tez.dag.api.TezException: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request! Cannot allocate containers as requested resource is greater than maximum allowed allocation. Requested resource type=[memory-mb], Requested resource=<memory:2048, vCores:1>, maximum allowed allocation=<memory:1024, vCores:4>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:1024, vCores:4>

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.throwInvalidResourceException(SchedulerUtils.java:491)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.checkResourceRequestAgainstAvailableResource(SchedulerUtils.java:387)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:315)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndValidateRequest(SchedulerUtils.java:293)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.validateAndCreateResourceRequest(RMAppManager.java:580)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.createAndPopulateNewRMApp(RMAppManager.java:392)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.submitApplication(RMAppManager.java:330)

at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.submitApplication(ClientRMService.java:664)

at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.submitApplication(ApplicationClientProtocolPBServiceImpl.java:290)

at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:611)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:533)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1070)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:917)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2894)

at org.apache.tez.client.TezClient.start(TezClient.java:410) ~[tez-api-0.9.1.7.1.7.0-551.jar:0.9.1.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.startSessionAndContainers(TezSessionState.java:536) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.openInternal(TezSessionState.java:374) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.open(TezSessionState.java:313) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolSession.open(TezSessionPoolSession.java:118) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.startInitialSession(TezSessionPool.java:359) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.startUnderInitLock(TezSessionPool.java:171) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.start(TezSessionPool.java:123) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolManager.startPool(TezSessionPoolManager.java:115) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.initAndStartTezSessionPoolManager(HiveServer2.java:822) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startOrReconnectTezSessions(HiveServer2.java:795) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:718) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1059) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

... 9 more

Caused by: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request! Cannot allocate containers as requested resource is greater than maximum allowed allocation. Requested resource type=[memory-mb], Requested resource=<memory:2048, vCores:1>, maximum allowed allocation=<memory:1024, vCores:4>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:1024, vCores:4>

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.throwInvalidResourceException(SchedulerUtils.java:491)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.checkResourceRequestAgainstAvailableResource(SchedulerUtils.java:387)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:315)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndValidateRequest(SchedulerUtils.java:293)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.validateAndCreateResourceRequest(RMAppManager.java:580)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.createAndPopulateNewRMApp(RMAppManager.java:392)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.submitApplication(RMAppManager.java:330)

at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.submitApplication(ClientRMService.java:664)

at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.submitApplication(ApplicationClientProtocolPBServiceImpl.java:290)

at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:611)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:533)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1070)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:917)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2894)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_232]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_232]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_232]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_232]

at org.apache.hadoop.yarn.ipc.RPCUtil.instantiateException(RPCUtil.java:53) ~[hadoop-yarn-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.yarn.ipc.RPCUtil.instantiateYarnException(RPCUtil.java:75) ~[hadoop-yarn-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.yarn.ipc.RPCUtil.unwrapAndThrowException(RPCUtil.java:116) ~[hadoop-yarn-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.submitApplication(ApplicationClientProtocolPBClientImpl.java:304) ~[hadoop-yarn-common-3.1.1.7.1.7.0-551.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_232]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_232]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_232]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_232]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:431) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at com.sun.proxy.$Proxy43.submitApplication(Unknown Source) ~[?:?]

at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.submitApplication(YarnClientImpl.java:328) ~[hadoop-yarn-client-3.1.1.7.1.7.0-551.jar:?]

at org.apache.tez.client.TezYarnClient.submitApplication(TezYarnClient.java:77) ~[tez-api-0.9.1.7.1.7.0-551.jar:0.9.1.7.1.7.0-551]

at org.apache.tez.client.TezClient.start(TezClient.java:405) ~[tez-api-0.9.1.7.1.7.0-551.jar:0.9.1.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.startSessionAndContainers(TezSessionState.java:536) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.openInternal(TezSessionState.java:374) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.open(TezSessionState.java:313) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolSession.open(TezSessionPoolSession.java:118) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.startInitialSession(TezSessionPool.java:359) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.startUnderInitLock(TezSessionPool.java:171) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.start(TezSessionPool.java:123) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolManager.startPool(TezSessionPoolManager.java:115) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.initAndStartTezSessionPoolManager(HiveServer2.java:822) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startOrReconnectTezSessions(HiveServer2.java:795) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:718) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1059) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

... 9 more

Caused by: org.apache.hadoop.ipc.RemoteException: Invalid resource request! Cannot allocate containers as requested resource is greater than maximum allowed allocation. Requested resource type=[memory-mb], Requested resource=<memory:2048, vCores:1>, maximum allowed allocation=<memory:1024, vCores:4>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:1024, vCores:4>

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.throwInvalidResourceException(SchedulerUtils.java:491)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.checkResourceRequestAgainstAvailableResource(SchedulerUtils.java:387)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:315)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndValidateRequest(SchedulerUtils.java:293)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.validateAndCreateResourceRequest(RMAppManager.java:580)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.createAndPopulateNewRMApp(RMAppManager.java:392)

at org.apache.hadoop.yarn.server.resourcemanager.RMAppManager.submitApplication(RMAppManager.java:330)

at org.apache.hadoop.yarn.server.resourcemanager.ClientRMService.submitApplication(ClientRMService.java:664)

at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationClientProtocolPBServiceImpl.submitApplication(ApplicationClientProtocolPBServiceImpl.java:290)

at org.apache.hadoop.yarn.proto.ApplicationClientProtocol$ApplicationClientProtocolService$2.callBlockingMethod(ApplicationClientProtocol.java:611)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:533)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1070)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:917)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2894)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1562) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1508) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.ipc.Client.call(Client.java:1405) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at com.sun.proxy.$Proxy42.submitApplication(Unknown Source) ~[?:?]

at org.apache.hadoop.yarn.api.impl.pb.client.ApplicationClientProtocolPBClientImpl.submitApplication(ApplicationClientProtocolPBClientImpl.java:301) ~[hadoop-yarn-common-3.1.1.7.1.7.0-551.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_232]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_232]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_232]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_232]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:431) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362) ~[hadoop-common-3.1.1.7.1.7.0-551.jar:?]

at com.sun.proxy.$Proxy43.submitApplication(Unknown Source) ~[?:?]

at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.submitApplication(YarnClientImpl.java:328) ~[hadoop-yarn-client-3.1.1.7.1.7.0-551.jar:?]

at org.apache.tez.client.TezYarnClient.submitApplication(TezYarnClient.java:77) ~[tez-api-0.9.1.7.1.7.0-551.jar:0.9.1.7.1.7.0-551]

at org.apache.tez.client.TezClient.start(TezClient.java:405) ~[tez-api-0.9.1.7.1.7.0-551.jar:0.9.1.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.startSessionAndContainers(TezSessionState.java:536) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.openInternal(TezSessionState.java:374) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.open(TezSessionState.java:313) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolSession.open(TezSessionPoolSession.java:118) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.startInitialSession(TezSessionPool.java:359) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.startUnderInitLock(TezSessionPool.java:171) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPool.start(TezSessionPool.java:123) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolManager.startPool(TezSessionPoolManager.java:115) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.initAndStartTezSessionPoolManager(HiveServer2.java:822) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startOrReconnectTezSessions(HiveServer2.java:795) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.start(HiveServer2.java:718) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.startHiveServer2(HiveServer2.java:1059) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

... 9 more

12:18:07.341 PM INFO HiveServer2

[shutdown-hook-0]: Shutting down HiveServer2

12:18:07.341 PM ERROR HiveServer2

[shutdown-hook-0]: Error stopping schq

java.lang.IllegalStateException: The current ScheduledQueryExecutionService INSTANCE is invalid

at org.apache.hadoop.hive.ql.scheduled.ScheduledQueryExecutionService.close(ScheduledQueryExecutionService.java:312) ~[hive-exec-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.stop(HiveServer2.java:892) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at org.apache.hive.service.server.HiveServer2.lambda$init$0(HiveServer2.java:439) ~[hive-service-3.1.3000.7.1.7.0-551.jar:3.1.3000.7.1.7.0-551]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_232]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_232]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_232]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_232]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_232]

12:18:07.342 PM INFO HiveServer2

[shutdown-hook-0]: Web UI has stopped

12:18:07.342 PM INFO ZooKeeperHiveHelper

[shutdown-hook-0]: Server instance removed from ZooKeeper.

12:18:07.342 PM INFO HiveServer2

[shutdown-hook-0]: Stopping/Disconnecting tez sessions.

12:18:07.342 PM INFO HiveServer2

[shutdown-hook-0]: Stopped tez session pool manager.

12:18:07.342 PM INFO HiveServer2

[shutdown-hook-0]: Shutting down HiveServer2
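In a long Java trace like this, the last `Caused by:` line is usually the innermost (root) exception; here it is the YARN `RemoteException` about the 2048 MB request. A small Python helper, sketched under the assumption that the log has been saved as plain text, to pull that line out:

```python
from typing import Optional


def innermost_cause(log_text: str) -> Optional[str]:
    """Return the last 'Caused by:' line of a Java stack trace, or None if there is none."""
    causes = [
        line.strip()
        for line in log_text.splitlines()
        if line.strip().startswith("Caused by:")
    ]
    # Java prints causes outermost first, so the last one is the innermost.
    return causes[-1] if causes else None
```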

Then I searched for a fix and found this thread:

https://community.cloudera.com/t5/Support-Questions/Hiveserver-Not-Starting/m-p/322600

That thread matches my log error, so I followed its steps, but I skipped this one because I did not understand it:

"There may be a chance the property "tez.history.logging.proto-base-dir" is pointing to wrong HDFS path location so check that once and correct if needed."
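That step asks you to look up the value of `tez.history.logging.proto-base-dir` and make sure it names a valid HDFS directory. Hadoop-style `*-site.xml` files store settings as `<property><name>…</name><value>…</value></property>` entries, so a minimal Python sketch to read one property could look like this:

```python
import xml.etree.ElementTree as ET
from typing import Optional


def get_property(config_xml: str, name: str) -> Optional[str]:
    """Return the <value> of the named <property> in a Hadoop-style XML config, or None."""
    root = ET.fromstring(config_xml)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None
```

For example, `get_property(open("/etc/tez/conf/tez-site.xml").read(), "tez.history.logging.proto-base-dir")`, where that file path is an assumption (Cloudera Manager may place generated configs elsewhere, and the value can also be checked in its UI). You can then confirm the directory exists with `hdfs dfs -ls <path>`.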

After doing all of that, the error still appears in the log.

Can someone please help me? Thank you.

Created 10-12-2021 04:49 AM


Hi,

Since you mentioned you are practicing, what is your cluster configuration?

Does it have enough resources allocated to YARN and Hive?

It seems to be a resource availability issue:

Caused by: org.apache.hadoop.ipc.RemoteException: Invalid resource request! Cannot allocate containers as requested resource is greater than maximum allowed allocation. Requested resource type=[memory-mb], Requested resource=<memory:2048, vCores:1>, maximum allowed allocation=<memory:1024, vCores:4>, please note that maximum allowed allocation is calculated by scheduler based on maximum resource of registered NodeManagers, which might be less than configured maximum allocation=<memory:1024, vCores:4>
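The message already contains the diagnosis: the Tez session asks for a 2048 MB container, but the largest container YARN will grant is 1024 MB, so the session pool can never start and HiveServer2 gives up after its 5 start attempts. A Python sketch that extracts both numbers from such a message; the regular expression assumes the exact wording quoted above:

```python
import re
from typing import Dict

# Matches the wording of the YARN InvalidResourceRequestException quoted above.
PATTERN = re.compile(
    r"Requested resource=<memory:(\d+), vCores:(\d+)>, "
    r"maximum allowed allocation=<memory:(\d+), vCores:(\d+)>"
)


def check_request(message: str) -> Dict[str, object]:
    """Extract requested vs. maximum resources from a YARN error message and compare them."""
    match = PATTERN.search(message)
    if match is None:
        raise ValueError("message does not contain a resource request")
    req_mb, req_vcores, max_mb, max_vcores = map(int, match.groups())
    return {
        "requested_mb": req_mb,
        "max_mb": max_mb,
        "memory_ok": req_mb <= max_mb,
        "vcores_ok": req_vcores <= max_vcores,
    }
```

On the message above this reports `memory_ok` as `False` and `vcores_ok` as `True`: memory, not CPU, is the blocker.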


Created 10-12-2021 05:30 AM


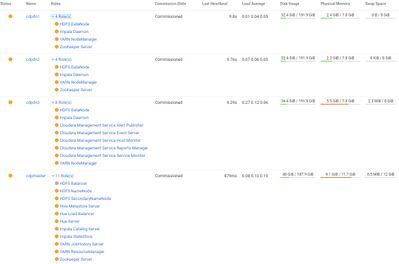

Here is my cluster. I don't know whether it is enough or not.

Could you please give me more information about it? Thanks.
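As the YARN error itself notes, the maximum allowed allocation is the configured maximum, further limited by the resources of the registered NodeManagers. So whether a cluster is "enough" for a 2048 MB request depends on both. A simplified Python model; the property names in the docstring are the standard YARN settings, while the example values in the usage note are hypothetical:

```python
from typing import List


def effective_max_allocation_mb(configured_max_mb: int, nodemanager_mb: List[int]) -> int:
    """Largest container memory YARN can grant (simplified model).

    configured_max_mb: yarn.scheduler.maximum-allocation-mb
    nodemanager_mb: yarn.nodemanager.resource.memory-mb of each registered NodeManager
    """
    if not nodemanager_mb:
        raise ValueError("model requires at least one registered NodeManager")
    # The configured maximum is capped by the biggest NodeManager.
    return min(configured_max_mb, max(nodemanager_mb))


def request_fits(request_mb: int, configured_max_mb: int, nodemanager_mb: List[int]) -> bool:
    """True if a container of request_mb can be granted under this model."""
    return request_mb <= effective_max_allocation_mb(configured_max_mb, nodemanager_mb)
```

For example, `request_fits(2048, 1024, [4096])` is `False` because the configured maximum is too low, and `request_fits(2048, 8192, [1024])` is `False` because no NodeManager is large enough. In this thread the configured maximum was 1024 MB, so the options are to raise both settings to at least 2048 MB (if the hosts have the RAM) or to lower what Hive/Tez requests. The log does not say which property produced the 2048 MB request; for a Tez session's application master, `tez.am.resource.memory.mb` is a likely but unconfirmed candidate.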

Created 10-12-2021 09:06 AM


Created 10-13-2021 05:18 AM


It's my first comment on the Cloudera Community portal, and I'm glad it worked!

Let's keep helping each other.