Support Questions

Hortonworks Tutorial error - Zeppelin - loading data with spark

Labels: Apache Zeppelin

Created 02-13-2019 02:07 PM

Hi,

I have this problem with the HDP 2.6.5 sandbox running on Azure.

I am working through the tutorial 'Getting Started with HDP' and trying to load the data from the HDFS file 'geolocation.csv' into Hive with Spark, using the Zeppelin Notebook service.

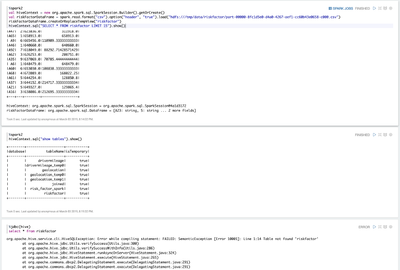

Creating the HiveContext works. The smoke test 'show tables' also works and returns this table:

    +--------+-------------+-----------+
    |database|    tableName|isTemporary|
    +--------+-------------+-----------+
    | default|   avgmileage|      false|
    | default|drivermileage|      false|
    | default|  geolocation|      false|
    | default|    sample_07|      false|
    | default|    sample_08|      false|
    | default| truckmileage|      false|
    | default|       trucks|      false|
    +--------+-------------+-----------+

But the next step, which does the actual load, never finishes: progress reaches 10% and then stalls.

When I kill the application with `yarn application -kill <appid>`, the message is:

    org.apache.spark.SparkException: Job 0 cancelled because SparkContext was shut down
        at org.apache.spark.scheduler.DAGScheduler$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:820)
        at org.apache.spark.scheduler.DAGScheduler$anonfun$cleanUpAfterSchedulerStop$1.apply(DAGScheduler.scala:818)
        at scala.collection.mutable.HashSet.foreach(HashSet.scala:78)
        at org.apache.spark.scheduler.DAGScheduler.cleanUpAfterSchedulerStop(DAGScheduler.scala:818)
        at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onStop(DAGScheduler.scala:1750)
        at org.apache.spark.util.EventLoop.stop(EventLoop.scala:83)
        at org.apache.spark.scheduler.DAGScheduler.stop(DAGScheduler.scala:1669)
        at org.apache.spark.SparkContext$anonfun$stop$8.apply$mcV$sp(SparkContext.scala:1928)
        at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1317)
        at org.apache.spark.SparkContext.stop(SparkContext.scala:1927)
        at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend$MonitorThread.run(YarnClientSchedulerBackend.scala:108)
        at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:630)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2029)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2050)
        at org.apache.spark.SparkContext.runJob(SparkContext.scala:2069)
        at org.apache.spark.sql.execution.SparkPlan.executeTake(SparkPlan.scala:336)
        at org.apache.spark.sql.execution.CollectLimitExec.executeCollect(limit.scala:38)
        at org.apache.spark.sql.Dataset.org$apache$spark$sql$Dataset$collectFromPlan(Dataset.scala:2861)

Rerunning the complete notebook results in this error:

    java.lang.IllegalStateException: Cannot call methods on a stopped SparkContext.
    This stopped SparkContext was created at:

    org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:915)
    sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    java.lang.reflect.Method.invoke(Method.java:498)
    org.apache.zeppelin.spark.Utils.invokeMethod(Utils.java:38)
    org.apache.zeppelin.spark.Utils.invokeMethod(Utils.java:33)
    org.apache.zeppelin.spark.SparkInterpreter.createSparkSession(SparkInterpreter.java:362)
    org.apache.zeppelin.spark.SparkInterpreter.getSparkSession(SparkInterpreter.java:233)
    org.apache.zeppelin.spark.SparkInterpreter.open(SparkInterpreter.java:832)
    org.apache.zeppelin.interpreter.LazyOpenInterpreter.open(LazyOpenInterpreter.java:69)
    org.apache.zeppelin.interpreter.remote.RemoteInterpreterServer$InterpretJob.jobRun(RemoteInterpreterServer.java:493)
    org.apache.zeppelin.scheduler.Job.run(Job.java:175)

I couldn't find any hint on how to reset the notebook so that I can rerun it.

I'm new to Hadoop; I started a few weeks ago by setting up the sandbox and working through the tutorials.

Any replies, suggestions or help would be appreciated.

Thanks in advance,

Rainer
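A common way to reset a notebook in this state, short of rebooting, is to restart the Spark interpreter, either with the restart button on Zeppelin's Interpreter page or through Zeppelin's REST API; the restart discards the stopped SparkContext so that the next paragraph run creates a fresh one. The Python sketch below takes the REST route. It assumes Zeppelin is reachable at `sandbox-hdp.hortonworks.com:9995`, that the interpreter setting is named `spark2`, and that the endpoints accept unauthenticated requests. The sandbox normally has login enabled, in which case you would have to authenticate first (or simply use the UI button).

```python
import json
import urllib.request
from typing import List

# Assumption: host and port of Zeppelin on the HDP sandbox; adjust for your setup.
ZEPPELIN_URL = "http://sandbox-hdp.hortonworks.com:9995"


def find_setting_ids(settings_json: str, name: str) -> List[str]:
    """Return the ids of interpreter settings whose name equals `name` (e.g. "spark2")."""
    body = json.loads(settings_json).get("body") or []
    return [s["id"] for s in body if s.get("name") == name]


def restart_request(base_url: str, setting_id: str) -> urllib.request.Request:
    """Build the PUT request that asks Zeppelin to restart one interpreter setting."""
    url = f"{base_url}/api/interpreter/setting/restart/{setting_id}"
    # An empty body makes urllib send Content-Length: 0 with the PUT.
    return urllib.request.Request(url, data=b"", method="PUT")


def restart_interpreter(base_url: str, name: str) -> None:
    # List all interpreter settings, then restart every setting called `name`.
    with urllib.request.urlopen(f"{base_url}/api/interpreter/setting") as resp:
        ids = find_setting_ids(resp.read().decode("utf-8"), name)
    for setting_id in ids:
        with urllib.request.urlopen(restart_request(base_url, setting_id)) as resp:
            print(setting_id, resp.status)


if __name__ == "__main__":
    restart_interpreter(ZEPPELIN_URL, "spark2")
```

After the restart, rerunning the notebook from the first paragraph should recreate the HiveContext on a new SparkContext.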

Created on 03-07-2019 04:25 AM - edited 08-17-2019 02:38 PM

I have a similar error with the tutorial and Zeppelin. Sadly I don't have an answer, and this broken community website won't let me post a question of my own.

Created 04-01-2019 08:37 AM

I ran into this error too; the problem is that the tutorial is wrong. It assumes you can visualize temporary tables in the notebook without using the Spark SQL interpreter. You need to run the query against the table with the Spark SQL interpreter, which gives you the visualization options.

Created 04-01-2019 08:40 AM

I had this error too. The only solution I found was to shut down and reboot. Apparently, when you shut down the SparkContext in Zeppelin, it sometimes persists and hangs. The workaround for me was to stop all services in Ambari, reboot the system, and start them again; that flushes the session.

(Found it here: https://stackoverflow.com/questions/35515120/why-does-sparkcontext-randomly-close-and-how-do-you-res... )
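The stop/start part of this workaround can also be scripted against Ambari's REST API. The sketch below builds the PUT request that sets every service of a cluster to `INSTALLED` (stopped) or `STARTED`. The host `sandbox-hdp.hortonworks.com`, port `8080`, cluster name `Sandbox`, the `admin` user, and the hypothetical `AMBARI_PASSWORD` environment variable are all assumptions about a default sandbox. Ambari processes such requests asynchronously, so let the stop finish (for example, watch the background operations in the Ambari UI) before rebooting and starting again.

```python
import base64
import json
import os
import sys
import urllib.request

# Assumptions: Ambari address and cluster name of a default HDP sandbox.
AMBARI_URL = "http://sandbox-hdp.hortonworks.com:8080"
CLUSTER = "Sandbox"


def services_state_request(base_url, cluster, state, user, password):
    """Build a PUT that moves all services to `state`: "INSTALLED" (stopped) or "STARTED"."""
    if state not in ("INSTALLED", "STARTED"):
        raise ValueError(f"unsupported state: {state}")
    payload = {
        "RequestInfo": {"context": f"Set all services to {state}"},
        "Body": {"ServiceInfo": {"state": state}},
    }
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{base_url}/api/v1/clusters/{cluster}/services",
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        # Ambari rejects modifying requests that lack the X-Requested-By header.
        headers={"X-Requested-By": "ambari", "Authorization": f"Basic {token}"},
    )


if __name__ == "__main__":
    # Usage: python ambari_services.py INSTALLED   (then reboot, then run with STARTED)
    target = sys.argv[1] if len(sys.argv) > 1 else "INSTALLED"
    req = services_state_request(AMBARI_URL, CLUSTER, target, "admin", os.environ["AMBARI_PASSWORD"])
    with urllib.request.urlopen(req) as resp:
        print(resp.status, resp.read().decode("utf-8"))
```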