Using Spark2 on HDP 2.5

- Labels: Apache Spark

Created 03-07-2017 09:20 PM

Hello, I followed the tutorial below to install Jupyter, and everything works fine:

https://hortonworks.com/hadoop-tutorial/using-ipython-notebook-with-apache-spark/

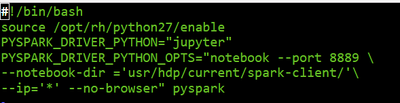

Now I want to use Spark 2 instead. I changed spark_home to the /usr/hdp/current/spark2-client folder and tried to run the start_ipython_notebook.sh script. It says:

IPYTHON and IPYTHON_OPTS are removed in Spark 2.0+. Remove these from the environment and set PYSPARK_DRIVER_PYTHON and PYSPARK_DRIVER_PYTHON_OPTS instead.

Can someone suggest what change I should make to the IPython startup script?

Created 03-08-2017 08:35 AM

From https://github.com/apache/spark/blob/master/bin/pyspark

In Spark 2.0, IPYTHON and IPYTHON_OPTS are removed and pyspark fails to launch if either option is set in the user's environment. Instead, users should set PYSPARK_DRIVER_PYTHON=ipython to use IPython and set PYSPARK_DRIVER_PYTHON_OPTS to pass options when starting the Python driver (e.g. PYSPARK_DRIVER_PYTHON_OPTS='notebook'). This supports full customization of the IPython and executor Python executables.
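For anyone adapting the startup script, here is a minimal sketch of what that comment implies. It is not the tutorial's actual script: the function name `configure_pyspark_notebook`, the port, and the `--no-browser` option are assumptions for illustration. Only the Spark 2 client path and the two `PYSPARK_DRIVER_*` variables come from this thread.

```shell
#!/usr/bin/env bash
# Sketch only: prepares the environment that Spark 2's bin/pyspark expects.
# configure_pyspark_notebook is a hypothetical helper name, not part of Spark.

configure_pyspark_notebook() {
  # Spark 2.0+ refuses to launch if either of these is still set.
  unset IPYTHON IPYTHON_OPTS

  # Spark 2 client location mentioned in the question.
  export SPARK_HOME=/usr/hdp/current/spark2-client

  # Replacement variables named in the bin/pyspark comment.
  export PYSPARK_DRIVER_PYTHON=ipython
  # Port and --no-browser are illustrative assumptions; adjust to your setup.
  export PYSPARK_DRIVER_PYTHON_OPTS="notebook --no-browser --port 8889"
}

configure_pyspark_notebook

# Launch only if the Spark 2 client is actually installed at that path.
if [ -x "$SPARK_HOME/bin/pyspark" ]; then
  "$SPARK_HOME/bin/pyspark"
else
  echo "pyspark not found under $SPARK_HOME" >&2
fi
```

If your installation starts the notebook through the `jupyter` command rather than `ipython notebook`, setting `PYSPARK_DRIVER_PYTHON=jupyter` with the same options is the usual equivalent. Also check that nothing else (for example a profile script) re-exports `IPYTHON_OPTS` before `pyspark` runs.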

Created 03-08-2017 06:23 PM

Just what I was looking for. Thanks!

Created on 01-11-2018 01:44 AM - edited 08-19-2019 12:54 AM

@Arvind Kandaswamy, can you please share the code that you used to solve the problem? It is not working for me.

Created 04-25-2018 07:48 AM

Could someone please answer?